Ziheng Zhang

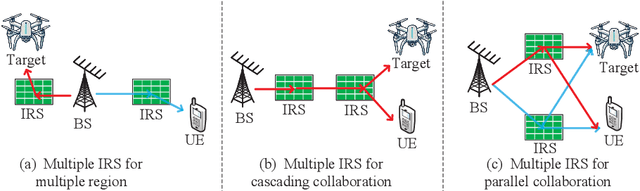

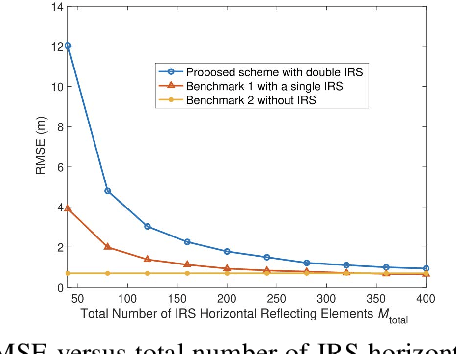

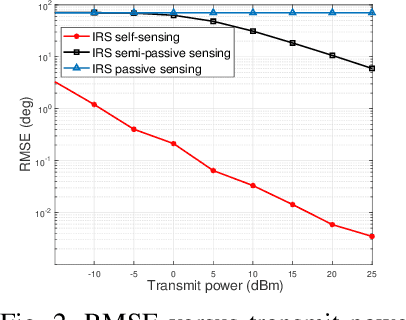

Intelligent Reflecting Surfaces for Integrated Sensing and Communications: A Survey

Nov 14, 2025

Abstract: The rapid development of sixth-generation (6G) wireless networks requires seamless integration of communication and sensing to support ubiquitous intelligence and real-time, high-reliability applications. Integrated sensing and communication (ISAC) has emerged as a key solution for achieving this convergence, offering joint utilization of spectral, hardware, and computing resources. However, realizing high-performance ISAC remains challenging due to environmental line-of-sight (LoS) blockage, limited spatial resolution, and the inherent coverage asymmetry and resource coupling between sensing and communication. Intelligent reflecting surfaces (IRSs), featuring low-cost, energy-efficient, and programmable electromagnetic reconfiguration, provide a promising solution to overcome these limitations. This article presents a comprehensive overview of IRS-aided wireless sensing and ISAC technologies, including IRS architectures, target detection and estimation techniques, beamforming designs, and performance metrics. It further explores IRS-enabled new opportunities for more efficient performance balancing, coexistence, and networking in ISAC systems, focuses on current design bottlenecks, and outlines future research directions. This article aims to offer a unified design framework that guides the development of practical and scalable IRS-aided ISAC systems for next-generation wireless networks.

Reconfigurable Airspace: Synergizing Movable Antenna and Intelligent Surface for Low-Altitude ISAC Networks

Nov 13, 2025

Abstract: Low-altitude unmanned aerial vehicle (UAV) networks are integral to future 6G integrated sensing and communication (ISAC) systems. However, their deployment is hindered by challenges stemming from high mobility of UAVs, complex propagation environments, and the inherent trade-offs between coexisting sensing and communication functions. This article proposes a novel framework that leverages movable antennas (MAs) and intelligent reflecting surfaces (IRSs) as dual enablers to overcome these limitations. MAs, through active transceiver reconfiguration, and IRSs, via passive channel reconstruction, can work in synergy to significantly enhance system performance. Our analysis first elaborates on the fundamental gains offered by MAs and IRSs, and provides simulation results that validate the immense potential of the MA-IRS-enabled ISAC architecture. Two core UAV deployment scenarios are then investigated: (i) UAVs as ISAC users, where we focus on achieving high-precision tracking and aerial safety, and (ii) UAVs as aerial network nodes, where we address robust design and complex coupled resource optimization. Finally, key technical challenges and research opportunities are identified and analyzed for each scenario, charting a clear course for the future design of advanced low-altitude ISAC networks.

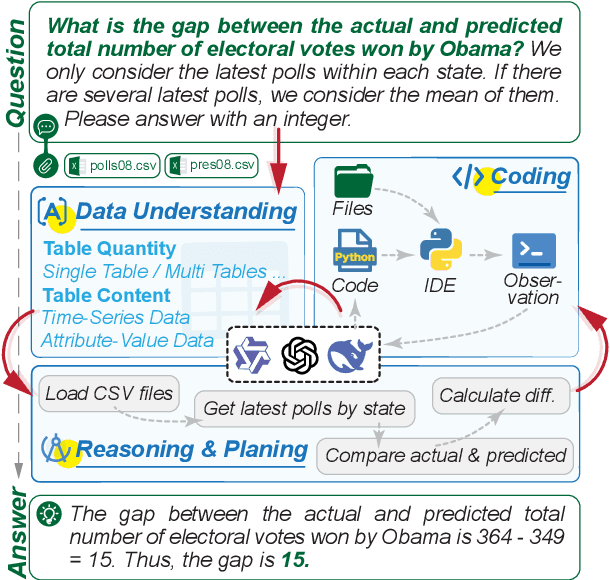

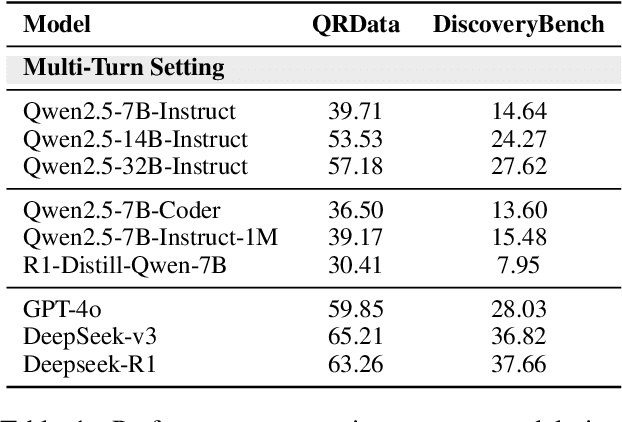

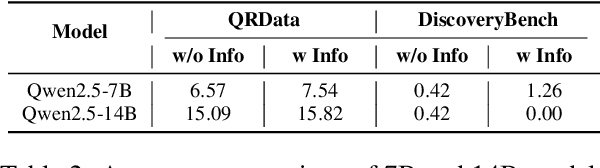

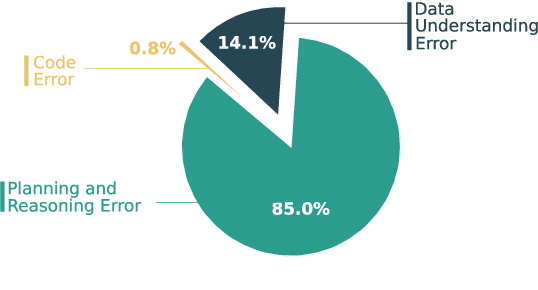

Why Do Open-Source LLMs Struggle with Data Analysis? A Systematic Empirical Study

Jun 24, 2025

Abstract: Large Language Models (LLMs) hold promise in automating data analysis tasks, yet open-source models face significant limitations in these kinds of reasoning-intensive scenarios. In this work, we investigate strategies to enhance the data analysis capabilities of open-source LLMs. By curating a seed dataset of diverse, realistic scenarios, we evaluate models across three dimensions: data understanding, code generation, and strategic planning. Our analysis reveals three key findings: (1) Strategic planning quality serves as the primary determinant of model performance; (2) Interaction design and task complexity significantly influence reasoning capabilities; (3) Data quality demonstrates a greater impact than diversity in achieving optimal performance. We leverage these insights to develop a data synthesis methodology, demonstrating significant improvements in open-source LLMs' analytical reasoning capabilities.

PADriver: Towards Personalized Autonomous Driving

May 08, 2025

Abstract: In this paper, we propose PADriver, a novel closed-loop framework for personalized autonomous driving (PAD). Built upon a Multi-modal Large Language Model (MLLM), PADriver takes streaming frames and personalized textual prompts as inputs. It autoregressively performs scene understanding, danger level estimation, and action decision. The predicted danger level reflects the risk of the potential action and provides an explicit reference for the final action, which corresponds to the preset personalized prompt. Moreover, we construct a closed-loop benchmark named PAD-Highway based on the Highway-Env simulator to comprehensively evaluate the decision performance under traffic rules. The dataset contains 250 hours of videos with high-quality annotation to facilitate the development of PAD behavior analysis. Experimental results on the constructed benchmark show that PADriver outperforms state-of-the-art approaches on different evaluation metrics, and enables various driving modes.

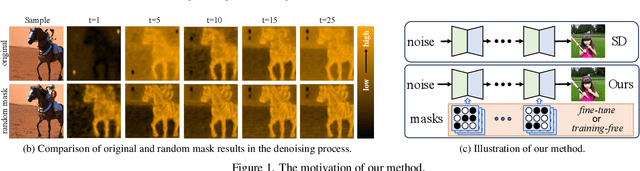

Not All Parameters Matter: Masking Diffusion Models for Enhancing Generation Ability

May 06, 2025

Abstract: Diffusion models focus on constructing basic image structures in early stages, while the refined details, including local features and textures, are generated in later stages. Thus the same network layers are forced to learn both structural and textural information simultaneously, significantly differing from traditional deep learning architectures (e.g., ResNet or GANs), which capture or generate the image semantic information at different layers. This difference inspires us to explore the time-wise diffusion models. We initially investigate the key contributions of the U-Net parameters to the denoising process and identify that properly zeroing out certain parameters (including large parameters) contributes to denoising, substantially improving the generation quality on the fly. Capitalizing on this discovery, we propose a simple yet effective method, termed "MaskUNet", that enhances generation quality with a negligible number of parameters. Our method fully leverages timestep- and sample-dependent effective U-Net parameters. To optimize MaskUNet, we offer two fine-tuning strategies: a training-based approach and a training-free approach, including tailored networks and optimization functions. In zero-shot inference on the COCO dataset, MaskUNet achieves the best FID score and further demonstrates its effectiveness in downstream task evaluations. Project page: https://gudaochangsheng.github.io/MaskUnet-Page/
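
As a rough illustration of the parameter-masking idea described in this abstract, the sketch below gates the weights of a single linear layer with a binary mask before applying them. It is not the authors' implementation: the function name `masked_linear`, the `mask_logits` array, and the "keep where logit > 0" rule are assumptions made for illustration, and the timestep- and sample-dependent mask generation mentioned in the abstract is not modeled here.

```python
import numpy as np

def masked_linear(x, weight, mask_logits):
    """Apply a linear layer whose weights are gated elementwise by a binary mask.

    x:           input vector, shape (in_features,)
    weight:      pre-trained weights, shape (out_features, in_features)
    mask_logits: hypothetical per-weight scores, same shape as `weight`;
                 a weight is kept where its score is positive, zeroed otherwise.
    """
    mask = (mask_logits > 0).astype(weight.dtype)  # binary 0/1 mask
    effective_weight = weight * mask               # zero out the masked weights
    return effective_weight @ x

# Toy example: keep only the diagonal weights of a 2x2 layer.
weight = np.array([[1.0, 2.0],
                   [3.0, 4.0]])
mask_logits = np.array([[1.0, -1.0],
                        [-1.0, 1.0]])
x = np.array([1.0, 1.0])
y = masked_linear(x, weight, mask_logits)
```

The pre-trained weights themselves are left untouched; only the mask decides which of them take part in a given forward pass.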

DiT4SR: Taming Diffusion Transformer for Real-World Image Super-Resolution

Mar 30, 2025

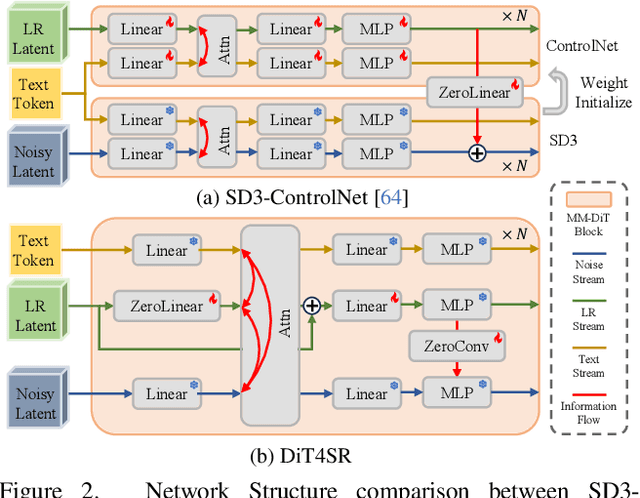

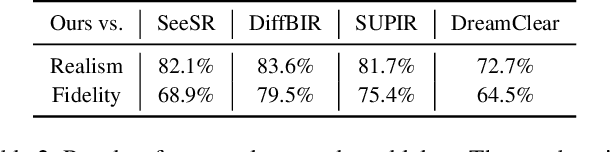

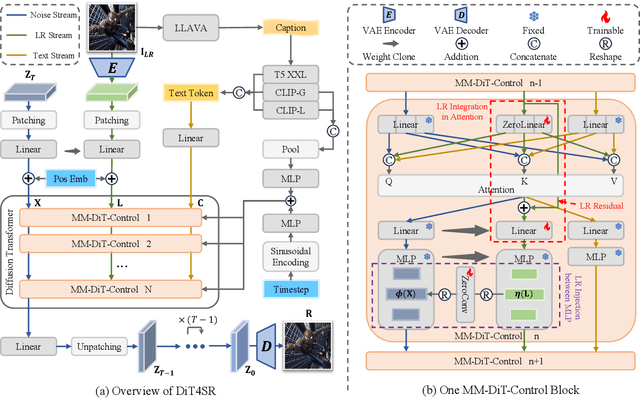

Abstract: Large-scale pre-trained diffusion models are becoming increasingly popular in solving the Real-World Image Super-Resolution (Real-ISR) problem because of their rich generative priors. The recently developed diffusion transformer (DiT) has shown overwhelming performance gains over the traditional UNet-based architecture in image generation, which raises the question: Can we adopt the advanced DiT-based diffusion model for Real-ISR? To this end, we propose DiT4SR, one of the pioneering works to tame the large-scale DiT model for Real-ISR. Instead of directly injecting embeddings extracted from low-resolution (LR) images like ControlNet, we integrate the LR embeddings into the original attention mechanism of DiT, allowing for the bidirectional flow of information between the LR latent and the generated latent. The sufficient interaction of these two streams allows the LR stream to evolve with the diffusion process, producing progressively refined guidance that better aligns with the generated latent at each diffusion step. Additionally, the LR guidance is injected into the generated latent via a cross-stream convolution layer, compensating for DiT's limited ability to capture local information. These simple but effective designs endow the DiT model with superior performance in Real-ISR, which is demonstrated by extensive experiments. Project Page: https://adam-duan.github.io/projects/dit4sr/.

Building Machine Learning Challenges for Anomaly Detection in Science

Mar 03, 2025

Abstract: Scientific discoveries are often made by finding a pattern or object that was not predicted by the known rules of science. Oftentimes, these anomalous events or objects that do not conform to the norms are an indication that the rules of science governing the data are incomplete, and something new needs to be present to explain these unexpected outliers. The challenge of finding anomalies can be confounding since it requires codifying a complete knowledge of the known scientific behaviors and then projecting these known behaviors on the data to look for deviations. When utilizing machine learning, this presents a particular challenge since we require that the model not only understands scientific data perfectly but also recognizes when the data is inconsistent and out of the scope of its trained behavior. In this paper, we present three datasets aimed at developing machine learning-based anomaly detection for disparate scientific domains covering astrophysics, genomics, and polar science. We present the different datasets along with a scheme to make machine learning challenges around the three datasets findable, accessible, interoperable, and reusable (FAIR). Furthermore, we present an approach that generalizes to future machine learning challenges, enabling the possibility of larger, more compute-intensive challenges that can ultimately lead to scientific discovery.

Pioneer: Physics-informed Riemannian Graph ODE for Entropy-increasing Dynamics

Feb 05, 2025

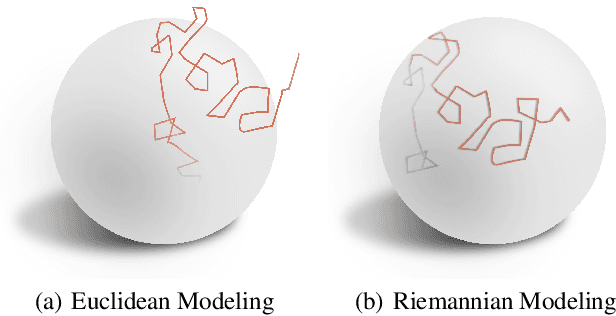

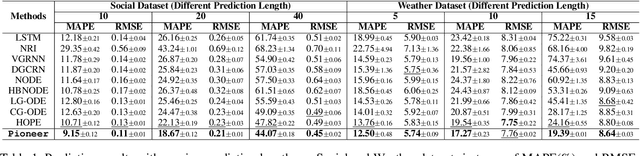

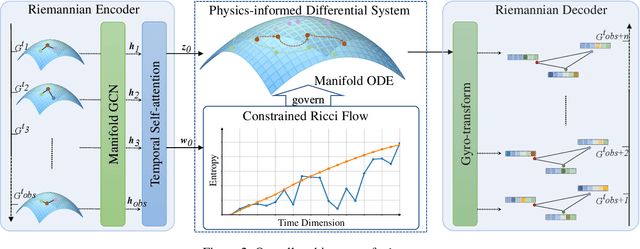

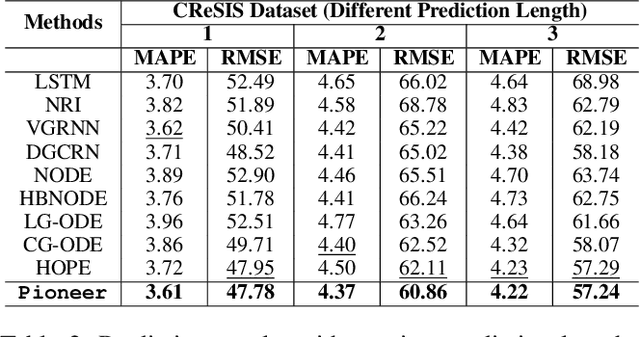

Abstract: Dynamic interacting system modeling is important for understanding and simulating real-world systems. The system is typically described as a graph, where multiple objects dynamically interact with each other and evolve over time. In recent years, graph Ordinary Differential Equations (ODEs) have received increasing research attention. While achieving encouraging results, existing solutions prioritize the traditional Euclidean space, and neglect the intrinsic geometry of the system and physics laws, e.g., the principle of entropy increase. The limitations above motivate us to rethink the system dynamics from a fresh perspective of Riemannian geometry, and pose a more realistic problem of physics-informed dynamic system modeling, considering the underlying geometry and physics law for the first time. In this paper, we present a novel physics-informed Riemannian graph ODE for a wide range of entropy-increasing dynamic systems (termed Pioneer). In particular, we formulate a differential system on the Riemannian manifold, where a manifold-valued graph ODE is governed by the proposed constrained Ricci flow, and a manifold-preserving Gyro-transform aware of system geometry. Theoretically, we show that entropy is provably non-decreasing under our formulation, obeying the physics laws. Empirical results show the superiority of Pioneer on real datasets.

Finer-CAM: Spotting the Difference Reveals Finer Details for Visual Explanation

Jan 20, 2025

Abstract: Class activation map (CAM) has been widely used to highlight image regions that contribute to class predictions. Despite its simplicity and computational efficiency, CAM often struggles to identify discriminative regions that distinguish visually similar fine-grained classes. Prior efforts address this limitation by introducing more sophisticated explanation processes, but at the cost of extra complexity. In this paper, we propose Finer-CAM, a method that retains CAM's efficiency while achieving precise localization of discriminative regions. Our key insight is that the deficiency of CAM lies not in "how" it explains, but in "what" it explains. Specifically, previous methods attempt to identify all cues contributing to the target class's logit value, which inadvertently also activates regions predictive of visually similar classes. By explicitly comparing the target class with similar classes and spotting their differences, Finer-CAM suppresses features shared with other classes and emphasizes the unique, discriminative details of the target class. Finer-CAM is easy to implement, compatible with various CAM methods, and can be extended to multi-modal models for accurate localization of specific concepts. Additionally, Finer-CAM allows adjustable comparison strength, enabling users to selectively highlight coarse object contours or fine discriminative details. Quantitatively, we show that masking out the top 5% of activated pixels by Finer-CAM results in a larger relative confidence drop compared to baselines. The source code and demo are available at https://github.com/Imageomics/Finer-CAM.
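
The contrast between explaining a single logit and explaining a difference between logits can be sketched in a few lines. The example below is a minimal illustration under stated assumptions, not the released Finer-CAM code: it assumes the classic CAM setting (a linear classifier on globally pooled feature maps), and the names `cam`, `difference_cam`, and `alpha` (standing in for the adjustable comparison strength) are hypothetical.

```python
import numpy as np

def cam(features, weights, cls):
    """Standard CAM: weight each feature channel by the classifier weight of `cls`.

    features: feature maps, shape (K, H, W)
    weights:  linear classifier weights, shape (num_classes, K)
    """
    return np.tensordot(weights[cls], features, axes=1)  # shape (H, W)

def difference_cam(features, weights, target, similar, alpha=1.0):
    """CAM of the logit difference: target class minus alpha times a similar class.

    Channels that both classes rely on cancel out, so only evidence unique
    to the target class remains. Negative evidence is clipped to zero.
    """
    w = weights[target] - alpha * weights[similar]
    return np.maximum(np.tensordot(w, features, axes=1), 0.0)

# Toy example: channel 0 is shared by both classes, channel 1 is unique to class 0.
features = np.zeros((2, 2, 2))
features[0, 0, 0] = 1.0   # shared cue at the top-left location
features[1, 1, 1] = 1.0   # unique cue at the bottom-right location
weights = np.array([[1.0, 1.0],    # class 0 uses both channels
                    [1.0, 0.0]])   # class 1 uses only the shared channel

plain = cam(features, weights, cls=0)
finer = difference_cam(features, weights, target=0, similar=1, alpha=1.0)
```

In this toy setup the plain map is active at both locations, while the difference map keeps only the location of the unique cue. Setting `alpha=0.0` recovers the plain map (after clipping), which mirrors the adjustable comparison strength mentioned in the abstract.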

Prompt-CAM: A Simpler Interpretable Transformer for Fine-Grained Analysis

Jan 16, 2025

Abstract: We present a simple usage of pre-trained Vision Transformers (ViTs) for fine-grained analysis, aiming to identify and localize the traits that distinguish visually similar categories, such as different bird species or dog breeds. Pre-trained ViTs such as DINO have shown remarkable capabilities to extract localized, informative features. However, using saliency maps like Grad-CAM can hardly point out the traits: they often locate the whole object by a blurred, coarse heatmap, not traits. We propose a novel approach Prompt Class Attention Map (Prompt-CAM) to the rescue. Prompt-CAM learns class-specific prompts for a pre-trained ViT and uses the corresponding outputs for classification. To classify an image correctly, the true-class prompt must attend to the unique image patches not seen in other classes' images, i.e., traits. As such, the true class's multi-head attention maps reveal traits and their locations. Implementation-wise, Prompt-CAM is almost a free lunch by simply modifying the prediction head of Visual Prompt Tuning (VPT). This makes Prompt-CAM fairly easy to train and apply, sharply contrasting other interpretable methods that design specific models and training processes. It is even simpler than the recently published INterpretable TRansformer (INTR), whose encoder-decoder architecture prevents it from leveraging pre-trained ViTs. Extensive empirical studies on a dozen datasets from various domains (e.g., birds, fishes, insects, fungi, flowers, food, and cars) validate Prompt-CAM's superior interpretation capability.
