Fuchun Sun

Flow-Based Policy for Online Reinforcement Learning

Jun 15, 2025

Abstract: We present FlowRL, a novel framework for online reinforcement learning that integrates flow-based policy representation with Wasserstein-2-regularized optimization. We argue that in addition to training signals, enhancing the expressiveness of the policy class is crucial for performance gains in RL. Flow-based generative models offer such potential, excelling at capturing complex, multimodal action distributions. However, their direct application in online RL is challenging due to a fundamental objective mismatch: standard flow training optimizes for static data imitation, while RL requires value-based policy optimization through a dynamic buffer, leading to difficult optimization landscapes. FlowRL first models policies via a state-dependent velocity field, generating actions through deterministic ODE integration from noise. We derive a constrained policy search objective that jointly maximizes Q through the flow policy while bounding the Wasserstein-2 distance to a behavior-optimal policy implicitly derived from the replay buffer. This formulation effectively aligns the flow optimization with the RL objective, enabling efficient and value-aware policy learning despite the complexity of the policy class. Empirical evaluations on DMControl and HumanoidBench demonstrate that FlowRL achieves competitive performance in online reinforcement learning benchmarks.
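As a rough illustration of the action-generation step described in this abstract, the sketch below integrates a state-dependent velocity field from Gaussian noise with fixed-step Euler updates. It is not the FlowRL implementation: `velocity_field` is a hypothetical hand-written stand-in for a learned network, and the names `sample_action` and `num_steps` are assumptions made for this example.

```python
import numpy as np

def velocity_field(state, action, t):
    """Toy stand-in for a learned network v(s, a, t) (an assumption, not FlowRL's model).

    It pulls the action toward a state-dependent target; t is unused here,
    although a learned field would normally depend on it.
    """
    target = np.tanh(state[: action.shape[0]])
    return target - action

def sample_action(state, action_dim, num_steps=10, rng=None):
    """Generate an action by Euler-integrating da/dt = v(s, a, t) from t=0 to t=1."""
    rng = np.random.default_rng() if rng is None else rng
    action = rng.standard_normal(action_dim)  # initial sample a_0 ~ N(0, I)
    dt = 1.0 / num_steps
    for k in range(num_steps):
        t = k * dt
        action = action + dt * velocity_field(state, action, t)
    return action

state = np.array([0.5, -1.0, 2.0])
action = sample_action(state, action_dim=2, rng=np.random.default_rng(0))
print(action)
```

Once the initial noise is fixed, the integration is deterministic, so the same seed yields the same action. More Euler steps trade compute for a closer approximation of the continuous-time flow.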

On the Transferability and Discriminability of Repersentation Learning in Unsupervised Domain Adaptation

May 28, 2025

Abstract: In this paper, we addressed the limitation of relying solely on distribution alignment and source-domain empirical risk minimization in Unsupervised Domain Adaptation (UDA). Our information-theoretic analysis showed that this standard adversarial-based framework neglects the discriminability of target-domain features, leading to suboptimal performance. To bridge this theoretical-practical gap, we defined "good representation learning" as guaranteeing both transferability and discriminability, and proved that an additional loss term targeting target-domain discriminability is necessary. Building on these insights, we proposed a novel adversarial-based UDA framework that explicitly integrates a domain alignment objective with a discriminability-enhancing constraint. Instantiated as Domain-Invariant Representation Learning with Global and Local Consistency (RLGLC), our method leverages Asymmetrically-Relaxed Wasserstein of Wasserstein Distance (AR-WWD) to address class imbalance and semantic dimension weighting, and employs a local consistency mechanism to preserve fine-grained target-domain discriminative information. Extensive experiments across multiple benchmark datasets demonstrate that RLGLC consistently surpasses state-of-the-art methods, confirming the value of our theoretical perspective and underscoring the necessity of enforcing both transferability and discriminability in adversarial-based UDA.

Multi-segment Soft Robot Control via Deep Koopman-based Model Predictive Control

May 01, 2025

Abstract: Soft robots, whose multiple segments of soft material provide flexibility and compliance, offer safer interaction and more dexterous operation in the environment than conventional rigid robots. However, because their dynamics are high-dimensional, nonlinear, and time-varying, with infinitely many degrees of freedom, precise and dynamic control such as trajectory tracking and position reaching remains challenging. To address these challenges, we propose a framework of Deep Koopman-based Model Predictive Control (DK-MPC) for handling multi-segment soft robots. We first employ a deep learning approach with sampled data to approximate the Koopman operator, which linearizes the high-dimensional nonlinear dynamics of the soft robots into a finite-dimensional linear representation. Second, this linearized model is used within a model predictive control framework to compute optimal control inputs that minimize the tracking error between the desired and actual state trajectories. Real-world experiments on the soft robot "Chordata" demonstrate that DK-MPC can achieve high-precision control, showing its potential for future applications to soft robots.
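The idea of replacing nonlinear dynamics with a linear model in a lifted space can be sketched on a toy system. The example below is not DK-MPC: it swaps the deep network for a hand-picked lifting function and fits the lifted model z_next ≈ A z + B u by ordinary least squares (an EDMD-style fit). The dynamics in `step`, the features in `lift`, and all function names are assumptions chosen so that the lifted model is exactly linear.

```python
import numpy as np

def step(x, u):
    """Toy nonlinear dynamics (hypothetical, chosen so a finite lifting is exact)."""
    return np.array([0.9 * x[0], 0.8 * x[1] + 0.5 * x[0] ** 2 + u[0]])

def lift(x):
    """Hand-picked lifting z = [x1, x2, x1^2]; a learned encoder would replace this."""
    return np.array([x[0], x[1], x[0] ** 2])

def fit_lifted_linear_model(X, U, X_next):
    """Least-squares fit of z_next ≈ A z + B u over snapshot pairs."""
    Z = np.array([lift(x) for x in X])
    Z_next = np.array([lift(x) for x in X_next])
    ZU = np.hstack([Z, U])                           # regressors [z, u] per row
    K, *_ = np.linalg.lstsq(ZU, Z_next, rcond=None)  # Z_next ≈ ZU @ K
    nz = Z.shape[1]
    return K[:nz].T, K[nz:].T                        # A is (nz, nz), B is (nz, nu)

# Collect random snapshot data and fit the lifted linear model.
rng = np.random.default_rng(0)
X = rng.uniform(-1.0, 1.0, size=(200, 2))
U = rng.uniform(-1.0, 1.0, size=(200, 1))
X_next = np.array([step(x, u) for x, u in zip(X, U)])
A, B = fit_lifted_linear_model(X, U, X_next)

# One-step prediction in lifted space; the first two entries are the state.
x0, u0 = np.array([0.3, -0.2]), np.array([0.1])
z_pred = A @ lift(x0) + B @ u0
print(z_pred[:2], step(x0, u0))
```

Because the fitted model is linear in the lifted state and the input, a tracking cost over a prediction horizon becomes a quadratic function of the control sequence, which is what makes a standard MPC solver applicable.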

Adversarial Locomotion and Motion Imitation for Humanoid Policy Learning

Apr 19, 2025

Abstract: Humans exhibit diverse and expressive whole-body movements. However, attaining human-like whole-body coordination in humanoid robots remains challenging, as conventional approaches that mimic whole-body motions often neglect the distinct roles of upper and lower body. This oversight leads to computationally intensive policy learning and frequently causes robot instability and falls during real-world execution. To address these issues, we propose Adversarial Locomotion and Motion Imitation (ALMI), a novel framework that enables adversarial policy learning between upper and lower body. Specifically, the lower body aims to provide robust locomotion capabilities to follow velocity commands while the upper body tracks various motions. Conversely, the upper-body policy ensures effective motion tracking when the robot executes velocity-based movements. Through iterative updates, these policies achieve coordinated whole-body control, which can be extended to loco-manipulation tasks with teleoperation systems. Extensive experiments demonstrate that our method achieves robust locomotion and precise motion tracking in both simulation and on the full-size Unitree H1 robot. Additionally, we release a large-scale whole-body motion control dataset featuring high-quality episodic trajectories from MuJoCo simulations deployable on real robots. The project page is https://almi-humanoid.github.io.

Self-Supervised Enhancement of Forward-Looking Sonar Images: Bridging Cross-Modal Degradation Gaps through Feature Space Transformation and Multi-Frame Fusion

Apr 16, 2025

Abstract: Enhancing forward-looking sonar images is critical for accurate underwater target detection. Current deep learning methods mainly rely on supervised training with simulated data, but the difficulty in obtaining high-quality real-world paired data limits their practical use and generalization. Although self-supervised approaches from remote sensing partially alleviate data shortages, they neglect the cross-modal degradation gap between sonar and remote sensing images. Directly transferring pretrained weights often leads to overly smooth sonar images, detail loss, and insufficient brightness. To address this, we propose a feature-space transformation that maps sonar images from the pixel domain to a robust feature domain, effectively bridging the degradation gap. Additionally, our self-supervised multi-frame fusion strategy leverages complementary inter-frame information to naturally remove speckle noise and enhance target-region brightness. Experiments on three self-collected real-world forward-looking sonar datasets show that our method significantly outperforms existing approaches, effectively suppressing noise, preserving detailed edges, and substantially improving brightness, demonstrating strong potential for underwater target detection applications.

Video4DGen: Enhancing Video and 4D Generation through Mutual Optimization

Apr 05, 2025

Abstract: The advancement of 4D (i.e., sequential 3D) generation opens up new possibilities for lifelike experiences in various applications, where users can explore dynamic objects or characters from any viewpoint. Meanwhile, video generative models are receiving particular attention given their ability to produce realistic and imaginative frames. These models are also observed to exhibit strong 3D consistency, indicating the potential to act as world simulators. In this work, we present Video4DGen, a novel framework that excels in generating 4D representations from single or multiple generated videos as well as generating 4D-guided videos. This framework is pivotal for creating high-fidelity virtual content that maintains both spatial and temporal coherence. The 4D outputs generated by Video4DGen are represented using our proposed Dynamic Gaussian Surfels (DGS), which optimizes time-varying warping functions to transform Gaussian surfels (surface elements) from a static state to a dynamically warped state. We design warped-state geometric regularization and refinements on Gaussian surfels to preserve the structural integrity and fine-grained appearance details. To perform 4D generation from multiple videos and capture representation across spatial, temporal, and pose dimensions, we design multi-video alignment, root pose optimization, and pose-guided frame sampling strategies. Leveraging continuous warping fields also enables a precise depiction of pose, motion, and deformation over per-video frames. Further, to improve the overall fidelity from the observation of all camera poses, Video4DGen performs novel-view video generation guided by the 4D content, with the proposed confidence-filtered DGS to enhance the quality of generated sequences. With the ability of 4D and video generation, Video4DGen offers a powerful tool for applications in virtual reality, animation, and beyond.

Siamese Foundation Models for Crystal Structure Prediction

Mar 13, 2025

Abstract: Crystal Structure Prediction (CSP), which aims to generate stable crystal structures from compositions, represents a critical pathway for discovering novel materials. While structure prediction tasks in other domains, such as proteins, have seen remarkable progress, CSP remains a relatively underexplored area due to the more complex geometries inherent in crystal structures. In this paper, we propose Siamese foundation models specifically designed to address CSP. Our pretrain-finetune framework, named DAO, comprises two complementary foundation models: DAO-G for structure generation and DAO-P for energy prediction. Experiments on CSP benchmarks (MP-20 and MPTS-52) demonstrate that our DAO-G significantly surpasses state-of-the-art (SOTA) methods across all metrics. Extensive ablation studies further confirm that DAO-G excels in generating diverse polymorphic structures, and the dataset relaxation and energy guidance provided by DAO-P are essential for enhancing DAO-G's performance. When applied to three real-world superconductors ($\text{CsV}_3\text{Sb}_5$, $\text{Zr}_{16}\text{Rh}_8\text{O}_4$ and $\text{Zr}_{16}\text{Pd}_8\text{O}_4$) that are known to be challenging to analyze, our foundation models achieve accurate critical temperature predictions and structure generations. For instance, on $\text{CsV}_3\text{Sb}_5$, DAO-G generates a structure close to the experimental one with an RMSE of 0.0085; DAO-P predicts the $T_c$ value with high accuracy (2.26 K vs. the ground-truth value of 2.30 K). In contrast, conventional DFT calculators like Quantum Espresso only successfully derive the structure of the first superconductor within an acceptable time, while the RMSE is nearly 8 times larger, and the computation speed is more than 1000 times slower. These compelling results collectively highlight the potential of our approach for advancing materials science research and development.

Tacchi 2.0: A Low Computational Cost and Comprehensive Dynamic Contact Simulator for Vision-based Tactile Sensors

Mar 12, 2025

Abstract: With the development of robotics technology, some tactile sensors, such as vision-based sensors, have been applied to contact-rich robotics tasks. However, the limited durability of vision-based tactile sensors significantly increases the cost of tactile information acquisition. Utilizing simulation to generate tactile data has emerged as a reliable approach to address this issue. While data-driven methods for tactile data generation lack robustness, finite element method (FEM)-based approaches incur significant computational costs. To address these issues, we integrated a pinhole camera model into Tacchi, a low-computational-cost vision-based tactile simulator that uses the Material Point Method (MPM) as its simulation method, enabling the simulation of marker motion images. The upgraded simulator, Tacchi 2.0, can simulate tactile images, marker motion images, and joint images under different motion states such as pressing, slipping, and rotating. Experimental results demonstrate the reliability of our method and its robustness across various vision-based tactile sensors.

Time Series Domain Adaptation via Latent Invariant Causal Mechanism

Feb 23, 2025

Abstract: Time series domain adaptation aims to transfer the complex temporal dependence from the labeled source domain to the unlabeled target domain. Recent advances leverage the stable causal mechanism over observed variables to model the domain-invariant temporal dependence. However, modeling precise causal structures in high-dimensional data, such as videos, remains challenging. Additionally, direct causal edges may not exist among observed variables (e.g., pixels). These limitations hinder the applicability of existing approaches to real-world scenarios. To address these challenges, we observe that high-dimensional time series data are generated from low-dimensional latent variables, which motivates us to model the causal mechanisms of the temporal latent process. Based on this intuition, we propose a latent causal mechanism identification framework that guarantees the uniqueness of the reconstructed latent causal structures. Specifically, we first identify latent variables by utilizing sufficient changes in historical information. Moreover, by enforcing the sparsity of the relationships among latent variables, we can achieve identifiable latent causal structures. Building on the theoretical results, we develop the Latent Causality Alignment (LCA) model that leverages variational inference and incorporates an intra-domain latent sparsity constraint for latent structure reconstruction and an inter-domain latent sparsity constraint for domain-invariant structure reconstruction. Experimental results on eight benchmarks show a general improvement in the domain-adaptive time series classification and forecasting tasks, highlighting the effectiveness of our method in real-world scenarios. Code is available at https://github.com/DMIRLAB-Group/LCA.

Pick-and-place Manipulation Across Grippers Without Retraining: A Learning-optimization Diffusion Policy Approach

Feb 21, 2025

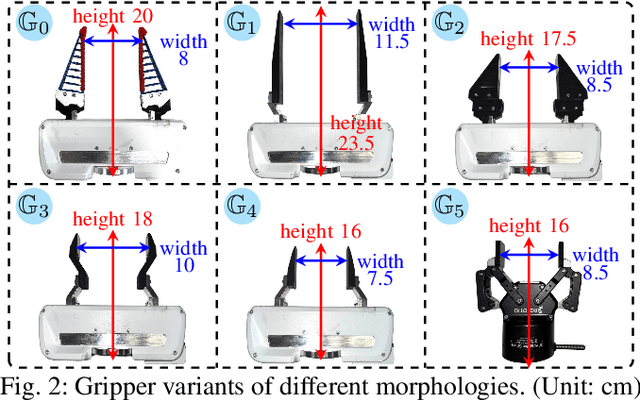

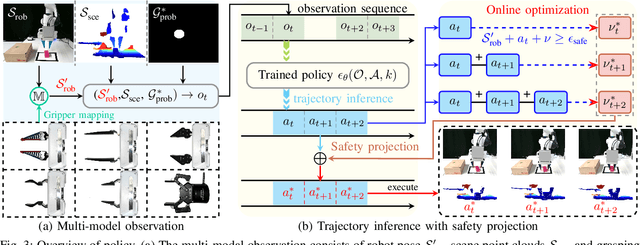

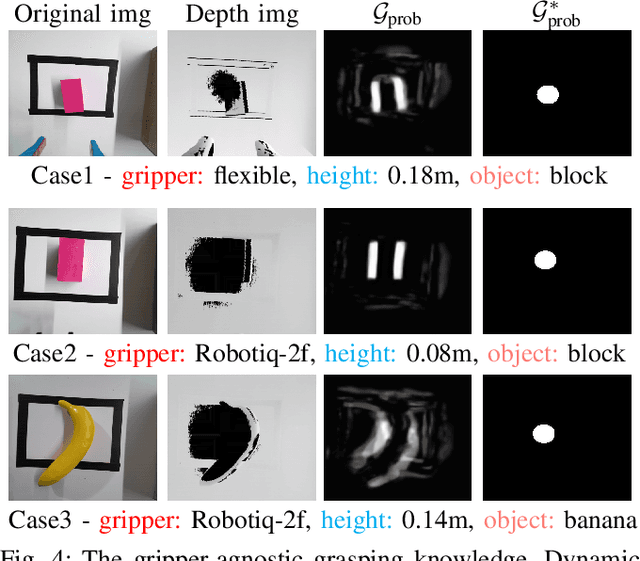

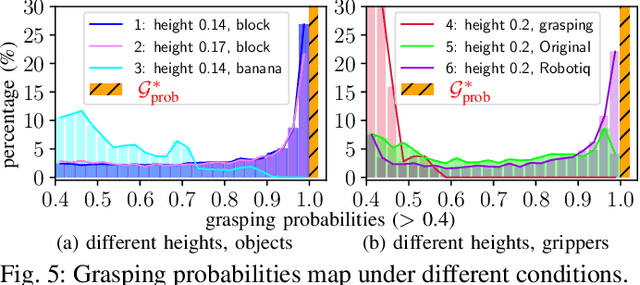

Abstract: Current robotic pick-and-place policies typically require consistent gripper configurations across training and inference. This constraint imposes high retraining or fine-tuning costs, especially for imitation learning-based approaches, when adapting to new end-effectors. To mitigate this issue, we present a diffusion-based policy with a hybrid learning-optimization framework, enabling zero-shot adaptation to novel grippers without additional data collection for policy retraining. During training, the policy learns manipulation primitives from demonstrations collected using a base gripper. At inference, a diffusion-based optimization strategy dynamically enforces kinematic and safety constraints, ensuring that generated trajectories align with the physical properties of unseen grippers. This is achieved through a constrained denoising procedure that adapts trajectories to gripper-specific parameters (e.g., tool-center-point offsets, jaw widths) while preserving collision avoidance and task feasibility. We validate our method on a Franka Panda robot across six gripper configurations, including 3D-printed fingertips, a flexible silicone gripper, and a Robotiq 2F-85 gripper. Our approach achieves a 93.3% average task success rate across grippers (vs. 23.3-26.7% for diffusion policy baselines), supporting tool-center-point variations of 16-23.5 cm and jaw widths of 7.5-11.5 cm. The results demonstrate that constrained diffusion enables robust cross-gripper manipulation while maintaining the sample efficiency of imitation learning, eliminating the need for gripper-specific retraining. Video and code are available at https://github.com/yaoxt3/GADP.
