Zhe Liu

PILOT: A Perceptive Integrated Low-level Controller for Loco-manipulation over Unstructured Scenes

Jan 24, 2026

Abstract: Humanoid robots hold great potential for diverse interactions and daily service tasks within human-centered environments, necessitating controllers that seamlessly integrate precise locomotion with dexterous manipulation. However, most existing whole-body controllers lack exteroceptive awareness of the surrounding environment, rendering them insufficient for stable task execution in complex, unstructured scenarios. To address this challenge, we propose PILOT, a unified single-stage reinforcement learning (RL) framework tailored for perceptive loco-manipulation, which synergizes perceptive locomotion and expansive whole-body control within a single policy. To enhance terrain awareness and ensure precise foot placement, we design a cross-modal context encoder that fuses prediction-based proprioceptive features with attention-based perceptive representations. Furthermore, we introduce a Mixture-of-Experts (MoE) policy architecture to coordinate diverse motor skills, facilitating better specialization across distinct motion patterns. Extensive experiments in both simulation and on the physical Unitree G1 humanoid robot validate the efficacy of our framework. PILOT demonstrates superior stability, command tracking precision, and terrain traversability compared to existing baselines. These results highlight its potential to serve as a robust, foundational low-level controller for loco-manipulation in unstructured scenes.

The Llama 4 Herd: Architecture, Training, Evaluation, and Deployment Notes

Jan 15, 2026

Abstract: This document consolidates publicly reported technical details about Meta's Llama 4 model family. It summarizes (i) released variants (Scout and Maverick) and the broader herd context including the previewed Behemoth teacher model, (ii) architectural characteristics beyond a high-level MoE description covering routed/shared-expert structure, early-fusion multimodality, and long-context design elements reported for Scout (iRoPE and length generalization strategies), (iii) training disclosures spanning pre-training, mid-training for long-context extension, and post-training methodology (lightweight SFT, online RL, and lightweight DPO) as described in release materials, (iv) developer-reported benchmark results for both base and instruction-tuned checkpoints, and (v) practical deployment constraints observed across major serving environments, including provider-specific context limits and quantization packaging. The manuscript also summarizes licensing obligations relevant to redistribution and derivative naming, and reviews publicly described safeguards and evaluation practices. The goal is to provide a compact technical reference for researchers and practitioners who need precise, source-backed facts about Llama 4.

Dr. Zero: Self-Evolving Search Agents without Training Data

Jan 11, 2026

Abstract: As high-quality data becomes increasingly difficult to obtain, data-free self-evolution has emerged as a promising paradigm. This approach allows large language models (LLMs) to autonomously generate and solve complex problems, thereby improving their reasoning capabilities. However, multi-turn search agents struggle in data-free self-evolution due to limited question diversity and the substantial compute required for multi-step reasoning and tool use. In this work, we introduce Dr. Zero, a framework enabling search agents to effectively self-evolve without any training data. In particular, we design a self-evolution feedback loop where a proposer generates diverse questions to train a solver initialized from the same base model. As the solver evolves, it incentivizes the proposer to produce increasingly difficult yet solvable tasks, thus establishing an automated curriculum to refine both agents. To enhance training efficiency, we also introduce hop-grouped relative policy optimization (HRPO). This method clusters structurally similar questions to construct group-level baselines, effectively minimizing the sampling overhead in evaluating each query's individual difficulty and solvability. Consequently, HRPO significantly reduces the compute requirements for solver training without compromising performance or stability. Extensive experimental results demonstrate that the data-free Dr. Zero matches or surpasses fully supervised search agents, proving that complex reasoning and search capabilities can emerge solely through self-evolution.
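
The group-level baseline idea mentioned in the abstract can be illustrated with a minimal Python sketch. It assumes that "structurally similar" questions are those with the same hop count and that the baseline is the mean reward of the group. The function name `hop_grouped_advantages`, the `(hops, reward)` tuple layout, and the omission of any variance normalization are illustrative assumptions, not details taken from the paper.

```python
from collections import defaultdict
from statistics import mean


def hop_grouped_advantages(samples):
    """Return one advantage per sample: reward minus its group's mean reward.

    `samples` is a list of (hops, reward) pairs. Grouping by hop count is an
    assumption made for this sketch; the paper's clustering may differ.
    """
    # Collect rewards per hop-count group.
    groups = defaultdict(list)
    for hops, reward in samples:
        groups[hops].append(reward)

    # One shared baseline per group instead of one per individual question.
    baselines = {hops: mean(rewards) for hops, rewards in groups.items()}

    # Advantage of each sample relative to its group baseline.
    return [reward - baselines[hops] for hops, reward in samples]


# Hypothetical rewards: two 1-hop questions and two 2-hop questions.
example = [(1, 1.0), (1, 0.0), (2, 1.0), (2, 1.0)]
advantages = hop_grouped_advantages(example)
```

Because the baseline is shared across a group, each question needs only a single rollout to receive an advantage estimate, which is one plausible reading of how the sampling overhead is reduced.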

MGPC: Multimodal Network for Generalizable Point Cloud Completion With Modality Dropout and Progressive Decoding

Jan 07, 2026

Abstract: Point cloud completion aims to recover complete 3D geometry from partial observations caused by limited viewpoints and occlusions. Existing learning-based works, including 3D Convolutional Neural Network (CNN)-based, point-based, and Transformer-based methods, have achieved strong performance on synthetic benchmarks. However, due to the limitations of modality, scalability, and generative capacity, their generalization to novel objects and real-world scenarios remains challenging. In this paper, we propose MGPC, a generalizable multimodal point cloud completion framework that integrates point clouds, RGB images, and text within a unified architecture. MGPC introduces an innovative modality dropout strategy, a Transformer-based fusion module, and a novel progressive generator to improve robustness, scalability, and geometric modeling capability. We further develop an automatic data generation pipeline and construct MGPC-1M, a large-scale benchmark with over 1,000 categories and one million training pairs. Extensive experiments on MGPC-1M and in-the-wild data demonstrate that the proposed method consistently outperforms prior baselines and exhibits strong generalization under real-world conditions.

GeoTeacher: Geometry-Guided Semi-Supervised 3D Object Detection

Dec 29, 2025

Abstract: Semi-supervised 3D object detection, aiming to explore unlabeled data for boosting 3D object detectors, has emerged as an active research area in recent years. Some previous methods have shown substantial improvements by either employing heterogeneous teacher models to provide high-quality pseudo labels or enforcing feature-perspective consistency between the teacher and student networks. However, these methods overlook the fact that the model usually tends to exhibit low sensitivity to object geometries with limited labeled data, making it difficult to capture geometric information, which is crucial for enhancing the student model's ability in object perception and localization. In this paper, we propose GeoTeacher to enhance the student model's ability to capture geometric relations of objects with limited training data, especially unlabeled data. We design a keypoint-based geometric relation supervision module that transfers the teacher model's knowledge of object geometry to the student, thereby improving the student's capability in understanding geometric relations. Furthermore, we introduce a voxel-wise data augmentation strategy that increases the diversity of object geometries, thereby further improving the student model's ability to comprehend geometric structures. To preserve the integrity of distant objects during augmentation, we incorporate a distance-decay mechanism into this strategy. Moreover, GeoTeacher can be combined with different SS3D methods to further improve their performance. Extensive experiments on the ONCE and Waymo datasets indicate the effectiveness and generalization of our method, and we achieve new state-of-the-art results. Code will be available at https://github.com/SII-Whaleice/GeoTeacher

Reloc-VGGT: Visual Re-localization with Geometry Grounded Transformer

Dec 26, 2025

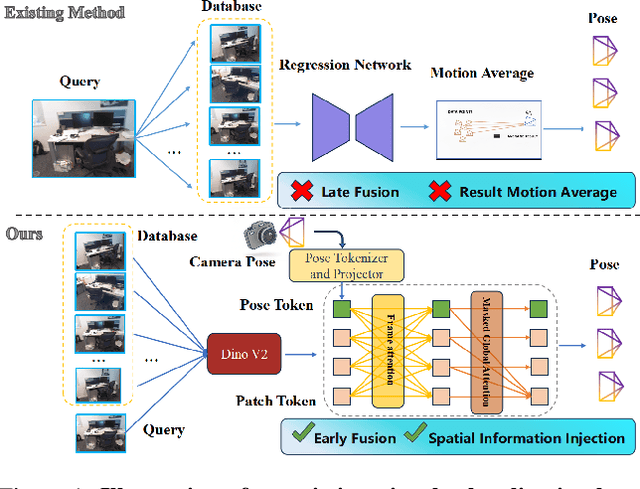

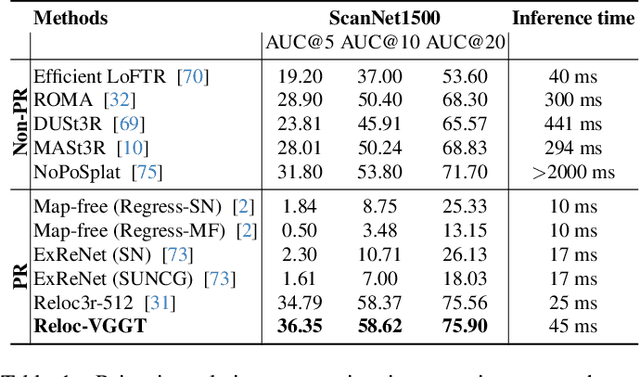

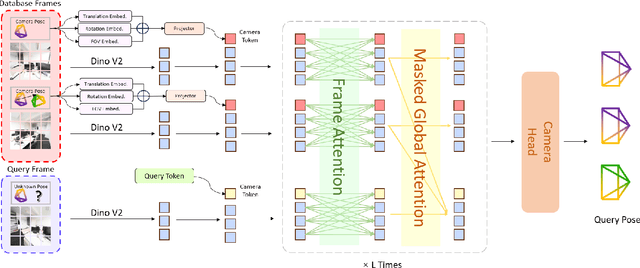

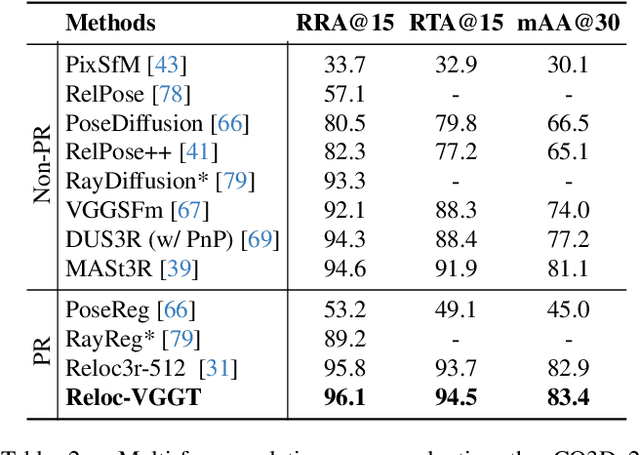

Abstract: Visual localization has traditionally been formulated as a pair-wise pose regression problem. Existing approaches mainly estimate relative poses between two images and employ a late-fusion strategy to obtain absolute pose estimates. However, the late motion average is often insufficient for effectively integrating spatial information, and its accuracy degrades in complex environments. In this paper, we present the first visual localization framework that performs multi-view spatial integration through an early-fusion mechanism, enabling robust operation in both structured and unstructured environments. Our framework is built upon the VGGT backbone, which encodes multi-view 3D geometry, and we introduce a pose tokenizer and projection module to more effectively exploit spatial relationships from multiple database views. Furthermore, we propose a novel sparse mask attention strategy that reduces computational cost by avoiding the quadratic complexity of global attention, thereby enabling real-time performance at scale. Trained on approximately eight million posed image pairs, Reloc-VGGT demonstrates strong accuracy and remarkable generalization ability. Extensive experiments across diverse public datasets consistently validate the effectiveness and efficiency of our approach, delivering high-quality camera pose estimates in real time while maintaining robustness to unseen environments. Our code and models will be publicly released upon acceptance at https://github.com/dtc111111/Reloc-VGGT

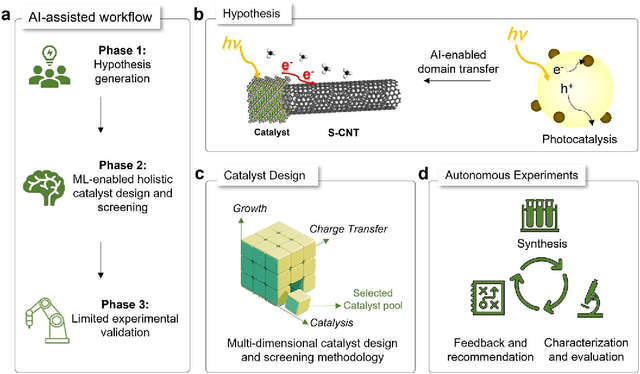

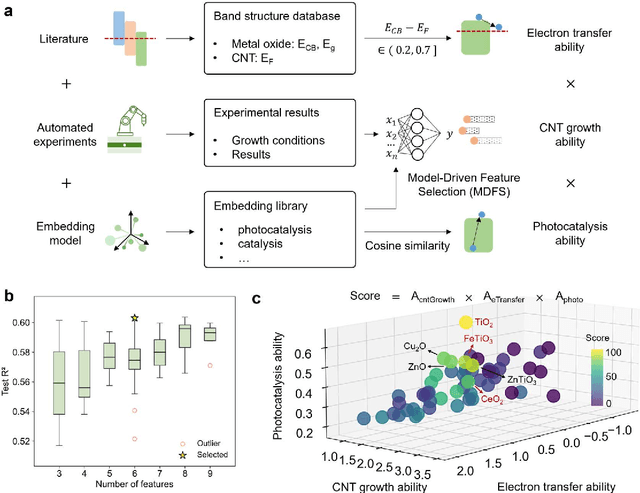

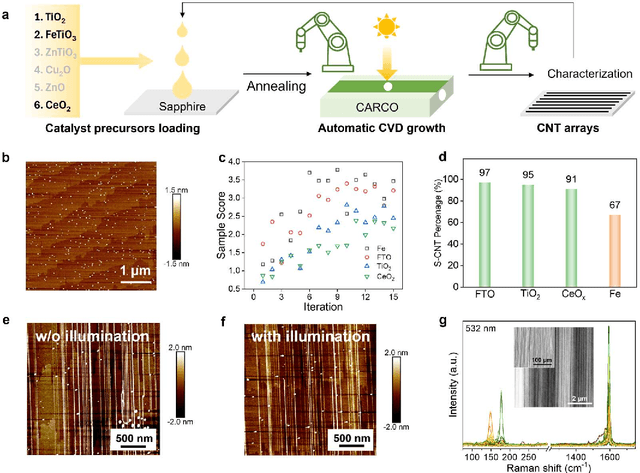

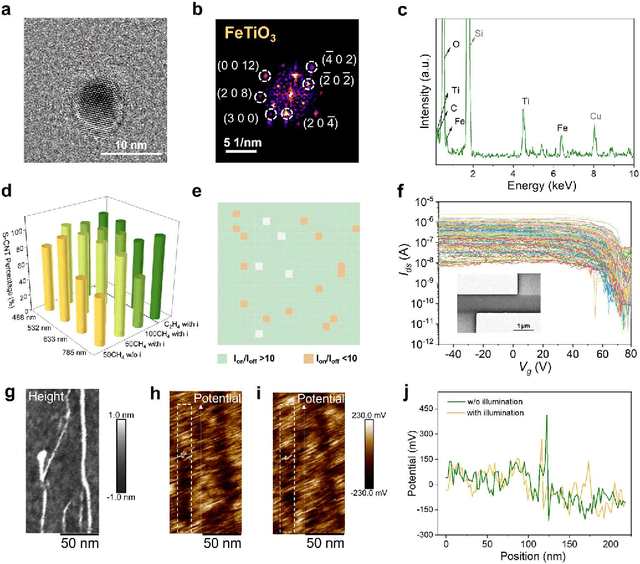

Artificial Intelligence-Enabled Holistic Design of Catalysts Tailored for Semiconducting Carbon Nanotube Growth

Dec 18, 2025

Abstract: Catalyst design is crucial for materials synthesis, especially for complex reaction networks. Strategies like collaborative catalytic systems and multifunctional catalysts are effective but face challenges at the nanoscale. Carbon nanotube (CNT) synthesis involves complicated nanoscale catalytic reactions, so achieving high-density, high-quality semiconducting CNTs demands innovative catalyst design. In this work, we present a holistic framework integrating machine learning into traditional catalyst design for semiconducting CNT synthesis. It combines knowledge-based insights with data-driven techniques and relies on three key components: open-access electronic structure databases for precise physicochemical descriptors, a pre-trained natural language processing-based embedding model for higher-level abstractions, and physics-driven predictive models based on experimental data. Through this framework, a new method for selective semiconducting CNT synthesis via catalyst-mediated electron injection, tuned by light during growth, is proposed. Fifty-four candidate catalysts are screened, and three with high potential are identified. High-throughput experiments validate the predictions, with semiconducting selectivity exceeding 91% and the FeTiO3 catalyst reaching 98.6%. This approach not only addresses semiconducting CNT synthesis but also offers a generalizable methodology for global catalyst design and nanomaterials synthesis, advancing materials science in precise control.

DrivePI: Spatial-aware 4D MLLM for Unified Autonomous Driving Understanding, Perception, Prediction and Planning

Dec 14, 2025

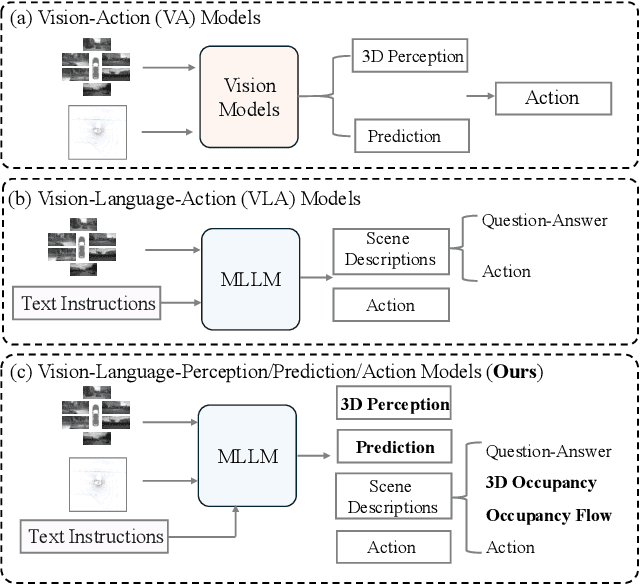

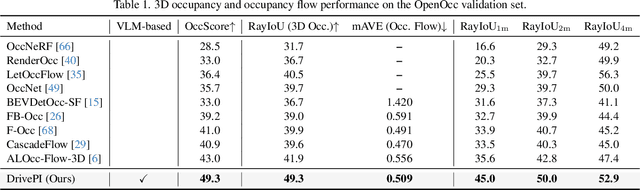

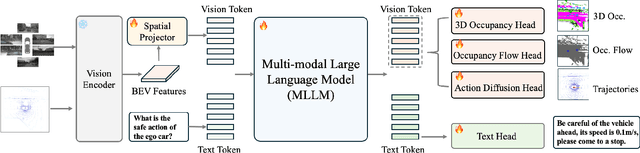

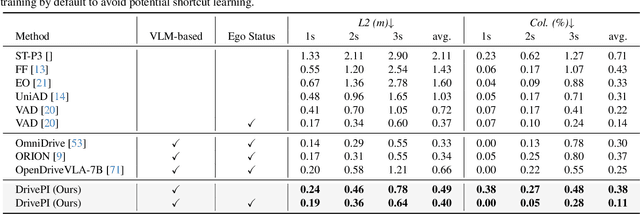

Abstract: Although multi-modal large language models (MLLMs) have shown strong capabilities across diverse domains, their application in generating fine-grained 3D perception and prediction outputs in autonomous driving remains underexplored. In this paper, we propose DrivePI, a novel spatial-aware 4D MLLM that serves as a unified Vision-Language-Action (VLA) framework and is also compatible with vision-action (VA) models. Our method jointly performs spatial understanding, 3D perception (i.e., 3D occupancy), prediction (i.e., occupancy flow), and planning (i.e., action outputs) in parallel through end-to-end optimization. To obtain both precise geometric information and rich visual appearance, our approach integrates point clouds, multi-view images, and language instructions within a unified MLLM architecture. We further develop a data engine to generate text-occupancy and text-flow QA pairs for 4D spatial understanding. Remarkably, with only a 0.5B Qwen2.5 model as the MLLM backbone, DrivePI as a single unified model matches or exceeds both existing VLA models and specialized VA models. Specifically, compared to VLA models, DrivePI outperforms OpenDriveVLA-7B by 2.5% mean accuracy on nuScenes-QA and reduces collision rate by 70% over ORION (from 0.37% to 0.11%) on nuScenes. Against specialized VA models, DrivePI surpasses FB-OCC by 10.3 RayIoU for 3D occupancy on OpenOcc, reduces the mAVE from 0.591 to 0.509 for occupancy flow on OpenOcc, and achieves 32% lower L2 error than VAD (from 0.72m to 0.49m) for planning on nuScenes. Code will be available at https://github.com/happinesslz/DrivePI
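
The percentage reductions quoted in the abstract are relative reductions, which can be checked from the before/after values it reports. The short Python sketch below does that arithmetic; the helper name `relative_reduction` is hypothetical and introduced only for illustration.

```python
def relative_reduction(before, after):
    """Fraction by which `after` is lower than `before`."""
    return (before - after) / before


# Before/after values as quoted in the abstract.
collision = relative_reduction(0.37, 0.11)  # collision rate vs. ORION
l2_error = relative_reduction(0.72, 0.49)   # L2 planning error vs. VAD

collision_pct = round(collision * 100)
l2_error_pct = round(l2_error * 100)
print(collision_pct, l2_error_pct)
```

Rounded to whole percentages, both values agree with the 70% and 32% figures stated in the abstract.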

GenieDrive: Towards Physics-Aware Driving World Model with 4D Occupancy Guided Video Generation

Dec 14, 2025

Abstract: A physics-aware driving world model is essential for drive planning, out-of-distribution data synthesis, and closed-loop evaluation. However, existing methods often rely on a single diffusion model to directly map driving actions to videos, which makes learning difficult and leads to physically inconsistent outputs. To overcome these challenges, we propose GenieDrive, a novel framework designed for physics-aware driving video generation. Our approach starts by generating 4D occupancy, which serves as a physics-informed foundation for subsequent video generation. 4D occupancy contains rich physical information, including high-resolution 3D structures and dynamics. To facilitate effective compression of such high-resolution occupancy, we propose a VAE that encodes occupancy into a latent tri-plane representation, reducing the latent size to only 58% of that used in previous methods. We further introduce Mutual Control Attention (MCA) to accurately model the influence of control on occupancy evolution, and we jointly train the VAE and the subsequent prediction module in an end-to-end manner to maximize forecasting accuracy. Together, these designs yield a 7.2% improvement in forecasting mIoU at an inference speed of 41 FPS, while using only 3.47 M parameters. Additionally, a Normalized Multi-View Attention is introduced in the video generation model to generate multi-view driving videos with guidance from our 4D occupancy, significantly improving video quality with a 20.7% reduction in FVD. Experiments demonstrate that GenieDrive enables highly controllable, multi-view consistent, and physics-aware driving video generation.

Beyond Elicitation: Provision-based Prompt Optimization for Knowledge-Intensive Tasks

Nov 13, 2025

Abstract: While prompt optimization has emerged as a critical technique for enhancing language model performance, existing approaches primarily focus on elicitation-based strategies that search for optimal prompts to activate models' capabilities. These methods exhibit fundamental limitations when addressing knowledge-intensive tasks, as they operate within fixed parametric boundaries rather than providing the factual knowledge, terminology precision, and reasoning patterns required in specialized domains. To address these limitations, we propose Knowledge-Provision-based Prompt Optimization (KPPO), a framework that reformulates prompt optimization as systematic knowledge integration rather than potential elicitation. KPPO introduces three key innovations: 1) a knowledge gap filling mechanism for knowledge gap identification and targeted remediation; 2) a batch-wise candidate evaluation approach that considers both performance improvement and distributional stability; 3) an adaptive knowledge pruning strategy that balances performance and token efficiency, reducing token usage by up to 29%. Extensive evaluation on 15 knowledge-intensive benchmarks from various domains demonstrates KPPO's superiority over elicitation-based methods, with an average performance improvement of ~6% over the strongest baseline while achieving comparable or lower token consumption. Code at: https://github.com/xyz9911/KPPO.
