Yu Pan

Learning Shortest Paths When Data is Scarce

Jan 07, 2026

Abstract:Digital twins and other simulators are increasingly used to support routing decisions in large-scale networks. However, simulator outputs often exhibit systematic bias, while ground-truth measurements are costly and scarce. We study a stochastic shortest-path problem in which a planner has access to abundant synthetic samples, limited real-world observations, and an edge-similarity structure capturing expected behavioral similarity across links. We model the simulator-to-reality discrepancy as an unknown, edge-specific bias that varies smoothly over the similarity graph, and estimate it using Laplacian-regularized least squares. This approach yields calibrated edge cost estimates even in data-scarce regimes. We establish finite-sample error bounds, translate estimation error into path-level suboptimality guarantees, and propose a computable, data-driven certificate that verifies near-optimality of a candidate route. For cold-start settings without initial real data, we develop a bias-aware active learning algorithm that leverages the simulator and adaptively selects edges to measure until a prescribed accuracy is met. Numerical experiments on multiple road networks and traffic graphs further demonstrate the effectiveness of our methods.
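As an illustration of the Laplacian-regularized least-squares idea mentioned in the abstract, the following is a minimal sketch under stated assumptions, not the authors' implementation. It assumes the bias on each edge is estimated from the mean residual (real observation minus simulator prediction) on measured edges, and that unmeasured edges borrow information through a similarity matrix `W`. The names `estimate_bias`, `W`, `resid_mean`, `counts`, and `lam` are hypothetical.

```python
import numpy as np

def estimate_bias(W, resid_mean, counts, lam=1.0):
    """Laplacian-regularized least squares for an edge-wise bias vector b.

    Minimizes  sum_e counts[e] * (resid_mean[e] - b[e])**2 + lam * b^T L b,
    where L is the graph Laplacian of the (assumed) edge-similarity matrix W.
    Setting the gradient to zero gives (N + lam * L) b = N d with
    N = diag(counts). The system is solvable when every connected component
    of the similarity graph contains at least one measured edge.
    """
    W = np.asarray(W, dtype=float)
    counts = np.asarray(counts, dtype=float)
    resid_mean = np.asarray(resid_mean, dtype=float)
    L = np.diag(W.sum(axis=1)) - W           # unnormalized graph Laplacian
    N = np.diag(counts)                      # number of real samples per edge
    rhs = counts * np.where(counts > 0, resid_mean, 0.0)
    return np.linalg.solve(N + lam * L, rhs)

# Toy example: four edges whose similarity graph is a chain 0-1-2-3.
# Only edges 0 and 3 have real measurements (5 samples each).
W_chain = np.array([[0, 1, 0, 0],
                    [1, 0, 1, 0],
                    [0, 1, 0, 1],
                    [0, 0, 1, 0]], dtype=float)
b_hat = estimate_bias(W_chain, resid_mean=[2.0, 0.0, 0.0, 4.0],
                      counts=[5, 0, 0, 5], lam=1.0)
print(b_hat)
```

In this toy case the unmeasured edges receive bias estimates interpolated between their measured neighbours, which is the smoothness behaviour the regularizer is meant to encode. A calibrated edge cost would then be the simulator estimate plus the corresponding entry of `b_hat`.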

A data-physics hybrid generative model for patient-specific post-stroke motor rehabilitation using wearable sensor data

Dec 16, 2025

Abstract:Dynamic prediction of locomotor capacity after stroke is crucial for tailoring rehabilitation, yet current assessments provide only static impairment scores and do not indicate whether patients can safely perform specific tasks such as slope walking or stair climbing. Here, we develop a data-physics hybrid generative framework that reconstructs an individual stroke survivor's neuromuscular control from a single 20 m level-ground walking trial and predicts task-conditioned locomotion across rehabilitation scenarios. The system combines wearable-sensor kinematics, a proportional-derivative physics controller, a population Healthy Motion Atlas, and goal-conditioned deep reinforcement learning with behaviour cloning and generative adversarial imitation learning to generate physically plausible, patient-specific gait simulations for slopes and stairs. In 11 stroke survivors, the personalized controllers preserved idiosyncratic gait patterns while improving joint-angle and endpoint fidelity by 4.73% and 12.10%, respectively, and reducing training time to 25.56% relative to a physics-only baseline. In a multicentre pilot involving 21 inpatients, clinicians who used our locomotion predictions to guide task selection and difficulty obtained larger gains in Fugl-Meyer lower-extremity scores over 28 days of standard rehabilitation than control clinicians (mean change 6.0 versus 3.7 points). These findings indicate that our generative, task-predictive framework can augment clinical decision-making in post-stroke gait rehabilitation and provide a template for dynamically personalized motor recovery strategies.

QiNN-QJ: A Quantum-inspired Neural Network with Quantum Jump for Multimodal Sentiment Analysis

Oct 31, 2025

Abstract:Quantum theory provides non-classical principles, such as superposition and entanglement, that inspire promising paradigms in machine learning. However, most existing quantum-inspired fusion models rely solely on unitary or unitary-like transformations to generate quantum entanglement. While theoretically expressive, such approaches often suffer from training instability and limited generalizability. In this work, we propose a Quantum-inspired Neural Network with Quantum Jump (QiNN-QJ) for multimodal entanglement modelling. Each modality is first encoded as a quantum pure state, after which a differentiable module simulating the QJ operator transforms the separable product state into the entangled representation. By jointly learning Hamiltonian and Lindblad operators, QiNN-QJ generates controllable cross-modal entanglement among modalities with dissipative dynamics, where structured stochasticity and steady-state attractor properties serve to stabilize training and constrain entanglement shaping. The resulting entangled states are projected onto trainable measurement vectors to produce predictions. In addition to achieving superior performance over the state-of-the-art models on benchmark datasets, including CMU-MOSI, CMU-MOSEI, and CH-SIMS, QiNN-QJ facilitates enhanced post-hoc interpretability through von Neumann entanglement entropy. This work establishes a principled framework for entangled multimodal fusion and paves the way for quantum-inspired approaches in modelling complex cross-modal correlations.
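The von Neumann entanglement entropy mentioned in the abstract has a standard definition for a bipartite pure state, which the sketch below computes. This is a generic illustration and not code from QiNN-QJ: it assumes a two-part state vector of dimension `dim_a * dim_b`, uses the natural logarithm, and the function name `entanglement_entropy` is hypothetical.

```python
import numpy as np

def entanglement_entropy(psi, dim_a, dim_b):
    """Von Neumann entropy S = -sum_i p_i ln p_i of the reduced state of
    subsystem A, for a pure state psi on a (dim_a x dim_b) bipartite space.

    The p_i are the squared Schmidt coefficients, obtained as squared
    singular values of psi reshaped into a dim_a x dim_b matrix.
    """
    psi = np.asarray(psi, dtype=complex)
    psi = psi / np.linalg.norm(psi)                  # normalize the state
    s = np.linalg.svd(psi.reshape(dim_a, dim_b), compute_uv=False)
    p = s ** 2
    p = p[p > 1e-12]                                 # drop zeros: 0 * ln 0 := 0
    return float(-(p * np.log(p)).sum())

# A separable product state |0>|0> and a maximally entangled Bell state.
product_state = np.kron([1.0, 0.0], [1.0, 0.0])
bell_state = np.array([1.0, 0.0, 0.0, 1.0]) / np.sqrt(2.0)

print(entanglement_entropy(product_state, 2, 2))
print(entanglement_entropy(bell_state, 2, 2))
```

A separable state gives zero entropy, while the two-qubit Bell state reaches the maximum value ln 2, so the quantity can serve as a scalar readout of how strongly two modalities are entangled in a fused representation.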

S2ST-Omni: An Efficient and Scalable Multilingual Speech-to-Speech Translation Framework via Seamlessly Speech-Text Alignment and Streaming Speech Decoder

Jun 16, 2025

Abstract:Multilingual speech-to-speech translation (S2ST) aims to directly convert spoken utterances from multiple source languages into fluent and intelligible speech in a target language. Despite recent progress, several critical challenges persist: 1) achieving high-quality and low-latency S2ST remains a significant obstacle; 2) most existing S2ST methods rely heavily on large-scale parallel speech corpora, which are difficult and resource-intensive to obtain. To tackle these challenges, we introduce S2ST-Omni, a novel, efficient, and scalable framework tailored for multilingual speech-to-speech translation. To enable high-quality S2TT while mitigating reliance on large-scale parallel speech corpora, we leverage powerful pretrained models: Whisper for robust audio understanding and Qwen 3.0 for advanced text comprehension. A lightweight speech adapter is introduced to bridge the modality gap between speech and text representations, facilitating effective utilization of pretrained multimodal knowledge. To ensure both translation accuracy and real-time responsiveness, we adopt a streaming speech decoder in the TTS stage, which generates the target speech in an autoregressive manner. Extensive experiments conducted on the CVSS benchmark demonstrate that S2ST-Omni consistently surpasses several state-of-the-art S2ST baselines in translation quality, highlighting its effectiveness and superiority.

Learning and Interpreting Gravitational-Wave Features from CNNs with a Random Forest Approach

May 26, 2025

Abstract:Convolutional neural networks (CNNs) have become widely adopted in gravitational wave (GW) detection pipelines due to their ability to automatically learn hierarchical features from raw strain data. However, the physical meaning of these learned features remains underexplored, limiting the interpretability of such models. In this work, we propose a hybrid architecture that combines a CNN-based feature extractor with a random forest (RF) classifier to improve both detection performance and interpretability. Unlike prior approaches that directly connect classifiers to CNN outputs, our method introduces four physically interpretable metrics - variance, signal-to-noise ratio (SNR), waveform overlap, and peak amplitude - computed from the final convolutional layer. These are jointly used with the CNN output in the RF classifier to enable more informed decision boundaries. Tested on long-duration strain datasets, our hybrid model outperforms a baseline CNN model, achieving a relative improvement of 21% in sensitivity at a fixed false alarm rate of 10 events per month. Notably, it also shows improved detection of low-SNR signals (SNR $\le$ 10), which are especially vulnerable to misclassification in noisy environments. Feature attribution via the RF model reveals that both CNN-extracted and handcrafted features contribute significantly to classification decisions, with learned variance and CNN outputs ranked among the most informative. These findings suggest that physically motivated post-processing of CNN feature maps can serve as a valuable tool for interpretable and efficient GW detection, bridging the gap between deep learning and domain knowledge.

ClapFM-EVC: High-Fidelity and Flexible Emotional Voice Conversion with Dual Control from Natural Language and Speech

May 20, 2025

Abstract:Despite great advances, achieving high-fidelity emotional voice conversion (EVC) with flexible and interpretable control remains challenging. This paper introduces ClapFM-EVC, a novel EVC framework capable of generating high-quality converted speech driven by natural language prompts or reference speech with adjustable emotion intensity. We first propose EVC-CLAP, an emotional contrastive language-audio pre-training model, guided by natural language prompts and categorical labels, to extract and align fine-grained emotional elements across speech and text modalities. Then, a FuEncoder with an adaptive intensity gate is presented to seamlessly fuse emotional features with Phonetic PosteriorGrams from a pre-trained ASR model. To further improve emotion expressiveness and speech naturalness, we propose a flow matching model conditioned on these captured features to reconstruct the Mel-spectrogram of the source speech. Subjective and objective evaluations validate the effectiveness of ClapFM-EVC.

Pangu Ultra MoE: How to Train Your Big MoE on Ascend NPUs

May 07, 2025

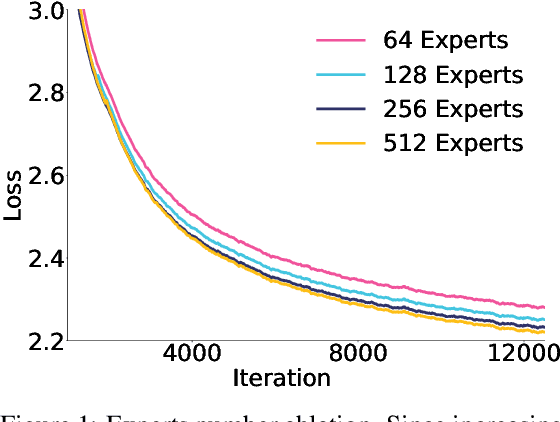

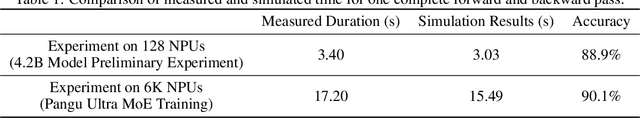

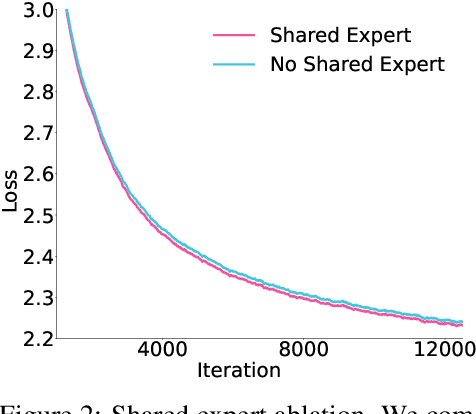

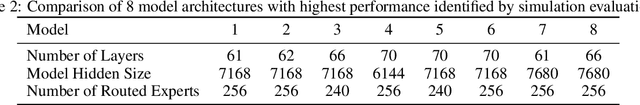

Abstract:Sparse large language models (LLMs) with Mixture of Experts (MoE) and close to a trillion parameters are dominating the realm of most capable language models. However, the massive model scale poses significant challenges for the underlying software and hardware systems. In this paper, we aim to uncover a recipe to harness such scale on Ascend NPUs. The key goals are better usage of the computing resources under the dynamic sparse model structures and materializing the expected performance gain on the actual hardware. To select model configurations suitable for Ascend NPUs without repeatedly running the expensive experiments, we leverage simulation to compare the trade-off of various model hyperparameters. This study led to Pangu Ultra MoE, a sparse LLM with 718 billion parameters, and we conducted experiments on the model to verify the simulation results. On the system side, we dig into Expert Parallelism to optimize the communication between NPU devices to reduce the synchronization overhead. We also optimize the memory efficiency within the devices to further reduce the parameter and activation management overhead. In the end, we achieve an MFU of 30.0% when training Pangu Ultra MoE, with performance comparable to that of DeepSeek R1, on 6K Ascend NPUs, and demonstrate that the Ascend system is capable of harnessing all the training stages of the state-of-the-art language models. Extensive experiments indicate that our recipe can lead to efficient training of large-scale sparse language models with MoE. We also study the behaviors of such models for future reference.

Pangu Ultra: Pushing the Limits of Dense Large Language Models on Ascend NPUs

Apr 10, 2025

Abstract:We present Pangu Ultra, a Large Language Model (LLM) with 135 billion parameters and dense Transformer modules trained on Ascend Neural Processing Units (NPUs). Although the field of LLMs has witnessed unprecedented advances in scale and capability in recent years, training such a large-scale model still involves significant optimization and system challenges. To stabilize the training process, we propose depth-scaled sandwich normalization, which effectively eliminates loss spikes during the training process of deep models. We pre-train our model on 13.2 trillion diverse and high-quality tokens and further enhance its reasoning capabilities during post-training. To perform such large-scale training efficiently, we utilize 8,192 Ascend NPUs with a series of system optimizations. Evaluations on multiple diverse benchmarks indicate that Pangu Ultra significantly advances the state-of-the-art capabilities of dense LLMs such as Llama 405B and Mistral Large 2, and even achieves competitive results with DeepSeek-R1, whose sparse model structure contains many more parameters. Our exploration demonstrates that Ascend NPUs are capable of efficiently and effectively training dense models with more than 100 billion parameters. Our model and system will be available for our commercial customers.

Parasite: A Steganography-based Backdoor Attack Framework for Diffusion Models

Apr 08, 2025

Abstract:Recently, the diffusion model has gained significant attention as one of the most successful image generation models, which can generate high-quality images by iteratively sampling noise. However, recent studies have shown that diffusion models are vulnerable to backdoor attacks, allowing attackers to enter input data containing triggers to activate the backdoor and generate their desired output. Existing backdoor attack methods have primarily focused on target noise-to-image and text-to-image tasks, with limited work on backdoor attacks in image-to-image tasks. Furthermore, traditional backdoor attacks often rely on a single, conspicuous trigger to generate a fixed target image, lacking concealability and flexibility. To address these limitations, we propose a novel backdoor attack method called "Parasite" for image-to-image tasks in diffusion models, which not only is the first to leverage steganography for trigger hiding, but also allows attackers to embed the target content as a backdoor trigger to achieve a more flexible attack. "Parasite" as a novel attack method effectively bypasses existing detection frameworks to execute backdoor attacks. In our experiments, "Parasite" achieved a 0 percent backdoor detection rate against the mainstream defense frameworks. In addition, in the ablation study, we discuss the influence of different hiding coefficients on the attack results. You can find our code at https://anonymous.4open.science/r/Parasite-1715/.

IDInit: A Universal and Stable Initialization Method for Neural Network Training

Mar 06, 2025

Abstract:Deep neural networks have achieved remarkable accomplishments in practice. The success of these networks hinges on effective initialization methods, which are vital for ensuring stable and rapid convergence during training. Recently, initialization methods that maintain identity transition within layers have shown good efficiency in network training. These techniques (e.g., Fixup) set specific weights to zero to achieve identity control. However, the settings of the remaining weights (e.g., Fixup initializes non-zero weights with random values) affect the inductive bias that is achieved only by a zero weight, which may be harmful to training. Addressing this concern, we introduce fully identical initialization (IDInit), a novel method that preserves identity in both the main and sub-stem layers of residual networks. IDInit employs a padded identity-like matrix to overcome rank constraints in non-square weight matrices. Furthermore, we show the convergence problem of an identity matrix can be solved by stochastic gradient descent. Additionally, we enhance the universality of IDInit by processing higher-order weights and addressing dead neuron problems. IDInit is a straightforward yet effective initialization method, with improved convergence, stability, and performance across various settings, including large-scale datasets and deep models.
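The abstract does not spell out how the padded identity-like matrix is built, so the sketch below shows only one plausible reading, labeled as an assumption: the identity pattern is repeated cyclically to fill a non-square weight matrix. The function name `padded_identity` and the cyclic tiling rule are hypothetical and may differ from the construction used in IDInit.

```python
import numpy as np

def padded_identity(out_dim, in_dim):
    """Identity-like (out_dim x in_dim) matrix built by cyclic tiling.

    Assumption for illustration: entry (i, j) is 1 when i and j agree
    modulo m = min(out_dim, in_dim), and 0 otherwise. For a square shape
    this reduces to the ordinary identity matrix.
    """
    m = min(out_dim, in_dim)
    rows = np.arange(out_dim)[:, None]
    cols = np.arange(in_dim)[None, :]
    return ((rows % m) == (cols % m)).astype(float)

# A wide-to-narrow and a narrow-to-wide example.
print(padded_identity(2, 4))   # two identity blocks side by side
print(padded_identity(4, 2))   # two identity blocks stacked
```

Compared with a zero-padded `np.eye(4, 2)`, whose last two rows are all zero, the tiled version gives every output unit a non-zero incoming weight, which is one way an identity-style initialization can avoid starting with inactive units.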
