Yintao Wei

BEV-GS: Feed-forward Gaussian Splatting in Bird's-Eye-View for Road Reconstruction

Apr 16, 2025

Abstract:The road surface is the sole contact medium for wheels or robot feet, so reconstructing it is crucial for unmanned vehicles and mobile robots. Recent studies on Neural Radiance Fields (NeRF) and Gaussian Splatting (GS) have achieved remarkable results in scene reconstruction. However, they typically rely on multi-view image inputs and require prolonged optimization times. In this paper, we propose BEV-GS, a real-time single-frame road surface reconstruction method based on feed-forward Gaussian splatting. BEV-GS consists of a prediction module and a rendering module. The prediction module introduces separate geometry and texture networks following the Bird's-Eye-View paradigm. Geometric and texture parameters are directly estimated from a single frame, avoiding per-scene optimization. In the rendering module, we utilize grid Gaussian for road surface representation and novel view synthesis, which better aligns with road surface characteristics. Our method achieves state-of-the-art performance on the real-world dataset RSRD. The road elevation error is reduced to 1.73 cm, and the PSNR of novel view synthesis reaches 28.36 dB. Prediction and rendering run at 26 FPS and 2061 FPS, respectively, enabling high-accuracy and real-time applications. The code will be available at: https://github.com/cat-wwh/BEV-GS
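For readers unfamiliar with the PSNR figure quoted in this abstract, the sketch below shows the standard definition of peak signal-to-noise ratio between a reference image and a rendered image. It is not taken from the BEV-GS code: the function name `psnr`, the flat-list pixel representation, and the `max_value` default of 1.0 are assumptions made for illustration.

```python
import math


def psnr(reference, rendered, max_value=1.0):
    """Peak signal-to-noise ratio in dB (standard definition).

    `reference` and `rendered` are flat sequences of pixel intensities
    in [0, max_value]; this layout is an illustrative assumption.
    """
    if len(reference) != len(rendered) or len(reference) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    # Mean squared error over all pixels.
    mse = sum((a - b) ** 2 for a, b in zip(reference, rendered)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


# Toy usage: two pixels, each off by 0.1, give an MSE of 0.01.
value = psnr([0.0, 1.0], [0.1, 0.9])
print(f"{value:.2f} dB")
```

Higher values mean the rendered view is closer to the reference, and every additional 10 dB corresponds to a tenfold reduction in mean squared error.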

RoadBEV: Road Surface Reconstruction in Bird's Eye View

Apr 09, 2024

Abstract:Road surface conditions, especially geometry profiles, strongly affect the driving performance of autonomous vehicles. Vision-based online road reconstruction is a promising way to capture road information in advance. Existing solutions such as monocular depth estimation and stereo matching deliver only modest performance. The recent technique of Bird's-Eye-View (BEV) perception offers great potential for more reliable and accurate reconstruction. This paper proposes two simple yet effective models for road elevation reconstruction in BEV, named RoadBEV-mono and RoadBEV-stereo, which estimate road elevation from monocular and stereo images, respectively. The former directly fits elevation values based on voxel features queried from the image view, while the latter efficiently recognizes road elevation patterns based on a BEV volume representing the discrepancy between left and right voxel features. Insightful analyses reveal their consistency with, and differences from, the perspective view. Experiments on a real-world dataset verify the models' effectiveness and superiority. The elevation errors of RoadBEV-mono and RoadBEV-stereo are 1.83 cm and 0.56 cm, respectively. With monocular images, estimation performance improves by 50% in BEV. Our models are promising for practical applications, providing valuable references for vision-based BEV perception in autonomous driving. The code is released at https://github.com/ztsrxh/RoadBEV.

Depth-aware Volume Attention for Texture-less Stereo Matching

Feb 26, 2024

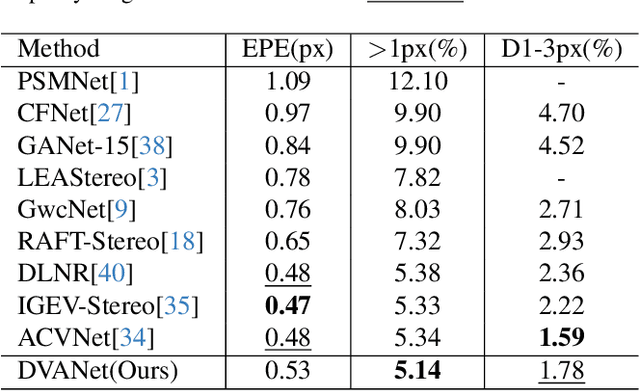

Abstract:Stereo matching plays a crucial role in 3D perception and scenario understanding. Despite the proliferation of promising methods, addressing texture-less and texture-repetitive conditions remains challenging due to the insufficient availability of rich geometric and semantic information. In this paper, we propose a lightweight volume refinement scheme to tackle the texture deterioration in practical outdoor scenarios. Specifically, we introduce a depth volume supervised by the ground-truth depth map, capturing the relative hierarchy of image texture. Subsequently, the disparity discrepancy volume undergoes hierarchical filtering through the incorporation of depth-aware hierarchy attention and target-aware disparity attention modules. Local fine structure and context are emphasized to mitigate ambiguity and redundancy during volume aggregation. Furthermore, we propose a more rigorous evaluation metric that considers depth-wise relative error, providing comprehensive evaluations for universal stereo matching and depth estimation models. We extensively validate the superiority of our proposed methods on public datasets. Results demonstrate that our model achieves state-of-the-art performance, particularly excelling in scenarios with texture-less images. The code is available at https://github.com/ztsrxh/DVANet.

RSRD: A Road Surface Reconstruction Dataset and Benchmark for Safe and Comfortable Autonomous Driving

Oct 03, 2023

Abstract:This paper addresses the growing demands for safety and comfort in intelligent robot systems, particularly autonomous vehicles, where road conditions play a pivotal role in overall driving performance. For example, reconstructing road surfaces helps to enhance the analysis and prediction of vehicle responses for motion planning and control systems. We introduce the Road Surface Reconstruction Dataset (RSRD), a real-world, high-resolution, and high-precision dataset collected with a specialized platform in diverse driving conditions. It covers common road types containing approximately 16,000 pairs of stereo images, original point clouds, and ground-truth depth/disparity maps, with accurate post-processing pipelines to ensure its quality. Based on RSRD, we further build a comprehensive benchmark for recovering road profiles through depth estimation and stereo matching. Preliminary evaluations with various state-of-the-art methods reveal the effectiveness of our dataset and the challenge of the task, underscoring substantial opportunities of RSRD as a valuable resource for advancing techniques, e.g., multi-view stereo towards safe autonomous driving. The dataset and demo videos are available at https://thu-rsxd.com/rsrd/
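The abstract mentions paired ground-truth depth and disparity maps. For a rectified stereo pair the two are linked by the standard pinhole relation depth = focal length × baseline / disparity. The sketch below illustrates that relation only; the function name and the camera values in the usage line are hypothetical and are not RSRD calibration parameters.

```python
def disparity_to_depth(disparity_px, focal_px, baseline_m):
    """Convert stereo disparity (pixels) to depth (metres).

    Assumes a rectified stereo pair with focal length `focal_px` in
    pixels and baseline `baseline_m` in metres. All parameter values
    used with this function here are illustrative assumptions.
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a finite depth")
    return focal_px * baseline_m / disparity_px


# Hypothetical camera: 1000 px focal length, 0.1 m baseline.
depth = disparity_to_depth(50.0, focal_px=1000.0, baseline_m=0.1)
print(f"{depth:.3f} m")
```

Because depth is inversely proportional to disparity, a fixed disparity error translates into a larger depth error for distant points than for near ones.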
