Zenglin Shi

Language as Prior, Vision as Calibration: Metric Scale Recovery for Monocular Depth Estimation

Jan 07, 2026

Abstract:Relative-depth foundation models transfer well, yet monocular metric depth remains ill-posed due to unidentifiable global scale and heightened domain-shift sensitivity. Under a frozen-backbone calibration setting, we recover metric depth via an image-specific affine transform in inverse depth and train only lightweight calibration heads while keeping the relative-depth backbone and the CLIP text encoder fixed. Since captions provide coarse but noisy scale cues that vary with phrasing and missing objects, we use language to predict an uncertainty-aware envelope that bounds feasible calibration parameters in an unconstrained space, rather than committing to a text-only point estimate. We then use pooled multi-scale frozen visual features to select an image-specific calibration within this envelope. During training, a closed-form least-squares oracle in inverse depth provides per-image supervision for learning the envelope and the selected calibration. Experiments on NYUv2 and KITTI show improved in-domain accuracy, while zero-shot transfer to SUN-RGBD and DDAD demonstrates improved robustness over strong language-only baselines.
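The closed-form least-squares oracle mentioned in this abstract can be illustrated with a minimal sketch. It assumes the oracle is an ordinary least-squares fit of a scale and a shift that map relative inverse depth onto ground-truth inverse depth over valid pixels; the function names, the validity rule (`d > 0`), and the clamping constant `eps` are hypothetical choices for illustration, not details taken from the paper.

```python
from typing import List, Sequence, Tuple


def fit_inverse_depth_affine(
    rel_inv_depth: Sequence[float],
    metric_depth: Sequence[float],
) -> Tuple[float, float]:
    """Return (scale, shift) minimizing sum((scale * r + shift - 1/d)^2)."""
    # Assumption: a pixel is valid when its ground-truth depth is positive.
    pairs = [(r, 1.0 / d) for r, d in zip(rel_inv_depth, metric_depth) if d > 0]
    if len(pairs) < 2:
        raise ValueError("need at least two valid pixels")
    n = len(pairs)
    mean_r = sum(r for r, _ in pairs) / n
    mean_y = sum(y for _, y in pairs) / n
    var_r = sum((r - mean_r) ** 2 for r, _ in pairs)
    if var_r == 0.0:
        raise ValueError("constant relative inverse depth: scale is unidentifiable")
    cov_ry = sum((r - mean_r) * (y - mean_y) for r, y in pairs)
    # Standard closed-form solution of one-dimensional linear regression.
    scale = cov_ry / var_r
    shift = mean_y - scale * mean_r
    return scale, shift


def apply_calibration(
    rel_inv_depth: Sequence[float],
    scale: float,
    shift: float,
    eps: float = 1e-6,
) -> List[float]:
    """Convert relative inverse depth to metric depth with the fitted transform."""
    # Clamp the calibrated inverse depth so the reciprocal stays finite.
    return [1.0 / max(scale * r + shift, eps) for r in rel_inv_depth]
```

Under this reading, the fitted pair would serve as the per-image training target, while at test time the calibration heads would have to predict it without access to ground-truth depth.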

LoRA in LoRA: Towards Parameter-Efficient Architecture Expansion for Continual Visual Instruction Tuning

Aug 08, 2025

Abstract:Continual Visual Instruction Tuning (CVIT) enables Multimodal Large Language Models (MLLMs) to incrementally learn new tasks over time. However, this process is challenged by catastrophic forgetting, where performance on previously learned tasks deteriorates as the model adapts to new ones. A common approach to mitigate forgetting is architecture expansion, which introduces task-specific modules to prevent interference. Yet, existing methods often expand entire layers for each task, leading to significant parameter overhead and poor scalability. To overcome these issues, we introduce LoRA in LoRA (LiLoRA), a highly efficient architecture expansion method tailored for CVIT in MLLMs. LiLoRA shares the LoRA matrix A across tasks to reduce redundancy, applies an additional low-rank decomposition to matrix B to minimize task-specific parameters, and incorporates a cosine-regularized stability loss to preserve consistency in shared representations over time. Extensive experiments on a diverse CVIT benchmark show that LiLoRA consistently achieves superior performance in sequential task learning while significantly improving parameter efficiency compared to existing approaches.
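To give a rough sense of why sharing A and factorizing B saves parameters, the sketch below counts trainable weights for a single adapted layer. It assumes the usual LoRA shapes (A is `rank x d_in`, B is `d_out x rank`) and, purely for illustration, that each task's B is stored as a product of two task-specific factors of inner rank `inner_rank`. The abstract does not specify how B is decomposed, so that factorization and all names here are hypothetical.

```python
def per_task_lora_params(d_in: int, d_out: int, rank: int, num_tasks: int) -> int:
    """Parameters when every task gets its own full LoRA pair (A_t, B_t)."""
    return num_tasks * rank * (d_in + d_out)


def shared_a_factored_b_params(
    d_in: int, d_out: int, rank: int, inner_rank: int, num_tasks: int
) -> int:
    """Parameters with one shared A and a low-rank factorized B per task.

    Assumed layout (not from the paper): B_t = P_t @ Q_t with
    P_t of shape (d_out, inner_rank) and Q_t of shape (inner_rank, rank).
    """
    shared = rank * d_in  # A is stored once for all tasks
    per_task = inner_rank * (d_out + rank)  # P_t plus Q_t
    return shared + num_tasks * per_task


if __name__ == "__main__":
    baseline = per_task_lora_params(4096, 4096, 8, 8)
    compact = shared_a_factored_b_params(4096, 4096, 8, 2, 8)
    print(baseline, compact)
```

The layer width, ranks, and task count in the usage lines are arbitrary illustrative values, not settings reported by the authors.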

MobileIE: An Extremely Lightweight and Effective ConvNet for Real-Time Image Enhancement on Mobile Devices

Jul 02, 2025

Abstract:Recent advancements in deep neural networks have driven significant progress in image enhancement (IE). However, deploying deep learning models on resource-constrained platforms, such as mobile devices, remains challenging due to high computation and memory demands. To address these challenges and facilitate real-time IE on mobile, we introduce an extremely lightweight Convolutional Neural Network (CNN) framework with around 4K parameters. Our approach integrates reparameterization with an Incremental Weight Optimization strategy to ensure efficiency. Additionally, we enhance performance with a Feature Self-Transform module and a Hierarchical Dual-Path Attention mechanism, optimized with a Local Variance-Weighted loss. With this efficient framework, we are the first to achieve real-time IE inference at up to 1,100 frames per second (FPS) while delivering competitive image quality, achieving the best trade-off between speed and performance across multiple IE tasks. The code will be available at https://github.com/AVC2-UESTC/MobileIE.git.

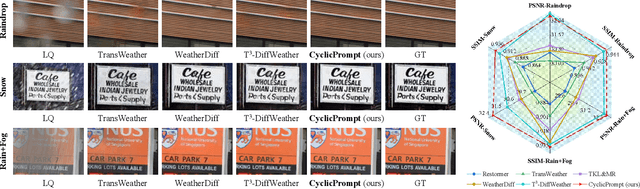

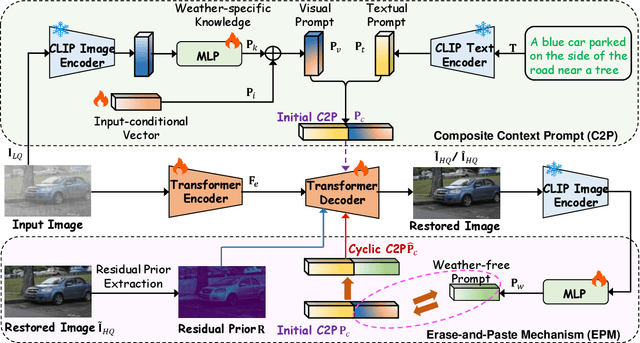

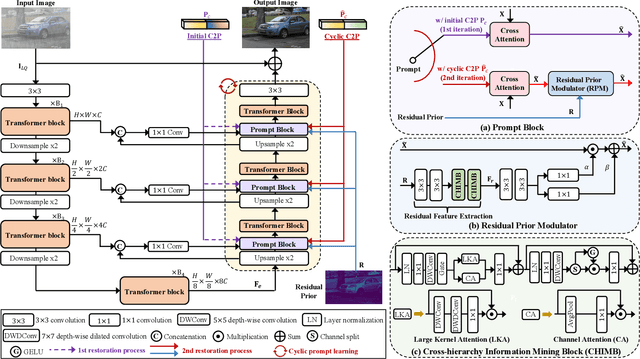

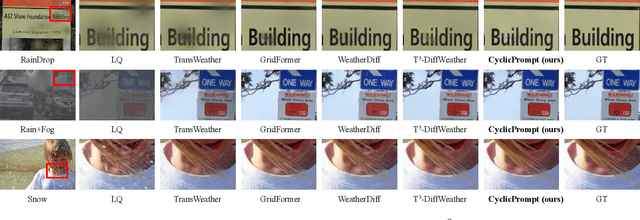

Prompt to Restore, Restore to Prompt: Cyclic Prompting for Universal Adverse Weather Removal

Mar 12, 2025

Abstract:Universal adverse weather removal (UAWR) seeks to address various weather degradations within a unified framework. Recent methods are inspired by prompt learning using pre-trained vision-language models (e.g., CLIP), leveraging degradation-aware prompts to facilitate weather-free image restoration, yielding significant improvements. In this work, we propose CyclicPrompt, an innovative cyclic prompt approach designed to enhance the effectiveness, adaptability, and generalizability of UAWR. CyclicPrompt comprises two key components: 1) a composite context prompt that integrates weather-related information and context-aware representations into the network to guide restoration. This prompt differs from previous methods by marrying learnable input-conditional vectors with weather-specific knowledge, thereby improving adaptability across various degradations. 2) An erase-and-paste mechanism that, after the initial guided restoration, substitutes weather-specific knowledge with constrained restoration priors, inducing high-quality weather-free concepts into the composite prompt to further fine-tune the restoration process. We can thereby form a cyclic "Prompt-Restore-Prompt" pipeline that adeptly harnesses weather-specific knowledge, textual contexts, and reliable textures. Extensive experiments on synthetic and real-world datasets validate the superior performance of CyclicPrompt. The code is available at: https://github.com/RongxinL/CyclicPrompt.

Precise Localization of Memories: A Fine-grained Neuron-level Knowledge Editing Technique for LLMs

Mar 03, 2025

Abstract:Knowledge editing aims to update outdated information in Large Language Models (LLMs). A representative line of study is locate-then-edit methods, which typically employ causal tracing to identify the modules responsible for recalling factual knowledge about entities. However, we find these methods are often sensitive only to changes in the subject entity, leaving them less effective at adapting to changes in relations. This limitation results in poor editing locality, which can lead to the persistence of irrelevant or inaccurate facts, ultimately compromising the reliability of LLMs. We believe this issue arises from the insufficient precision of knowledge localization. To address this, we propose a Fine-grained Neuron-level Knowledge Editing (FiNE) method that enhances editing locality without affecting overall success rates. By precisely identifying and modifying specific neurons within feed-forward networks, FiNE significantly improves knowledge localization and editing. Quantitative experiments demonstrate that FiNE efficiently achieves better overall performance compared to existing techniques, providing new insights into the localization and modification of knowledge within LLMs.

Separable Mixture of Low-Rank Adaptation for Continual Visual Instruction Tuning

Nov 21, 2024

Abstract:Visual instruction tuning (VIT) enables multimodal large language models (MLLMs) to effectively handle a wide range of vision tasks by framing them as language-based instructions. Building on this, continual visual instruction tuning (CVIT) extends the capability of MLLMs to incrementally learn new tasks, accommodating evolving functionalities. While prior work has advanced CVIT through the development of new benchmarks and approaches to mitigate catastrophic forgetting, these efforts largely follow traditional continual learning paradigms, neglecting the unique challenges specific to CVIT. We identify a dual form of catastrophic forgetting in CVIT, where MLLMs not only forget previously learned visual understanding but also experience a decline in instruction following abilities as they acquire new tasks. To address this, we introduce the Separable Mixture of Low-Rank Adaptation (SMoLoRA) framework, which employs separable routing through two distinct modules - one for visual understanding and another for instruction following. This dual-routing design enables specialized adaptation in both domains, preventing forgetting while improving performance. Furthermore, we propose a novel CVIT benchmark that goes beyond existing benchmarks by additionally evaluating a model's ability to generalize to unseen tasks and handle diverse instructions across various tasks. Extensive experiments demonstrate that SMoLoRA outperforms existing methods in mitigating dual forgetting, improving generalization to unseen tasks, and ensuring robustness in following diverse instructions.

Visual-Oriented Fine-Grained Knowledge Editing for MultiModal Large Language Models

Nov 19, 2024

Abstract:Knowledge editing aims to efficiently and cost-effectively correct inaccuracies and update outdated information. Recently, there has been growing interest in extending knowledge editing from Large Language Models (LLMs) to Multimodal Large Language Models (MLLMs), which integrate both textual and visual information, introducing additional editing complexities. Existing multimodal knowledge editing works primarily focus on text-oriented, coarse-grained scenarios, failing to address the unique challenges posed by multimodal contexts. In this paper, we propose a visual-oriented, fine-grained multimodal knowledge editing task that targets precise editing in images with multiple interacting entities. We introduce the Fine-Grained Visual Knowledge Editing (FGVEdit) benchmark to evaluate this task. Moreover, we propose a Multimodal Scope Classifier-based Knowledge Editor (MSCKE) framework. MSCKE leverages a multimodal scope classifier that integrates both visual and textual information to accurately identify and update knowledge related to specific entities within images. This approach ensures precise editing while preserving irrelevant information, overcoming the limitations of traditional text-only editing methods. Extensive experiments on the FGVEdit benchmark demonstrate that MSCKE outperforms existing methods, showcasing its effectiveness in solving the complex challenges of multimodal knowledge editing.

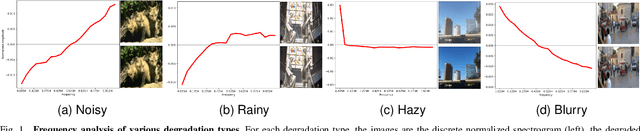

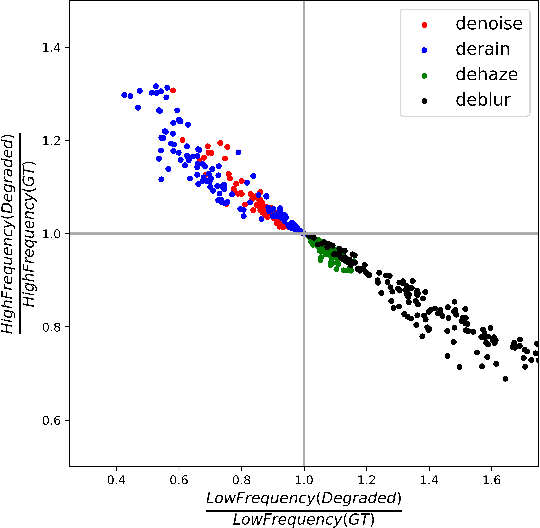

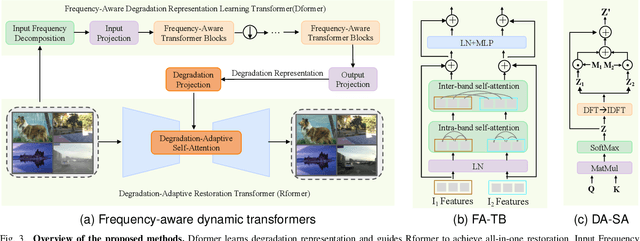

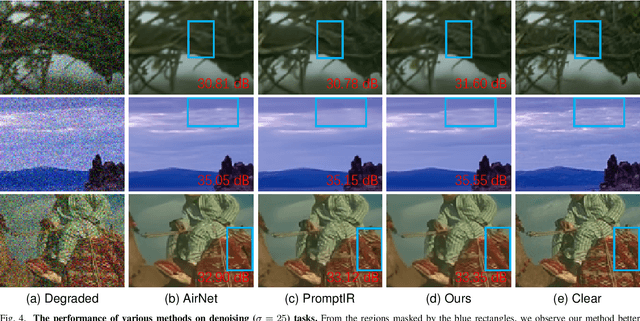

Learning Frequency-Aware Dynamic Transformers for All-In-One Image Restoration

Jun 30, 2024

Abstract:This work aims to tackle the all-in-one image restoration task, which seeks to handle multiple types of degradation with a single model. The primary challenge is to extract degradation representations from the input degraded images and use them to guide the model's adaptation to specific degradation types. Recognizing that various degradations affect image content differently across frequency bands, we propose a new all-in-one image restoration approach from a frequency perspective, leveraging advanced vision transformers. Our method consists of two main components: a frequency-aware Degradation prior learning transformer (Dformer) and a degradation-adaptive Restoration transformer (Rformer). The Dformer captures the essential characteristics of various degradations by decomposing inputs into different frequency components. By understanding how degradations affect these frequency components, the Dformer learns robust priors that effectively guide the restoration process. The Rformer then employs a degradation-adaptive self-attention module to selectively focus on the most affected frequency components, guided by the learned degradation representations. Extensive experimental results demonstrate that our approach outperforms the existing methods on four representative restoration tasks, including denoising, deraining, dehazing and deblurring. Additionally, our method offers benefits for handling spatially variant degradations and unseen degradation levels.

Densely Distilling Cumulative Knowledge for Continual Learning

May 16, 2024

Abstract:Continual learning, involving sequential training on diverse tasks, often faces catastrophic forgetting. While knowledge distillation-based approaches exhibit notable success in preventing forgetting, we pinpoint a limitation in their ability to distill the cumulative knowledge of all the previous tasks. To remedy this, we propose Dense Knowledge Distillation (DKD). DKD uses a task pool to track the model's capabilities. It partitions the output logits of the model into dense groups, each corresponding to a task in the task pool. It then distills all tasks' knowledge using all groups. Because using all the groups can be computationally expensive, we also suggest random group selection in each optimization step. Moreover, we propose an adaptive weighting scheme, which balances the learning of new classes and the retention of old classes, based on the count and similarity of the classes. Our DKD outperforms recent state-of-the-art baselines across diverse benchmarks and scenarios. Empirical analysis underscores DKD's ability to enhance model stability, promote flatter minima for improved generalization, and remain robust across various memory budgets and task orders. Moreover, it seamlessly integrates with other CL methods to boost performance and proves versatile in offline scenarios like model compression.

DualMatch: Robust Semi-Supervised Learning with Dual-Level Interaction

Oct 25, 2023

Abstract:Semi-supervised learning provides an expressive framework for exploiting unlabeled data when labels are insufficient. Previous semi-supervised learning methods typically match model predictions of different data-augmented views in a single-level interaction manner, which relies heavily on the quality of pseudo-labels and makes semi-supervised learning less robust. In this paper, we propose a novel SSL method called DualMatch, in which the class prediction jointly invokes feature embedding in a dual-level interaction manner. DualMatch requires consistent regularization under data augmentation: specifically, 1) ensuring that different augmented views are regulated with consistent class predictions, and 2) ensuring that different data of one class are regulated with similar feature embeddings. Extensive experiments demonstrate the effectiveness of DualMatch. In the standard SSL setting, the proposal achieves a 9% error reduction compared with SOTA methods; even in a more challenging class-imbalanced setting, it still achieves a 6% error reduction. Code is available at https://github.com/CWangAI/DualMatch
