Shiqi Chen

Snapshot 3D image projection using a diffractive decoder

Dec 23, 2025

Abstract:3D image display is essential for next-generation volumetric imaging; however, dense depth multiplexing for 3D image projection remains challenging because diffraction-induced cross-talk rapidly increases as the axial image planes get closer. Here, we introduce a 3D display system comprising a digital encoder and a diffractive optical decoder, which simultaneously projects different images onto multiple target axial planes with high axial resolution. By leveraging multi-layer diffractive wavefront decoding and deep learning-based end-to-end optimization, the system achieves high-fidelity depth-resolved 3D image projection in a snapshot, enabling axial plane separations on the order of a wavelength. The digital encoder leverages a Fourier encoder network to capture multi-scale spatial and frequency-domain features from input images, integrates axial position encoding, and generates a unified phase representation that simultaneously encodes all images to be axially projected in a single snapshot through a jointly-optimized diffractive decoder. We characterized the impact of diffractive decoder depth, output diffraction efficiency, spatial light modulator resolution, and axial encoding density, revealing trade-offs that govern axial separation and 3D image projection quality. We further demonstrated the capability to display volumetric images containing 28 axial slices, as well as the ability to dynamically reconfigure the axial locations of the image planes, performed on demand. Finally, we experimentally validated the presented approach, demonstrating close agreement between the measured results and the target images. These results establish the diffractive 3D display system as a compact and scalable framework for depth-resolved snapshot 3D image projection, with potential applications in holographic displays, AR/VR interfaces, and volumetric optical computing.

SynLogic: Synthesizing Verifiable Reasoning Data at Scale for Learning Logical Reasoning and Beyond

May 26, 2025

Abstract:Recent advances such as OpenAI-o1 and DeepSeek R1 have demonstrated the potential of Reinforcement Learning (RL) to enhance reasoning abilities in Large Language Models (LLMs). While open-source replication efforts have primarily focused on mathematical and coding domains, methods and resources for developing general reasoning capabilities remain underexplored. This gap is partly due to the challenge of collecting diverse and verifiable reasoning data suitable for RL. We hypothesize that logical reasoning is critical for developing general reasoning capabilities, as logic forms a fundamental building block of reasoning. In this work, we present SynLogic, a data synthesis framework and dataset that generates diverse logical reasoning data at scale, encompassing 35 diverse logical reasoning tasks. The SynLogic approach enables controlled synthesis of data with adjustable difficulty and quantity. Importantly, all examples can be verified by simple rules, making them ideally suited for RL with verifiable rewards. In our experiments, we validate the effectiveness of RL training on the SynLogic dataset based on 7B and 32B models. SynLogic leads to state-of-the-art logical reasoning performance among open-source datasets, surpassing DeepSeek-R1-Distill-Qwen-32B by 6 points on BBEH. Furthermore, mixing SynLogic data with mathematical and coding tasks improves the training efficiency of these domains and significantly enhances reasoning generalization. Notably, our mixed training model outperforms DeepSeek-R1-Zero-Qwen-32B across multiple benchmarks. These findings position SynLogic as a valuable resource for advancing the broader reasoning capabilities of LLMs. We open-source both the data synthesis pipeline and the SynLogic dataset at https://github.com/MiniMax-AI/SynLogic.

Bring Reason to Vision: Understanding Perception and Reasoning through Model Merging

May 08, 2025

Abstract:Vision-Language Models (VLMs) combine visual perception with the general capabilities, such as reasoning, of Large Language Models (LLMs). However, the mechanisms by which these two abilities can be combined and contribute remain poorly understood. In this work, we explore composing perception and reasoning through model merging, which connects the parameters of different models. Unlike previous works that often focus on merging models of the same kind, we propose merging models across modalities, enabling the incorporation of the reasoning capabilities of LLMs into VLMs. Through extensive experiments, we demonstrate that model merging offers a successful pathway to transfer reasoning abilities from LLMs to VLMs in a training-free manner. Moreover, we utilize the merged models to understand the internal mechanism of perception and reasoning and how merging affects it. We find that perception capabilities are predominantly encoded in the early layers of the model, whereas reasoning is largely facilitated by the middle-to-late layers. After merging, we observe that all layers begin to contribute to reasoning, whereas the distribution of perception abilities across layers remains largely unchanged. These observations shed light on the potential of model merging as a tool for multimodal integration and interpretation.
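
As a rough illustration of training-free merging across models, the sketch below linearly interpolates parameters that two models share by name. The abstract does not state the merging rule, so linear interpolation, the `merge_parameters` helper, the `weight` parameter, and the toy parameter names are all assumptions made for illustration only.

```python
def merge_parameters(vlm_params, llm_params, weight=0.5):
    """Interpolate parameters shared by name; keep VLM-only parameters as-is.

    Hypothetical helper: parameters are plain lists of floats keyed by name,
    and `weight` is the share taken from the LLM (an assumed rule).
    """
    merged = {}
    for name, vlm_values in vlm_params.items():
        llm_values = llm_params.get(name)
        if llm_values is None or len(llm_values) != len(vlm_values):
            # No counterpart in the LLM (e.g. a vision-side module): copy unchanged.
            merged[name] = list(vlm_values)
            continue
        merged[name] = [
            (1 - weight) * v + weight * l
            for v, l in zip(vlm_values, llm_values)
        ]
    return merged


# Toy example with made-up parameter names and values.
vlm = {"layer.0": [1.0, 2.0], "vision.proj": [5.0]}
llm = {"layer.0": [3.0, 4.0]}
merged = merge_parameters(vlm, llm, weight=0.5)
```

No gradient step is involved, which is what "training-free" amounts to in this sketch; a real setup would operate on tensors in model checkpoints.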

SkyLadder: Better and Faster Pretraining via Context Window Scheduling

Mar 19, 2025

Abstract:Recent advancements in LLM pretraining have featured ever-expanding context windows to process longer sequences. However, our pilot study reveals that models pretrained with shorter context windows consistently outperform their long-context counterparts under a fixed token budget. This finding motivates us to explore an optimal context window scheduling strategy to better balance long-context capability with pretraining efficiency. To this end, we propose SkyLadder, a simple yet effective approach that implements a short-to-long context window transition. SkyLadder preserves strong standard benchmark performance, while matching or exceeding baseline results on long context tasks. Through extensive experiments, we pre-train 1B-parameter models (up to 32K context) and 3B-parameter models (8K context) on 100B tokens, demonstrating that SkyLadder yields consistent gains of up to 3.7% on common benchmarks, while achieving up to 22% faster training speeds compared to baselines. The code is at https://github.com/sail-sg/SkyLadder.
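
A short-to-long context window transition can be pictured as a function from training step to window length. The sketch below assumes a linear ramp; the abstract does not specify the schedule shape, and the `context_window_at` helper, its parameter names, and the default lengths are hypothetical.

```python
def context_window_at(step, total_ramp_steps, start_len=32, final_len=8192):
    """Return the context window length to use at a given training step.

    Assumed linear ramp from start_len to final_len over total_ramp_steps,
    after which the window stays at final_len.
    """
    if total_ramp_steps <= 0 or step >= total_ramp_steps:
        return final_len
    frac = max(step, 0) / total_ramp_steps
    return int(start_len + frac * (final_len - start_len))


# Window length at a few points of a 1000-step ramp.
schedule = [context_window_at(s, 1000) for s in (0, 250, 500, 1000, 2000)]
```

In a training loop, the returned length would decide how sequences are packed or masked at that step, so early steps process short contexts and later steps reach the full window.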

Why Is Spatial Reasoning Hard for VLMs? An Attention Mechanism Perspective on Focus Areas

Mar 04, 2025

Abstract:Large Vision Language Models (VLMs) have long struggled with spatial reasoning tasks. Surprisingly, even simple spatial reasoning tasks, such as recognizing "under" or "behind" relationships between only two objects, pose significant challenges for current VLMs. In this work, we study the spatial reasoning challenge through the lens of mechanistic interpretability, diving into the model's internal states to examine the interactions between image and text tokens. By tracing attention distribution over the image throughout intermediate layers, we observe that successful spatial reasoning correlates strongly with the model's ability to align its attention distribution with actual object locations, particularly differing between familiar and unfamiliar spatial relationships. Motivated by these findings, we propose ADAPTVIS based on inference-time confidence scores to sharpen the attention on highly relevant regions when confident, while smoothing and broadening the attention window to consider a wider context when confidence is lower. This training-free decoding method shows significant improvement (e.g., up to a 50 absolute point improvement) on spatial reasoning benchmarks such as WhatsUp and VSR with negligible cost. We make code and data publicly available for research purposes at https://github.com/shiqichen17/AdaptVis.
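
The confidence-dependent sharpening and smoothing described above can be sketched as rescaling attention logits over image tokens before the softmax. This is a minimal illustration and not the released AdaptVis code: multiplicative scaling of logits, the `adapt_attention` helper, the threshold, and the two coefficients are assumptions.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def adapt_attention(image_logits, confidence, threshold=0.5,
                    sharpen=2.0, smooth=0.5):
    """Rescale attention logits by a confidence-dependent factor (assumed rule).

    A factor above 1 concentrates attention on the top regions; a factor
    below 1 flattens the distribution to cover a wider context.
    """
    scale = sharpen if confidence >= threshold else smooth
    return softmax([scale * x for x in image_logits])


logits = [2.0, 1.0, 0.0]            # toy attention logits over three image tokens
base = softmax(logits)
sharp = adapt_attention(logits, confidence=0.9)
broad = adapt_attention(logits, confidence=0.1)
```

Because the rescaling happens only at inference time, no model weights change, which matches the training-free framing of the abstract.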

Sailor2: Sailing in South-East Asia with Inclusive Multilingual LLMs

Feb 18, 2025

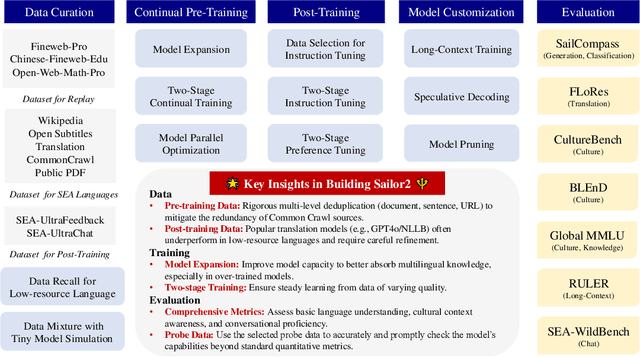

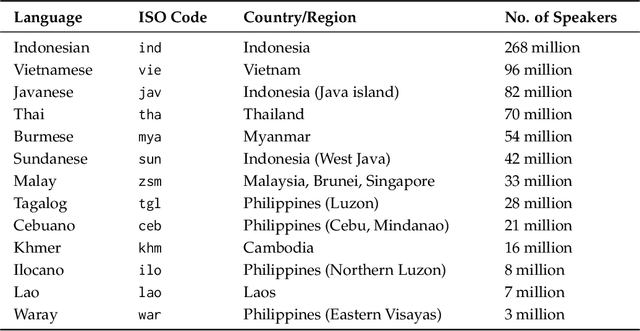

Abstract:Sailor2 is a family of cutting-edge multilingual language models for South-East Asian (SEA) languages, available in 1B, 8B, and 20B sizes to suit diverse applications. Building on Qwen2.5, Sailor2 undergoes continuous pre-training on 500B tokens (400B SEA-specific and 100B replay tokens) to support 13 SEA languages while retaining proficiency in Chinese and English. The Sailor2-20B model achieves a 50-50 win rate against GPT-4o across SEA languages. We also deliver a comprehensive cookbook on how to develop multilingual models efficiently, covering five key aspects: data curation, pre-training, post-training, model customization, and evaluation. We hope that the Sailor2 model (Apache 2.0 license) will drive language development in the SEA region, and that the Sailor2 cookbook will inspire researchers to build more inclusive LLMs for other under-served languages.

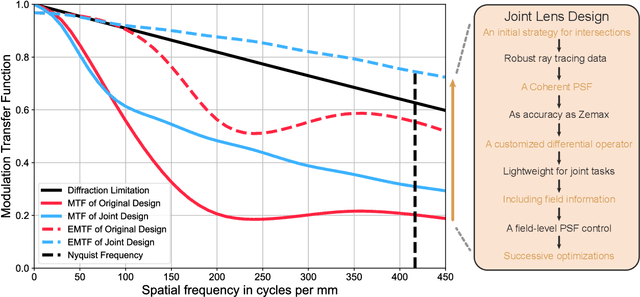

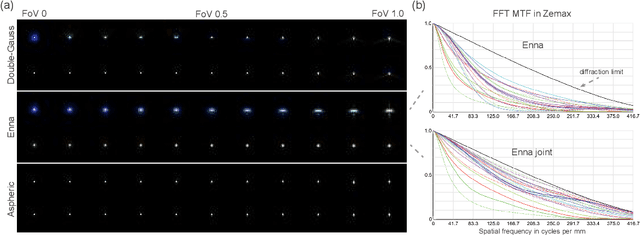

Successive optimization of optics and post-processing with differentiable coherent PSF operator and field information

Dec 19, 2024

Abstract:Recently, the joint design of optical systems and downstream algorithms has shown significant potential. However, existing ray-based methods are limited to optimizing geometric degradation, making it difficult to fully represent the optical characteristics of complex, miniaturized lenses constrained by wavefront aberration or diffraction effects. In this work, we introduce a precise optical simulation model in which every operation in the pipeline is differentiable. This model employs a novel initial value strategy to enhance the reliability of intersection calculation on highly aspheric surfaces. Moreover, it utilizes a differential operator to reduce memory consumption during coherent point spread function calculations. To efficiently address various degradations, we design a joint optimization procedure that leverages field information. Guided by a general restoration network, the proposed method not only enhances the image quality, but also successively improves the optical performance across multiple lenses that are already at a professional level. This joint optimization pipeline offers innovative insights into the practical design of sophisticated optical systems and post-processing algorithms. The source code will be made publicly available at https://github.com/Zrr-ZJU/Successive-optimization

DiffSR: Learning Radar Reflectivity Synthesis via Diffusion Model from Satellite Observations

Nov 11, 2024

Abstract:Weather radar data synthesis can fill in data for areas where ground observations are missing. Existing methods often employ reconstruction-based approaches with MSE loss to reconstruct radar data from satellite observation. However, such methods lead to over-smoothing, which hinders the generation of high-frequency details or high-value observation areas associated with convective weather. To address this issue, we propose a two-stage diffusion-based method called DiffSR. We first pre-train a reconstruction model on global-scale data to obtain radar estimation and then synthesize radar reflectivity by combining radar estimation results with satellite data as conditions for the diffusion model. Extensive experiments show that our method achieves state-of-the-art (SOTA) results, demonstrating the ability to generate high-frequency details and high-value areas.

Smart-LLaMA: Two-Stage Post-Training of Large Language Models for Smart Contract Vulnerability Detection and Explanation

Nov 09, 2024

Abstract:With the rapid development of blockchain technology, smart contract security has become a critical challenge. Existing smart contract vulnerability detection methods face three main issues: (1) Insufficient quality of datasets, lacking detailed explanations and precise vulnerability locations. (2) Limited adaptability of large language models (LLMs) to the smart contract domain, as most LLMs are pre-trained on general text data with minimal smart contract-specific data. (3) Lack of high-quality explanations for detected vulnerabilities, as existing methods focus solely on detection without clear explanations. These limitations hinder detection performance and make it harder for developers to understand and fix vulnerabilities quickly, potentially leading to severe financial losses. To address these problems, we propose Smart-LLaMA, an advanced detection method based on the LLaMA language model. First, we construct a comprehensive dataset covering four vulnerability types with labels, detailed explanations, and precise vulnerability locations. Second, we introduce Smart Contract-Specific Continual Pre-Training, using raw smart contract data to enable the LLM to learn smart contract syntax and semantics, enhancing its domain adaptability. Furthermore, we propose Explanation-Guided Fine-Tuning, which fine-tunes the LLM using paired vulnerable code and explanations, enabling both vulnerability detection and reasoned explanations. We evaluate explanation quality through LLM and human evaluation, focusing on Correctness, Completeness, and Conciseness. Experimental results show that Smart-LLaMA outperforms state-of-the-art baselines, with average improvements of 6.49% in F1 score and 3.78% in accuracy, while providing reliable explanations.

WeatherGFM: Learning A Weather Generalist Foundation Model via In-context Learning

Nov 08, 2024

Abstract:The Earth's weather system encompasses intricate weather data modalities and diverse weather understanding tasks, which hold significant value to human life. Existing data-driven models focus on single weather understanding tasks (e.g., weather forecasting). Although these models have achieved promising results, they fail to tackle various complex tasks within a single and unified model. Moreover, the paradigm that relies on limited real observations for a single scenario hinders the model's performance upper bound. In response to these limitations, we draw inspiration from the in-context learning paradigm employed in state-of-the-art visual foundation models and large language models. In this paper, we introduce the first generalist weather foundation model (WeatherGFM), designed to address a wide spectrum of weather understanding tasks in a unified manner. More specifically, we first unify the representation and definition of the diverse weather understanding tasks. Subsequently, we devise weather prompt formats to manage different weather data modalities, namely single, multiple, and temporal modalities. Finally, we adopt a visual prompting question-answering paradigm for the training of unified weather understanding tasks. Extensive experiments indicate that our WeatherGFM can effectively handle up to ten weather understanding tasks, including weather forecasting, super-resolution, weather image translation, and post-processing. Our method also showcases generalization ability on unseen tasks.
