Siyang Gao

Riemannian Flow Matching for Disentangled Graph Domain Adaptation

Jan 31, 2026

Abstract:Graph Domain Adaptation (GDA) typically uses adversarial learning to align graph embeddings in Euclidean space. However, this paradigm suffers from two critical challenges: Structural Degeneration, where hierarchical and semantic representations are entangled, and Optimization Instability, which arises from oscillatory dynamics of minimax adversarial training. To tackle these issues, we propose DisRFM, a geometry-aware GDA framework that unifies Riemannian embedding and flow-based transport. First, to overcome structural degeneration, we embed graphs into a Riemannian manifold. By adopting polar coordinates, we explicitly disentangle structure (radius) from semantics (angle). Then, we enforce topology preservation through radial Wasserstein alignment and semantic discrimination via angular clustering, thereby preventing feature entanglement and collapse. Second, we address the instability of adversarial alignment by using Riemannian flow matching. This method learns a smooth vector field to guide source features toward the target along geodesic paths, guaranteeing stable convergence. The geometric constraints further guide the flow to maintain the disentangled structure during transport. Theoretically, we prove the asymptotic stability of the flow matching and derive a tighter bound for the target risk. Extensive experiments demonstrate that DisRFM consistently outperforms state-of-the-art methods.

Best Arm Identification with LLM Judges and Limited Human

Jan 29, 2026

Abstract:We study fixed-confidence best-arm identification (BAI) where a cheap but potentially biased proxy (e.g., LLM judge) is available for every sample, while an expensive ground-truth label can only be acquired selectively when using a human for auditing. Unlike classical multi-fidelity BAI, the proxy is biased (arm- and context-dependent) and ground truth is selectively observed. Consequently, standard multi-fidelity methods can mis-select the best arm, and uniform auditing, though accurate, wastes scarce resources and is inefficient. We prove that without bias correction and propensity adjustment, mis-selection probability may not vanish (even with unlimited proxy data). We then develop an estimator for the mean of each arm that combines proxy scores with inverse-propensity-weighted residuals and form anytime-valid confidence sequences for that estimator. Based on the estimator and confidence sequence, we propose an algorithm that adaptively selects and audits arms. The algorithm concentrates audits on unreliable contexts and close arms and we prove that a plug-in Neyman rule achieves near-oracle audit efficiency. Numerical experiments confirm the theoretical guarantees and demonstrate the superior empirical performance of the proposed algorithm.
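The estimator described in this abstract combines proxy scores with inverse-propensity-weighted residuals. A minimal sketch of that general idea for a single arm might look as follows. The function name `ipw_corrected_mean`, the argument layout, and the use of `None` for unaudited samples are assumptions made for illustration; the abstract does not give the paper's exact estimator or its confidence sequences.

```python
from typing import Optional, Sequence


def ipw_corrected_mean(
    proxy: Sequence[float],
    truth: Sequence[Optional[float]],
    propensity: Sequence[float],
) -> float:
    """Estimate an arm's mean from proxy scores plus IPW-corrected residuals.

    Hypothetical helper. For sample i the contribution is
        proxy_i + (audited_i / propensity_i) * (truth_i - proxy_i),
    where truth_i is None when the sample was not audited.
    """
    if not proxy or not (len(proxy) == len(truth) == len(propensity)):
        raise ValueError("inputs must be non-empty and of equal length")

    total = 0.0
    for score, label, prob in zip(proxy, truth, propensity):
        if not 0.0 < prob <= 1.0:
            raise ValueError("audit propensities must lie in (0, 1]")
        total += score
        if label is not None:
            # Residual is up-weighted by 1 / propensity to undo selective auditing.
            total += (label - score) / prob
    return total / len(proxy)
```

With every sample audited at propensity 1 the sketch reduces to the plain mean of the ground-truth labels, and with no audits it reduces to the plain mean of the proxy scores.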

Bring Reason to Vision: Understanding Perception and Reasoning through Model Merging

May 08, 2025

Abstract:Vision-Language Models (VLMs) combine visual perception with the general capabilities, such as reasoning, of Large Language Models (LLMs). However, the mechanisms by which these two abilities can be combined and contribute remain poorly understood. In this work, we explore composing perception and reasoning through model merging that connects parameters of different models. Unlike previous works that often focus on merging models of the same kind, we propose merging models across modalities, enabling the incorporation of the reasoning capabilities of LLMs into VLMs. Through extensive experiments, we demonstrate that model merging offers a successful pathway to transfer reasoning abilities from LLMs to VLMs in a training-free manner. Moreover, we utilize the merged models to understand the internal mechanism of perception and reasoning and how merging affects it. We find that perception capabilities are predominantly encoded in the early layers of the model, whereas reasoning is largely facilitated by the middle-to-late layers. After merging, we observe that all layers begin to contribute to reasoning, whereas the distribution of perception abilities across layers remains largely unchanged. These observations shed light on the potential of model merging as a tool for multimodal integration and interpretation.
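The abstract does not state which merging formula is used. As one common, training-free possibility, the sketch below linearly interpolates parameters that two models share and leaves parameters found in only one model untouched. The name `merge_linear`, the dict-of-lists parameter format, and the weight `alpha` are assumptions for illustration only.

```python
from typing import Dict, List

Params = Dict[str, List[float]]


def merge_linear(vlm: Params, llm: Params, alpha: float) -> Params:
    """Interpolate shared parameters: (1 - alpha) * vlm + alpha * llm.

    Hypothetical helper. Parameters that exist only in the VLM (for example a
    vision encoder) or whose shapes differ are copied from the VLM unchanged.
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")

    merged: Params = {}
    for name, w_vlm in vlm.items():
        w_llm = llm.get(name)
        if w_llm is None or len(w_llm) != len(w_vlm):
            merged[name] = list(w_vlm)
        else:
            merged[name] = [
                (1.0 - alpha) * a + alpha * b for a, b in zip(w_vlm, w_llm)
            ]
    return merged
```

Setting `alpha` to 0 returns a copy of the VLM parameters, which makes it easy to check that merging is the only source of any behavioral change.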

Why Is Spatial Reasoning Hard for VLMs? An Attention Mechanism Perspective on Focus Areas

Mar 04, 2025

Abstract:Large Vision Language Models (VLMs) have long struggled with spatial reasoning tasks. Surprisingly, even simple spatial reasoning tasks, such as recognizing "under" or "behind" relationships between only two objects, pose significant challenges for current VLMs. In this work, we study the spatial reasoning challenge through the lens of mechanistic interpretability, diving into the model's internal states to examine the interactions between image and text tokens. By tracing attention distribution over the image throughout intermediate layers, we observe that successful spatial reasoning correlates strongly with the model's ability to align its attention distribution with actual object locations, particularly differing between familiar and unfamiliar spatial relationships. Motivated by these findings, we propose ADAPTVIS based on inference-time confidence scores to sharpen the attention on highly relevant regions when confident, while smoothing and broadening the attention window to consider a wider context when confidence is lower. This training-free decoding method shows significant improvement (e.g., up to a 50 absolute point improvement) on spatial reasoning benchmarks such as WhatsUp and VSR with negligible cost. We make code and data publicly available for research purposes at https://github.com/shiqichen17/AdaptVis.
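One simple way to picture confidence-dependent sharpening and smoothing of attention is to rescale attention logits before the softmax. The sketch below is only an illustration of that idea: the function `adapt_attention`, the threshold, and the two scale factors are hypothetical values, and the released AdaptVis code may differ.

```python
import math
from typing import List


def softmax(logits: List[float]) -> List[float]:
    """Numerically stable softmax over a list of logits."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    norm = sum(exps)
    return [e / norm for e in exps]


def adapt_attention(
    logits: List[float],
    confidence: float,
    threshold: float = 0.5,  # hypothetical confidence cut-off
    sharpen: float = 2.0,    # hypothetical scale > 1 concentrates attention
    smooth: float = 0.5,     # hypothetical scale < 1 spreads attention out
) -> List[float]:
    """Rescale attention logits according to the model's confidence."""
    scale = sharpen if confidence >= threshold else smooth
    return softmax([scale * x for x in logits])
```

A scale above 1 moves probability mass toward the highest-scoring image region, while a scale below 1 flattens the distribution so that a wider context is considered.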

Stochastically Constrained Best Arm Identification with Thompson Sampling

Jan 07, 2025

Abstract:We consider the problem of best arm identification in the presence of stochastic constraints, where there is a finite number of arms associated with multiple performance measures. The goal is to identify the arm that optimizes the objective measure subject to constraints on the remaining measures. We explore the popular idea of Thompson sampling (TS) as a means to solve it. To the best of our knowledge, this is the first attempt to extend TS to this problem. We design a TS-based sampling algorithm, establish its asymptotic optimality in the rate of posterior convergence, and demonstrate its superior performance using numerical examples.

Image-of-Thought Prompting for Visual Reasoning Refinement in Multimodal Large Language Models

May 22, 2024

Abstract:Recent advancements in Chain-of-Thought (CoT) and related rationale-based works have significantly improved the performance of Large Language Models (LLMs) in complex reasoning tasks. With the evolution of Multimodal Large Language Models (MLLMs), enhancing their capability to tackle complex multimodal reasoning problems is a crucial frontier. However, incorporating multimodal rationales in CoT has yet to be thoroughly investigated. We propose the Image-of-Thought (IoT) prompting method, which helps MLLMs to extract visual rationales step-by-step. Specifically, IoT prompting can automatically design critical visual information extraction operations based on the input images and questions. Each step of visual information refinement identifies specific visual rationales that support answers to complex visual reasoning questions. Beyond the textual CoT, IoT simultaneously utilizes visual and textual rationales to help MLLMs understand complex multimodal information. IoT prompting has improved zero-shot visual reasoning performance across various visual understanding tasks in different MLLMs. Moreover, the step-by-step visual feature explanations generated by IoT prompting elucidate the visual reasoning process, aiding in analyzing the cognitive processes of large multimodal models.

In-Context Sharpness as Alerts: An Inner Representation Perspective for Hallucination Mitigation

Mar 12, 2024

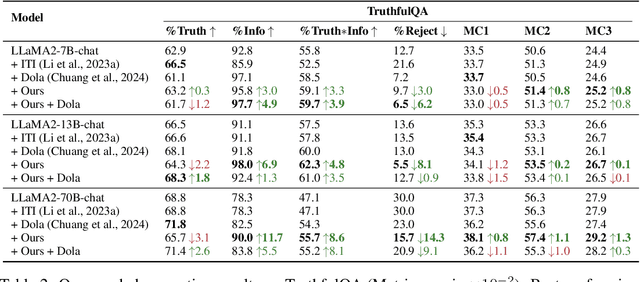

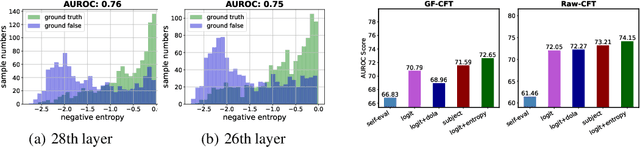

Abstract:Large language models (LLMs) frequently hallucinate and produce factual errors, yet our understanding of why they make these errors remains limited. In this study, we delve into the underlying mechanisms of LLM hallucinations from the perspective of inner representations, and discover a salient pattern associated with hallucinations: correct generations tend to have sharper context activations in the hidden states of the in-context tokens, compared to the incorrect ones. Leveraging this insight, we propose an entropy-based metric to quantify the "sharpness" among the in-context hidden states and incorporate it into the decoding process to formulate a constrained decoding approach. Experiments on various knowledge-seeking and hallucination benchmarks demonstrate our approach's consistent effectiveness, for example, achieving up to an 8.6 point improvement on TruthfulQA. We believe this study can improve our understanding of hallucinations and serve as a practical solution for hallucination mitigation.
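To make the notion of an entropy-based sharpness measure concrete, the sketch below normalizes a list of per-token activation scores with a softmax and returns the Shannon entropy of the result, so that lower entropy corresponds to sharper activations. How the paper derives the activation scores from hidden states is not stated in the abstract; the function `activation_entropy` and its input format are assumptions for illustration.

```python
import math
from typing import List


def activation_entropy(activations: List[float]) -> float:
    """Shannon entropy (in nats) of softmax-normalized activation scores.

    Hypothetical helper: each entry stands for one in-context token's
    activation score. Lower entropy means mass is concentrated on few tokens.
    """
    if not activations:
        raise ValueError("activations must be non-empty")

    peak = max(activations)
    exps = [math.exp(a - peak) for a in activations]
    norm = sum(exps)
    probs = [e / norm for e in exps]
    return -sum(p * math.log(p) for p in probs if p > 0.0)
```

Uniform activations over n tokens give the maximum entropy of log(n), whereas activations dominated by a single token give a value close to zero.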

FELM: Benchmarking Factuality Evaluation of Large Language Models

Oct 01, 2023

Abstract:Assessing factuality of text generated by large language models (LLMs) is an emerging yet crucial research area, aimed at alerting users to potential errors and guiding the development of more reliable LLMs. Nonetheless, the evaluators assessing factuality necessitate suitable evaluation themselves to gauge progress and foster advancements. This direction remains under-explored, resulting in substantial impediments to the progress of factuality evaluators. To mitigate this issue, we introduce a benchmark for Factuality Evaluation of large Language Models, referred to as FELM. In this benchmark, we collect responses generated from LLMs and annotate factuality labels in a fine-grained manner. Contrary to previous studies that primarily concentrate on the factuality of world knowledge (e.g., information from Wikipedia), FELM focuses on factuality across diverse domains, spanning from world knowledge to math and reasoning. Our annotation is based on text segments, which can help pinpoint specific factual errors. The factuality annotations are further supplemented by predefined error types and reference links that either support or contradict the statement. In our experiments, we investigate the performance of several LLM-based factuality evaluators on FELM, including both vanilla LLMs and those augmented with retrieval mechanisms and chain-of-thought processes. Our findings reveal that while retrieval aids factuality evaluation, current LLMs are far from satisfactory to faithfully detect factual errors.

Evaluating Factual Consistency of Summaries with Large Language Models

May 23, 2023

Abstract:Detecting factual errors in summaries has been an important and challenging subject in summarization research. Inspired by the emergent ability of large language models (LLMs), we explore evaluating factual consistency of summaries by directly prompting LLMs. We present a comprehensive empirical study to assess the ability of LLMs as factual consistency evaluators, which consists of (1) analyzing different LLMs such as the GPT model series and Flan-T5; (2) investigating a variety of prompting methods including vanilla prompting, chain-of-thought prompting, and a sentence-by-sentence prompting method to tackle long summaries; and (3) evaluating on diverse summaries generated by multiple summarization systems, ranging from pre-transformer methods to SOTA pretrained models. Our experiments demonstrate that prompting LLMs is able to outperform the previous best factuality systems in all settings, by up to 12.2 absolute points in terms of the binary classification accuracy on inconsistency detection.

Convergence Rate Analysis for Optimal Computing Budget Allocation Algorithms

Nov 29, 2022

Abstract:Ordinal optimization (OO) is a widely-studied technique for optimizing discrete-event dynamic systems (DEDS). It evaluates the performance of the system designs in a finite set by sampling and aims to correctly make ordinal comparison of the designs. A well-known method in OO is the optimal computing budget allocation (OCBA). It builds the optimality conditions for the number of samples allocated to each design, and the sample allocation that satisfies the optimality conditions is shown to asymptotically maximize the probability of correct selection for the best design. In this paper, we investigate two popular OCBA algorithms. With known variances for samples of each design, we characterize their convergence rates with respect to different performance measures. We first demonstrate that the two OCBA algorithms achieve the optimal convergence rate under measures of probability of correct selection and expected opportunity cost. It fills the void of convergence analysis for OCBA algorithms. Next, we extend our analysis to the measure of cumulative regret, a main measure studied in the field of machine learning. We show that with minor modification, the two OCBA algorithms can reach the optimal convergence rate under cumulative regret. It indicates the potential of broader use of algorithms designed based on the OCBA optimality conditions.
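For readers unfamiliar with OCBA, the sketch below computes allocation fractions from the classical OCBA optimality conditions as they are commonly stated for known variances, taking the design with the largest mean as best. The abstract does not name the two algorithms it analyzes, so this is an illustration of the optimality conditions only; the function `ocba_fractions` and its interface are assumptions.

```python
import math
from typing import List


def ocba_fractions(means: List[float], sigmas: List[float]) -> List[float]:
    """Fraction of the sampling budget per design under the classical OCBA rule.

    Hypothetical helper, assuming a unique best (largest-mean) design b:
      non-best i:  N_i proportional to (sigma_i / (mean_b - mean_i)) ** 2
      best b:      N_b = sigma_b * sqrt(sum over i != b of N_i**2 / sigma_i**2)
    """
    if len(means) != len(sigmas) or len(means) < 2:
        raise ValueError("need at least two designs with matching inputs")
    if any(s <= 0.0 for s in sigmas):
        raise ValueError("standard deviations must be positive")

    best = max(range(len(means)), key=lambda i: means[i])
    ratios = [0.0] * len(means)
    for i, (mu, sigma) in enumerate(zip(means, sigmas)):
        if i == best:
            continue
        gap = means[best] - mu
        if gap <= 0.0:
            raise ValueError("the best design must be unique")
        ratios[i] = (sigma / gap) ** 2

    ratios[best] = sigmas[best] * math.sqrt(
        sum((ratios[i] / sigmas[i]) ** 2 for i in range(len(means)) if i != best)
    )
    total = sum(ratios)
    return [r / total for r in ratios]
```

Designs whose means are close to the best, or whose samples are noisy, receive a larger share of the budget, which matches the intuition that they are the hardest to rule out.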
