Huajun Feng

Successive optimization of optics and post-processing with differentiable coherent PSF operator and field information

Dec 19, 2024

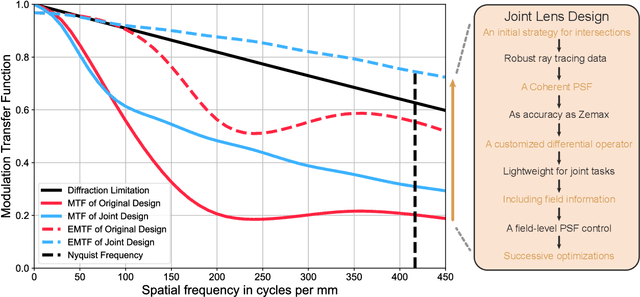

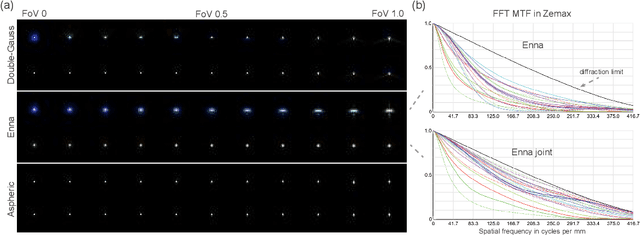

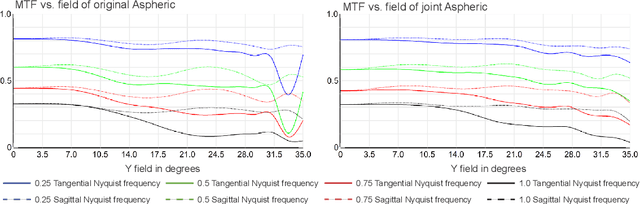

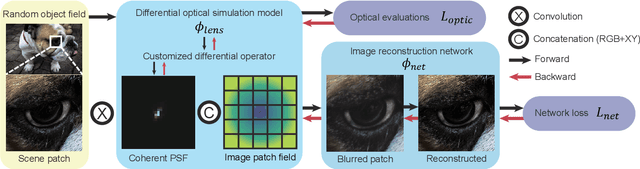

Abstract: Recently, the joint design of optical systems and downstream algorithms has shown significant potential. However, existing ray-based methods are limited to optimizing geometric degradation, making it difficult to fully represent the optical characteristics of complex, miniaturized lenses constrained by wavefront aberration or diffraction effects. In this work, we introduce a precise optical simulation model in which every operation in the pipeline is differentiable. This model employs a novel initial value strategy to enhance the reliability of intersection calculations on highly aspheric surfaces. Moreover, it utilizes a differential operator to reduce memory consumption during coherent point spread function calculations. To efficiently address various degradations, we design a joint optimization procedure that leverages field information. Guided by a general restoration network, the proposed method not only enhances the image quality but also successively improves the optical performance across multiple lenses that are already at a professional level. This joint optimization pipeline offers innovative insights into the practical design of sophisticated optical systems and post-processing algorithms. The source code will be made publicly available at https://github.com/Zrr-ZJU/Successive-optimization
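
As a rough illustration of what a point spread function calculation from wavefront data involves, the sketch below uses the standard Fourier-optics relation in which the intensity PSF is the squared magnitude of the Fourier transform of the complex pupil function. It is not the paper's differential operator, and the wavefront here is not ray-traced: the function name `psf_from_pupil`, the 64-sample grid, and the half-wave defocus term are all assumptions chosen for illustration.

```python
import numpy as np

def psf_from_pupil(wavefront_error_waves, aperture_mask):
    """Intensity PSF for a pupil with the given wavefront error (in waves)."""
    # Complex pupil: amplitude from the aperture, phase from the aberration.
    pupil = aperture_mask * np.exp(2j * np.pi * wavefront_error_waves)
    # The focal-plane amplitude is the Fourier transform of the pupil function.
    field = np.fft.fftshift(np.fft.fft2(np.fft.ifftshift(pupil)))
    psf = np.abs(field) ** 2
    # Normalize so that the PSF integrates to one.
    return psf / psf.sum()

# Hypothetical example: a circular aperture with and without defocus.
n = 64
y, x = np.mgrid[-1:1:n * 1j, -1:1:n * 1j]
rho2 = x ** 2 + y ** 2
aperture = (rho2 <= 1.0).astype(float)
defocus = 0.5 * (2.0 * rho2 - 1.0) * aperture  # defocus-like term, 0.5 waves

psf_ideal = psf_from_pupil(np.zeros((n, n)), aperture)
psf_defocus = psf_from_pupil(defocus, aperture)
```

The aberrated PSF has a lower peak than the aberration-free one, which is the kind of wavefront-dependent degradation that a purely geometric spot diagram does not capture.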

Deep Linear Array Pushbroom Image Restoration: A Degradation Pipeline and Jitter-Aware Restoration Network

Jan 16, 2024

Abstract: Linear Array Pushbroom (LAP) imaging technology is widely used in remote sensing. However, images acquired through LAP always suffer from distortion and blur because of camera jitter. Traditional methods for restoring LAP images, such as algorithms that estimate the point spread function (PSF), exhibit limited performance. To tackle this issue, we propose a Jitter-Aware Restoration Network (JARNet) to remove the distortion and blur in two stages. In the first stage, we formulate an Optical Flow Correction (OFC) block to refine the optical flow of the degraded LAP images, resulting in pre-corrected images where most of the distortions are alleviated. In the second stage, to further enhance the pre-corrected images, we integrate two jitter-aware techniques within the Spatial and Frequency Residual (SFRes) block: 1) introducing Coordinate Attention (CoA) to the SFRes block in order to capture the jitter state in the orthogonal direction; 2) manipulating image features in both spatial and frequency domains to leverage local and global priors. Additionally, we develop a data synthesis pipeline, which applies the Continue Dynamic Shooting Model (CDSM) to simulate realistic degradation in LAP images. Both the proposed JARNet and the LAP image synthesis pipeline establish a foundation for addressing this intricate challenge. Extensive experiments demonstrate that the proposed two-stage method outperforms state-of-the-art image restoration models. Code is available at https://github.com/JHW2000/JARNet.

Revealing the preference for correcting separated aberrations in joint optic-image design

Sep 12, 2023

Abstract: The joint design of the optical system and the downstream algorithm is a challenging and promising task. Due to the demand for balancing the global optimum of imaging systems and the computational cost of physical simulation, existing methods cannot achieve efficient joint design of complex systems such as smartphones and drones. In this work, starting from the perspective of optical design, we characterize the optics with separated aberrations. Additionally, to bridge the hardware and software without gradients, an image simulation system is presented to reproduce the genuine imaging procedure of lenses with large fields of view. As for aberration correction, we propose a network to perceive and correct the spatially varying aberrations and validate its superiority over state-of-the-art methods. Comprehensive experiments reveal that the preference for correcting separated aberrations in joint design is as follows: longitudinal chromatic aberration, lateral chromatic aberration, spherical aberration, field curvature, and coma, with astigmatism coming last. Drawing from this preference, a 10% reduction in the total track length of a consumer-level mobile phone lens module is accomplished. Moreover, this procedure spares more space for manufacturing deviations, realizing extreme-quality enhancement of computational photography. The optimization paradigm provides innovative insight into the practical joint design of sophisticated optical systems and post-processing algorithms.

Toward Real Flare Removal: A Comprehensive Pipeline and A New Benchmark

Jun 28, 2023

Abstract: When photographing under-illuminated scenes, complex light sources often leave strong flare artifacts in images, where the intensity, the spectrum, the reflection, and the aberration all contribute to the deterioration. Besides degrading image quality, flare also affects the performance of downstream visual applications. Thus, removing lens flare and ghosts is a challenging issue, especially in low-light environments. However, existing methods for flare removal are mainly restricted by inadequate simulation and real-world capture, where the categories of scattered flares are singular and the reflected ghosts are unavailable. Therefore, a comprehensive deterioration procedure is crucial for constructing a flare removal dataset. Based on theoretical analysis and real-world evaluation, we propose a well-developed methodology for generating data pairs with flare deterioration. The procedure is comprehensive, realizing both the similarity of scattered flares and the symmetric effect of reflected ghosts. Moreover, we construct a real-shot pipeline that separately processes the effects of scattering and reflective flares, aiming to directly generate data for end-to-end methods. Experimental results show that the proposed methodology adds diversity to existing flare datasets and constructs a comprehensive mapping procedure for flare data pairs. Our method also helps data-driven models achieve better restoration of flare-corrupted images and provides a better evaluation system based on real shots, promoting progress in the area of real flare removal.

Let Segment Anything Help Image Dehaze

Jun 28, 2023

Abstract: Large language models and high-level vision models have achieved impressive performance improvements with large datasets and model sizes. However, low-level computer vision tasks, such as image dehazing and blur removal, still rely on a small number of datasets and small-sized models, which generally leads to overfitting and local optima. Therefore, we propose a framework to integrate large-model priors into low-level computer vision tasks. Just as with the task of image segmentation, the degradation caused by haze is also texture-related. We therefore propose grayscale coding, network channel expansion, and pre-dehaze structures to integrate large-model prior knowledge into any low-level dehazing network. We demonstrate the effectiveness and applicability of large models in guiding low-level visual tasks through comparison experiments across different datasets and algorithms. Finally, we demonstrate the effect of grayscale coding, network channel expansion, and recurrent network structures through ablation experiments. Without requiring additional data or training resources, we show that integrating large-model prior knowledge improves dehazing performance and saves training time for low-level visual tasks.

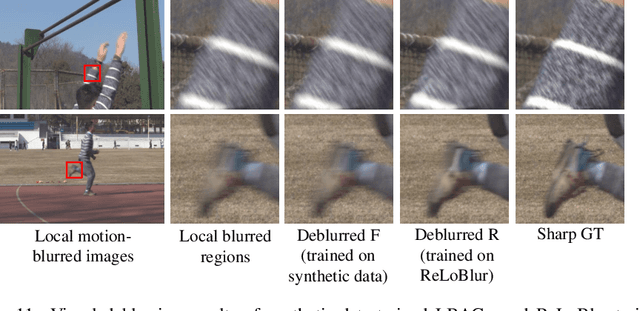

Adaptive Window Pruning for Efficient Local Motion Deblurring

Jun 25, 2023

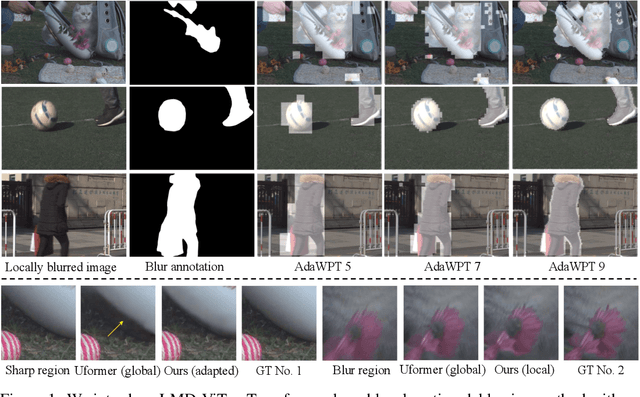

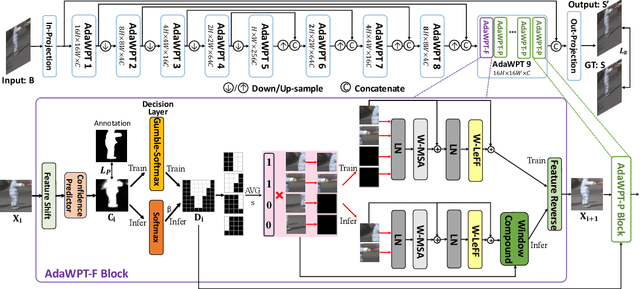

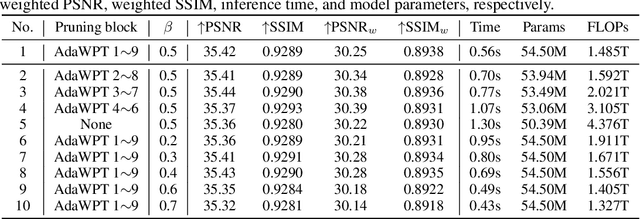

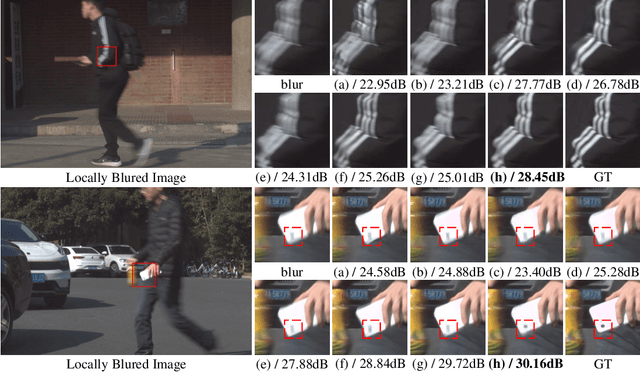

Abstract: Local motion blur commonly occurs in real-world photography due to the mixing between moving objects and stationary backgrounds during exposure. Existing image deblurring methods predominantly focus on global deblurring, inadvertently affecting the sharpness of backgrounds in locally blurred images and wasting unnecessary computation on sharp pixels, especially for high-resolution images. This paper aims to adaptively and efficiently restore high-resolution locally blurred images. We propose a local motion deblurring vision Transformer (LMD-ViT) built on adaptive window pruning Transformer blocks (AdaWPT). To focus deblurring on local regions and reduce computation, AdaWPT prunes unnecessary windows, only allowing the active windows to be involved in the deblurring processes. The pruning operation relies on the blurriness confidence predicted by a confidence predictor that is trained end-to-end using a reconstruction loss with Gumbel-Softmax re-parameterization and a pruning loss guided by annotated blur masks. Our method removes local motion blur effectively without distorting sharp regions, demonstrated by its exceptional perceptual and quantitative improvements (+0.24 dB) compared to state-of-the-art methods. In addition, our approach substantially reduces FLOPs by 66% and achieves more than a twofold increase in inference speed compared to Transformer-based deblurring methods. We will make our code and annotated blur masks publicly available.
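
To make the Gumbel-Softmax step more concrete, here is a minimal NumPy sketch of how a per-window keep/prune decision could be sampled from blurriness logits. It is an assumption-laden illustration rather than the LMD-ViT implementation: the function `gumbel_softmax`, the two-class `[sharp, blurry]` logit layout, and the example values are hypothetical, and NumPy provides no gradients, so the end-to-end training described in the abstract is not reproduced.

```python
import numpy as np

def gumbel_softmax(logits, tau, rng):
    """Draw a relaxed one-hot sample per row using the Gumbel-Softmax trick."""
    # Gumbel(0, 1) noise via -log(-log(U)); U is clipped away from 0 and 1.
    u = np.clip(rng.uniform(size=logits.shape), 1e-10, 1.0 - 1e-10)
    gumbel = -np.log(-np.log(u))
    scaled = (logits + gumbel) / tau
    # Subtract the row maximum for a numerically stable softmax.
    scaled = scaled - scaled.max(axis=-1, keepdims=True)
    exp = np.exp(scaled)
    return exp / exp.sum(axis=-1, keepdims=True)

# Hypothetical logits for three windows, ordered as [sharp, blurry].
rng = np.random.default_rng(0)
blur_logits = np.array([[-50.0, 50.0],   # confidently blurry
                        [50.0, -50.0],   # confidently sharp
                        [0.0, 0.0]])     # uncertain
probs = gumbel_softmax(blur_logits, tau=1.0, rng=rng)

# Windows whose sampled class is "blurry" stay active; the rest are pruned.
keep = probs.argmax(axis=-1) == 1
```

In an autograd framework, the hard `keep` mask would typically be used in the forward pass while gradients flow through the soft `probs`, which is what makes the discrete pruning decision trainable.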

Computational Optics for Mobile Terminals in Mass Production

May 10, 2023

Abstract: Correcting the optical aberrations and the manufacturing deviations of cameras is a challenging task. Due to the limitation on volume and the demand for mass production, existing mobile terminals cannot rectify optical degradation. In this work, we systematically construct a perturbed lens system model to illustrate the relationship between the deviated system parameters and the spatial frequency response (SFR) measured from photographs. To further address this issue, an optimization framework based on this model is proposed to build proxy cameras from the machining samples' SFRs. Using the proxy cameras, we synthesize data pairs, which encode the optical aberrations and the random manufacturing biases, for training learning-based algorithms. In aberration correction, although convolutional neural networks have recently shown promising results, they are hard to generalize to stochastic machining biases. Therefore, we propose a dilated Omni-dimensional dynamic convolution and implement it in post-processing to account for the manufacturing degradation. Extensive experiments evaluating multiple samples of two representative devices demonstrate that the proposed optimization framework accurately constructs the proxy camera. Moreover, the dynamic processing model adapts well to the manufacturing deviations of different cameras, realizing perfect computational photography. The evaluation shows that the proposed method bridges the gap between optical design, system machining, and the post-processing pipeline, shedding light on the joint design of image signal reception (lens and sensor) and image signal processing.

* Published in IEEE TPAMI, 15 pages, 13 figures

Optical Aberration Correction in Postprocessing using Imaging Simulation

May 10, 2023

Abstract: As the popularity of mobile photography continues to grow, considerable effort is being invested in the reconstruction of degraded images. Due to the spatial variation in optical aberrations, which cannot be avoided during the lens design process, recent commercial cameras have shifted some of these correction tasks from optical design to postprocessing systems. However, without engaging with the optical parameters, these systems only achieve limited correction for aberrations. In this work, we propose a practical method for recovering the degradation caused by optical aberrations. Specifically, we establish an imaging simulation system based on our proposed optical point spread function model. Given the optical parameters of the camera, it generates the imaging results of these specific devices. To perform the restoration, we design a spatial-adaptive network model trained on synthetic data pairs generated by the imaging simulation system, eliminating the overhead of capturing training data through a large amount of shooting and registration. Moreover, we comprehensively evaluate the proposed method in simulations and experimentally with a customized digital-single-lens-reflex (DSLR) camera lens and HUAWEI HONOR 20, respectively. The experiments demonstrate that our solution successfully removes spatially variant blur and color dispersion. When compared with state-of-the-art deblurring methods, the proposed approach achieves better results with a lower computational overhead. Moreover, the reconstruction technique does not introduce artificial texture and is convenient to transfer to current commercial cameras. Project Page: https://github.com/TanGeeGo/ImagingSimulation

* Published in ACM TOG. 15 pages, 13 figures

Reliable Image Dehazing by NeRF

Mar 16, 2023

Abstract: We present a high-quality, widely applicable image dehazing algorithm that requires no training data or priors. We analyze the defects of the original dehazing model and propose a new and reliable reconstruction and dehazing model based on the combination of an optical scattering model and a computer graphics lighting rendering model. Based on the new haze model and the images obtained by the cameras, we can reconstruct the three-dimensional space, accurately calculate the objects and haze in the space, and use the transparency relationship of haze to perform accurate haze removal. To obtain a 3D simulation dataset, we used the Unreal 5 computer graphics rendering engine. To obtain real-shot data in different scenes, we used fog generators, array cameras, mobile phones, underwater cameras, and drones to capture haze data. We use formula derivation and experimental results on the simulated and real-shot datasets to prove the feasibility of the new method. Compared with various other methods, ours is far ahead in quantitative metrics (4 dB higher on average per scene), keeps colors more natural, is more robust across different scenarios, and is rated best in subjective perception.

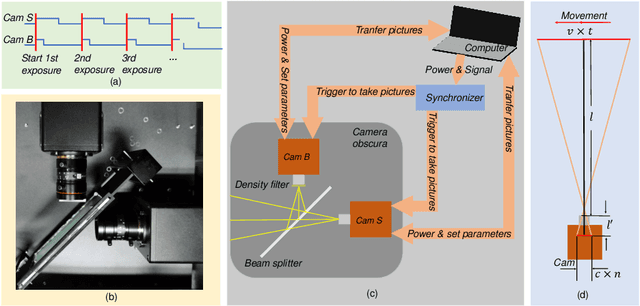

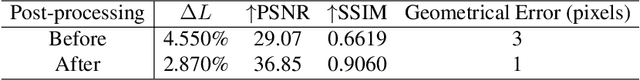

Real-world Deep Local Motion Deblurring

Apr 18, 2022

Abstract: Most existing deblurring methods focus on removing global blur caused by camera shake, while they cannot handle local blur caused by object movements well. To fill the gap in local deblurring for real scenes, we establish the first real local motion blur dataset (ReLoBlur), which is captured by a synchronized beam-splitting photographing system and corrected by a post-processing pipeline. Based on ReLoBlur, we propose a Local Blur-Aware Gated network (LBAG) and several local blur-aware techniques to bridge the gap between global and local deblurring: 1) a blur detection approach based on background subtraction to localize blurred regions; 2) a gate mechanism to guide our network to focus on blurred regions; and 3) a blur-aware patch cropping strategy to address the data imbalance problem. Extensive experiments prove the reliability of the ReLoBlur dataset and demonstrate that LBAG achieves better performance than state-of-the-art global deblurring methods without our proposed local blur-aware techniques.
