Hanlong Chen

Snapshot multi-spectral imaging through defocusing and a Fourier imager network

Jan 24, 2025

Abstract:Multi-spectral imaging, which simultaneously captures the spatial and spectral information of a scene, is widely used across diverse fields, including remote sensing, biomedical imaging, and agricultural monitoring. Here, we introduce a snapshot multi-spectral imaging approach employing a standard monochrome image sensor with no additional spectral filters or customized components. Our system leverages the inherent chromatic aberration of wavelength-dependent defocusing as a natural source of physical encoding of multi-spectral information; this encoded image information is rapidly decoded via a deep learning-based multi-spectral Fourier Imager Network (mFIN). We experimentally tested our method with six illumination bands and demonstrated an overall accuracy of 92.98% for predicting the illumination channels at the input and achieved a robust multi-spectral image reconstruction on various test objects. This deep learning-powered framework achieves high-quality multi-spectral image reconstruction using snapshot image acquisition with a monochrome image sensor and could be useful for applications in biomedicine, industrial quality control, and agriculture, among others.

Super-resolved virtual staining of label-free tissue using diffusion models

Oct 26, 2024

Abstract:Virtual staining of tissue offers a powerful tool for transforming label-free microscopy images of unstained tissue into equivalents of histochemically stained samples. This study presents a diffusion model-based super-resolution virtual staining approach utilizing a Brownian bridge process to enhance both the spatial resolution and fidelity of label-free virtual tissue staining, addressing the limitations of traditional deep learning-based methods. Our approach integrates novel sampling techniques into a diffusion model-based image inference process to significantly reduce the variance in the generated virtually stained images, resulting in more stable and accurate outputs. Blindly applied to lower-resolution auto-fluorescence images of label-free human lung tissue samples, the diffusion-based super-resolution virtual staining model consistently outperformed conventional approaches in resolution, structural similarity and perceptual accuracy, successfully achieving a super-resolution factor of 4-5x, increasing the output space-bandwidth product by 16-25-fold compared to the input label-free microscopy images. Diffusion-based super-resolved virtual tissue staining not only improves resolution and image quality but also enhances the reliability of virtual staining without traditional chemical staining, offering significant potential for clinical diagnostics.
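The two figures quoted in this abstract are linked by simple arithmetic, which can be sketched as follows. This assumes a fixed field of view and a resolution gain that is equal along both image axes; the symbols $A_{\mathrm{FOV}}$, $\delta$ and $s$ are introduced here for illustration and are not taken from the paper.

$$
\mathrm{SBP} \propto \frac{A_{\mathrm{FOV}}}{\delta^{2}}
\quad\Longrightarrow\quad
\frac{\mathrm{SBP}_{\mathrm{out}}}{\mathrm{SBP}_{\mathrm{in}}}
= \left(\frac{\delta_{\mathrm{in}}}{\delta_{\mathrm{out}}}\right)^{2} = s^{2},
\qquad s = 4 \Rightarrow 16, \quad s = 5 \Rightarrow 25 .
$$

Under the same assumptions, a 3x linear super-resolution factor corresponds to a 9-fold increase in space-bandwidth product.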

Optical Generative Models

Oct 23, 2024

Abstract:Generative models cover various application areas, including image, video and music synthesis, natural language processing, and molecular design, among many others. As digital generative models become larger, scalable inference in a fast and energy-efficient manner becomes a challenge. Here, we present optical generative models inspired by diffusion models, where a shallow and fast digital encoder first maps random noise into phase patterns that serve as optical generative seeds for a desired data distribution; a jointly-trained free-space-based reconfigurable decoder all-optically processes these generative seeds to create novel images (never seen before) following the target data distribution. Except for the illumination power and the random seed generation through a shallow encoder, these optical generative models do not consume computing power during the synthesis of novel images. We report the optical generation of monochrome and multi-color novel images of handwritten digits, fashion products, butterflies, and human faces, following the data distributions of MNIST, Fashion MNIST, Butterflies-100, and Celeb-A datasets, respectively, achieving an overall performance comparable to digital neural network-based generative models. To experimentally demonstrate optical generative models, we used visible light to generate, in a snapshot, novel images of handwritten digits and fashion products. These optical generative models might pave the way for energy-efficient, scalable and rapid inference tasks, further exploiting the potentials of optics and photonics for artificial intelligence-generated content.

BlurryScope: a cost-effective and compact scanning microscope for automated HER2 scoring using deep learning on blurry image data

Oct 23, 2024

Abstract:We developed a rapid scanning optical microscope, termed "BlurryScope", that leverages continuous image acquisition and deep learning to provide a cost-effective and compact solution for automated inspection and analysis of tissue sections. BlurryScope integrates specialized hardware with a neural network-based model to quickly process motion-blurred histological images and perform automated pathology classification. This device offers comparable speed to commercial digital pathology scanners, but at a significantly lower price point and smaller size/weight, making it ideal for fast triaging in small clinics, as well as for resource-limited settings. To demonstrate the proof-of-concept of BlurryScope, we implemented automated classification of human epidermal growth factor receptor 2 (HER2) scores on immunohistochemically (IHC) stained breast tissue sections, achieving concordant results with those obtained from a high-end digital scanning microscope. We evaluated this approach by scanning HER2-stained tissue microarrays (TMAs) at a continuous speed of 5 mm/s, which introduces bidirectional motion blur artifacts. These compromised images were then used to train our network models. Using a test set of 284 unique patient cores, we achieved blind testing accuracies of 79.3% and 89.7% for 4-class (0, 1+, 2+, 3+) and 2-class (0/1+, 2+/3+) HER2 score classification, respectively. BlurryScope automates the entire workflow, from image scanning to stitching and cropping of regions of interest, as well as HER2 score classification. We believe BlurryScope has the potential to enhance the current pathology infrastructure in resource-scarce environments, save diagnostician time and bolster cancer identification and classification across various clinical environments.

Subwavelength Imaging using a Solid-Immersion Diffractive Optical Processor

Jan 17, 2024

Abstract:Phase imaging is widely used in biomedical imaging, sensing, and material characterization, among other fields. However, direct imaging of phase objects with subwavelength resolution remains a challenge. Here, we demonstrate subwavelength imaging of phase and amplitude objects based on all-optical diffractive encoding and decoding. To resolve subwavelength features of an object, the diffractive imager uses a thin, high-index solid-immersion layer to transmit high-frequency information of the object to a spatially-optimized diffractive encoder, which converts/encodes high-frequency information of the input into low-frequency spatial modes for transmission through air. The subsequent diffractive decoder layers (in air) are jointly designed with the encoder using deep-learning-based optimization, and communicate with the encoder layer to create magnified images of input objects at its output, revealing subwavelength features that would otherwise be washed away due to the diffraction limit. We demonstrate that this all-optical collaboration between a diffractive solid-immersion encoder and the following decoder layers in air can resolve subwavelength phase and amplitude features of input objects in a highly compact design. To experimentally demonstrate its proof-of-concept, we used terahertz radiation and developed a fabrication method for creating monolithic multi-layer diffractive processors. Through these monolithically fabricated diffractive encoder-decoder pairs, we demonstrated phase-to-intensity transformations and all-optically reconstructed subwavelength phase features of input objects by directly transforming them into magnified intensity features at the output. This solid-immersion-based diffractive imager, with its compact and cost-effective design, can find wide-ranging applications in bioimaging, endoscopy, sensing and materials characterization.

All-optical image denoising using a diffractive visual processor

Sep 17, 2023

Abstract:Image denoising, one of the essential inverse problems, aims to remove noise/artifacts from input images. In general, digital image denoising algorithms, executed on computers, present latency due to several iterations implemented in, e.g., graphics processing units (GPUs). While deep learning-enabled methods can operate non-iteratively, they also introduce latency and impose a significant computational burden, leading to increased power consumption. Here, we introduce an analog diffractive image denoiser to all-optically and non-iteratively clean various forms of noise and artifacts from input images - implemented at the speed of light propagation within a thin diffractive visual processor. This all-optical image denoiser comprises passive transmissive layers optimized using deep learning to physically scatter the optical modes that represent various noise features, causing them to miss the output image Field-of-View (FoV) while retaining the object features of interest. Our results show that these diffractive denoisers can efficiently remove salt and pepper noise and image rendering-related spatial artifacts from input phase or intensity images while achieving an output power efficiency of ~30-40%. We experimentally demonstrated the effectiveness of this analog denoiser architecture using a 3D-printed diffractive visual processor operating at the terahertz spectrum. Owing to their speed, power-efficiency, and minimal computational overhead, all-optical diffractive denoisers can be transformative for various image display and projection systems, including, e.g., holographic displays.

Cycle Consistency-based Uncertainty Quantification of Neural Networks in Inverse Imaging Problems

May 22, 2023

Abstract:Uncertainty estimation is critical for numerous applications of deep neural networks and draws growing attention from researchers. Here, we demonstrate an uncertainty quantification approach for deep neural networks used in inverse problems based on cycle consistency. We build forward-backward cycles using the physical forward model available and a trained deep neural network solving the inverse problem at hand, and accordingly derive uncertainty estimators through regression analysis on the consistency of these forward-backward cycles. We theoretically analyze cycle consistency metrics and derive their relationship with respect to uncertainty, bias, and robustness of the neural network inference. To demonstrate the effectiveness of these cycle consistency-based uncertainty estimators, we classified corrupted and out-of-distribution input image data using some of the widely used image deblurring and super-resolution neural networks as testbeds. The blind testing of our method outperformed other models in identifying unseen input data corruption and distribution shifts. This work provides a simple-to-implement and rapid uncertainty quantification method that can be universally applied to various neural networks used for solving inverse problems.
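The forward-backward cycle described in this abstract can be illustrated with a toy example. The sketch below is not the authors' implementation: the 3-tap blur used as the forward model, the sharpening filter standing in for a trained network, and all function names are hypothetical choices made for illustration. It only computes the cycle-to-cycle differences; the regression step that turns them into uncertainty estimates is not shown.

```python
import math

def forward_model(x):
    """Assumed physical forward model: a circular 3-tap blur (illustrative only)."""
    n = len(x)
    return [(x[(i - 1) % n] + 2 * x[i] + x[(i + 1) % n]) / 4 for i in range(n)]

def inverse_stand_in(y):
    """Hypothetical stand-in for a trained inverse network: a simple sharpening filter."""
    n = len(y)
    return [(-y[(i - 1) % n] + 4 * y[i] - y[(i + 1) % n]) / 2 for i in range(n)]

def l2(a, b):
    """Euclidean distance between two equal-length signals."""
    return math.sqrt(sum((p - q) ** 2 for p, q in zip(a, b)))

def cycle_consistency(y0, n_cycles=3):
    """Run forward-backward cycles and record how much each cycle changes
    the measurement (dy) and the reconstruction (dx)."""
    metrics = []
    y, x = y0, inverse_stand_in(y0)
    for _ in range(n_cycles):
        y_next = forward_model(x)          # re-simulate the measurement
        x_next = inverse_stand_in(y_next)  # reconstruct again
        metrics.append({"dy": l2(y_next, y), "dx": l2(x_next, x)})
        y, x = y_next, x_next
    return metrics

# Usage: cycle metrics for one simulated measurement.
measurement = forward_model([0.0, 0.0, 1.0, 3.0, 1.0, 0.0, 0.0, 0.0])
metrics = cycle_consistency(measurement)
```

In a setting like the one the abstract describes, features such as `dy` and `dx` would be the inputs to a regression model; here they are simply returned.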

eFIN: Enhanced Fourier Imager Network for generalizable autofocusing and pixel super-resolution in holographic imaging

Jan 09, 2023

Abstract:The application of deep learning techniques has greatly enhanced holographic imaging capabilities, leading to improved phase recovery and image reconstruction. Here, we introduce a deep neural network termed enhanced Fourier Imager Network (eFIN) as a highly generalizable framework for hologram reconstruction with pixel super-resolution and image autofocusing. Through holographic microscopy experiments involving lung, prostate and salivary gland tissue sections and Papanicolaou (Pap) smears, we demonstrate that eFIN has a superior image reconstruction quality and exhibits external generalization to new types of samples never seen during the training phase. This network achieves a wide autofocusing axial range of 0.35 mm, with the capability to accurately predict the hologram axial distances by physics-informed learning. eFIN enables 3x pixel super-resolution imaging and increases the space-bandwidth product of the reconstructed images by 9-fold with almost no performance loss, which allows for significant time savings in holographic imaging and data processing steps. Our results showcase the advancements of eFIN in pushing the boundaries of holographic imaging for various applications in, e.g., quantitative phase imaging and label-free microscopy.

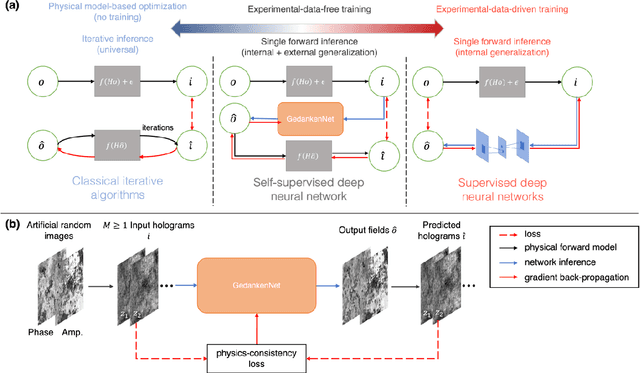

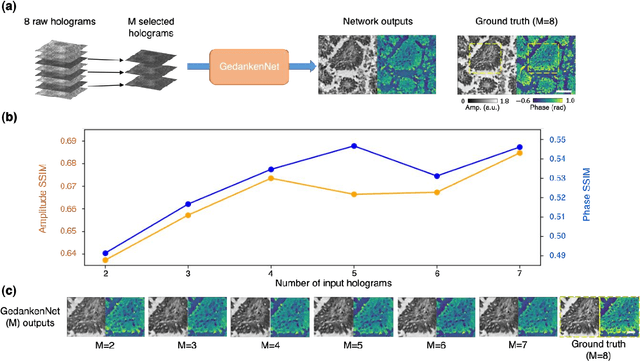

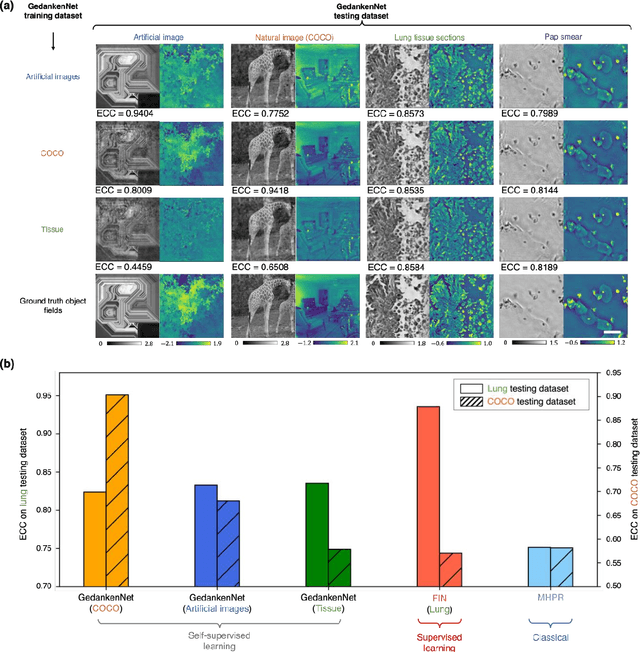

GedankenNet: Self-supervised learning of hologram reconstruction using physics consistency

Sep 17, 2022

Abstract:The past decade has witnessed transformative applications of deep learning in various computational imaging, sensing and microscopy tasks. Due to the supervised learning schemes employed, most of these methods depend on large-scale, diverse, and labeled training data. The acquisition and preparation of such training image datasets are often laborious and costly, also leading to biased estimation and limited generalization to new types of samples. Here, we report a self-supervised learning model, termed GedankenNet, that eliminates the need for labeled or experimental training data, and demonstrate its effectiveness and superior generalization on hologram reconstruction tasks. Without prior knowledge about the sample types to be imaged, the self-supervised learning model was trained using a physics-consistency loss and artificial random images that are synthetically generated without any experiments or resemblance to real-world samples. After its self-supervised training, GedankenNet successfully generalized to experimental holograms of various unseen biological samples, reconstructing the phase and amplitude images of different types of objects using experimentally acquired test holograms. Without access to experimental data or the knowledge of real samples of interest or their spatial features, GedankenNet's self-supervised learning achieved complex-valued image reconstructions that are consistent with Maxwell's equations, meaning that its output inference and object solutions accurately represent the wave propagation in free-space. This self-supervised learning of image reconstruction tasks opens up new opportunities for various inverse problems in holography, microscopy and computational imaging fields.

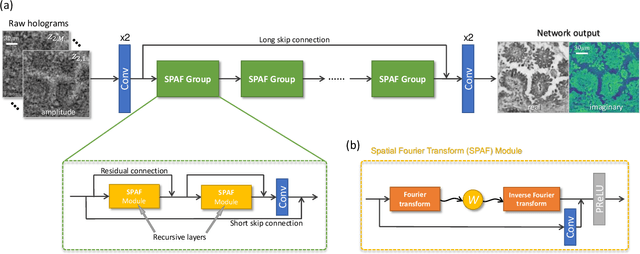

Fourier Imager Network: A deep neural network for hologram reconstruction with superior external generalization

Apr 22, 2022

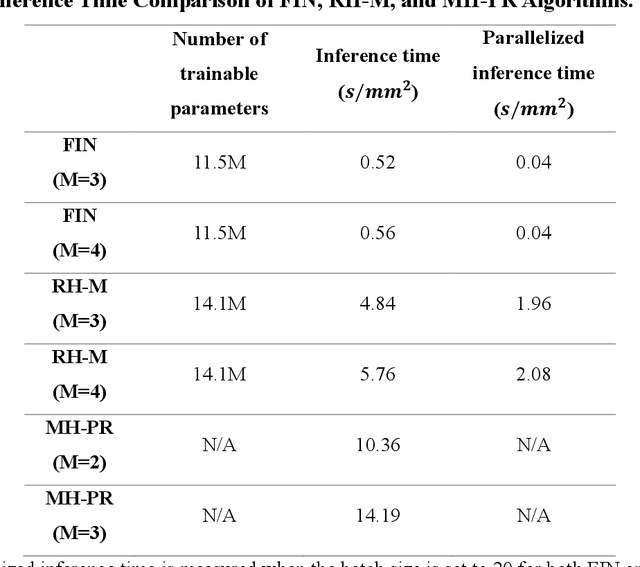

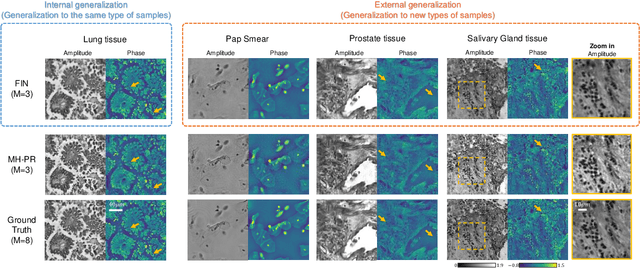

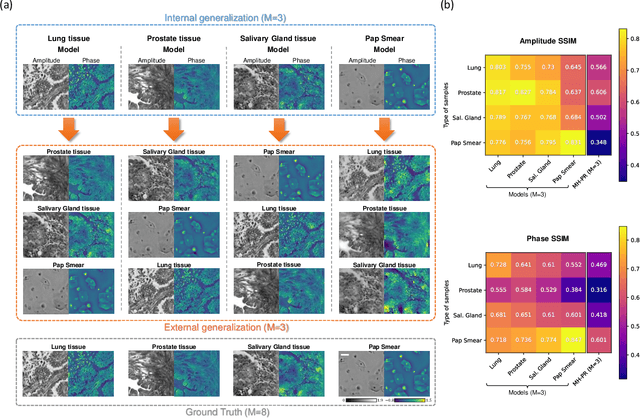

Abstract:Deep learning-based image reconstruction methods have achieved remarkable success in phase recovery and holographic imaging. However, the generalization of their image reconstruction performance to new types of samples never seen by the network remains a challenge. Here we introduce a deep learning framework, termed Fourier Imager Network (FIN), that can perform end-to-end phase recovery and image reconstruction from raw holograms of new types of samples, exhibiting unprecedented success in external generalization. FIN architecture is based on spatial Fourier transform modules that process the spatial frequencies of its inputs using learnable filters and a global receptive field. Compared with existing convolutional deep neural networks used for hologram reconstruction, FIN exhibits superior generalization to new types of samples, while also being much faster in its image inference speed, completing the hologram reconstruction task in ~0.04 s per 1 mm^2 of the sample area. We experimentally validated the performance of FIN by training it using human lung tissue samples and blindly testing it on human prostate, salivary gland tissue and Pap smear samples, proving its superior external generalization and image reconstruction speed. Beyond holographic microscopy and quantitative phase imaging, FIN and the underlying neural network architecture might open up various new opportunities to design broadly generalizable deep learning models in computational imaging and machine vision fields.
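The idea of filtering spatial frequencies with a global receptive field can be illustrated in one dimension. The sketch below is a toy, not the FIN architecture: the signal length, the fixed `weights` (which would be learned in a real network) and all function names are assumptions made for illustration. It shows that multiplying each frequency by a weight makes every output sample depend on every input sample.

```python
import cmath

def dft(x):
    """Discrete Fourier transform of a short sequence (direct O(n^2) form)."""
    n = len(x)
    return [sum(x[k] * cmath.exp(-2j * cmath.pi * f * k / n) for k in range(n))
            for f in range(n)]

def idft(X):
    """Inverse discrete Fourier transform."""
    n = len(X)
    return [sum(X[f] * cmath.exp(2j * cmath.pi * f * k / n) for f in range(n)) / n
            for k in range(n)]

def spectral_filter(x, weights):
    """Scale each spatial frequency of x by a per-frequency weight, then
    transform back. Weights are real and symmetric (w[f] == w[n - f]),
    so the output of a real input stays real."""
    X = dft(x)
    return [v.real for v in idft([w * c for w, c in zip(weights, X)])]

# Usage: hypothetical symmetric weights applied to a single-sample impulse.
weights = [1.0, 0.5, 0.25, 0.125, 0.0625, 0.125, 0.25, 0.5]
impulse = [1.0] + [0.0] * 7
response = spectral_filter(impulse, weights)  # spreads over all 8 positions
```

A small spatial convolution kernel would only reach neighbouring samples, whereas here a single input sample influences the whole output, which is the sense in which a frequency-domain filter has a global receptive field.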
