Deniz Mengu

All-optical image denoising using a diffractive visual processor

Sep 17, 2023

Abstract:Image denoising, one of the essential inverse problems, aims to remove noise/artifacts from input images. In general, digital image denoising algorithms, executed on computers, present latency due to several iterations implemented in, e.g., graphics processing units (GPUs). While deep learning-enabled methods can operate non-iteratively, they also introduce latency and impose a significant computational burden, leading to increased power consumption. Here, we introduce an analog diffractive image denoiser to all-optically and non-iteratively clean various forms of noise and artifacts from input images - implemented at the speed of light propagation within a thin diffractive visual processor. This all-optical image denoiser comprises passive transmissive layers optimized using deep learning to physically scatter the optical modes that represent various noise features, causing them to miss the output image Field-of-View (FoV) while retaining the object features of interest. Our results show that these diffractive denoisers can efficiently remove salt and pepper noise and image rendering-related spatial artifacts from input phase or intensity images while achieving an output power efficiency of ~30-40%. We experimentally demonstrated the effectiveness of this analog denoiser architecture using a 3D-printed diffractive visual processor operating at the terahertz spectrum. Owing to their speed, power-efficiency, and minimal computational overhead, all-optical diffractive denoisers can be transformative for various image display and projection systems, including, e.g., holographic displays.

Pyramid diffractive optical networks for unidirectional magnification and demagnification

Aug 29, 2023

Abstract:Diffractive deep neural networks (D2NNs) are composed of successive transmissive layers optimized using supervised deep learning to all-optically implement various computational tasks between an input and output field-of-view (FOV). Here, we present a pyramid-structured diffractive optical network design (which we term P-D2NN), optimized specifically for unidirectional image magnification and demagnification. In this P-D2NN design, the diffractive layers are pyramidally scaled in alignment with the direction of the image magnification or demagnification. Our analyses revealed the efficacy of this P-D2NN design in unidirectional image magnification and demagnification tasks, producing high-fidelity magnified or demagnified images in only one direction, while inhibiting the image formation in the opposite direction - confirming the desired unidirectional imaging operation. Compared to the conventional D2NN designs with uniform-sized successive diffractive layers, the P-D2NN design achieves similar performance in unidirectional magnification tasks using only half of the diffractive degrees of freedom within the optical processor volume. Furthermore, it maintains its unidirectional image magnification/demagnification functionality across a large band of illumination wavelengths despite being trained with a single illumination wavelength. With this pyramidal architecture, we also designed a wavelength-multiplexed diffractive network, where a unidirectional magnifier and a unidirectional demagnifier operate simultaneously in opposite directions, at two distinct illumination wavelengths. The efficacy of the P-D2NN architecture was also validated experimentally using monochromatic terahertz illumination, successfully matching our numerical simulations. P-D2NN offers a physics-inspired strategy for designing task-specific visual processors.

Multispectral Quantitative Phase Imaging Using a Diffractive Optical Network

Aug 05, 2023

Abstract:As a label-free imaging technique, quantitative phase imaging (QPI) provides optical path length information of transparent specimens for various applications in biology, materials science, and engineering. Multispectral QPI measures quantitative phase information across multiple spectral bands, permitting the examination of wavelength-specific phase and dispersion characteristics of samples. Here, we present the design of a diffractive processor that can all-optically perform multispectral quantitative phase imaging of transparent phase-only objects in a snapshot. Our design utilizes spatially engineered diffractive layers, optimized through deep learning, to encode the phase profile of the input object at a predetermined set of wavelengths into spatial intensity variations at the output plane, allowing multispectral QPI using a monochrome focal plane array. Through numerical simulations, we demonstrate diffractive multispectral processors to simultaneously perform quantitative phase imaging at 9 and 16 target spectral bands in the visible spectrum. These diffractive multispectral processors maintain uniform performance across all the wavelength channels, revealing a decent QPI performance at each target wavelength. The generalization of these diffractive processor designs is validated through numerical tests on unseen objects, including thin Pap smear images. Due to its all-optical processing capability using passive dielectric diffractive materials, this diffractive multispectral QPI processor offers a compact and power-efficient solution for high-throughput quantitative phase microscopy and spectroscopy. This framework can operate at different parts of the electromagnetic spectrum and be used for a wide range of phase imaging and sensing applications.

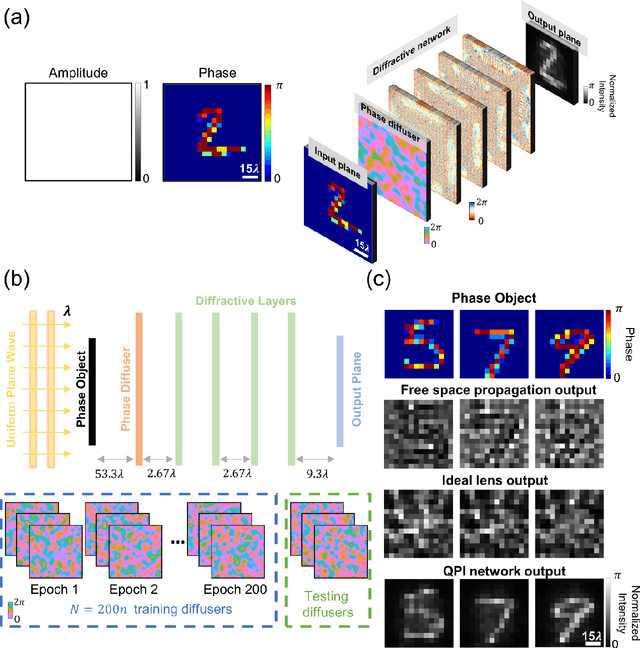

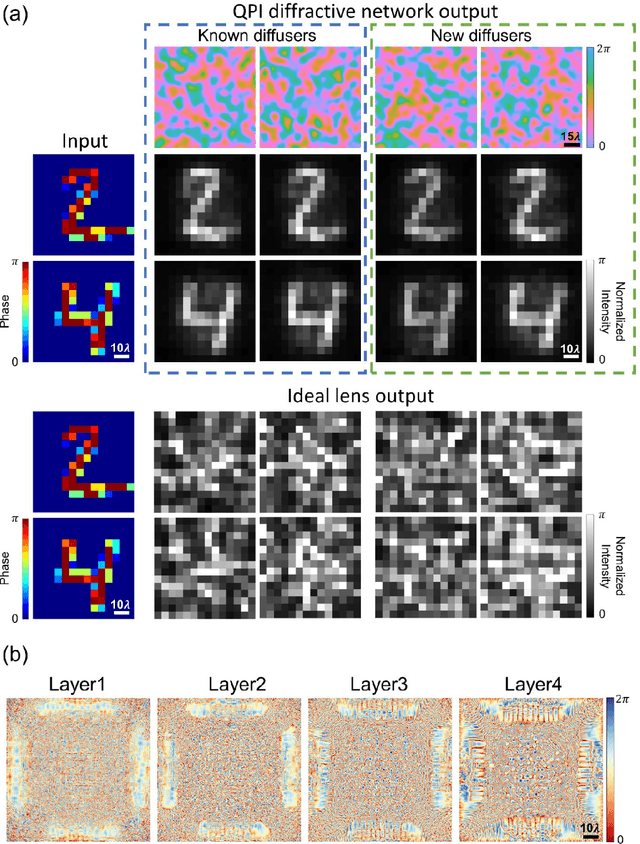

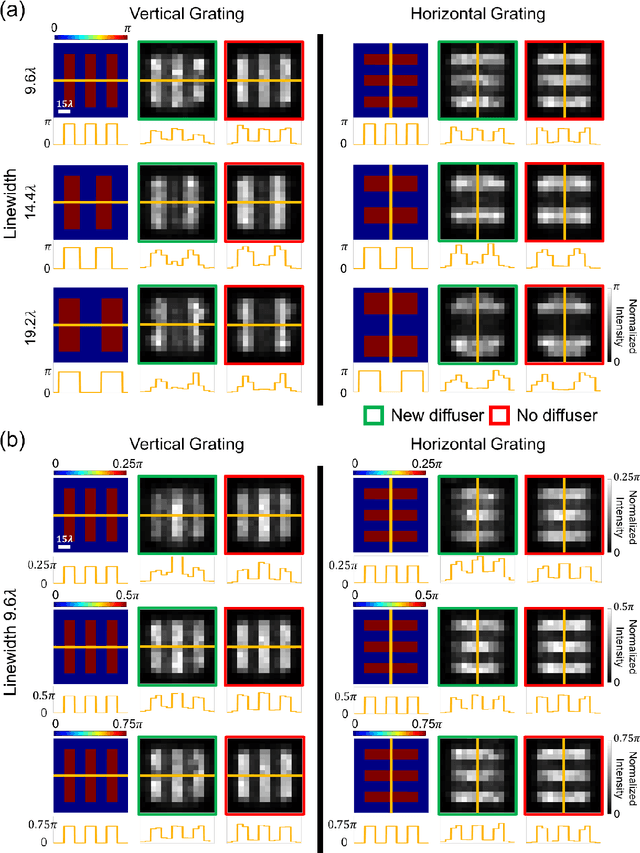

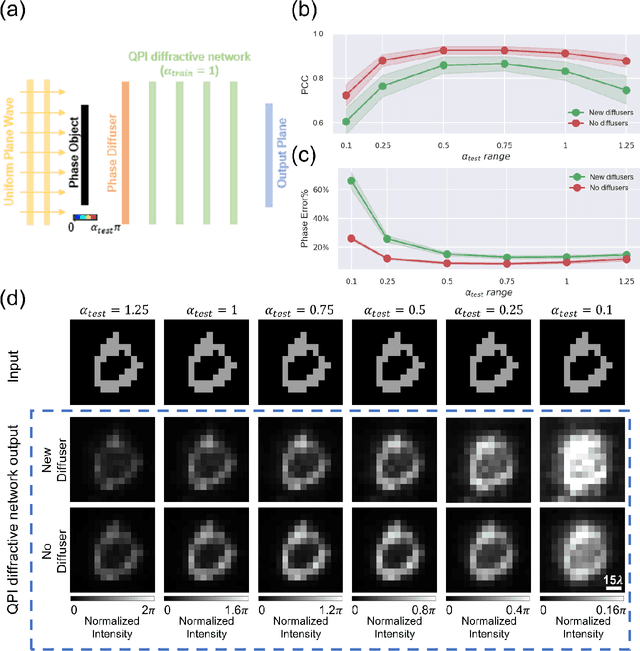

Quantitative phase imaging (QPI) through random diffusers using a diffractive optical network

Jan 19, 2023

Abstract:Quantitative phase imaging (QPI) is a label-free computational imaging technique used in various fields, including biology and medical research. Modern QPI systems typically rely on digital processing using iterative algorithms for phase retrieval and image reconstruction. Here, we report a diffractive optical network trained to convert the phase information of input objects positioned behind random diffusers into intensity variations at the output plane, all-optically performing phase recovery and quantitative imaging of phase objects completely hidden by unknown, random phase diffusers. This QPI diffractive network is composed of successive diffractive layers, axially spanning in total ~70 wavelengths; unlike existing digital image reconstruction and phase retrieval methods, it forms an all-optical processor that does not require external power beyond the illumination beam to complete its QPI reconstruction at the speed of light propagation. This all-optical diffractive processor can provide a low-power, high frame rate and compact alternative for quantitative imaging of phase objects through random, unknown diffusers and can operate at different parts of the electromagnetic spectrum for various applications in biomedical imaging and sensing. The presented QPI diffractive designs can be integrated onto the active area of standard CCD/CMOS-based image sensors to convert an existing optical microscope into a diffractive QPI microscope, performing phase recovery and image reconstruction on a chip through light diffraction within passive structured layers.

Data class-specific all-optical transformations and encryption

Dec 25, 2022

Abstract:Diffractive optical networks provide rich opportunities for visual computing tasks since the spatial information of a scene can be directly accessed by a diffractive processor without requiring any digital pre-processing steps. Here we present data class-specific transformations all-optically performed between the input and output fields-of-view (FOVs) of a diffractive network. The visual information of the objects is encoded into the amplitude (A), phase (P), or intensity (I) of the optical field at the input, which is all-optically processed by a data class-specific diffractive network. At the output, an image sensor-array directly measures the transformed patterns, all-optically encrypted using the transformation matrices pre-assigned to different data classes, i.e., a separate matrix for each data class. The original input images can be recovered by applying the correct decryption key (the inverse transformation) corresponding to the matching data class, while applying any other key will lead to loss of information. The class-specificity of these all-optical diffractive transformations creates opportunities where different keys can be distributed to different users; each user can decode the acquired images of only one data class, serving multiple users in an all-optically encrypted manner. We numerically demonstrated all-optical class-specific transformations covering A-->A, I-->I, and P-->I transformations using various image datasets. We also experimentally validated the feasibility of this framework by fabricating a class-specific I-->I transformation diffractive network using two-photon polymerization and successfully tested it at 1550 nm wavelength. Data class-specific all-optical transformations provide a fast and energy-efficient method for image and data encryption, enhancing data security and privacy.
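
The key scheme in this abstract (one transformation matrix per data class, decryption by the matching inverse) can be illustrated with a toy digital model. This is only a sketch under stated assumptions, not the authors' optical implementation: the "images" are 2-pixel vectors, the per-class matrices are made-up invertible 2x2 matrices, and the names `CLASS_KEYS`, `encrypt`, and `decrypt` are hypothetical.

```python
from fractions import Fraction

# Hypothetical per-class transformation matrices (assumption: one
# invertible 2x2 matrix per data class; the values are made up).
CLASS_KEYS = {
    "class_a": [[2, 1], [1, 1]],
    "class_b": [[1, 3], [0, 1]],
}


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector using exact fractions."""
    return [sum(Fraction(a) * b for a, b in zip(row, vector)) for row in matrix]


def inverse_2x2(matrix):
    """Return the exact inverse of a 2x2 matrix; raise if it is singular."""
    (a, b), (c, d) = matrix
    det = Fraction(a * d - b * c)
    if det == 0:
        raise ValueError("transformation matrix must be invertible")
    return [[d / det, -b / det], [-c / det, a / det]]


def encrypt(image, data_class):
    """Apply the transformation pre-assigned to the image's data class."""
    return matvec(CLASS_KEYS[data_class], image)


def decrypt(measured, key_class):
    """Apply the inverse transformation belonging to key_class."""
    return matvec(inverse_2x2(CLASS_KEYS[key_class]), measured)


image = [Fraction(3), Fraction(5)]
measured = encrypt(image, "class_a")

right_key = decrypt(measured, "class_a")  # matching key recovers the input
wrong_key = decrypt(measured, "class_b")  # any other key does not

print(right_key == image, wrong_key == image)
```

In this toy model the wrong key simply returns a different vector; the abstract's stronger statement about loss of information refers to the optical system and is not modeled here.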

Snapshot Multispectral Imaging Using a Diffractive Optical Network

Dec 10, 2022

Abstract:Multispectral imaging has been used for numerous applications in, e.g., environmental monitoring, aerospace, defense, and biomedicine. Here, we present a diffractive optical network-based multispectral imaging system trained using deep learning to create a virtual spectral filter array at the output image field-of-view. This diffractive multispectral imager performs spatially-coherent imaging over a large spectrum, and at the same time, routes a pre-determined set of spectral channels onto an array of pixels at the output plane, converting a monochrome focal plane array or image sensor into a multispectral imaging device without any spectral filters or image recovery algorithms. Furthermore, the spectral responsivity of this diffractive multispectral imager is not sensitive to input polarization states. Through numerical simulations, we present different diffractive network designs that achieve snapshot multispectral imaging with 4, 9 and 16 unique spectral bands within the visible spectrum, based on passive spatially-structured diffractive surfaces, with a compact design that axially spans ~72 times the mean wavelength of the spectral band of interest. Moreover, we experimentally demonstrate a diffractive multispectral imager based on a 3D-printed diffractive network that creates at its output image plane a spatially-repeating virtual spectral filter array with 2x2=4 unique bands in the terahertz spectrum. Due to their compact form factor and computation-free, power-efficient and polarization-insensitive forward operation, diffractive multispectral imagers can be transformative for various imaging and sensing applications and be used at different parts of the electromagnetic spectrum where high-density and wide-area multispectral pixel arrays are not widely available.

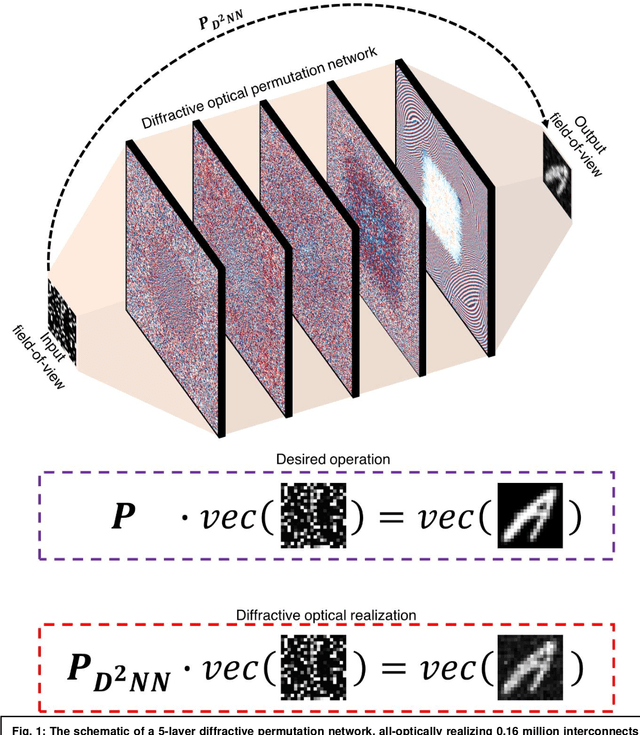

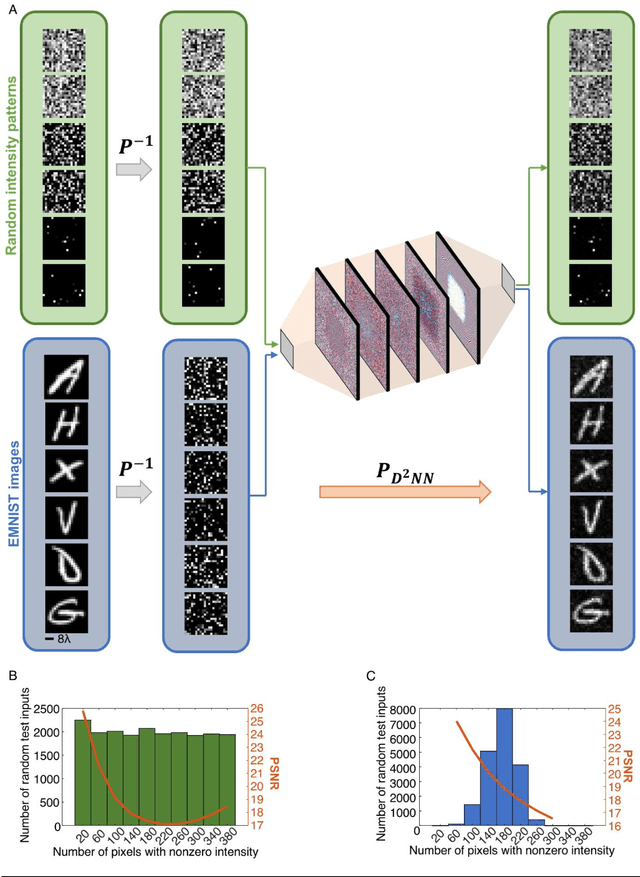

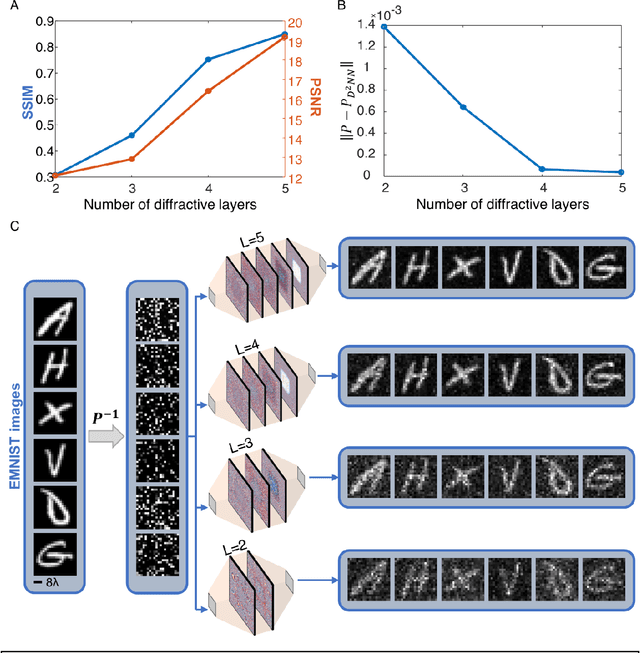

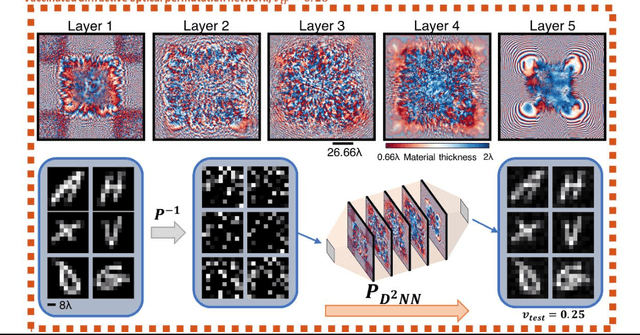

Diffractive Interconnects: All-Optical Permutation Operation Using Diffractive Networks

Jun 21, 2022

Abstract:Permutation matrices form an important computational building block frequently used in various fields including, e.g., communications, information security and data processing. Optical implementation of permutation operators with a relatively large number of input-output interconnections based on power-efficient, fast, and compact platforms is highly desirable. Here, we present diffractive optical networks engineered through deep learning to all-optically perform permutation operations that can scale to hundreds of thousands of interconnections between an input and an output field-of-view using passive transmissive layers that are individually structured at the wavelength scale. Our findings indicate that the capacity of the diffractive optical network in approximating a given permutation operation increases in proportion to the number of diffractive layers and trainable transmission elements in the system. Such deeper diffractive network designs can pose practical challenges in terms of physical alignment and output diffraction efficiency of the system. We addressed these challenges by designing misalignment-tolerant diffractive designs that can all-optically perform arbitrarily-selected permutation operations, and experimentally demonstrated, for the first time, a diffractive permutation network that operates in the THz part of the spectrum. Diffractive permutation networks might find various applications in, e.g., security, image encryption and data processing, along with telecommunications; especially with the carrier frequencies in wireless communications approaching THz bands, the presented diffractive permutation networks can potentially serve as channel routing and interconnection panels in wireless networks.
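
For readers unfamiliar with the operation itself, a permutation matrix simply reroutes each input element to a different output position, and its transpose undoes the routing. The sketch below shows this digitally on a 4-element vector; the helper names and the example routing `perm` are illustrative assumptions and say nothing about how the diffractive layers realize the mapping.

```python
def permutation_matrix(perm):
    """Build P with P[i][perm[i]] = 1, so that (P x)[i] = x[perm[i]]."""
    n = len(perm)
    if sorted(perm) != list(range(n)):
        raise ValueError("perm must contain each index 0..n-1 exactly once")
    return [[1 if j == perm[i] else 0 for j in range(n)] for i in range(n)]


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, vector)) for row in matrix]


def transpose(matrix):
    """Return the transpose; for a permutation matrix this is its inverse."""
    return [list(column) for column in zip(*matrix)]


perm = [2, 0, 3, 1]            # hypothetical routing: output i reads input perm[i]
P = permutation_matrix(perm)

x = [10, 20, 30, 40]           # input "pixels"
y = matvec(P, x)               # permuted output
x_back = matvec(transpose(P), y)

print(y, x_back)
```

Each row and column of `P` contains exactly one 1, so an N-element permutation defines N input-output interconnections.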

Super-resolution image display using diffractive decoders

Jun 15, 2022

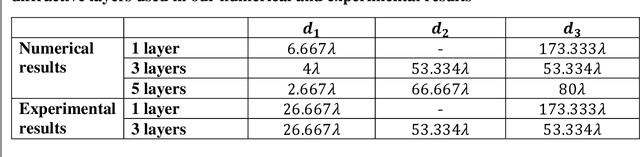

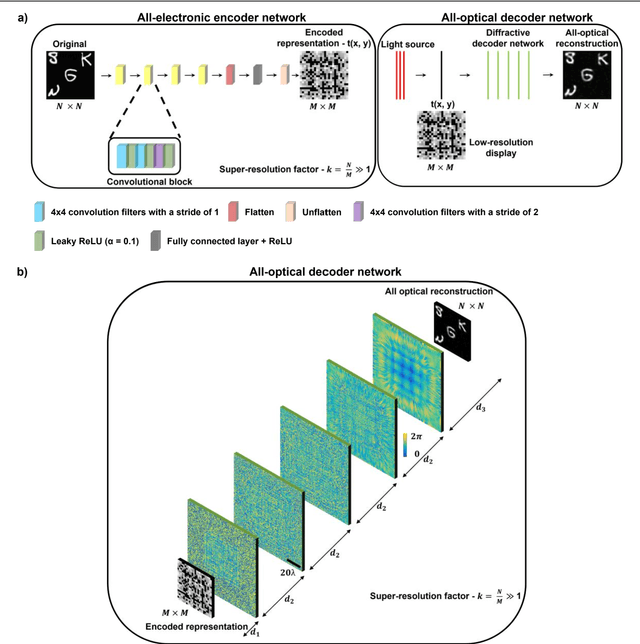

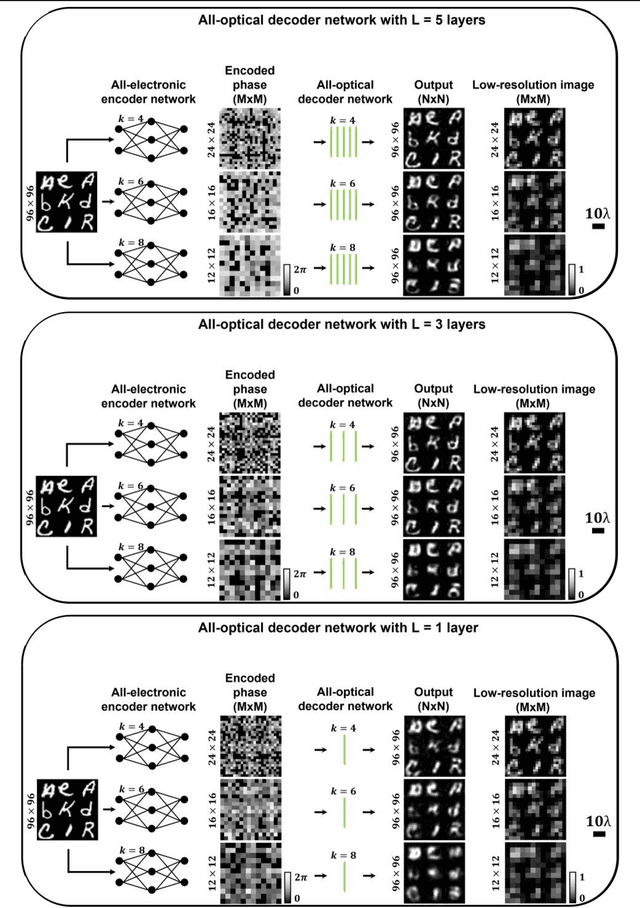

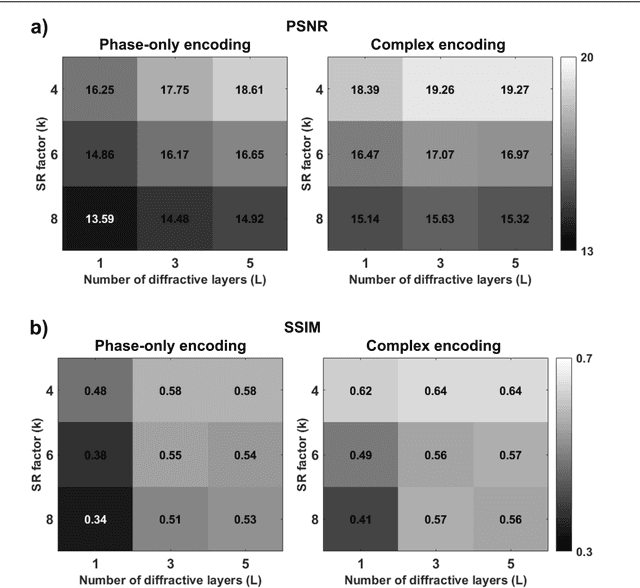

Abstract:High-resolution synthesis/projection of images over a large field-of-view (FOV) is hindered by the restricted space-bandwidth-product (SBP) of wavefront modulators. We report a deep learning-enabled diffractive display design that is based on a jointly-trained pair of an electronic encoder and a diffractive optical decoder to synthesize/project super-resolved images using low-resolution wavefront modulators. The digital encoder, composed of a trained convolutional neural network (CNN), rapidly pre-processes the high-resolution images of interest so that their spatial information is encoded into low-resolution (LR) modulation patterns, projected via a low SBP wavefront modulator. The diffractive decoder processes this LR encoded information using thin transmissive layers that are structured using deep learning to all-optically synthesize and project super-resolved images at its output FOV. Our results indicate that this diffractive image display can achieve a super-resolution factor of ~4, demonstrating a ~16-fold increase in SBP. We also experimentally validate the success of this diffractive super-resolution display using 3D-printed diffractive decoders that operate at the THz spectrum. This diffractive image decoder can be scaled to operate at visible wavelengths and inspire the design of large FOV and high-resolution displays that are compact, low-power, and computationally efficient.
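
The step from a super-resolution factor of ~4 to a ~16-fold SBP increase follows from counting resolvable points over a fixed two-dimensional field-of-view: improving resolution by a factor k along each lateral axis multiplies the count by k². A minimal sketch of that arithmetic, assuming a square FOV and a hypothetical 50x50-pixel modulator (the function names and pixel count are illustrative, not taken from the paper):

```python
def sbp_gain(linear_factor, lateral_dims=2):
    """SBP gain when resolution improves by linear_factor along each lateral axis."""
    return linear_factor ** lateral_dims


def resolvable_points(pixels_per_side, linear_factor=1):
    """Number of resolvable points over a square FOV of fixed physical size."""
    side = pixels_per_side * linear_factor
    return side * side


low_res = resolvable_points(50)        # hypothetical 50x50 modulator
super_res = resolvable_points(50, 4)   # same FOV with 4x finer resolution

print(sbp_gain(4), super_res // low_res)
```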

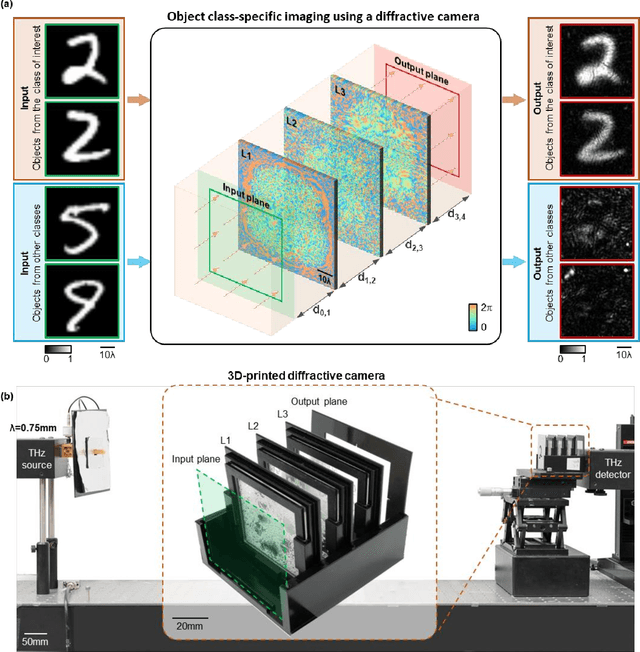

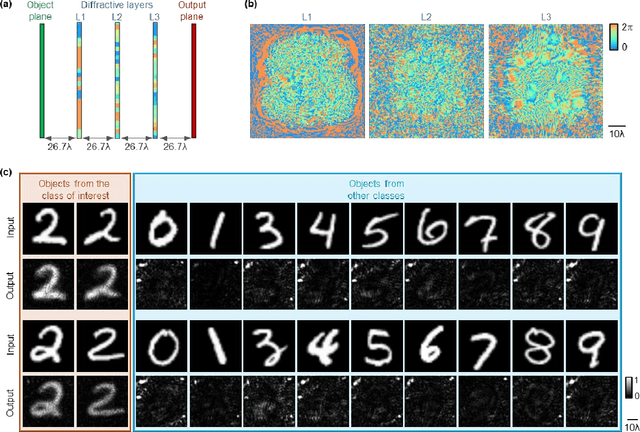

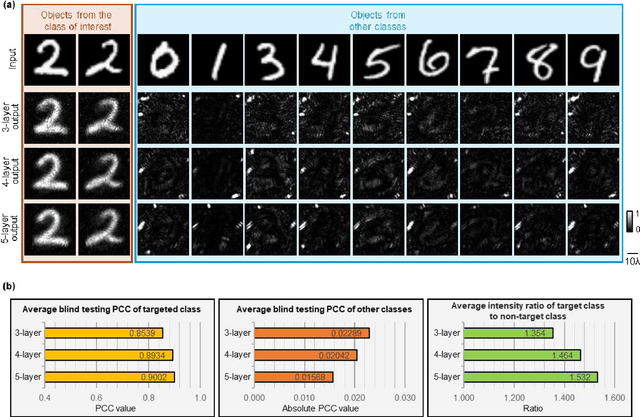

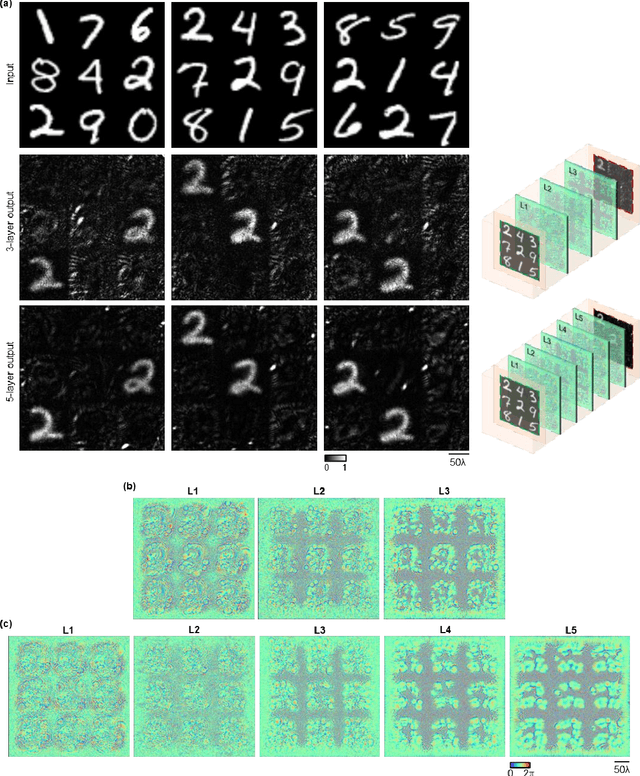

To image, or not to image: Class-specific diffractive cameras with all-optical erasure of undesired objects

May 26, 2022

Abstract:Privacy protection is a growing concern in the digital era, with machine vision techniques widely used throughout public and private settings. Existing methods address this growing problem by, e.g., encrypting camera images or obscuring/blurring the imaged information through digital algorithms. Here, we demonstrate a camera design that performs class-specific imaging of target objects with instantaneous all-optical erasure of other classes of objects. This diffractive camera consists of transmissive surfaces structured using deep learning to perform selective imaging of target classes of objects positioned at its input field-of-view. After their fabrication, the thin diffractive layers collectively perform optical mode filtering to accurately form images of the objects that belong to a target data class or group of classes, while instantaneously erasing objects of the other data classes at the output field-of-view. Using the same framework, we also demonstrate the design of class-specific permutation cameras, where the objects of a target data class are pixel-wise permuted for all-optical class-specific encryption, while the other objects are irreversibly erased from the output image. The success of class-specific diffractive cameras was experimentally demonstrated using terahertz (THz) waves and 3D-printed diffractive layers that selectively imaged only one class of the MNIST handwritten digit dataset, all-optically erasing the other handwritten digits. This diffractive camera design can be scaled to different parts of the electromagnetic spectrum, including, e.g., the visible and infrared wavelengths, to provide transformative opportunities for privacy-preserving digital cameras and task-specific data-efficient imaging.

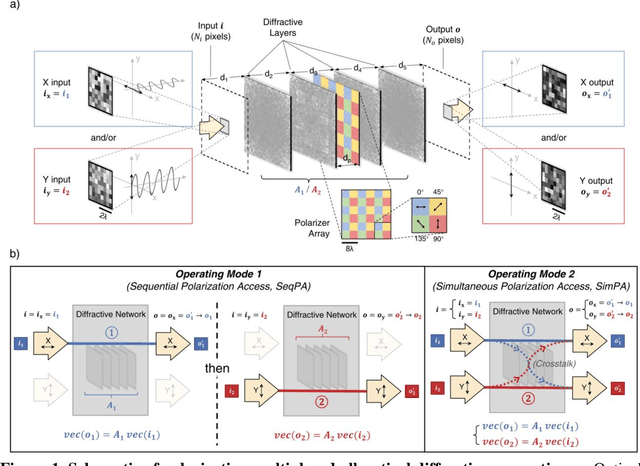

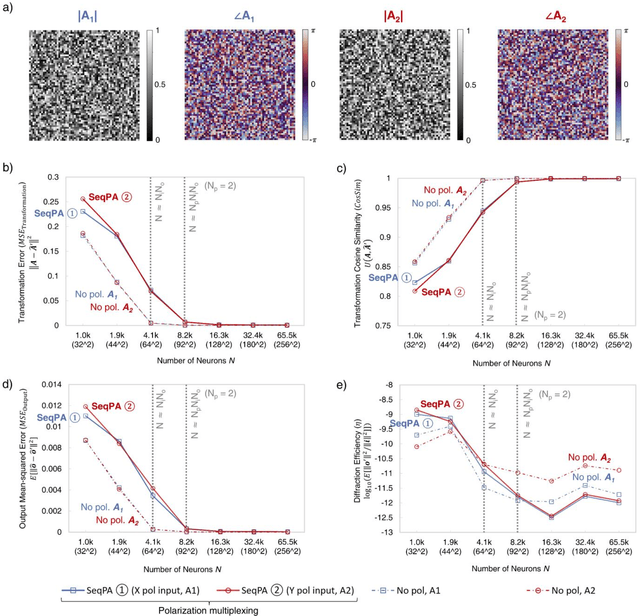

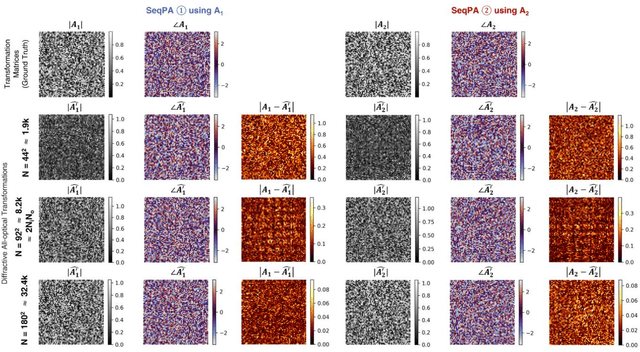

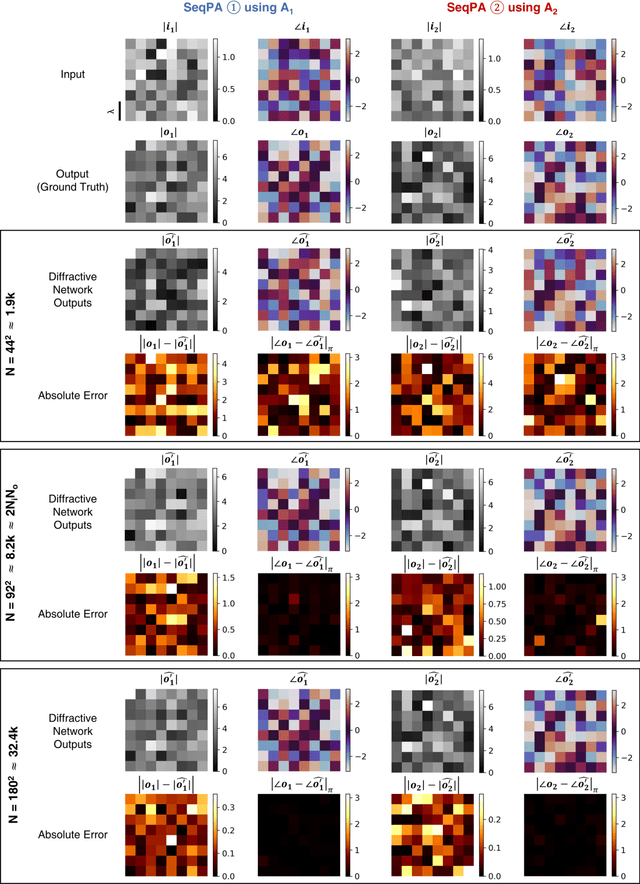

Polarization Multiplexed Diffractive Computing: All-Optical Implementation of a Group of Linear Transformations Through a Polarization-Encoded Diffractive Network

Mar 25, 2022

Abstract:Research on optical computing has recently attracted significant attention due to the transformative advances in machine learning. Among different approaches, diffractive optical networks composed of spatially-engineered transmissive surfaces have been demonstrated for all-optical statistical inference and performing arbitrary linear transformations using passive, free-space optical layers. Here, we introduce a polarization multiplexed diffractive processor to all-optically perform multiple, arbitrarily-selected linear transformations through a single diffractive network trained using deep learning. In this framework, an array of pre-selected linear polarizers is positioned between trainable transmissive diffractive materials that are isotropic, and different target linear transformations (complex-valued) are uniquely assigned to different combinations of input/output polarization states. The transmission layers of this polarization multiplexed diffractive network are trained and optimized via deep learning and error-backpropagation by using thousands of examples of the input/output fields corresponding to each one of the complex-valued linear transformations assigned to different input/output polarization combinations. Our results and analysis reveal that a single diffractive network can successfully approximate and all-optically implement a group of arbitrarily-selected target transformations with a negligible error when the number of trainable diffractive features/neurons (N) approaches N_p x N_i x N_o, where N_i and N_o represent the number of pixels at the input and output fields-of-view, respectively, and N_p refers to the number of unique linear transformations assigned to different input/output polarization combinations. This polarization-multiplexed all-optical diffractive processor can find various applications in optical computing and polarization-based machine vision tasks.
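
The scaling rule N ≈ N_p x N_i x N_o can be turned into a quick sizing estimate. The sketch below assumes hypothetical values (8x8-pixel input and output fields-of-view, four multiplexed transformations, four diffractive layers); the function names are illustrative, and the even split across layers is a simplifying assumption rather than something the abstract specifies.

```python
import math


def required_features(n_p, n_i, n_o):
    """Approximate number of trainable diffractive features: N_p * N_i * N_o."""
    return n_p * n_i * n_o


def features_per_layer(total_features, num_layers):
    """Split the feature budget evenly across layers, rounding up."""
    return math.ceil(total_features / num_layers)


n_i = 8 * 8   # pixels at the input field-of-view (hypothetical)
n_o = 8 * 8   # pixels at the output field-of-view (hypothetical)
n_p = 4       # number of multiplexed linear transformations (hypothetical)

total = required_features(n_p, n_i, n_o)
per_layer = features_per_layer(total, num_layers=4)

print(total, per_layer)
```

With these numbers the budget is 16384 features, or 4096 per layer (a 64x64 grid) when divided over four layers.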
