Cagatay Isil

Snapshot 3D image projection using a diffractive decoder

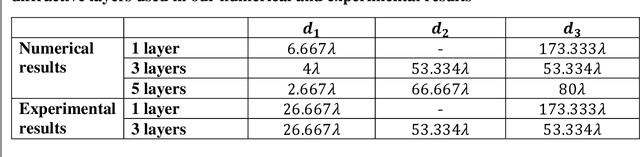

Dec 23, 2025Abstract:3D image display is essential for next-generation volumetric imaging; however, dense depth multiplexing for 3D image projection remains challenging because diffraction-induced cross-talk rapidly increases as the axial image planes get closer. Here, we introduce a 3D display system comprising a digital encoder and a diffractive optical decoder, which simultaneously projects different images onto multiple target axial planes with high axial resolution. By leveraging multi-layer diffractive wavefront decoding and deep learning-based end-to-end optimization, the system achieves high-fidelity depth-resolved 3D image projection in a snapshot, enabling axial plane separations on the order of a wavelength. The digital encoder leverages a Fourier encoder network to capture multi-scale spatial and frequency-domain features from input images, integrates axial position encoding, and generates a unified phase representation that simultaneously encodes all images to be axially projected in a single snapshot through a jointly-optimized diffractive decoder. We characterized the impact of diffractive decoder depth, output diffraction efficiency, spatial light modulator resolution, and axial encoding density, revealing trade-offs that govern axial separation and 3D image projection quality. We further demonstrated the capability to display volumetric images containing 28 axial slices, as well as the ability to dynamically reconfigure the axial locations of the image planes, performed on demand. Finally, we experimentally validated the presented approach, demonstrating close agreement between the measured results and the target images. These results establish the diffractive 3D display system as a compact and scalable framework for depth-resolved snapshot 3D image projection, with potential applications in holographic displays, AR/VR interfaces, and volumetric optical computing.

Deep Plug-and-Play HIO Approach for Phase Retrieval

Nov 28, 2024

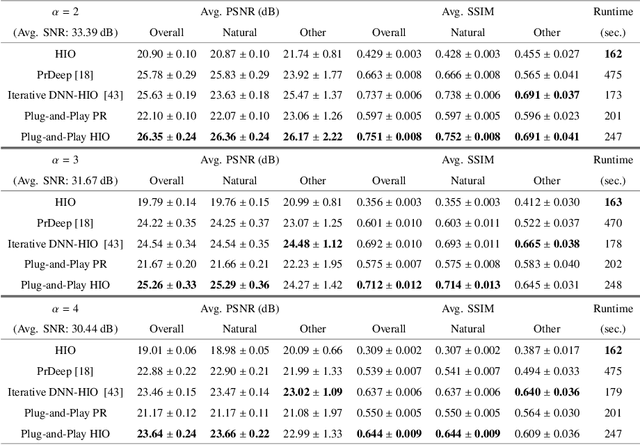

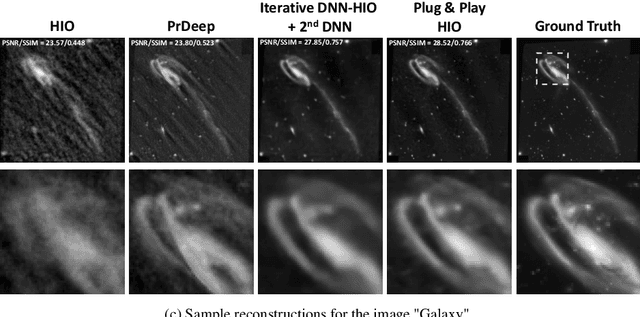

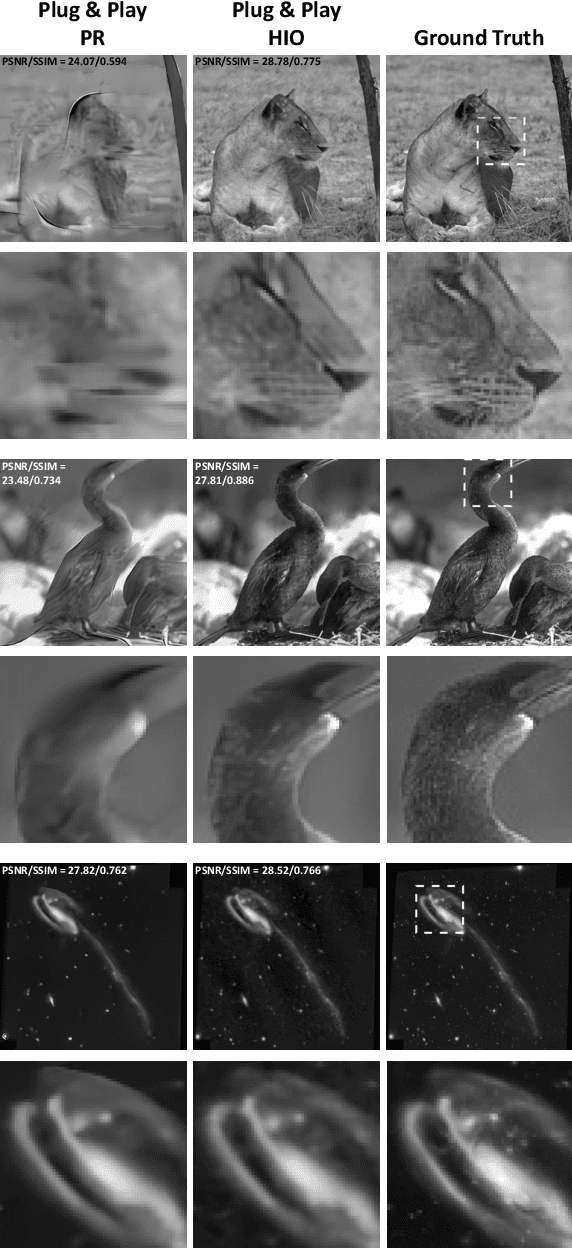

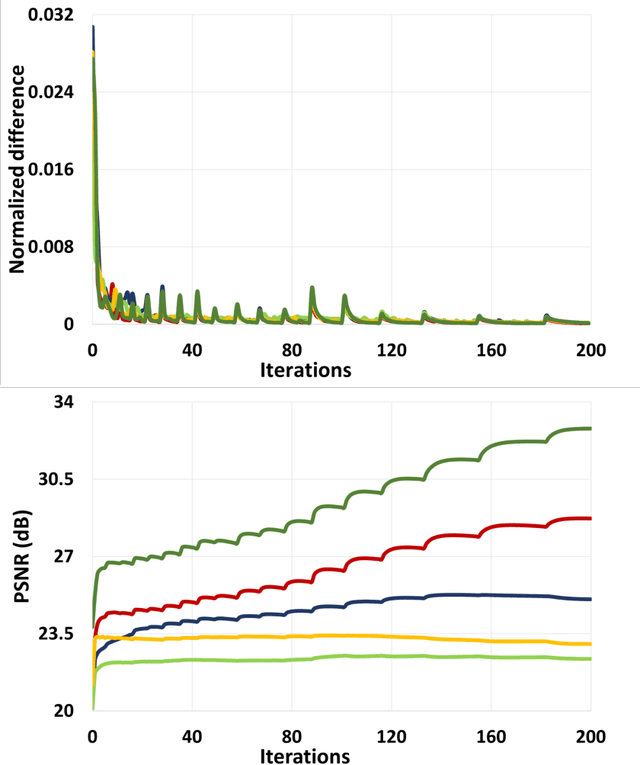

Abstract:In the phase retrieval problem, the aim is the recovery of an unknown image from intensity-only measurements such as Fourier intensity. Although there are several solution approaches, solving this problem is challenging due to its nonlinear and ill-posed nature. Recently, learning-based approaches have emerged as powerful alternatives to the analytical methods for several inverse problems. In the context of phase retrieval, a novel plug-and-play approach that exploits learning-based prior and e!cient update steps has been presented at the Computational Optical Sensing and Imaging topical meeting, with demonstrated state-of-the-art performance. The key idea was to incorporate learning-based prior to the hybrid input-output method (HIO) through plug-and-play regularization. In this paper, we present the mathematical development of the method including the derivation of its analytical update steps based on half-quadratic splitting and comparatively evaluate its performance through extensive simulations on a large test dataset. The results show the e"ectiveness of the method in terms of both image quality, computational e!ciency, and robustness to initialization and noise.

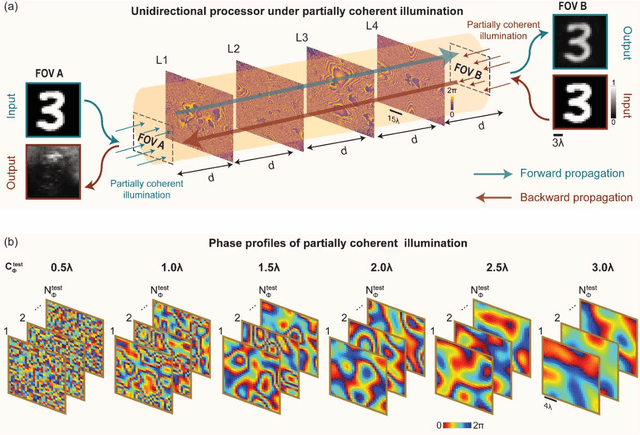

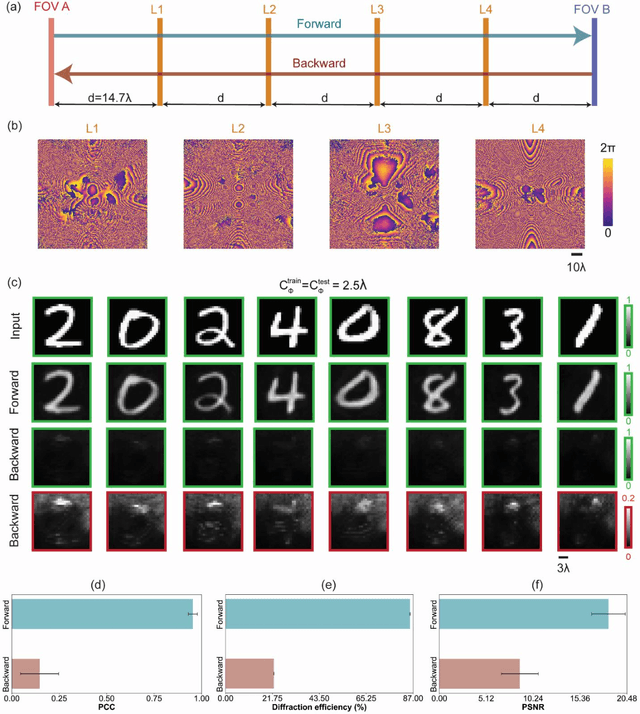

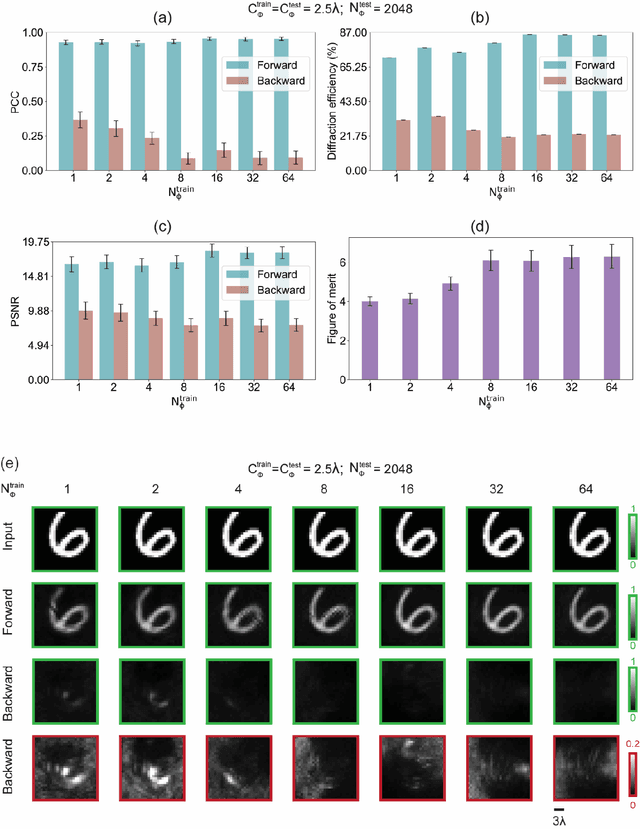

Unidirectional imaging with partially coherent light

Aug 10, 2024

Abstract:Unidirectional imagers form images of input objects only in one direction, e.g., from field-of-view (FOV) A to FOV B, while blocking the image formation in the reverse direction, from FOV B to FOV A. Here, we report unidirectional imaging under spatially partially coherent light and demonstrate high-quality imaging only in the forward direction (A->B) with high power efficiency while distorting the image formation in the backward direction (B->A) along with low power efficiency. Our reciprocal design features a set of spatially engineered linear diffractive layers that are statistically optimized for partially coherent illumination with a given phase correlation length. Our analyses reveal that when illuminated by a partially coherent beam with a correlation length of ~1.5 w or larger, where w is the wavelength of light, diffractive unidirectional imagers achieve robust performance, exhibiting asymmetric imaging performance between the forward and backward directions - as desired. A partially coherent unidirectional imager designed with a smaller correlation length of less than 1.5 w still supports unidirectional image transmission, but with a reduced figure of merit. These partially coherent diffractive unidirectional imagers are compact (axially spanning less than 75 w), polarization-independent, and compatible with various types of illumination sources, making them well-suited for applications in asymmetric visual information processing and communication.

Virtual Gram staining of label-free bacteria using darkfield microscopy and deep learning

Jul 17, 2024

Abstract:Gram staining has been one of the most frequently used staining protocols in microbiology for over a century, utilized across various fields, including diagnostics, food safety, and environmental monitoring. Its manual procedures make it vulnerable to staining errors and artifacts due to, e.g., operator inexperience and chemical variations. Here, we introduce virtual Gram staining of label-free bacteria using a trained deep neural network that digitally transforms darkfield images of unstained bacteria into their Gram-stained equivalents matching brightfield image contrast. After a one-time training effort, the virtual Gram staining model processes an axial stack of darkfield microscopy images of label-free bacteria (never seen before) to rapidly generate Gram staining, bypassing several chemical steps involved in the conventional staining process. We demonstrated the success of the virtual Gram staining workflow on label-free bacteria samples containing Escherichia coli and Listeria innocua by quantifying the staining accuracy of the virtual Gram staining model and comparing the chromatic and morphological features of the virtually stained bacteria against their chemically stained counterparts. This virtual bacteria staining framework effectively bypasses the traditional Gram staining protocol and its challenges, including stain standardization, operator errors, and sensitivity to chemical variations.

Neural Network-Based Processing and Reconstruction of Compromised Biophotonic Image Data

Mar 21, 2024Abstract:The integration of deep learning techniques with biophotonic setups has opened new horizons in bioimaging. A compelling trend in this field involves deliberately compromising certain measurement metrics to engineer better bioimaging tools in terms of cost, speed, and form-factor, followed by compensating for the resulting defects through the utilization of deep learning models trained on a large amount of ideal, superior or alternative data. This strategic approach has found increasing popularity due to its potential to enhance various aspects of biophotonic imaging. One of the primary motivations for employing this strategy is the pursuit of higher temporal resolution or increased imaging speed, critical for capturing fine dynamic biological processes. This approach also offers the prospect of simplifying hardware requirements/complexities, thereby making advanced imaging standards more accessible in terms of cost and/or size. This article provides an in-depth review of the diverse measurement aspects that researchers intentionally impair in their biophotonic setups, including the point spread function, signal-to-noise ratio, sampling density, and pixel resolution. By deliberately compromising these metrics, researchers aim to not only recuperate them through the application of deep learning networks, but also bolster in return other crucial parameters, such as the field-of-view, depth-of-field, and space-bandwidth product. Here, we discuss various biophotonic methods that have successfully employed this strategic approach. These techniques span broad applications and showcase the versatility and effectiveness of deep learning in the context of compromised biophotonic data. Finally, by offering our perspectives on the future possibilities of this rapidly evolving concept, we hope to motivate our readers to explore novel ways of balancing hardware compromises with compensation via AI.

Subwavelength Imaging using a Solid-Immersion Diffractive Optical Processor

Jan 17, 2024Abstract:Phase imaging is widely used in biomedical imaging, sensing, and material characterization, among other fields. However, direct imaging of phase objects with subwavelength resolution remains a challenge. Here, we demonstrate subwavelength imaging of phase and amplitude objects based on all-optical diffractive encoding and decoding. To resolve subwavelength features of an object, the diffractive imager uses a thin, high-index solid-immersion layer to transmit high-frequency information of the object to a spatially-optimized diffractive encoder, which converts/encodes high-frequency information of the input into low-frequency spatial modes for transmission through air. The subsequent diffractive decoder layers (in air) are jointly designed with the encoder using deep-learning-based optimization, and communicate with the encoder layer to create magnified images of input objects at its output, revealing subwavelength features that would otherwise be washed away due to diffraction limit. We demonstrate that this all-optical collaboration between a diffractive solid-immersion encoder and the following decoder layers in air can resolve subwavelength phase and amplitude features of input objects in a highly compact design. To experimentally demonstrate its proof-of-concept, we used terahertz radiation and developed a fabrication method for creating monolithic multi-layer diffractive processors. Through these monolithically fabricated diffractive encoder-decoder pairs, we demonstrated phase-to-intensity transformations and all-optically reconstructed subwavelength phase features of input objects by directly transforming them into magnified intensity features at the output. This solid-immersion-based diffractive imager, with its compact and cost-effective design, can find wide-ranging applications in bioimaging, endoscopy, sensing and materials characterization.

Learning Diffractive Optical Communication Around Arbitrary Opaque Occlusions

Apr 20, 2023Abstract:Free-space optical systems are emerging for high data rate communication and transfer of information in indoor and outdoor settings. However, free-space optical communication becomes challenging when an occlusion blocks the light path. Here, we demonstrate, for the first time, a direct communication scheme, passing optical information around a fully opaque, arbitrarily shaped obstacle that partially or entirely occludes the transmitter's field-of-view. In this scheme, an electronic neural network encoder and a diffractive optical network decoder are jointly trained using deep learning to transfer the optical information or message of interest around the opaque occlusion of an arbitrary shape. The diffractive decoder comprises successive spatially-engineered passive surfaces that process optical information through light-matter interactions. Following its training, the encoder-decoder pair can communicate any arbitrary optical information around opaque occlusions, where information decoding occurs at the speed of light propagation. For occlusions that change their size and/or shape as a function of time, the encoder neural network can be retrained to successfully communicate with the existing diffractive decoder, without changing the physical layer(s) already deployed. We also validate this framework experimentally in the terahertz spectrum using a 3D-printed diffractive decoder to communicate around a fully opaque occlusion. Scalable for operation in any wavelength regime, this scheme could be particularly useful in emerging high data-rate free-space communication systems.

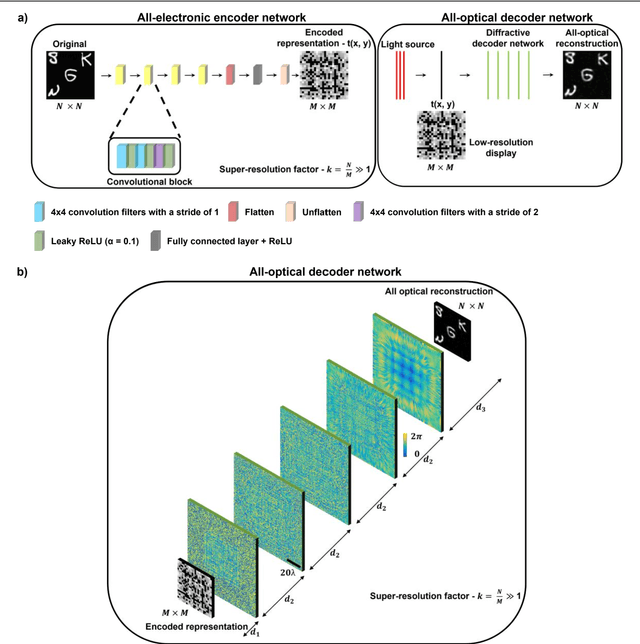

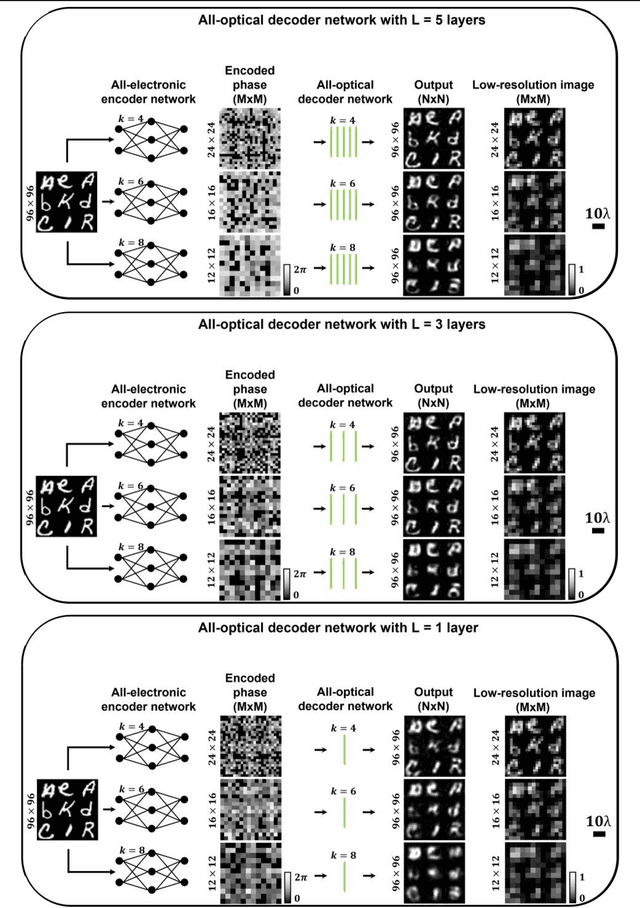

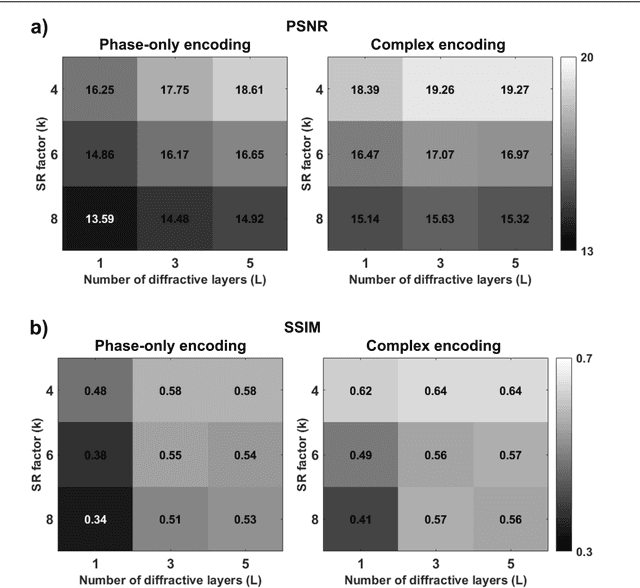

Super-resolution image display using diffractive decoders

Jun 15, 2022

Abstract:High-resolution synthesis/projection of images over a large field-of-view (FOV) is hindered by the restricted space-bandwidth-product (SBP) of wavefront modulators. We report a deep learning-enabled diffractive display design that is based on a jointly-trained pair of an electronic encoder and a diffractive optical decoder to synthesize/project super-resolved images using low-resolution wavefront modulators. The digital encoder, composed of a trained convolutional neural network (CNN), rapidly pre-processes the high-resolution images of interest so that their spatial information is encoded into low-resolution (LR) modulation patterns, projected via a low SBP wavefront modulator. The diffractive decoder processes this LR encoded information using thin transmissive layers that are structured using deep learning to all-optically synthesize and project super-resolved images at its output FOV. Our results indicate that this diffractive image display can achieve a super-resolution factor of ~4, demonstrating a ~16-fold increase in SBP. We also experimentally validate the success of this diffractive super-resolution display using 3D-printed diffractive decoders that operate at the THz spectrum. This diffractive image decoder can be scaled to operate at visible wavelengths and inspire the design of large FOV and high-resolution displays that are compact, low-power, and computationally efficient.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge