Jingxi Li

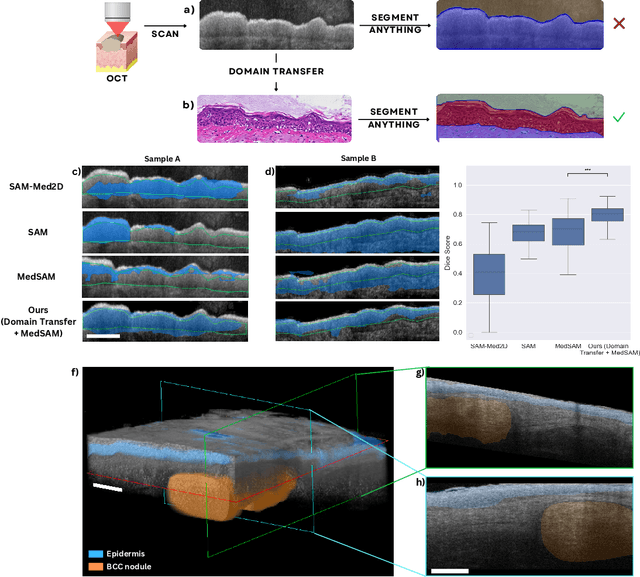

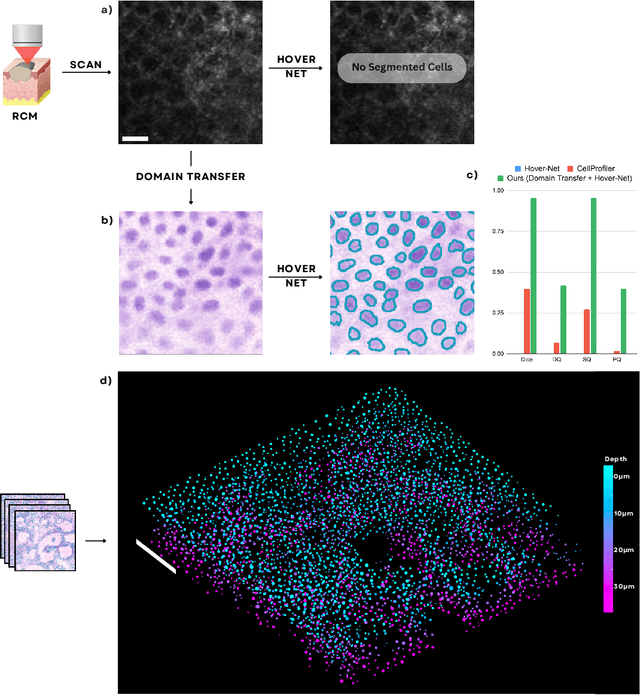

Leveraging Computational Pathology AI for Noninvasive Optical Imaging Analysis Without Retraining

Nov 19, 2024

Abstract:Noninvasive optical imaging modalities can probe a patient's tissue in 3D and, over time, generate gigabytes of clinically relevant data per sample. There is a need for AI models to analyze this data and assist clinical workflows. The lack of expert labelers and the large dataset required (>100,000 images) for model training and tuning are the main hurdles in creating foundation models. In this paper we introduce FoundationShift, a method to apply any AI model from computational pathology without retraining. We show our method is more accurate than state-of-the-art models (SAM, MedSAM, SAM-Med2D, CellProfiler, Hover-Net, PLIP, UNI and ChatGPT), with multiple imaging modalities (OCT and RCM). This is achieved without the need for model retraining or fine-tuning. Applying our method to noninvasive in vivo images could enable physicians to readily incorporate optical imaging modalities into their clinical practice, providing real-time tissue analysis and improving patient care.

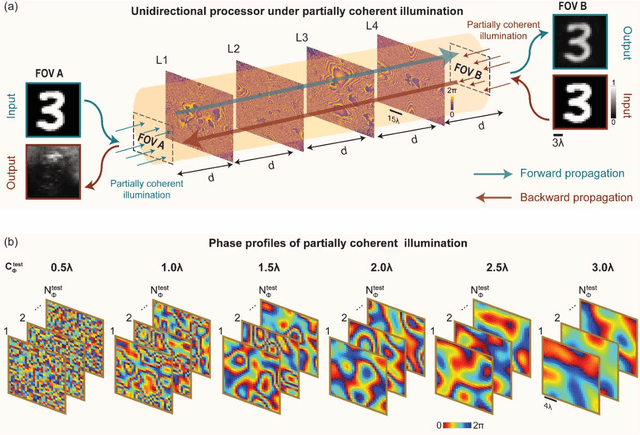

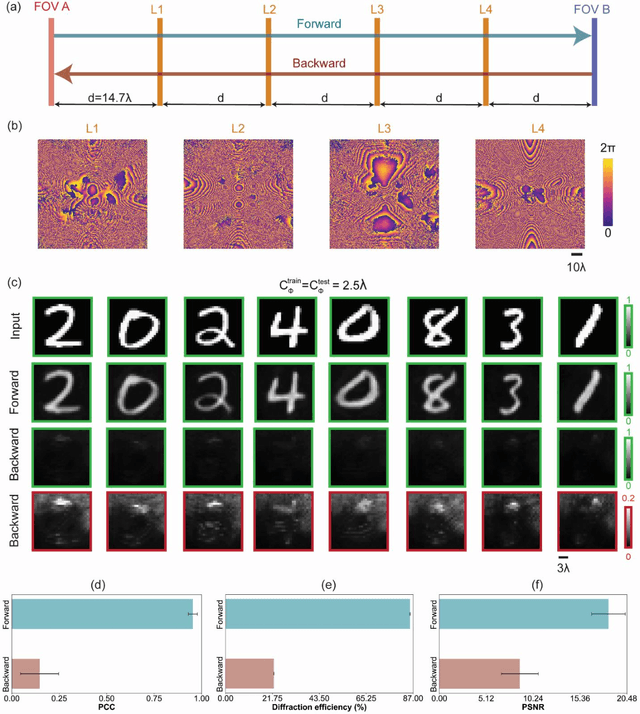

Unidirectional imaging with partially coherent light

Aug 10, 2024

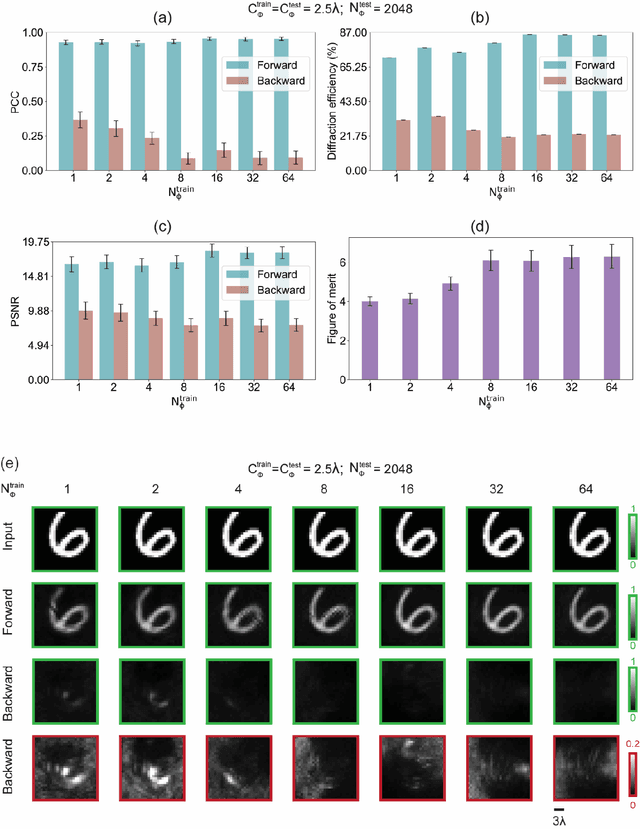

Abstract:Unidirectional imagers form images of input objects only in one direction, e.g., from field-of-view (FOV) A to FOV B, while blocking the image formation in the reverse direction, from FOV B to FOV A. Here, we report unidirectional imaging under spatially partially coherent light and demonstrate high-quality imaging only in the forward direction (A->B) with high power efficiency while distorting the image formation in the backward direction (B->A) along with low power efficiency. Our reciprocal design features a set of spatially engineered linear diffractive layers that are statistically optimized for partially coherent illumination with a given phase correlation length. Our analyses reveal that when illuminated by a partially coherent beam with a correlation length of ~1.5 w or larger, where w is the wavelength of light, diffractive unidirectional imagers achieve robust performance, exhibiting asymmetric imaging performance between the forward and backward directions - as desired. A partially coherent unidirectional imager designed with a smaller correlation length of less than 1.5 w still supports unidirectional image transmission, but with a reduced figure of merit. These partially coherent diffractive unidirectional imagers are compact (axially spanning less than 75 w), polarization-independent, and compatible with various types of illumination sources, making them well-suited for applications in asymmetric visual information processing and communication.

Sports Intelligence: Assessing the Sports Understanding Capabilities of Language Models through Question Answering from Text to Video

Jun 21, 2024

Abstract:Understanding sports is crucial for the advancement of Natural Language Processing (NLP) due to its intricate and dynamic nature. Reasoning over complex sports scenarios, which requires advanced cognitive capabilities, has posed significant challenges to current NLP technologies. Toward addressing the limitations of existing benchmarks on sports understanding in the NLP field, we extensively evaluated mainstream large language models for various sports tasks. Our evaluation spans from simple queries on basic rules and historical facts to complex, context-specific reasoning, leveraging strategies from zero-shot to few-shot learning, and chain-of-thought techniques. In addition to unimodal analysis, we further assessed the sports reasoning capabilities of mainstream video language models to bridge the gap in multimodal sports understanding benchmarking. Our findings highlighted the critical challenges of sports understanding for NLP. We proposed a new benchmark based on a comprehensive overview of existing sports datasets and provided extensive error analysis which we hope can help identify future research priorities in this field.

Language and Multimodal Models in Sports: A Survey of Datasets and Applications

Jun 18, 2024

Abstract:Recent integration of Natural Language Processing (NLP) and multimodal models has advanced the field of sports analytics. This survey presents a comprehensive review of the datasets and applications driving these innovations post-2020. We overviewed and categorized datasets into three primary types: language-based, multimodal, and convertible datasets. Language-based and multimodal datasets are for tasks involving text or multimodality (e.g., text, video, audio), respectively. Convertible datasets, initially single-modal (video), can be enriched with additional annotations, such as explanations of actions and video descriptions, to become multimodal, offering future potential for richer and more diverse applications. Our study highlights the contributions of these datasets to various applications, from improving fan experiences to supporting tactical analysis and medical diagnostics. We also discuss the challenges and future directions in dataset development, emphasizing the need for diverse, high-quality data to support real-time processing and personalized user experiences. This survey provides a foundational resource for researchers and practitioners aiming to leverage NLP and multimodal models in sports, offering insights into current trends and future opportunities in the field.

Multiplane Quantitative Phase Imaging Using a Wavelength-Multiplexed Diffractive Optical Processor

Mar 16, 2024

Abstract:Quantitative phase imaging (QPI) is a label-free technique that provides optical path length information for transparent specimens, finding utility in biology, materials science, and engineering. Here, we present quantitative phase imaging of a 3D stack of phase-only objects using a wavelength-multiplexed diffractive optical processor. Utilizing multiple spatially engineered diffractive layers trained through deep learning, this diffractive processor can transform the phase distributions of multiple 2D objects at various axial positions into intensity patterns, each encoded at a unique wavelength channel. These wavelength-multiplexed patterns are projected onto a single field-of-view (FOV) at the output plane of the diffractive processor, enabling the capture of quantitative phase distributions of input objects located at different axial planes using an intensity-only image sensor. Based on numerical simulations, we show that our diffractive processor could simultaneously achieve all-optical quantitative phase imaging across several distinct axial planes at the input by scanning the illumination wavelength. A proof-of-concept experiment with a 3D-fabricated diffractive processor further validated our approach, showcasing successful imaging of two distinct phase objects at different axial positions by scanning the illumination wavelength in the terahertz spectrum. Diffractive network-based multiplane QPI designs can open up new avenues for compact on-chip phase imaging and sensing devices.

Multiplexed all-optical permutation operations using a reconfigurable diffractive optical network

Feb 04, 2024

Abstract:Large-scale and high-dimensional permutation operations are important for various applications in, e.g., telecommunications and encryption. Here, we demonstrate the use of all-optical diffractive computing to execute a set of high-dimensional permutation operations between an input and output field-of-view through layer rotations in a diffractive optical network. In this reconfigurable multiplexed material designed by deep learning, every diffractive layer has four orientations: 0, 90, 180, and 270 degrees. Each unique combination of these rotatable layers represents a distinct rotation state of the diffractive design tailored for a specific permutation operation. Therefore, a K-layer rotatable diffractive material is capable of all-optically performing up to 4^K independent permutation operations. The original input information can be decrypted by applying the specific inverse permutation matrix to output patterns, while applying other inverse operations will lead to loss of information. We demonstrated the feasibility of this reconfigurable multiplexed diffractive design by approximating 256 randomly selected permutation matrices using K=4 rotatable diffractive layers. We also experimentally validated this reconfigurable diffractive network using terahertz radiation and 3D-printed diffractive layers, providing a decent match to our numerical results. The presented rotation-multiplexed diffractive processor design is particularly useful due to its mechanical reconfigurability, offering multifunctional representation through a single fabrication process.
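
The counting argument in this abstract (four orientations per layer, hence 4^K rotation states) and the decryption step (applying the matching inverse permutation) can be illustrated with a small digital sketch. This is only an illustration under stated assumptions, not the optical design: the helper names, the 16-element signal, and the randomly drawn permutations assigned to each rotation state are all hypothetical.

```python
import itertools
import random

# The four layer orientations named in the abstract (degrees).
ORIENTATIONS = (0, 90, 180, 270)


def rotation_states(num_layers):
    """Enumerate every combination of layer orientations (4**num_layers states)."""
    return list(itertools.product(ORIENTATIONS, repeat=num_layers))


def apply_permutation(perm, values):
    """Return a list whose element i is values[perm[i]]."""
    return [values[p] for p in perm]


def invert_permutation(perm):
    """Return the permutation that undoes `perm`."""
    inverse = [0] * len(perm)
    for out_idx, in_idx in enumerate(perm):
        inverse[in_idx] = out_idx
    return inverse


states = rotation_states(4)  # K = 4 rotatable layers
print(len(states))  # 256

# Assumption: one random permutation per rotation state, standing in for
# the permutation that each physical rotation state would approximate.
rng = random.Random(0)
signal_size = 16
perms = {}
for state in states:
    perm = list(range(signal_size))
    rng.shuffle(perm)
    perms[state] = perm

message = list(range(100, 100 + signal_size))
key = (0, 90, 180, 270)
scrambled = apply_permutation(perms[key], message)

# Decrypting with the matching inverse permutation recovers the input.
recovered = apply_permutation(invert_permutation(perms[key]), scrambled)

# Decrypting with the inverse of a different state's permutation does not
# undo the scrambling in general.
wrong_key = (90, 90, 90, 90)
garbled = apply_permutation(invert_permutation(perms[wrong_key]), scrambled)
```

Here `recovered` equals `message`, while `garbled` is still a scrambled ordering unless the two states happen to share a permutation.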

All-optical complex field imaging using diffractive processors

Jan 30, 2024

Abstract:Complex field imaging, which captures both the amplitude and phase information of input optical fields or objects, can offer rich structural insights into samples, such as their absorption and refractive index distributions. However, conventional image sensors are intensity-based and inherently lack the capability to directly measure the phase distribution of a field. This limitation can be overcome using interferometric or holographic methods, often supplemented by iterative phase retrieval algorithms, leading to a considerable increase in hardware complexity and computational demand. Here, we present a complex field imager design that enables snapshot imaging of both the amplitude and quantitative phase information of input fields using an intensity-based sensor array without any digital processing. Our design utilizes successive deep learning-optimized diffractive surfaces that are structured to collectively modulate the input complex field, forming two independent imaging channels that perform amplitude-to-amplitude and phase-to-intensity transformations between the input and output planes within a compact optical design, axially spanning ~100 wavelengths. The intensity distributions of the output fields at these two channels on the sensor plane directly correspond to the amplitude and quantitative phase profiles of the input complex field, eliminating the need for any digital image reconstruction algorithms. We experimentally validated the efficacy of our complex field diffractive imager designs through 3D-printed prototypes operating at the terahertz spectrum, with the output amplitude and phase channel images closely aligning with our numerical simulations. We envision that this complex field imager will have various applications in security, biomedical imaging, sensing and material science, among others.
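
The limitation this abstract starts from, that an intensity-based sensor cannot see phase directly, can be shown with a few lines of arithmetic. The sketch below is a minimal numerical illustration and not the diffractive imager itself; the amplitude and phase values are arbitrary assumptions.

```python
import cmath

# Two hypothetical field samples with the same amplitude but different phase.
amplitude = 0.8
field_a = cmath.rect(amplitude, 0.3)  # amplitude 0.8, phase 0.3 rad
field_b = cmath.rect(amplitude, 2.1)  # amplitude 0.8, phase 2.1 rad

# An intensity-based sensor records |field|^2, so the phase drops out.
intensity_a = abs(field_a) ** 2
intensity_b = abs(field_b) ** 2

# The two fields are clearly different as complex numbers...
fields_differ = abs(field_a - field_b) > 1e-6
# ...yet their recorded intensities coincide (up to rounding).
intensities_match = abs(intensity_a - intensity_b) < 1e-12

# Amplitude is recoverable from intensity alone; phase is not.
recovered_amplitude = intensity_a ** 0.5
```

This is why the phase channel described in the abstract has to map phase to intensity optically before the sensor, rather than relying on the sensor reading alone.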

Subwavelength Imaging using a Solid-Immersion Diffractive Optical Processor

Jan 17, 2024

Abstract:Phase imaging is widely used in biomedical imaging, sensing, and material characterization, among other fields. However, direct imaging of phase objects with subwavelength resolution remains a challenge. Here, we demonstrate subwavelength imaging of phase and amplitude objects based on all-optical diffractive encoding and decoding. To resolve subwavelength features of an object, the diffractive imager uses a thin, high-index solid-immersion layer to transmit high-frequency information of the object to a spatially-optimized diffractive encoder, which converts/encodes high-frequency information of the input into low-frequency spatial modes for transmission through air. The subsequent diffractive decoder layers (in air) are jointly designed with the encoder using deep-learning-based optimization, and communicate with the encoder layer to create magnified images of input objects at its output, revealing subwavelength features that would otherwise be washed away due to the diffraction limit. We demonstrate that this all-optical collaboration between a diffractive solid-immersion encoder and the following decoder layers in air can resolve subwavelength phase and amplitude features of input objects in a highly compact design. To experimentally demonstrate its proof-of-concept, we used terahertz radiation and developed a fabrication method for creating monolithic multi-layer diffractive processors. Through these monolithically fabricated diffractive encoder-decoder pairs, we demonstrated phase-to-intensity transformations and all-optically reconstructed subwavelength phase features of input objects by directly transforming them into magnified intensity features at the output. This solid-immersion-based diffractive imager, with its compact and cost-effective design, can find wide-ranging applications in bioimaging, endoscopy, sensing and materials characterization.

All-Optical Phase Conjugation Using Diffractive Wavefront Processing

Nov 08, 2023

Abstract:Optical phase conjugation (OPC) is a nonlinear technique used for counteracting wavefront distortions, with various applications ranging from imaging to beam focusing. Here, we present the design of a diffractive wavefront processor to approximate all-optical phase conjugation operation for input fields with phase aberrations. Leveraging deep learning, a set of passive diffractive layers was optimized to all-optically process an arbitrary phase-aberrated coherent field from an input aperture, producing an output field with a phase distribution that is the conjugate of the input wave. We experimentally validated the efficacy of this wavefront processor by 3D fabricating diffractive layers trained using deep learning and performing OPC on phase distortions never seen by the diffractive processor during its training. Employing terahertz radiation, our physical diffractive processor successfully performed the OPC task through a shallow spatially-engineered volume that axially spans tens of wavelengths. In addition to this transmissive OPC configuration, we also created a diffractive phase-conjugate mirror by combining deep learning-optimized diffractive layers with a standard mirror. Given its compact, passive and scalable nature, our diffractive wavefront processor can be used for diverse OPC-related applications, e.g., turbidity suppression and aberration correction, and is also adaptable to different parts of the electromagnetic spectrum, especially those where cost-effective wavefront engineering solutions do not exist.
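
The target operation described here, an output whose phase is the conjugate of the input phase, and the reason it counteracts wavefront distortions can be sketched numerically. The code below models only the ideal mathematical operation, not the diffractive processor that approximates it; the eight-sample unit-amplitude field and the random aberration values are assumptions made for illustration.

```python
import cmath
import random

rng = random.Random(1)

# Hypothetical phase aberration (radians) at eight sample points.
aberration = [rng.uniform(-cmath.pi, cmath.pi) for _ in range(8)]

# A unit-amplitude coherent field that has picked up the aberration.
field_in = [cmath.exp(1j * phi) for phi in aberration]

# Ideal phase conjugation: each sample's phase is negated.
field_out = [z.conjugate() for z in field_in]

# Sending the conjugated field through the same aberration again
# multiplies each sample by exp(i * phi), which cancels the distortion.
restored = [out * cmath.exp(1j * phi) for out, phi in zip(field_out, aberration)]

# Largest remaining phase error across the samples (close to zero).
residual_phase = max(abs(cmath.phase(z)) for z in restored)
```

The near-zero `residual_phase` reflects the cancellation that makes phase conjugation useful for applications such as the aberration correction mentioned in the abstract.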

Complex-valued universal linear transformations and image encryption using spatially incoherent diffractive networks

Oct 05, 2023

Abstract:As an optical processor, a Diffractive Deep Neural Network (D2NN) utilizes engineered diffractive surfaces designed through machine learning to perform all-optical information processing, completing its tasks at the speed of light propagation through thin optical layers. With sufficient degrees-of-freedom, D2NNs can perform arbitrary complex-valued linear transformations using spatially coherent light. Similarly, D2NNs can also perform arbitrary linear intensity transformations with spatially incoherent illumination; however, under spatially incoherent light, these transformations are non-negative, acting on diffraction-limited optical intensity patterns at the input field-of-view (FOV). Here, we expand the use of spatially incoherent D2NNs to complex-valued information processing for executing arbitrary complex-valued linear transformations using spatially incoherent light. Through simulations, we show that as the number of optimized diffractive features increases beyond a threshold dictated by the multiplication of the input and output space-bandwidth products, a spatially incoherent diffractive visual processor can approximate any complex-valued linear transformation and be used for all-optical image encryption using incoherent illumination. The findings are important for the all-optical processing of information under natural light using various forms of diffractive surface-based optical processors.
