Yujie Chen

Kimi K2.5: Visual Agentic Intelligence

Feb 02, 2026

Abstract: We introduce Kimi K2.5, an open-source multimodal agentic model designed to advance general agentic intelligence. K2.5 emphasizes the joint optimization of text and vision so that the two modalities enhance each other. This includes a series of techniques such as joint text-vision pre-training, zero-vision SFT, and joint text-vision reinforcement learning. Building on this multimodal foundation, K2.5 introduces Agent Swarm, a self-directed parallel agent orchestration framework that dynamically decomposes complex tasks into heterogeneous sub-problems and executes them concurrently. Extensive evaluations show that Kimi K2.5 achieves state-of-the-art results across various domains including coding, vision, reasoning, and agentic tasks. Agent Swarm also reduces latency by up to $4.5\times$ over single-agent baselines. We release the post-trained Kimi K2.5 model checkpoint to facilitate future research and real-world applications of agentic intelligence.

Unifying Speech Editing Detection and Content Localization via Prior-Enhanced Audio LLMs

Jan 29, 2026

Abstract: Speech editing achieves semantic inversion by performing fine-grained segment-level manipulation on original utterances, while preserving global perceptual naturalness. Existing detection studies mainly focus on manually edited speech with explicit splicing artifacts, and therefore struggle to cope with emerging end-to-end neural speech editing techniques that generate seamless acoustic transitions. To address this challenge, we first construct a large-scale bilingual dataset, AiEdit, which leverages large language models to drive precise semantic tampering logic and employs multiple advanced neural speech editing methods for data synthesis, thereby filling the gap of high-quality speech editing datasets. Building upon this foundation, we propose PELM (Prior-Enhanced Audio Large Language Model), the first large-model framework that unifies speech editing detection and content localization by formulating them as an audio question answering task. To mitigate the inherent forgery bias and semantic-priority bias observed in existing audio large models, PELM incorporates word-level probability priors to provide explicit acoustic cues, and further designs a centroid-aggregation-based acoustic consistency perception loss to explicitly enforce the modeling of subtle local distribution anomalies. Extensive experimental results demonstrate that PELM significantly outperforms state-of-the-art methods on both the HumanEdit and AiEdit datasets, achieving equal error rates (EER) of 0.57\% and 9.28\% (localization), respectively.
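For readers unfamiliar with the metric, the equal error rate is the operating point at which the false acceptance rate equals the false rejection rate. The following is a minimal sketch in plain Python, not PELM's evaluation code: the function name `equal_error_rate`, the threshold sweep, and the scores are all made up for illustration.

```python
def equal_error_rate(genuine_scores, edited_scores):
    """Approximate the EER by sweeping every observed score as a threshold.

    Convention assumed here: a higher score means "more likely genuine".
    Returns (eer, threshold) at the point where FAR and FRR are closest.
    """
    thresholds = sorted(set(genuine_scores) | set(edited_scores))
    best = None
    for t in thresholds:
        # False rejection: genuine audio scored below the threshold.
        frr = sum(s < t for s in genuine_scores) / len(genuine_scores)
        # False acceptance: edited audio scored at or above the threshold.
        far = sum(s >= t for s in edited_scores) / len(edited_scores)
        gap = abs(far - frr)
        if best is None or gap < best[0]:
            best = (gap, (far + frr) / 2, t)
    return best[1], best[2]


# Hypothetical detector scores, for illustration only.
genuine = [0.9, 0.8, 0.75, 0.6, 0.4]
edited = [0.7, 0.3, 0.2, 0.1, 0.05]
eer, threshold = equal_error_rate(genuine, edited)
print(f"EER = {eer:.2%} at threshold {threshold}")
```

A lower EER means the detector separates genuine and edited speech more cleanly. How scores are pooled for the localization figure (per word, per frame, or otherwise) is not stated in the abstract, so this sketch makes no claim about it.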

WorldVQA: Measuring Atomic World Knowledge in Multimodal Large Language Models

Jan 28, 2026

Abstract: We introduce WorldVQA, a benchmark designed to evaluate the atomic visual world knowledge of Multimodal Large Language Models (MLLMs). Unlike current evaluations, which often conflate visual knowledge retrieval with reasoning, WorldVQA decouples these capabilities to strictly measure "what the model memorizes." The benchmark assesses the atomic capability of grounding and naming visual entities across a stratified taxonomy, spanning from common head-class objects to long-tail rarities. We expect WorldVQA to serve as a rigorous test for visual factuality, thereby establishing a standard for assessing the encyclopedic breadth and hallucination rates of current and next-generation frontier models.

Mitigating Audiovisual Mismatch in Visual-Guide Audio Captioning

May 28, 2025

Abstract: Current vision-guided audio captioning systems frequently fail to address audiovisual misalignment in real-world scenarios, such as dubbed content or off-screen sounds. To bridge this critical gap, we present an entropy-aware gated fusion framework that dynamically modulates visual information flow through cross-modal uncertainty quantification. Our novel approach employs attention entropy analysis in cross-attention layers to automatically identify and suppress misleading visual cues during modal fusion. Complementing this architecture, we develop a batch-wise audiovisual shuffling technique that generates synthetic mismatched training pairs, greatly enhancing model resilience against alignment noise. Evaluations on the AudioCaps benchmark demonstrate our system's superior performance over existing baselines, especially in mismatched modality scenarios. Furthermore, our solution demonstrates an approximately 6x improvement in inference speed compared to the baseline.
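The two ideas in this abstract, gating on attention entropy and shuffling visuals within a batch, can be sketched roughly as follows. This is an illustrative toy in plain Python, not the authors' implementation: the gate formula (one minus normalized entropy), the `mismatch_ratio` parameter, and all function names are assumptions introduced here.

```python
import math
import random


def attention_entropy(weights):
    """Shannon entropy (in nats) of one attention distribution."""
    return -sum(w * math.log(w) for w in weights if w > 0.0)


def visual_gate(weights):
    """Map attention entropy to a gate value in [0, 1].

    Assumption: flat (high-entropy) cross-attention suggests the visual
    stream is uninformative or mismatched, so the gate closes toward 0.
    """
    max_entropy = math.log(len(weights))  # entropy of a uniform distribution
    if max_entropy == 0.0:
        return 1.0
    return 1.0 - attention_entropy(weights) / max_entropy


def shuffle_visual(batch, mismatch_ratio, rng):
    """Create synthetic mismatched (audio, visual) pairs within a batch.

    A random subset of items has its visual inputs rotated by one position,
    so every chosen item ends up with another item's visual input.
    """
    k = int(len(batch) * mismatch_ratio)
    if k < 2:
        return list(batch)
    chosen = rng.sample(range(len(batch)), k)
    rotated = chosen[1:] + chosen[:1]
    out = list(batch)
    for dst, src in zip(chosen, rotated):
        out[dst] = (batch[dst][0], batch[src][1])
    return out


peaked = [0.97, 0.01, 0.01, 0.01]   # attention locked onto one visual token
flat = [0.25, 0.25, 0.25, 0.25]     # attention spread evenly
print(visual_gate(peaked), visual_gate(flat))

batch = [("a0", "v0"), ("a1", "v1"), ("a2", "v2"), ("a3", "v3")]
mixed = shuffle_visual(batch, mismatch_ratio=0.5, rng=random.Random(0))
print(mixed)
```

In a real model the gate would scale the visual features before fusion and the shuffled pairs would be fed through training as negatives for alignment; both steps are omitted here.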

Hearing from Silence: Reasoning Audio Descriptions from Silent Videos via Vision-Language Model

May 19, 2025

Abstract: Humans can intuitively infer sounds from silent videos, but whether multimodal large language models can perform modal-mismatch reasoning without accessing target modalities remains relatively unexplored. Current text-assisted-video-to-audio (VT2A) methods excel in video foley tasks but struggle to acquire audio descriptions during inference. We introduce the task of Reasoning Audio Descriptions from Silent Videos (SVAD) to address this challenge and investigate vision-language models' (VLMs) capabilities on this task. To further enhance the VLMs' reasoning capacity for the SVAD task, we construct a CoT-AudioCaps dataset and propose a Chain-of-Thought-based supervised fine-tuning strategy. Experiments on SVAD and subsequent VT2A tasks demonstrate our method's effectiveness in two key aspects: significantly improving VLMs' modal-mismatch reasoning for SVAD and effectively addressing the challenge of acquiring audio descriptions during VT2A inference.

$\mathcal{A}LLM4ADD$: Unlocking the Capabilities of Audio Large Language Models for Audio Deepfake Detection

May 16, 2025

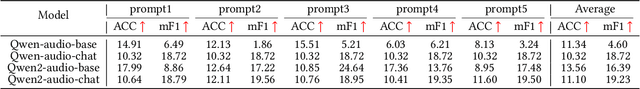

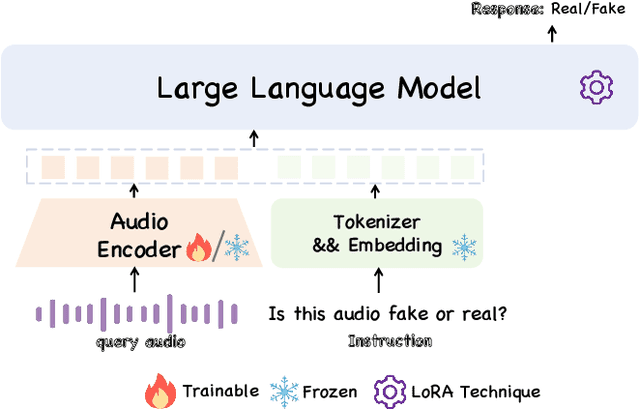

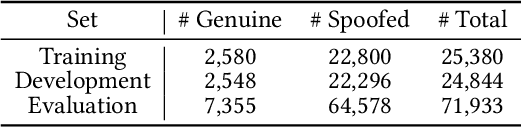

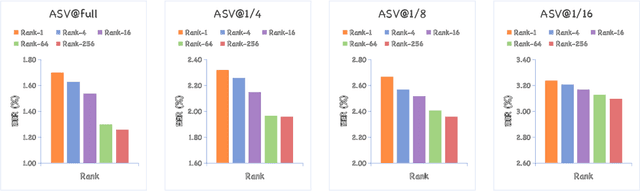

Abstract: Audio deepfake detection (ADD) has grown increasingly important due to the rise of high-fidelity audio generative models and their potential for misuse. Given that audio large language models (ALLMs) have made significant progress in various audio processing tasks, a heuristic question arises: Can ALLMs be leveraged to solve ADD? In this paper, we first conduct a comprehensive zero-shot evaluation of ALLMs on ADD, revealing their ineffectiveness in detecting fake audio. To enhance their performance, we propose $\mathcal{A}LLM4ADD$, an ALLM-driven framework for ADD. Specifically, we reformulate the ADD task as an audio question answering problem, prompting the model with the question "Is this audio fake or real?" We then perform supervised fine-tuning to enable the ALLM to assess the authenticity of query audio. Extensive experiments demonstrate that our ALLM-based method achieves superior performance in fake audio detection, particularly in data-scarce scenarios. As a pioneering study, we anticipate that this work will inspire the research community to leverage ALLMs to develop more effective ADD systems.

Q-MambaIR: Accurate Quantized Mamba for Efficient Image Restoration

Mar 27, 2025

Abstract: State-Space Models (SSMs) have attracted considerable attention in Image Restoration (IR) due to their ability to scale linearly with sequence length while effectively capturing long-distance dependencies. However, deploying SSMs to edge devices is challenging due to constraints in memory, computing capacity, and power consumption, underscoring the need for efficient compression strategies. While low-bit quantization is an efficient model compression strategy for reducing size and accelerating IR tasks, SSMs suffer substantial performance drops at ultra-low bit-widths (2-4 bits), primarily due to outliers that exacerbate quantization error. To address this challenge, we propose Q-MambaIR, an accurate, efficient, and flexible Quantized Mamba for IR tasks. Specifically, we introduce a Statistical Dynamic-balancing Learnable Scalar (DLS) to dynamically adjust the quantization mapping range, thereby mitigating the peak truncation loss caused by extreme values. Furthermore, we design a Range-floating Flexible Allocator (RFA) with an adaptive threshold to flexibly round values. This approach preserves high-frequency details and maintains the SSM's feature extraction capability. Notably, RFA also enables pre-deployment weight quantization, striking a balance between computational efficiency and model accuracy. Extensive experiments on IR tasks demonstrate that Q-MambaIR consistently outperforms existing quantized SSMs, achieving state-of-the-art (SOTA) accuracy with only a negligible increase in training computation and storage.
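The tension the abstract describes, where outliers stretch the quantization range and coarsen every other value, can be seen in a few lines. The sketch below is plain Python and implements neither DLS nor RFA: it uses ordinary symmetric uniform quantization, and `tight_clip` is a hand-picked stand-in for a range that a method like DLS would learn. The weights are made up.

```python
def quantize(values, clip, bits):
    """Symmetric uniform quantization to `bits` bits over [-clip, clip]."""
    levels = 2 ** (bits - 1) - 1            # e.g. 7 positive levels at 4 bits
    step = clip / levels
    out = []
    for v in values:
        clipped = max(-clip, min(clip, v))  # truncate values outside the range
        out.append(round(clipped / step) * step)
    return out


def mean_abs_error(a, b):
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


# Hypothetical weights; the last entry is an outlier.
weights = [0.11, -0.23, 0.05, 0.31, -0.17, 0.08, -0.29, 4.0]
bulk = weights[:-1]

minmax_clip = max(abs(w) for w in weights)  # range dictated by the outlier
tight_clip = 0.35                           # assumed stand-in for a learned range

err_minmax = mean_abs_error(bulk, quantize(bulk, minmax_clip, bits=4))
err_tight = mean_abs_error(bulk, quantize(bulk, tight_clip, bits=4))
print(f"bulk error with min-max range: {err_minmax:.4f}")
print(f"bulk error with tight range:   {err_tight:.4f}")
print("outlier under tight range:", quantize([4.0], tight_clip, bits=4)[0])
```

The tight range resolves the bulk of the values far better but truncates the outlier to the clip value, which is the peak truncation loss the abstract mentions. Choosing the range adaptively is the trade-off a learnable scalar is meant to manage.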

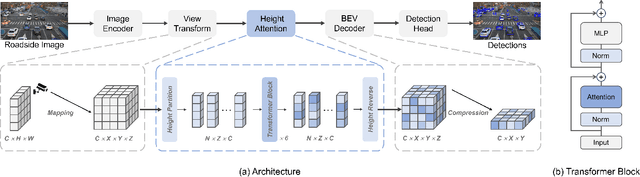

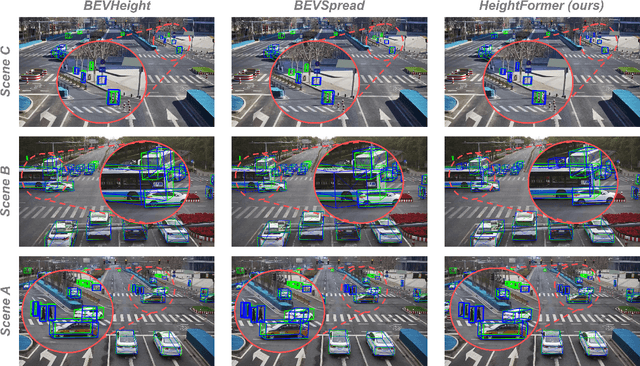

HeightFormer: Learning Height Prediction in Voxel Features for Roadside Vision Centric 3D Object Detection via Transformer

Mar 13, 2025

Abstract: Roadside vision centric 3D object detection has received increasing attention in recent years. It expands the perception range of autonomous vehicles and enhances road safety. Previous methods focused on predicting per-pixel height rather than depth, making significant gains in roadside visual perception. However, this approach is limited by the perspective property of image features, in which near objects appear large and far objects appear small, making it difficult for the network to understand the real dimensions of objects in the 3D world. BEV features and voxel features present the real distribution of objects in the 3D world better than image features do. However, BEV features tend to lose details due to the lack of explicit height information, and voxel features are computationally expensive. Inspired by this insight, an efficient framework learning height prediction in voxel features via transformer is proposed, dubbed HeightFormer. It groups the voxel features into local height sequences and uses an attention mechanism to obtain the height distribution prediction. Subsequently, the local height sequences are reassembled to generate accurate 3D features. The proposed method is applied to two large-scale roadside benchmarks, DAIR-V2X-I and Rope3D. Extensive experiments show that HeightFormer outperforms state-of-the-art methods on the roadside vision centric 3D object detection task.

HumanoidPano: Hybrid Spherical Panoramic-LiDAR Cross-Modal Perception for Humanoid Robots

Mar 13, 2025

Abstract: The perceptual system design for humanoid robots poses unique challenges due to inherent structural constraints that cause severe self-occlusion and limited field-of-view (FOV). We present HumanoidPano, a novel hybrid cross-modal perception framework that synergistically integrates panoramic vision and LiDAR sensing to overcome these limitations. Unlike conventional robot perception systems that rely on monocular cameras or standard multi-sensor configurations, our method establishes geometrically-aware modality alignment through a spherical vision transformer, enabling seamless fusion of 360° visual context with LiDAR's precise depth measurements. First, Spherical Geometry-aware Constraints (SGC) leverage panoramic camera ray properties to guide distortion-regularized sampling offsets for geometric alignment. Second, Spatial Deformable Attention (SDA) aggregates hierarchical 3D features via spherical offsets, enabling efficient 360°-to-BEV fusion with geometrically complete object representations. Third, Panoramic Augmentation (AUG) combines cross-view transformations and semantic alignment to enhance BEV-panoramic feature consistency during data augmentation. Extensive evaluations demonstrate state-of-the-art performance on the 360BEV-Matterport benchmark. Real-world deployment on humanoid platforms validates the system's capability to generate accurate BEV segmentation maps through panoramic-LiDAR co-perception, directly enabling downstream navigation tasks in complex environments. Our work establishes a new paradigm for embodied perception in humanoid robotics.

S$^2$DN: Learning to Denoise Unconvincing Knowledge for Inductive Knowledge Graph Completion

Dec 20, 2024

Abstract: Inductive Knowledge Graph Completion (KGC) aims to infer missing facts between newly emerged entities within knowledge graphs (KGs), posing a significant challenge. While recent studies have shown promising results in inferring such entities through knowledge subgraph reasoning, they suffer from (i) the semantic inconsistencies of similar relations, and (ii) noisy interactions inherent in KGs due to the presence of unconvincing knowledge for emerging entities. To address these challenges, we propose a Semantic Structure-aware Denoising Network (S$^2$DN) for inductive KGC. Our goal is to learn adaptable general semantics and reliable structures to distill consistent semantic knowledge while preserving reliable interactions within KGs. Specifically, we introduce a semantic smoothing module over the enclosing subgraphs to retain the universal semantic knowledge of relations. We incorporate a structure refining module to filter out unreliable interactions and offer additional knowledge, retaining robust structure surrounding target links. Extensive experiments conducted on three benchmark KGs demonstrate that S$^2$DN surpasses the performance of state-of-the-art models. These results demonstrate the effectiveness of S$^2$DN in preserving semantic consistency and enhancing the robustness of filtering out unreliable interactions in contaminated KGs.
