Luca Benini

D-ITET, ETH Zürich, Switzerland

Safe-NEureka: a Hybrid Modular Redundant DNN Accelerator for On-board Satellite AI Processing

Feb 04, 2026

Abstract:Low Earth Orbit (LEO) constellations are revolutionizing the space sector, with on-board Artificial Intelligence (AI) becoming pivotal for next-generation satellites. AI acceleration is essential for safety-critical functions such as autonomous Guidance, Navigation, and Control (GNC), where errors cannot be tolerated, and performance-critical processing of high-bandwidth sensor data, where occasional errors are tolerable. Consequently, AI accelerators for satellites must combine robust protection against radiation-induced faults with high throughput. This paper presents Safe-NEureka, a Hybrid Modular Redundant Deep Neural Network (DNN) accelerator for heterogeneous RISC-V systems. It operates in two modes: a redundancy mode utilizing Dual Modular Redundancy (DMR) with hardware-based recovery, and a performance mode repurposing redundant datapaths to maximize parallel throughput. Furthermore, its memory interface is protected by Error Correction Codes (ECCs), and the controller by Triple Modular Redundancy (TMR). Implementation in GlobalFoundries 12nm technology shows a 96% reduction in faulty executions in redundancy mode, with a manageable 15% area overhead. In performance mode, the architecture achieves near-baseline speeds on 3x3 dense convolutions with a 5% throughput and 11% efficiency reduction, compared to 48% and 53% in redundancy mode. This flexibility ensures high overheads are limited to critical tasks, establishing Safe-NEureka as a versatile solution for space applications.
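The two redundancy schemes named in the abstract can be illustrated with a minimal software sketch. This is only an analogue of the general DMR and TMR ideas, not the accelerator's hardware: the function names, the retry-based recovery policy, and the `max_retries` parameter are assumptions made for illustration.

```python
def tmr_vote(a: int, b: int, c: int) -> int:
    """Bitwise majority vote over three redundant copies of a word (TMR)."""
    return (a & b) | (a & c) | (b & c)


def dmr_check(primary: int, shadow: int) -> bool:
    """Return True when the two redundant results agree (DMR detects, it cannot vote)."""
    return primary == shadow


def run_with_dmr(compute, x, max_retries: int = 3):
    """Run `compute` twice and re-execute on mismatch.

    The retry loop is a hypothetical stand-in for hardware-based recovery.
    """
    for _ in range(max_retries):
        r0, r1 = compute(x), compute(x)
        if dmr_check(r0, r1):
            return r0
    raise RuntimeError("persistent mismatch between redundant datapaths")
```

TMR masks a single faulty copy directly, whereas DMR can only detect a disagreement and must recompute, which is one reason a design might reserve TMR for a small controller and use DMR for the wider datapath.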

LEVIO: Lightweight Embedded Visual Inertial Odometry for Resource-Constrained Devices

Feb 03, 2026

Abstract:Accurate, infrastructure-less sensor systems for motion tracking are essential for mobile robotics and augmented reality (AR) applications. The most popular state-of-the-art visual-inertial odometry (VIO) systems, however, are too computationally demanding for resource-constrained hardware, such as micro-drones and smart glasses. This work presents LEVIO, a fully featured VIO pipeline optimized for ultra-low-power compute platforms, allowing six-degrees-of-freedom (DoF) real-time sensing. LEVIO incorporates established VIO components such as Oriented FAST and Rotated BRIEF (ORB) feature tracking and bundle adjustment, while emphasizing a computationally efficient architecture with parallelization and low memory usage to suit embedded microcontrollers and low-power systems-on-chip (SoCs). The paper proposes and details the algorithmic design choices and the hardware-software co-optimization approach, and presents real-time performance on resource-constrained hardware. LEVIO is validated on a parallel-processing ultra-low-power RISC-V SoC, achieving 20 FPS while consuming less than 100 mW, and benchmarked against public VIO datasets, offering a compelling balance between efficiency and accuracy. To facilitate reproducibility and adoption, the complete implementation is released as open-source.

* This article has been accepted for publication in the IEEE Sensors Journal (JSEN)

Revisiting Adaptive Rounding with Vectorized Reparameterization for LLM Quantization

Feb 02, 2026

Abstract:Adaptive Rounding has emerged as an alternative to round-to-nearest (RTN) for post-training quantization by enabling cross-element error cancellation. Yet, dense and element-wise rounding matrices are prohibitively expensive for billion-parameter large language models (LLMs). We revisit adaptive rounding from an efficiency perspective and propose VQRound, a parameter-efficient optimization framework that reparameterizes the rounding matrix into a compact codebook. Unlike low-rank alternatives, VQRound minimizes the element-wise worst-case error under $L_\infty$ norm, which is critical for handling heavy-tailed weight distributions in LLMs. Beyond reparameterization, we identify rounding initialization as a decisive factor and develop a lightweight end-to-end finetuning pipeline that optimizes codebooks across all layers using only 128 samples. Extensive experiments on OPT, LLaMA, LLaMA2, and Qwen3 models demonstrate that VQRound achieves better convergence than traditional adaptive rounding at the same number of steps while using as little as 0.2% of the trainable parameters. Our results show that adaptive rounding can be made both scalable and fast-fitting. The code is available at https://github.com/zhoustan/VQRound.
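The "cross-element error cancellation" that separates adaptive rounding from RTN can be seen on a toy example. The sketch below is not VQRound and uses no codebook: it brute-forces the per-weight floor/ceil choice for a tiny vector, which is only feasible at toy sizes. All function names and the example values are assumptions for illustration.

```python
import itertools
import math


def quantize_rtn(w, scale):
    """Round-to-nearest: every weight is rounded independently."""
    return [round(v / scale) for v in w]


def output_error(w, q, x, scale):
    """Absolute error of the dot product x.w after quantizing w to scale * q."""
    return abs(sum(xi * (wi - scale * qi) for xi, wi, qi in zip(x, w, q)))


def quantize_adaptive(w, x, scale):
    """Pick floor or floor+1 per weight so that the output error is minimal.

    Exhaustive search over 2**len(w) rounding patterns (toy sizes only).
    """
    floors = [math.floor(v / scale) for v in w]
    best_q, best_err = None, float("inf")
    for pattern in itertools.product((0, 1), repeat=len(w)):
        q = [f + b for f, b in zip(floors, pattern)]
        err = output_error(w, q, x, scale)
        if err < best_err:
            best_q, best_err = q, err
    return best_q, best_err


# Four weights just above the rounding threshold: RTN rounds all of them up.
w = [0.26, 0.26, 0.26, 0.26]
x = [1.0, 1.0, 1.0, 1.0]
scale = 0.5
rtn_err = output_error(w, quantize_rtn(w, scale), x, scale)
ada_q, ada_err = quantize_adaptive(w, x, scale)
```

Here RTN rounds every weight up and the errors accumulate, while the searched pattern rounds some weights down so that the individual errors partly cancel in the output.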

Gated Relational Alignment via Confidence-based Distillation for Efficient VLMs

Jan 30, 2026

Abstract:Vision-Language Models (VLMs) achieve strong multimodal performance but are costly to deploy, and post-training quantization often causes significant accuracy loss. Despite its potential, quantization-aware training (QAT) for VLMs remains underexplored. We propose GRACE, a framework unifying knowledge distillation and QAT under the Information Bottleneck principle: quantization constrains information capacity while distillation guides what to preserve within this budget. Treating the teacher as a proxy for task-relevant information, we introduce confidence-gated decoupled distillation to filter unreliable supervision, relational centered kernel alignment to transfer visual token structures, and an adaptive controller via Lagrangian relaxation to balance fidelity against capacity constraints. Across extensive benchmarks on LLaVA and Qwen families, our INT4 models consistently outperform FP16 baselines (e.g., LLaVA-1.5-7B: 70.1 vs. 66.8 on SQA; Qwen2-VL-2B: 76.9 vs. 72.6 on MMBench), nearly matching teacher performance. Using a real INT4 kernel, we achieve 3$\times$ throughput with 54% memory reduction. This principled framework significantly outperforms existing quantization methods, making GRACE a compelling solution for resource-constrained deployment.

PerfDojo: Automated ML Library Generation for Heterogeneous Architectures

Nov 05, 2025

Abstract:The increasing complexity of machine learning models and the proliferation of diverse hardware architectures (CPUs, GPUs, accelerators) make achieving optimal performance a significant challenge. Heterogeneity in instruction sets, specialized kernel requirements for different data types and model features (e.g., sparsity, quantization), and architecture-specific optimizations complicate performance tuning. Manual optimization is resource-intensive, while existing automatic approaches often rely on complex hardware-specific heuristics and uninterpretable intermediate representations, hindering performance portability. We introduce PerfLLM, a novel automatic optimization methodology leveraging Large Language Models (LLMs) and Reinforcement Learning (RL). Central to this is PerfDojo, an environment framing optimization as an RL game using a human-readable, mathematically-inspired code representation that guarantees semantic validity through transformations. This allows effective optimization without prior hardware knowledge, facilitating both human analysis and RL agent training. We demonstrate PerfLLM's ability to achieve significant performance gains across diverse CPU (x86, Arm, RISC-V) and GPU architectures.

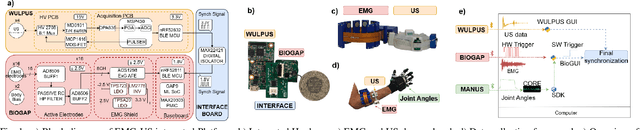

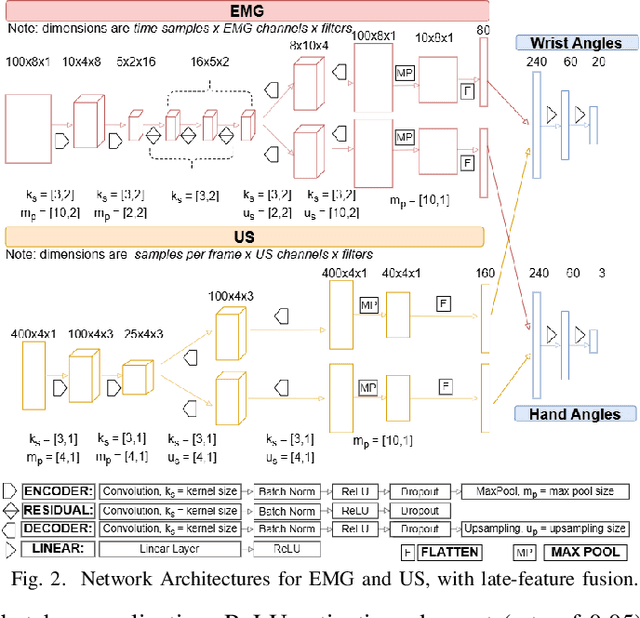

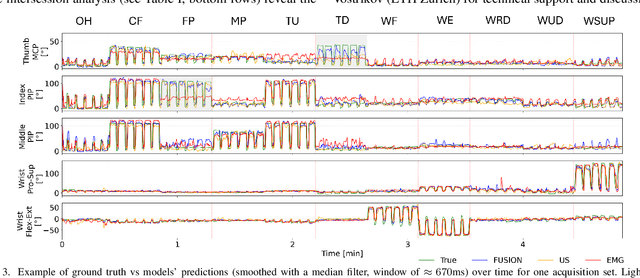

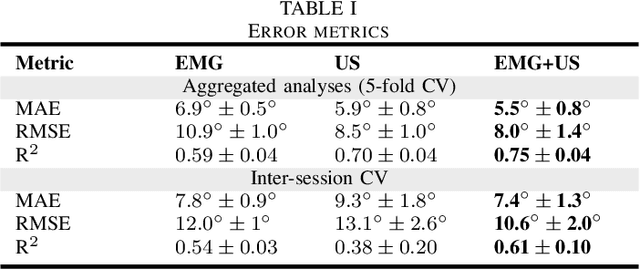

Wearable and Ultra-Low-Power Fusion of EMG and A-Mode US for Hand-Wrist Kinematic Tracking

Oct 02, 2025

Abstract:Hand gesture recognition based on biosignals has shown strong potential for developing intuitive human-machine interaction strategies that closely mimic natural human behavior. In particular, sensor fusion approaches have gained attention for combining complementary information and overcoming the limitations of individual sensing modalities, thereby enabling more robust and reliable systems. Among them, the fusion of surface electromyography (EMG) and A-mode ultrasound (US) is very promising. However, prior solutions rely on power-hungry platforms unsuitable for multi-day use and are limited to discrete gesture classification. In this work, we present an ultra-low-power (sub-50 mW) system for concurrent acquisition of 8-channel EMG and 4-channel A-mode US signals, integrating two state-of-the-art platforms into fully wearable, dry-contact armbands. We propose a framework for continuous tracking of 23 degrees of freedom (DoFs), 20 for the hand and 3 for the wrist, using a kinematic glove for ground-truth labeling. Our method employs lightweight encoder-decoder architectures with multi-task learning to simultaneously estimate hand and wrist joint angles. Experimental results under realistic sensor repositioning conditions demonstrate that EMG-US fusion achieves a root mean squared error of $10.6^\circ\pm2.0^\circ$, compared to $12.0^\circ\pm1^\circ$ for EMG and $13.1^\circ\pm2.6^\circ$ for US, and an R$^2$ score of $0.61\pm0.1$, compared to $0.54\pm0.03$ for EMG and $0.38\pm0.20$ for US.
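For readers unfamiliar with the two reported metrics, the following sketch computes the root mean squared error and the coefficient of determination for one joint-angle trace. How the paper aggregates these values across joints, sessions, and subjects is not stated in the abstract, so the single-trace form and the function names here are assumptions.

```python
import math


def rmse(y_true, y_pred):
    """Root mean squared error, in the same unit as the inputs (e.g. degrees)."""
    n = len(y_true)
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / n)


def r2_score(y_true, y_pred):
    """Coefficient of determination: 1 - residual variance / total variance."""
    mean_t = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_t) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


# Hypothetical glove angles (ground truth) and model estimates, in degrees.
angles_true = [0.0, 10.0, 20.0, 30.0]
angles_pred = [2.0, 8.0, 22.0, 28.0]
err_deg = rmse(angles_true, angles_pred)
fit = r2_score(angles_true, angles_pred)
```

RMSE reports the typical angular deviation directly, while R$^2$ normalizes by how much the joint actually moved, so a low-motion joint can show a small RMSE and still a poor R$^2$.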

A Parallel Ultra-Low Power Silent Speech Interface based on a Wearable, Fully-dry EMG Neckband

Sep 26, 2025

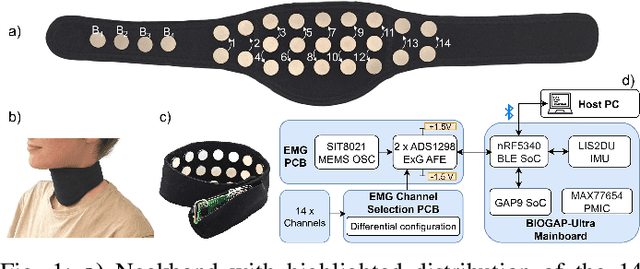

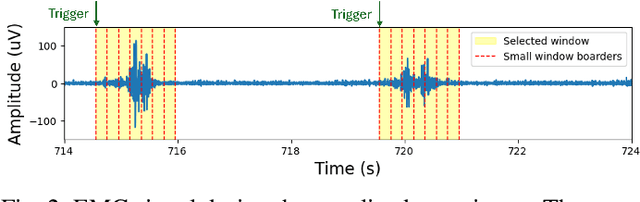

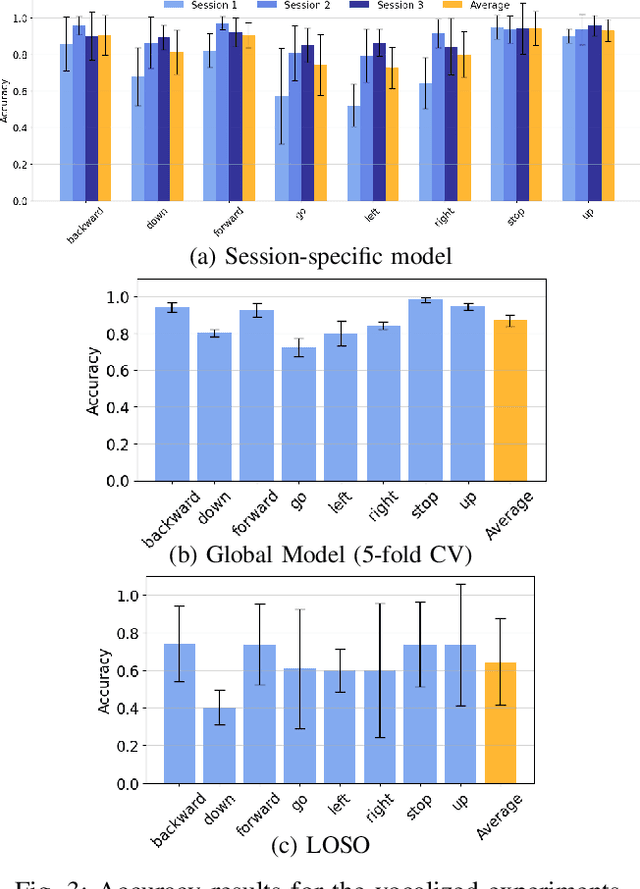

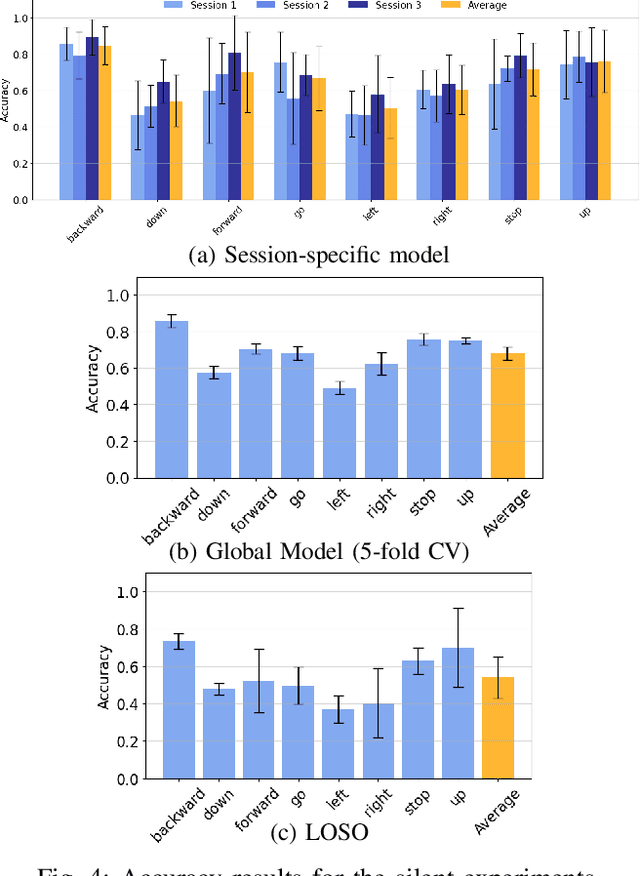

Abstract:We present a wearable, fully-dry, and ultra-low power EMG system for silent speech recognition, integrated into a textile neckband to enable comfortable, non-intrusive use. The system features 14 fully-differential EMG channels and is based on the BioGAP-Ultra platform for ultra-low power (22 mW) biosignal acquisition and wireless transmission. We evaluate its performance on eight speech commands under both vocalized and silent articulation, achieving average classification accuracies of 87$\pm$3% and 68$\pm$3% respectively, with a 5-fold CV approach. To mimic everyday-life conditions, we introduce session-to-session variability by repositioning the neckband between sessions, achieving leave-one-session-out accuracies of 64$\pm$18% and 54$\pm$7% for the vocalized and silent experiments, respectively. These results highlight the robustness of the proposed approach and the promise of energy-efficient silent-speech decoding.
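The leave-one-session-out protocol mentioned above differs from plain 5-fold CV in that an entire recording session (one placement of the neckband) is held out at a time. A minimal sketch of such a splitter follows; the function name and the session labels are assumptions, and the actual evaluation code of the paper is not shown in the abstract.

```python
def leave_one_session_out(session_ids):
    """Yield (held_out_session, train_indices, test_indices) for each session.

    All samples of the held-out session go to the test set, so the model is
    never trained on data recorded with the same sensor placement.
    """
    for held_out in sorted(set(session_ids)):
        train = [i for i, s in enumerate(session_ids) if s != held_out]
        test = [i for i, s in enumerate(session_ids) if s == held_out]
        yield held_out, train, test


# Hypothetical labels: which session each of five samples was recorded in.
sessions = [0, 0, 1, 1, 2]
folds = list(leave_one_session_out(sessions))
```

Because repositioning shifts the electrodes between sessions, this split measures robustness to placement changes, which is consistent with the lower and more variable accuracies reported for it.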

ListenToJESD204B: A Lightweight Open-Source JESD204B IP Core for FPGA-Based Ultrasound Acquisition Systems

Aug 20, 2025

Abstract:The demand for hundreds of tightly synchronized channels operating at tens of MSPS in ultrasound systems exceeds conventional low-voltage differential signaling links' bandwidth, pin count, and latency. Although the JESD204B serial interface mitigates these limitations, commercial FPGA IP cores are proprietary, costly, and resource-intensive. We present ListenToJESD204B, an open-source receiver IP core released under a permissive Solderpad 0.51 license for AMD Xilinx Zynq UltraScale+ devices. Written in synthesizable SystemVerilog, the core supports four GTH/GTY lanes at 12.8 Gb/s and provides cycle-accurate AXI-Stream data alongside deterministic Subclass 1 latency. It occupies only 107 configurable logic blocks (approximately 437 LUTs), representing a 79% reduction compared to comparable commercially available IP. A modular data path featuring per-lane elastic buffers, SYSREF-locked LMFC generation, and optional LFSR descrambling facilitates scaling to high lane counts. We verified protocol compliance through simulation against the Xilinx JESD204C IP in JESD204B mode and on hardware using TI AFE58JD48 ADCs. Block stability was verified by streaming 80 MSPS, 16-bit samples over two 12.8 Gb/s links for 30 minutes with no errors.
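The optional LFSR descrambling step can be sketched at the bit level. JESD204B specifies a self-synchronous scrambler with polynomial $1 + x^{14} + x^{15}$; the Python model below follows that recurrence but simplifies bit ordering within octets and lane handling, and it is not derived from the core's SystemVerilog. Function names and the all-zero default state are assumptions.

```python
def scramble(bits, state=None):
    """Self-synchronous scrambler: s[n] = d[n] ^ s[n-14] ^ s[n-15]."""
    hist = list(state) if state is not None else [0] * 15  # hist[-1] is s[n-1]
    out = []
    for d in bits:
        s = d ^ hist[-14] ^ hist[-15]
        out.append(s)
        hist = hist[1:] + [s]
    return out


def descramble(bits, state=None):
    """Inverse: d[n] = s[n] ^ s[n-14] ^ s[n-15], using received bits only."""
    hist = list(state) if state is not None else [0] * 15
    out = []
    for s in bits:
        out.append(s ^ hist[-14] ^ hist[-15])
        hist = hist[1:] + [s]
    return out


# Hypothetical payload bits for a round-trip check.
payload = [((i * 7 + 3) % 5) % 2 for i in range(64)]
line_bits = scramble(payload)
recovered = descramble(line_bits)
# A receiver starting from the wrong state resynchronizes after 15 bits.
late_join = descramble(line_bits, state=[1] * 15)
```

Because the descrambler depends only on previously received bits, it needs no state exchange with the transmitter: any initial mismatch is flushed out after 15 bits, which is what makes the scheme self-synchronizing.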

A 66-Gb/s/5.5-W RISC-V Many-Core Cluster for 5G+ Software-Defined Radio Uplinks

Aug 08, 2025

Abstract:Following the scale-up of new radio (NR) complexity in 5G and beyond, the physical layer's computing load on base stations is increasing under a strictly constrained latency and power budget; base stations must process > 20-Gb/s uplink wireless data rate on the fly, in < 10 W. At the same time, the programmability and reconfigurability of base station components are key requirements: they reduce the time and cost of deploying new networks, lower the entry threshold for industry players, and ensure return on investment in a fast-paced evolution of standards. In this article, we present the design of a many-core cluster for 5G and beyond base station processing. Our design features 1024 streamlined RISC-V cores with domain-specific FP extensions, and 4-MiB shared memory. It provides the necessary computational capabilities for software-defined processing of the lower physical layer of 5G physical uplink shared channel (PUSCH), satisfying high-end throughput requirements (66 Gb/s for a transmission time interval (TTI), 9.4-302 Gb/s depending on the processing stage). The throughput metrics for the implemented functions are ten times higher than in state-of-the-art (SoTA) application-specific instruction processors (ASIPs). The energy efficiency on key NR kernels (2-41 Gb/s/W), measured at 800 MHz, 25 °C, and 0.8 V, on a placed and routed instance in 12-nm CMOS technology, is competitive with SoTA architectures. The PUSCH processing runs end-to-end on a single cluster in 1.7 ms, at <6-W average power consumption, achieving 12 Gb/s/W.
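The headline figures are mutually consistent, as a short calculation shows. The sketch below takes the 66 Gb/s and 5.5 W from the title and the 1.7 ms runtime from the abstract; combining the title's power figure with that runtime to estimate energy per run is an assumption made here, since the abstract only states an average power below 6 W.

```python
def efficiency_gbps_per_watt(throughput_gbps: float, power_w: float) -> float:
    """Energy efficiency as throughput divided by power."""
    return throughput_gbps / power_w


def energy_millijoule(power_w: float, runtime_ms: float) -> float:
    """Energy of one run: watts times milliseconds gives millijoules."""
    return power_w * runtime_ms


efficiency = efficiency_gbps_per_watt(66.0, 5.5)   # title figures
energy_per_run = energy_millijoule(5.5, 1.7)       # assumed pairing of 5.5 W with 1.7 ms
```

The first result reproduces the 12 Gb/s/W quoted at the end of the abstract; the second gives a rough estimate of under 10 mJ per end-to-end PUSCH run under the stated assumption.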

HiM2SAM: Enhancing SAM2 with Hierarchical Motion Estimation and Memory Optimization towards Long-term Tracking

Jul 10, 2025

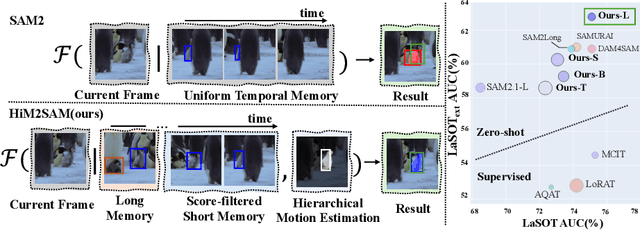

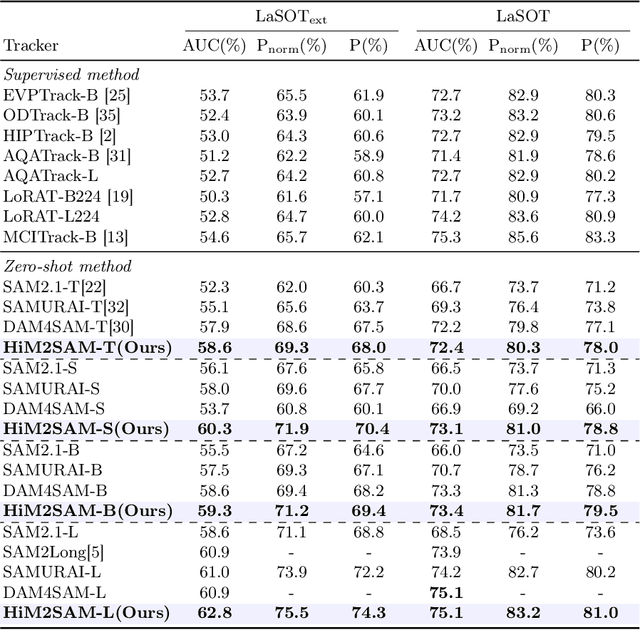

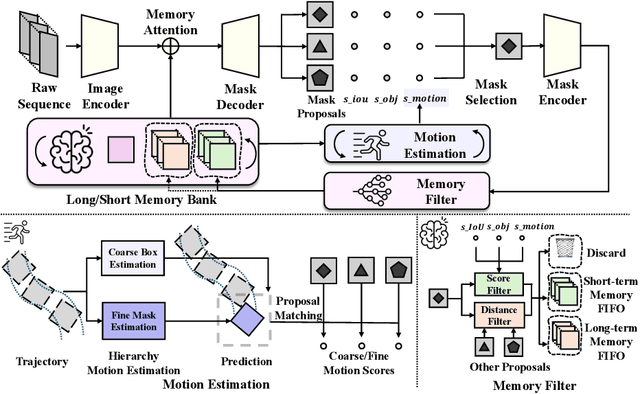

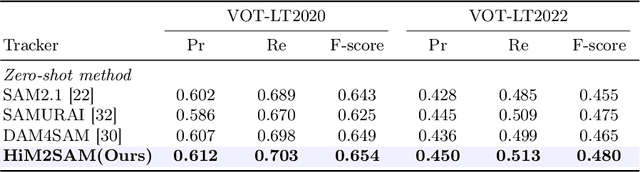

Abstract:This paper presents enhancements to the SAM2 framework for the video object tracking task, addressing challenges such as occlusions, background clutter, and target reappearance. We introduce a hierarchical motion estimation strategy, combining lightweight linear prediction with selective non-linear refinement to improve tracking accuracy without requiring additional training. In addition, we optimize the memory bank by distinguishing long-term and short-term memory frames, enabling more reliable tracking under long-term occlusions and appearance changes. Experimental results show consistent improvements across different model scales. Our method achieves state-of-the-art performance on LaSOT and LaSOT$_{ext}$ with the large model, achieving 9.6% and 7.2% relative improvements in AUC over the original SAM2, and demonstrates even larger relative gains on smaller models, highlighting the effectiveness of our training-free, low-overhead improvements for boosting long-term tracking performance. The code is available at https://github.com/LouisFinner/HiM2SAM.
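To make "lightweight linear prediction" and "relative improvement" concrete, here is a minimal sketch. The abstract does not specify the predictor, so a constant-velocity extrapolation of the bounding-box position is used as a stand-in; the function names and the `(x, y, w, h)` box layout are assumptions, and the released repository should be consulted for the actual method.

```python
def predict_next_box(prev_box, curr_box):
    """Constant-velocity extrapolation of an (x, y, w, h) box.

    The position continues with the last observed displacement; the size is
    kept from the current frame.
    """
    px, py, _, _ = prev_box
    cx, cy, w, h = curr_box
    return (2 * cx - px, 2 * cy - py, w, h)


def relative_improvement(baseline: float, improved: float) -> float:
    """Relative gain in percent, as opposed to an absolute difference in points."""
    return (improved - baseline) / baseline * 100.0


next_box = predict_next_box((10, 20, 5, 5), (13, 24, 5, 5))
gain = relative_improvement(50.0, 55.0)  # hypothetical AUC values
```

A prediction of this kind costs a few arithmetic operations per frame and needs no training, so a more expensive non-linear refinement can be reserved for frames where the linear estimate is unreliable.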
