Tommaso Polonelli

D-ITET, ETH Zürich, Switzerland

EdgeCodec: Onboard Lightweight High Fidelity Neural Compressor with Residual Vector Quantization

Jul 08, 2025

Abstract:We present EdgeCodec, an end-to-end neural compressor for barometric data collected from wind turbine blades. EdgeCodec leverages a heavily asymmetric autoencoder architecture, trained with a discriminator and enhanced by a Residual Vector Quantizer to maximize compression efficiency. It achieves compression rates between 2'560:1 and 10'240:1 while maintaining a reconstruction error below 3%, and operates in real time on the GAP9 microcontroller with bitrates ranging from 11.25 to 45 bits per second. Bitrates can be selected on a sample-by-sample basis, enabling on-the-fly adaptation to varying network conditions. In its highest compression mode, EdgeCodec reduces the energy consumption of wireless data transmission by up to 2.9x, significantly extending the operational lifetime of deployed sensor units.
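
As a rough illustration of the residual vector quantization idea named in the title, the sketch below quantizes a vector in stages, where each stage encodes what the previous stages left over. It is a generic toy version and not EdgeCodec's implementation: the two-dimensional codebooks, the function names, and the use of the stage count as the bitrate knob are all assumptions made for this example.

```python
from typing import List, Sequence

Codebook = Sequence[Sequence[float]]


def nearest_index(codebook: Codebook, vector: Sequence[float]) -> int:
    """Return the index of the codeword closest to `vector` (squared L2)."""
    return min(
        range(len(codebook)),
        key=lambda i: sum((c - v) ** 2 for c, v in zip(codebook[i], vector)),
    )


def rvq_encode(x: Sequence[float], codebooks: Sequence[Codebook],
               num_stages: int) -> List[int]:
    """Quantize `x` with the first `num_stages` codebooks.

    Each stage quantizes the residual left by the previous stages, so
    using fewer stages yields fewer indices (a lower bitrate) at the
    cost of a coarser reconstruction.
    """
    residual = list(x)
    indices = []
    for codebook in codebooks[:num_stages]:
        idx = nearest_index(codebook, residual)
        indices.append(idx)
        residual = [r - c for r, c in zip(residual, codebook[idx])]
    return indices


def rvq_decode(indices: Sequence[int],
               codebooks: Sequence[Codebook]) -> List[float]:
    """Reconstruct by summing the selected codeword of each stage."""
    out = [0.0] * len(codebooks[0][0])
    for idx, codebook in zip(indices, codebooks):
        out = [o + c for o, c in zip(out, codebook[idx])]
    return out


# Hypothetical toy codebooks: a coarse stage and a finer refinement stage.
CODEBOOKS = [
    [[0.0, 0.0], [1.0, 1.0], [-1.0, -1.0], [1.0, -1.0]],
    [[0.0, 0.0], [0.25, 0.25], [-0.25, 0.25], [0.25, -0.25]],
]

x = [1.2, 0.8]
coarse = rvq_decode(rvq_encode(x, CODEBOOKS, 1), CODEBOOKS)
fine = rvq_decode(rvq_encode(x, CODEBOOKS, 2), CODEBOOKS)
```

With these toy codebooks, the one-stage reconstruction is `[1.0, 1.0]` and the two-stage reconstruction is `[1.25, 0.75]`, so the second index buys a smaller error. A decoder that can stop after any stage is one common way to trade bitrate against fidelity per sample.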

ElectraSight: Smart Glasses with Fully Onboard Non-Invasive Eye Tracking Using Hybrid Contact and Contactless EOG

Dec 19, 2024

Abstract:Smart glasses with integrated eye tracking technology are revolutionizing diverse fields, from immersive augmented reality experiences to cutting-edge health monitoring solutions. However, traditional eye tracking systems rely heavily on cameras and significant computational power, leading to high-energy demand and privacy issues. Alternatively, systems based on electrooculography (EOG) provide superior battery life but are less accurate and primarily effective for detecting blinks, while being highly invasive. The paper introduces ElectraSight, a non-invasive plug-and-play low-power eye tracking system for smart glasses. The hardware-software co-design of the system is detailed, along with the integration of a hybrid EOG (hEOG) solution that incorporates both contact and contactless electrodes. Within 79 kB of memory, the proposed tinyML model performs real-time eye movement classification with 81% accuracy for 10 classes and 92% for 6 classes, not requiring any calibration or user-specific fine-tuning. Experimental results demonstrate that ElectraSight delivers high accuracy in eye movement and blink classification, with minimal overall movement detection latency (90% within 60 ms) and an ultra-low computing time (301 µs). The power consumption settles down to 7.75 mW for continuous data acquisition and 46 mJ for the tinyML inference. This efficiency enables continuous operation for over 3 days on a compact 175 mAh battery. This work opens new possibilities for eye tracking in commercial applications, offering an unobtrusive solution that enables advancements in user interfaces, health diagnostics, and hands-free control systems.
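
The battery-life claim can be sanity-checked with a short back-of-the-envelope calculation. The sketch below uses only the 175 mAh capacity and the 7.75 mW acquisition power from the abstract. The 3.7 V nominal cell voltage is an assumption (typical for a small Li-ion cell, not stated in the abstract), and inference energy and power-conversion losses are ignored.

```python
BATTERY_CAPACITY_MAH = 175.0  # from the abstract
ACQUISITION_POWER_MW = 7.75   # from the abstract (continuous acquisition)
NOMINAL_VOLTAGE_V = 3.7       # ASSUMPTION: typical Li-ion nominal voltage


def runtime_days(capacity_mah: float, voltage_v: float, power_mw: float) -> float:
    """Ideal runtime in days for a constant power draw (no losses)."""
    energy_mwh = capacity_mah * voltage_v  # mAh * V = mWh
    return energy_mwh / power_mw / 24.0


days = runtime_days(BATTERY_CAPACITY_MAH, NOMINAL_VOLTAGE_V, ACQUISITION_POWER_MW)
print(f"Estimated runtime: {days:.2f} days")
```

Under these assumptions the estimate comes out at roughly 3.5 days, which is in line with the "over 3 days" figure quoted in the abstract.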

Aerodynamic Performance and Impact Analysis of a MEMS-Based Non-Invasive Monitoring System for Wind Turbine Blades

Aug 21, 2024

Abstract:Wind power generation plays a crucial role in transitioning away from fossil fuel-dependent energy sources, contributing significantly to the mitigation of climate change. Monitoring and evaluating the aerodynamics of large wind turbine rotors is crucial to enable more wind energy deployment. This is necessary to achieve the European climate goal of a reduction in net greenhouse gas emissions by at least 55% by 2030, compared to 1990 levels. This paper presents a comparison between two measurement systems for evaluating the aerodynamic performance of wind turbine rotor blades on a full-scale wind tunnel test. One system uses an array of ten commercial compact ultra-low power micro-electromechanical systems (MEMS) pressure sensors placed on the blade surface, while the other employs high-accuracy lab-based pressure scanners embedded in the airfoil. The tests are conducted at a Reynolds number of 3.5 × 10^6, which represents typical operating conditions for wind turbines. MEMS sensors are of particular interest, as they can enable real-time monitoring which would be impossible with the ground truth system. This work provides an accurate quantification of the impact of the MEMS system on the blade aerodynamics and its measurement accuracy. Our results indicate that MEMS sensors, with a total sensing power below 1.6 mW, can measure key aerodynamic parameters like Angle of Attack (AoA) and flow separation with a precision of 1°. Although there are minor differences in measurements due to sensor encapsulation, the MEMS system does not significantly compromise blade aerodynamics, with a maximum shift in the angle of attack for flow separation of only 1°. These findings indicate that surface and low-power MEMS sensor systems are a promising approach for efficient and sustainable wind turbine monitoring using self-sustaining Internet of Things devices and wireless sensor networks.

Ultra-Lightweight Collaborative Mapping for Robot Swarms

Jul 03, 2024

Abstract:A key requirement in robotics is the ability to simultaneously self-localize and map a previously unknown environment, relying primarily on onboard sensing and computation. Achieving fully onboard accurate simultaneous localization and mapping (SLAM) is feasible for high-end robotic platforms, whereas small and inexpensive robots face challenges due to constrained hardware, therefore frequently resorting to external infrastructure for sensing and computation. The challenge is further exacerbated in swarms of robots, where coordination, scalability, and latency are crucial concerns. This work introduces a decentralized and lightweight collaborative SLAM approach that enables mapping on virtually any robot, even those equipped with low-cost hardware, including miniaturized insect-size devices. Moreover, the proposed solution supports large swarm formations with the capability to coordinate hundreds of agents. To substantiate our claims, we have successfully implemented collaborative SLAM on centimeter-size drones weighing only 46 grams. Remarkably, we achieve results comparable to high-end state-of-the-art solutions while reducing the cost, memory, and computation requirements by two orders of magnitude. Our approach is innovative in three main aspects. First, it enables onboard infrastructure-less collaborative mapping with a lightweight and cost-effective solution in terms of sensing and computation. Second, we optimize the data traffic within the swarm to support hundreds of cooperative agents using standard wireless protocols such as ultra-wideband (UWB), Bluetooth, or WiFi. Last, we implement a distributed swarm coordination policy to decrease mapping latency and enhance accuracy.

Angle of Arrival and Centimeter Distance Estimation on a Smart UWB Sensor Node

Dec 21, 2023

Abstract:Accurate and low-power indoor localization is becoming more and more of a necessity to empower novel consumer and industrial applications. In this field, the most promising technology is based on UWB modulation; however, current UWB positioning systems do not reach centimeter accuracy in general deployments due to multipath and nonisotropic antennas, still necessitating several fixed anchors to estimate an object's position in space. This article presents an in-depth study and assessment of angle of arrival (AoA) UWB measurements using a compact, low-power solution integrating a novel commercial module with phase difference of arrival (PDoA) estimation as an integrated feature. Results demonstrate the possibility of reaching centimeter distance precision and 2.4° average angular accuracy in many operative conditions, e.g., in a 90° range around the center. Moreover, by integrating the channel impulse response, the phase difference of arrival, and the point-to-point distance, an error correction model is discussed to compensate for reflections, multipaths, and front-back ambiguity.

NanoSLAM: Enabling Fully Onboard SLAM for Tiny Robots

Sep 21, 2023

Abstract:Perceiving and mapping the surroundings are essential for enabling autonomous navigation in any robotic platform. The algorithm class that enables accurate mapping while correcting the odometry errors present in most robotics systems is Simultaneous Localization and Mapping (SLAM). Today, fully onboard mapping is only achievable on robotic platforms that can host high-wattage processors, mainly due to the significant computational load and memory demands required for executing SLAM algorithms. For this reason, pocket-size hardware-constrained robots offload the execution of SLAM to external infrastructures. To address the challenge of enabling SLAM algorithms on resource-constrained processors, this paper proposes NanoSLAM, a lightweight and optimized end-to-end SLAM approach specifically designed to operate on centimeter-size robots at a power budget of only 87.9 mW. We demonstrate the mapping capabilities in real-world scenarios and deploy NanoSLAM on a nano-drone weighing 44 g and equipped with a novel commercial RISC-V low-power parallel processor called GAP9. The algorithm is designed to leverage the parallel capabilities of the RISC-V processing cores and enables mapping of a general environment with an accuracy of 4.5 cm and an end-to-end execution time of less than 250 ms.

Fully Onboard SLAM for Distributed Mapping with a Swarm of Nano-Drones

Sep 07, 2023

Abstract:The use of Unmanned Aerial Vehicles (UAVs) is rapidly increasing in applications ranging from surveillance and first-aid missions to industrial automation involving cooperation with other machines or humans. To maximize area coverage and reduce mission latency, swarms of collaborating drones have become a significant research direction. However, this approach requires open challenges in positioning, mapping, and communications to be addressed. This work describes a distributed mapping system based on a swarm of nano-UAVs, characterized by a limited payload of 35 g and tightly constrained on-board sensing and computing capabilities. Each nano-UAV is equipped with four 64-pixel depth sensors that measure the relative distance to obstacles in four directions. The proposed system merges the information from the swarm and generates a coherent grid map without relying on any external infrastructure. The data fusion is performed using the iterative closest point algorithm and a graph-based simultaneous localization and mapping algorithm, running entirely on-board the UAV's low-power ARM Cortex-M microcontroller with just 192 kB of SRAM memory. Field results gathered in three different mazes from a swarm of up to 4 nano-UAVs prove a mapping accuracy of 12 cm and demonstrate that the mapping time is inversely proportional to the number of agents. The proposed framework scales linearly in terms of communication bandwidth and on-board computational complexity, supporting communication between up to 20 nano-UAVs and mapping of areas up to 180 m² with the chosen configuration requiring only 50 kB of memory.

Exploring Automatic Gym Workouts Recognition Locally On Wearable Resource-Constrained Devices

Jan 13, 2023

Abstract:Automatic gym activity recognition on energy- and resource-constrained wearable devices removes the need for human interaction, such as soft-touch tapping and swiping, during intense gym sessions. This work presents a tiny and highly accurate residual convolutional neural network that runs on milliwatt microcontrollers for automatic workout classification. We evaluated the inference performance of the deep model with quantization on three resource-constrained devices: two microcontrollers with ARM Cortex-M4 and Cortex-M7 cores from ST Microelectronics, and a GAP8 system on chip, which is an open-source, multi-core RISC-V computing platform from GreenWaves Technologies. Experimental results show an accuracy of up to 90.4% for recognizing eleven workouts with full-precision inference. The paper also presents the performance trade-offs of the resource-constrained system. While keeping the recognition accuracy (88.1%) with minimal loss, each inference takes only 3.2 ms on GAP8, benefiting from the 8 RISC-V cluster cores. We measured an execution time that is 18.9x and 6.5x faster than on the Cortex-M4 and Cortex-M7 cores, respectively, showing the feasibility of real-time on-board workout recognition based on the described data set with a 20 Hz sampling rate. The energy consumed for each inference on GAP8 is 0.41 mJ, compared to 5.17 mJ on Cortex-M4 and 8.07 mJ on Cortex-M7 at the maximum clock. This can lead to longer battery life when the system is battery-operated. We also introduced an open data set composed of fifty sessions of eleven gym workouts collected from ten subjects that is publicly available.

Fully On-board Low-Power Localization with Multizone Time-of-Flight Sensors on Nano-UAVs

Nov 25, 2022

Abstract:Nano-size unmanned aerial vehicles (UAVs) hold enormous potential to perform autonomous operations in complex environments, such as inspection, monitoring or data collection. Moreover, their small size allows safe operation close to humans and agile flight. An important part of autonomous flight is localization, which is a computationally intensive task especially on a nano-UAV that usually has strong constraints in sensing, processing and memory. This work presents a real-time localization approach with low element-count multizone range sensors for resource-constrained nano-UAVs. The proposed approach is based on a novel miniature 64-zone time-of-flight sensor from ST Microelectronics and a RISC-V-based parallel ultra low-power processor, to enable accurate and low latency Monte Carlo Localization on-board. Experimental evaluation using a nano-UAV open platform demonstrated that the proposed solution is capable of localizing on a 31.2 m² map with 0.15 m accuracy and an above 95% success rate. The achieved accuracy is sufficient for localization in common indoor environments. We analyze tradeoffs in using full and half-precision floating point numbers as well as a quantized map and evaluate the accuracy and memory footprint across the design space. Experimental evaluation shows that parallelizing the execution for 8 RISC-V cores brings a 7x speedup and allows us to execute the algorithm on-board in real-time with a latency of 0.2-30 ms (depending on the number of particles), while only increasing the overall drone power consumption by 3-7%. Finally, we provide an open-source implementation of our approach.
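
To put the reported 7x speedup on 8 cores in perspective, the sketch below computes the parallel efficiency and the parallel fraction that an idealized Amdahl's-law model would imply. Amdahl's law is a modeling assumption introduced here for illustration and is not an analysis taken from the paper. The function names are hypothetical.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Speedup predicted by Amdahl's law: 1 / ((1 - p) + p / n)."""
    return 1.0 / ((1.0 - parallel_fraction) + parallel_fraction / cores)


def implied_parallel_fraction(speedup: float, cores: int) -> float:
    """Invert Amdahl's law to get p from a measured speedup on n cores."""
    return (1.0 - 1.0 / speedup) / (1.0 - 1.0 / cores)


SPEEDUP = 7.0  # from the abstract
CORES = 8      # from the abstract

efficiency = SPEEDUP / CORES                    # fraction of ideal linear scaling
p = implied_parallel_fraction(SPEEDUP, CORES)   # equals 48/49 under this model

print(f"Parallel efficiency: {efficiency:.1%}")
print(f"Implied parallel fraction: {p:.4f}")
```

Under this simplified model, a 7x speedup on 8 cores corresponds to 87.5% parallel efficiency and to roughly 98% of the workload being parallelizable.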

Robust and Efficient Depth-based Obstacle Avoidance for Autonomous Miniaturized UAVs

Aug 26, 2022

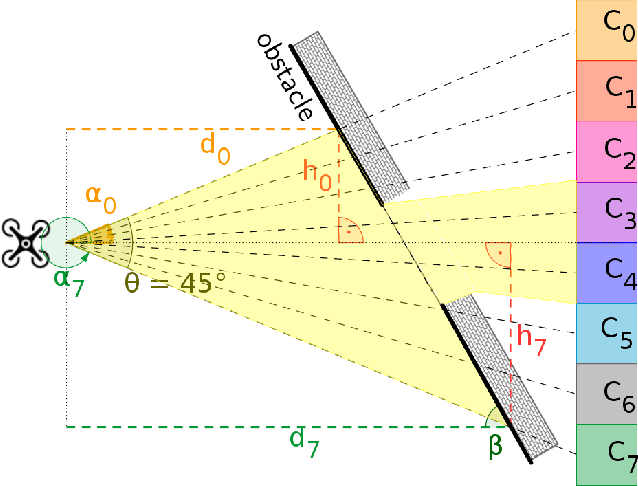

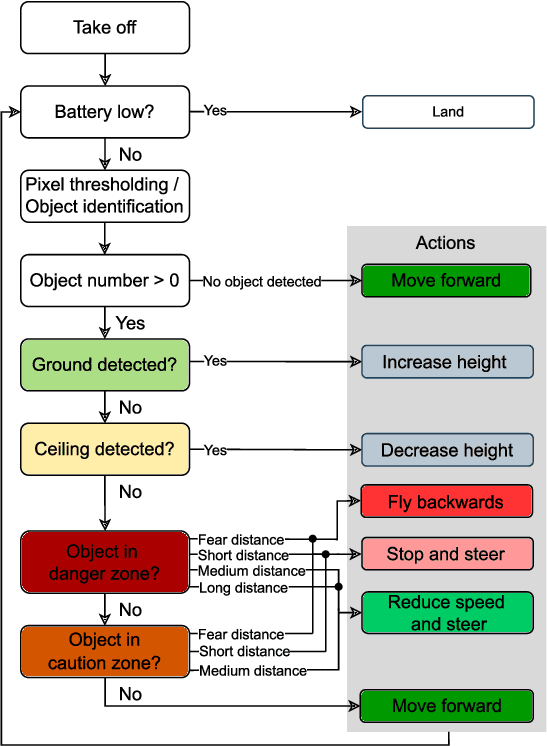

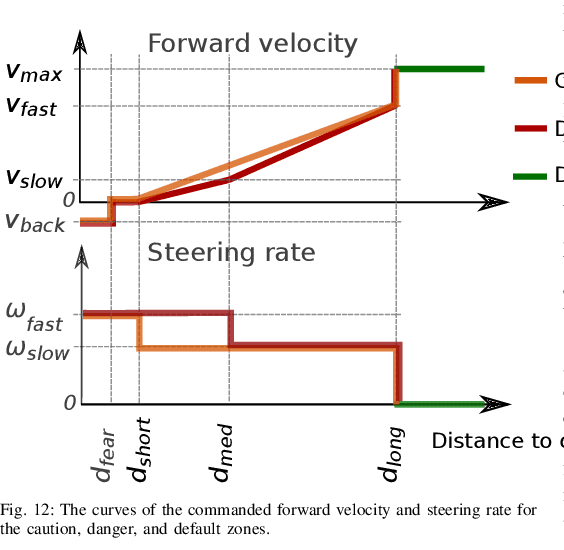

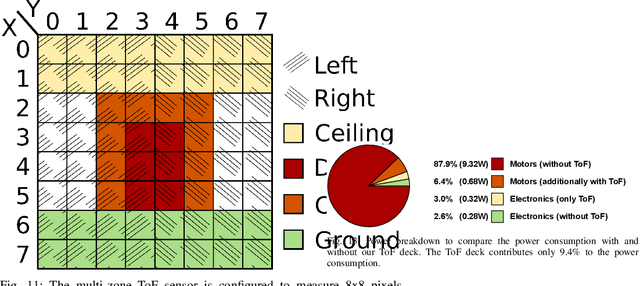

Abstract:Nano-size drones hold enormous potential to explore unknown and complex environments. Their small size makes them agile and safe for operation close to humans and allows them to navigate through narrow spaces. However, their tiny size and payload restrict the possibilities for on-board computation and sensing, making fully autonomous flight extremely challenging. The first step towards full autonomy is reliable obstacle avoidance, which has proven to be technically challenging by itself in a generic indoor environment. Current approaches utilize vision-based or 1-dimensional sensors to support nano-drone perception algorithms. This work presents a lightweight obstacle avoidance system based on a novel millimeter form factor 64-pixel multi-zone Time-of-Flight (ToF) sensor and a generalized model-free control policy. Reported in-field tests are based on the Crazyflie 2.1, extended by a custom multi-zone ToF deck, featuring a total flight mass of 35 g. The algorithm only uses 0.3% of the on-board processing power (210 µs execution time) with a frame rate of 15 fps, providing an excellent foundation for many future applications. Less than 10% of the total drone power is needed to operate the proposed perception system, including both lifting and operating the sensor. The presented autonomous nano-size drone reaches 100% reliability at 0.5 m/s in a generic and previously unexplored indoor environment. The proposed system is released open-source with an extensive dataset including ToF and gray-scale camera data, coupled with UAV position ground truth from motion capture.
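
The 0.3% processing-load figure follows from the execution time and frame rate quoted in the abstract. The minimal sketch below reproduces that arithmetic, assuming the algorithm runs once per sensor frame on a single core (an assumption made here, not a detail stated in the abstract).

```python
EXECUTION_TIME_S = 210e-6  # 210 microseconds per run, from the abstract
FRAME_RATE_HZ = 15.0       # sensor frame rate, from the abstract


def cpu_load_fraction(exec_time_s: float, rate_hz: float) -> float:
    """Fraction of one core's time spent if the task runs once per frame."""
    return exec_time_s * rate_hz


load = cpu_load_fraction(EXECUTION_TIME_S, FRAME_RATE_HZ)
print(f"Processor load: {load:.3%}")
```

The product is about 0.315% of the available processing time, which rounds to the 0.3% reported above.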
