Xiaying Wang

FEMBA: Efficient and Scalable EEG Analysis with a Bidirectional Mamba Foundation Model

Feb 10, 2025

Abstract:Accurate and efficient electroencephalography (EEG) analysis is essential for detecting seizures and artifacts in long-term monitoring, with applications spanning hospital diagnostics to wearable health devices. Robust EEG analytics have the potential to greatly improve patient care. However, traditional deep learning models, especially Transformer-based architectures, are hindered by their quadratic time and memory complexity, making them less suitable for resource-constrained environments. To address these challenges, we present FEMBA (Foundational EEG Mamba + Bidirectional Architecture), a novel self-supervised framework that establishes new efficiency benchmarks for EEG analysis through bidirectional state-space modeling. Unlike Transformer-based models, FEMBA scales linearly with sequence length, enabling more scalable and efficient processing of extended EEG recordings. Trained on over 21,000 hours of unlabeled EEG and fine-tuned on three downstream tasks, FEMBA achieves performance competitive with Transformer models at significantly lower computational cost. Specifically, it reaches 81.82% balanced accuracy (0.8921 AUROC) on TUAB and 0.949 AUROC on TUAR, while a tiny 7.8M-parameter variant demonstrates viability for resource-constrained devices. These results pave the way for scalable, general-purpose EEG analytics in clinical settings and highlight FEMBA as a promising candidate for wearable applications.
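
The linear-versus-quadratic scaling claim can be illustrated with a toy example. The sketch below is not FEMBA's architecture: it assumes a scalar state with fixed parameters `a` and `b` (Mamba layers use input-dependent parameters and a vector state), and it assumes the forward and backward passes are combined by summation, which the abstract does not specify. All function names are hypothetical.

```python
from typing import List


def linear_scan(x: List[float], a: float, b: float) -> List[float]:
    """Run the recurrence h[t] = a * h[t-1] + b * x[t], starting from h = 0."""
    h = 0.0
    out = []
    for value in x:  # one constant-cost update per time step, so O(L) overall
        h = a * h + b * value
        out.append(h)
    return out


def bidirectional_scan(x: List[float], a: float = 0.5, b: float = 1.0) -> List[float]:
    """Scan the sequence in both directions and sum the two results.

    Summation is an assumption made for illustration only.
    """
    forward = linear_scan(x, a, b)
    backward = linear_scan(x[::-1], a, b)[::-1]
    return [f + g for f, g in zip(forward, backward)]


def attention_pair_count(length: int) -> int:
    """Number of pairwise scores a full self-attention layer computes."""
    return length * length


print(bidirectional_scan([1.0, 0.0, 0.0]))  # [2.0, 0.5, 0.25]
print(attention_pair_count(1000), "pairwise scores vs", 2 * 1000, "scan updates")
```

Doubling the sequence length doubles the number of scan updates but quadruples the number of attention scores, which is the gap the abstract refers to.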

CEReBrO: Compact Encoder for Representations of Brain Oscillations Using Efficient Alternating Attention

Jan 18, 2025

Abstract:Electroencephalography (EEG) is a crucial tool for studying brain activity. Recently, self-supervised learning methods leveraging large unlabeled datasets have emerged as a potential solution to the scarcity of widely available annotated EEG data. However, current methods suffer from at least one of the following limitations: i) sub-optimal EEG signal modeling, ii) model sizes in the hundreds of millions of trainable parameters, and iii) reliance on private datasets and/or inconsistent public benchmarks, hindering reproducibility. To address these challenges, we introduce a Compact Encoder for Representations of Brain Oscillations using alternating attention (CEReBrO), a new small EEG foundation model. Our tokenization scheme represents EEG signals at a per-channel patch granularity. We propose an alternating attention mechanism that jointly models intra-channel temporal dynamics and inter-channel spatial correlations, achieving a 2x speed improvement with 6x less memory required compared to standard self-attention. We present several model sizes ranging from 3.6 million to 85 million parameters. Pre-trained on over 20,000 hours of publicly available scalp EEG recordings with diverse channel configurations, our models set new benchmarks in emotion detection and seizure detection tasks, with competitive performance in anomaly classification and gait prediction. This validates our models' effectiveness and efficiency.
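
To see why restricting attention to one axis at a time can save compute and memory, it helps to count pairwise attention scores. The sketch below is a back-of-the-envelope count, not the paper's implementation: it assumes one token per (channel, patch) pair, that temporal layers attend only within a channel, and that spatial layers attend only across channels at the same patch index. The function names and the 20-channel, 30-patch example are hypothetical, and the counts are not meant to reproduce the reported 2x and 6x figures.

```python
def full_attention_scores(channels: int, patches: int) -> int:
    """Scores computed when every token attends to every other token."""
    tokens = channels * patches
    return tokens * tokens


def temporal_attention_scores(channels: int, patches: int) -> int:
    """Scores when attention is restricted to tokens of the same channel."""
    return channels * patches * patches


def spatial_attention_scores(channels: int, patches: int) -> int:
    """Scores when attention is restricted to tokens at the same patch index."""
    return patches * channels * channels


channels, patches = 20, 30  # hypothetical recording layout
print(full_attention_scores(channels, patches))      # 360000
print(temporal_attention_scores(channels, patches))  # 18000
print(spatial_attention_scores(channels, patches))   # 12000
```

Under these assumptions, each restricted layer builds a score matrix far smaller than the full one, at the price of needing both layer types to mix information along time and across channels.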

EnhancePPG: Improving PPG-based Heart Rate Estimation with Self-Supervision and Augmentation

Dec 20, 2024

Abstract:Heart rate (HR) estimation from photoplethysmography (PPG) signals is a key feature of modern wearable devices for health and wellness monitoring. While deep learning models show promise, their performance relies on the availability of large datasets. We present EnhancePPG, a method that enhances state-of-the-art models by integrating self-supervised learning with data augmentation (DA). Combining self-supervised pre-training with DA allows the model to learn more generalizable features without needing more labelled data. Inspired by a U-Net-like autoencoder architecture, we use unsupervised PPG signal reconstruction to take advantage of large amounts of unlabeled data during the pre-training phase. Thanks to our approach and a minimal modification to the state-of-the-art model, we improve the best HR estimation by 12.2%, lowering the error on PPG-DaLiA from 4.03 beats per minute (BPM) to 3.54 BPM. Importantly, our EnhancePPG approach focuses exclusively on the training of the selected deep learning model, without significantly increasing its inference latency.

An Ultra-Low Power Wearable BMI System with Continual Learning Capabilities

Sep 16, 2024

Abstract:Driven by the progress in efficient embedded processing, there is an accelerating trend toward running machine learning models directly on wearable Brain-Machine Interfaces (BMIs) to improve portability and privacy and maximize battery life. However, achieving low latency and high classification performance remains challenging due to the inherent variability of electroencephalographic (EEG) signals across sessions and the limited onboard resources. This work proposes a comprehensive BMI workflow based on a CNN-based Continual Learning (CL) framework, allowing the system to adapt to inter-session changes. The workflow is deployed on a wearable, parallel ultra-low power BMI platform (BioGAP). Our results based on two in-house datasets, Dataset A and Dataset B, show that the CL workflow improves average accuracy by up to 30.36% and 10.17%, respectively. Furthermore, when implementing the continual learning on a Parallel Ultra-Low Power (PULP) microcontroller (GAP9), it achieves an energy consumption as low as 0.45 mJ per inference and an adaptation time of only 21.5 ms, yielding around 25 h of battery life with a small 100 mAh, 3.7 V battery on BioGAP. Our setup, coupled with the compact CNN model and on-device CL capabilities, meets users' needs for improved privacy, reduced latency, and enhanced inter-session performance, offering good promise for smart embedded real-world BMIs.
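
The battery-life figure can be sanity-checked with simple arithmetic. The sketch below converts the stated 100 mAh, 3.7 V battery into stored energy and derives the average system power implied by roughly 25 hours of operation. It assumes the full nominal capacity is usable and ignores converter losses, so the implied power is an estimate derived here, not a number reported in the abstract. The helper names are hypothetical.

```python
def battery_energy_joules(capacity_mah: float, voltage_v: float) -> float:
    """Nominal stored energy: (mAh / 1000) * V gives Wh, times 3600 gives J."""
    return capacity_mah / 1000.0 * voltage_v * 3600.0


def average_power_mw(energy_j: float, hours: float) -> float:
    """Average power draw that empties `energy_j` in `hours`, in milliwatts."""
    return energy_j / (hours * 3600.0) * 1000.0


energy = battery_energy_joules(100.0, 3.7)  # about 1332 J
power = average_power_mw(energy, 25.0)      # about 14.8 mW
print(f"{energy:.0f} J stored, {power:.1f} mW average draw over 25 h")
```

An average draw of roughly 14.8 mW covers the whole system (acquisition, processing, and any wireless link), not only the 0.45 mJ spent on each inference.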

Train-On-Request: An On-Device Continual Learning Workflow for Adaptive Real-World Brain Machine Interfaces

Sep 13, 2024

Abstract:Brain-machine interfaces (BMIs) are expanding beyond clinical settings thanks to advances in hardware and algorithms. However, they still face challenges in user-friendliness and signal variability. Classification models need periodic adaptation for real-life use, making an optimal re-training strategy essential to maximize user acceptance and maintain high performance. We propose TOR, a train-on-request workflow that enables user-specific model adaptation to novel conditions, addressing signal variability over time. Using continual learning, TOR preserves knowledge across sessions and mitigates inter-session variability. With TOR, users can refine the model on demand through on-device learning (ODL), improving accuracy as conditions change. We evaluate the proposed methodology on a motor-movement dataset recorded with a non-stigmatizing wearable BMI headband, achieving up to 92% accuracy and a re-calibration time as low as 1.6 minutes, a 46% reduction compared to a naive transfer learning workflow. We additionally demonstrate that TOR is suitable for ODL in extreme edge settings by deploying the training procedure on a RISC-V ultra-low-power SoC (GAP9), resulting in 21.6 ms of latency and 1 mJ of energy consumption per training step. To the best of our knowledge, this work is the first demonstration of an online, energy-efficient, dynamic adaptation of a BMI model to the intrinsic variability of EEG signals in real-time settings.

A Spiking Neural Network Decoder for Implantable Brain Machine Interfaces and its Sparsity-aware Deployment on RISC-V Microcontrollers

May 03, 2024

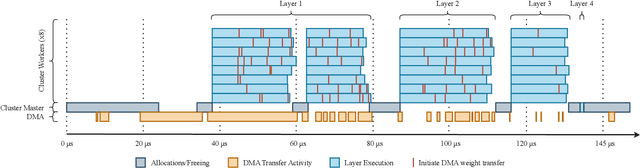

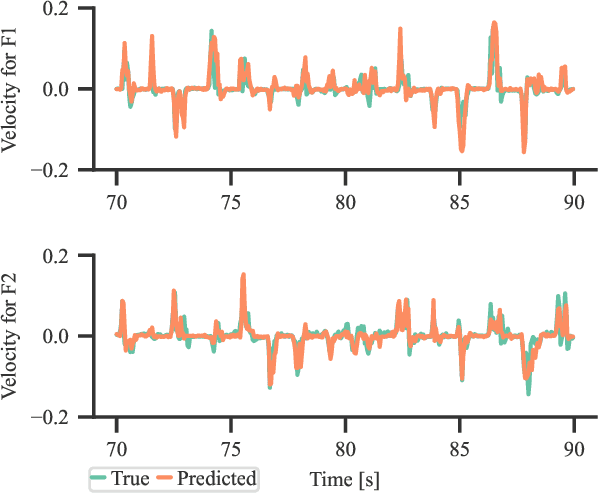

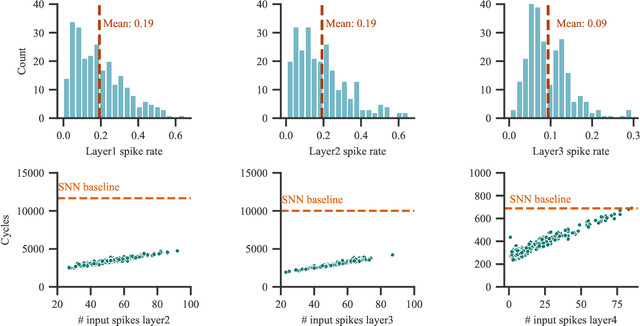

Abstract:Implantable brain-machine interfaces (BMIs) are promising for motor rehabilitation and mobility augmentation, and they demand accurate and energy-efficient algorithms. In this paper, we propose a novel spiking neural network (SNN) decoder for regression tasks for implantable BMIs. The SNN is trained with enhanced spatio-temporal backpropagation to fully leverage its capability to handle temporal problems. The proposed SNN decoder outperforms the state-of-the-art Kalman filter and artificial neural network (ANN) decoders in offline finger velocity decoding tasks. The decoder is deployed on a RISC-V-based hardware platform and optimized to exploit sparsity. The proposed implementation has an average power consumption of 0.50 mW in a duty-cycled mode. When conducting continuous inference without duty-cycling, it consumes 1.88 µJ per inference, which is 5.5x less than the baseline ANN. Additionally, the average decoding latency is 0.12 ms for each inference, which is 5.7x faster than the ANN implementation.

SzCORE: A Seizure Community Open-source Research Evaluation framework for the validation of EEG-based automated seizure detection algorithms

Feb 23, 2024

Abstract:The need for high-quality automated seizure detection algorithms based on electroencephalography (EEG) becomes ever more pressing with the increasing use of ambulatory and long-term EEG monitoring. Heterogeneity in validation methods of these algorithms influences the reported results and makes comprehensive evaluation and comparison challenging. This heterogeneity concerns in particular the choice of datasets, evaluation methodologies, and performance metrics. In this paper, we propose a unified framework designed to establish standardization in the validation of EEG-based seizure detection algorithms. Based on existing guidelines and recommendations, the framework introduces a set of recommendations and standards related to datasets, file formats, EEG data input content, seizure annotation input and output, cross-validation strategies, and performance metrics. We also propose the 10-20 seizure detection benchmark, a machine-learning benchmark based on public datasets converted to a standardized format. This benchmark defines the machine-learning task as well as reporting metrics. We illustrate the use of the benchmark by evaluating a set of existing seizure detection algorithms. The SzCORE (Seizure Community Open-source Research Evaluation) framework and benchmark are made publicly available along with an open-source software library to facilitate research use, while enabling rigorous evaluation of the clinical significance of the algorithms, fostering a collective effort to more optimally detect seizures to improve the lives of people with epilepsy.

Enhancing Performance, Calibration Time and Efficiency in Brain-Machine Interfaces through Transfer Learning and Wearable EEG Technology

Sep 14, 2023

Abstract:Brain-machine interfaces (BMIs) have emerged as a transformative force in assistive technologies, empowering individuals with motor impairments by enabling device control and facilitating functional recovery. However, the persistent challenge of inter-session variability poses a significant hurdle, requiring time-consuming calibration at every new use. Compounding this issue, the low comfort level of current devices further restricts their usage. To address these challenges, we propose a comprehensive solution that combines a tiny CNN-based Transfer Learning (TL) approach with a comfortable, wearable EEG headband. The novel wearable EEG device features soft dry electrodes placed on the headband and is capable of on-board processing. We acquire multiple sessions of motor-movement EEG data and achieve up to 96% inter-session accuracy using TL, greatly reducing the calibration time and improving usability. By executing the inference on the edge every 100 ms, the system is estimated to achieve 30 h of battery life. The comfortable BMI setup with tiny CNN and TL paves the way to future on-device continual learning, essential for tackling inter-session variability and improving usability.

EpiDeNet: An Energy-Efficient Approach to Seizure Detection for Embedded Systems

Aug 28, 2023

Abstract:Epilepsy is a prevalent neurological disorder that affects millions of individuals globally, and continuous monitoring coupled with automated seizure detection is necessary for effective patient treatment. To enable long-term care in daily-life conditions, comfortable and smart wearable devices with long battery life are required, which in turn demand resource-constrained and energy-efficient computing solutions. In this context, the development of machine learning algorithms for seizure detection faces the challenge of heavily imbalanced datasets. This paper introduces EpiDeNet, a new lightweight seizure detection network, and Sensitivity-Specificity Weighted Cross-Entropy (SSWCE), a new loss function that incorporates sensitivity and specificity, to address the challenge of heavily imbalanced datasets. The proposed EpiDeNet-SSWCE approach successfully detects 91.16% and 92.00% of seizure events on two different datasets (CHB-MIT and PEDESITE, respectively), with only four EEG channels. A three-window majority voting-based smoothing scheme combined with the SSWCE loss achieves a 3x reduction of false positives to 1.18 FP/h. EpiDeNet is well suited for implementation on low-power embedded platforms, and we evaluate its performance on two ARM Cortex-based platforms (M4F/M7) and two parallel ultra-low power (PULP) systems (GAP8, GAP9). The most efficient implementation (GAP9) achieves an energy efficiency of 40 GMAC/s/W, with an energy consumption per inference of only 0.051 mJ at high performance (726.46 MMAC/s), outperforming the best ARM Cortex-based solutions by approximately 160x in energy efficiency. The EpiDeNet-SSWCE method demonstrates effective and accurate seizure detection performance on heavily imbalanced datasets, while being suited for implementation on energy-constrained platforms.
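
The three-window majority voting step can be sketched as a simple post-processing filter over per-window binary predictions. This is a minimal illustration, not the paper's code: it assumes a causal scheme (the current window plus the two before it) and a strict majority of the full window size, whereas the abstract does not say whether the vote is causal or centered. The function name and example sequence are hypothetical.

```python
from typing import List


def majority_vote_smooth(predictions: List[int], window: int = 3) -> List[int]:
    """Smooth binary predictions with a causal majority vote.

    Output i is 1 only if more than half of `window` votes are positive,
    counting predictions i-window+1 .. i. Missing early predictions count
    as negative, so the first outputs cannot fire on a single positive.
    """
    smoothed = []
    for i in range(len(predictions)):
        start = max(0, i - window + 1)
        recent = predictions[start:i + 1]
        smoothed.append(1 if sum(recent) * 2 > window else 0)
    return smoothed


raw = [0, 1, 0, 0, 1, 1, 1, 0, 0]
print(majority_vote_smooth(raw))  # [0, 0, 0, 0, 0, 1, 1, 1, 0]
```

The isolated positive at index 1 is suppressed, while the sustained run starting at index 4 is kept with a delay of one window. That trade of a short detection delay for fewer isolated false alarms is how this kind of smoothing lowers the false-positive rate.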

Exploring Automatic Gym Workouts Recognition Locally On Wearable Resource-Constrained Devices

Jan 13, 2023

Abstract:Automatic gym activity recognition on energy- and resource-constrained wearable devices removes the need for human interaction, such as soft-touch tapping and swiping, during intense gym sessions. This work presents a tiny and highly accurate residual convolutional neural network that runs on milliwatt microcontrollers for automatic workout classification. We evaluated the inference performance of the deep model with quantization on three resource-constrained devices: two microcontrollers with ARM Cortex-M4 and Cortex-M7 cores from STMicroelectronics, and a GAP8 system on chip, which is an open-source, multi-core RISC-V computing platform from GreenWaves Technologies. Experimental results show an accuracy of up to 90.4% for the recognition of eleven workouts with full-precision inference. The paper also presents the performance trade-offs of the resource-constrained system. While keeping the recognition accuracy (88.1%) with minimal loss, each inference takes only 3.2 ms on GAP8, benefiting from the 8 RISC-V cluster cores. We measured an execution time that is 18.9x and 6.5x faster than on the Cortex-M4 and Cortex-M7 cores, respectively, showing the feasibility of real-time on-board workout recognition based on the described data set with a 20 Hz sampling rate. The energy consumed for each inference on GAP8 is 0.41 mJ, compared to 5.17 mJ on Cortex-M4 and 8.07 mJ on Cortex-M7 at the maximum clock frequency, which can lead to longer battery life when the system is battery-operated. We also introduce an open data set composed of fifty sessions of eleven gym workouts collected from ten subjects that is publicly available.
