Simone Benatti

Wearable and Ultra-Low-Power Fusion of EMG and A-Mode US for Hand-Wrist Kinematic Tracking

Oct 02, 2025

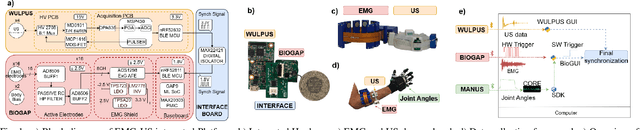

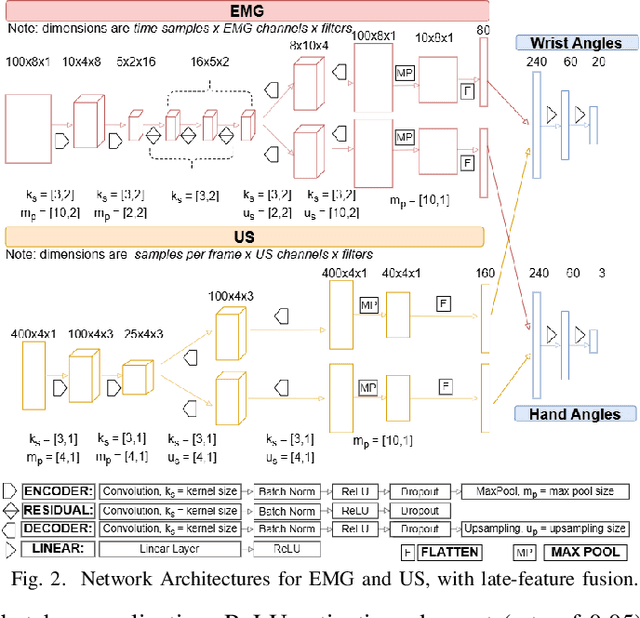

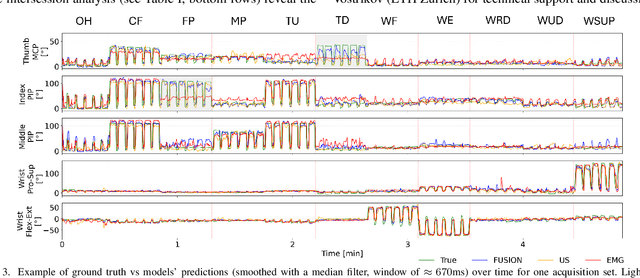

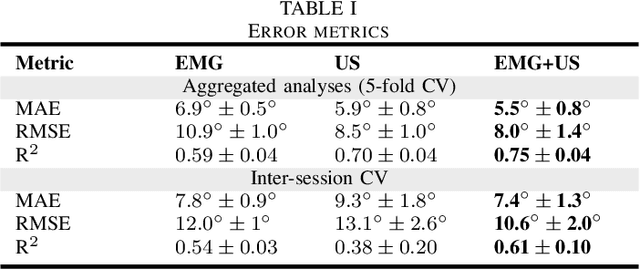

Abstract: Hand gesture recognition based on biosignals has shown strong potential for developing intuitive human-machine interaction strategies that closely mimic natural human behavior. In particular, sensor fusion approaches have gained attention for combining complementary information and overcoming the limitations of individual sensing modalities, thereby enabling more robust and reliable systems. Among them, the fusion of surface electromyography (EMG) and A-mode ultrasound (US) is very promising. However, prior solutions rely on power-hungry platforms unsuitable for multi-day use and are limited to discrete gesture classification. In this work, we present an ultra-low-power (sub-50 mW) system for concurrent acquisition of 8-channel EMG and 4-channel A-mode US signals, integrating two state-of-the-art platforms into fully wearable, dry-contact armbands. We propose a framework for continuous tracking of 23 degrees of freedom (DoFs), 20 for the hand and 3 for the wrist, using a kinematic glove for ground-truth labeling. Our method employs lightweight encoder-decoder architectures with multi-task learning to simultaneously estimate hand and wrist joint angles. Experimental results under realistic sensor repositioning conditions demonstrate that EMG-US fusion achieves a root mean squared error of $10.6^\circ\pm2.0^\circ$, compared to $12.0^\circ\pm1^\circ$ for EMG and $13.1^\circ\pm2.6^\circ$ for US, and an R$^2$ score of $0.61\pm0.1$, compared to $0.54\pm0.03$ for EMG and $0.38\pm0.20$ for US.
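The two figures of merit quoted above (root mean squared error in degrees and the R$^2$ score) can be illustrated with a minimal sketch. The joint-angle values below are made up for illustration, and the function names `rmse_deg` and `r2_score` are hypothetical; how the paper aggregates the metrics across the 23 DoFs and across subjects is not stated in the abstract.

```python
import math

def rmse_deg(y_true, y_pred):
    """Root mean squared error between two equal-length angle sequences (degrees)."""
    n = len(y_true)
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / n)

def r2_score(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_t = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_t) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot

# Hypothetical glove readings for one joint and a model's estimates (degrees).
glove = [0.0, 10.0, 20.0, 30.0]
estimate = [2.0, 8.0, 22.0, 28.0]

print(rmse_deg(glove, estimate))  # error magnitude in degrees
print(r2_score(glove, estimate))  # fraction of variance explained
```

RMSE reports the typical angular error, while R$^2$ normalizes the error by the variance of the ground truth, which is why a modality can have a similar RMSE but a noticeably lower R$^2$.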

PhysioWave: A Multi-Scale Wavelet-Transformer for Physiological Signal Representation

Jun 12, 2025

Abstract: Physiological signals are often corrupted by motion artifacts, baseline drift, and other low-SNR disturbances, which pose significant challenges for analysis. Additionally, these signals exhibit strong non-stationarity, with sharp peaks and abrupt changes that evolve continuously, making them difficult to represent using traditional time-domain or filtering methods. To address these issues, a novel wavelet-based approach for physiological signal analysis is presented, aiming to capture multi-scale time-frequency features in various physiological signals. Leveraging this technique, two large-scale pretrained models specific to EMG and ECG are introduced for the first time, achieving superior performance and setting new baselines in downstream tasks. Additionally, a unified multi-modal framework is constructed by integrating a pretrained EEG model, where each modality is guided through its dedicated branch and fused via learnable weighted fusion. This design effectively addresses challenges such as low signal-to-noise ratio, high inter-subject variability, and device mismatch, outperforming existing methods on multi-modal tasks. The proposed wavelet-based architecture lays a solid foundation for analysis of diverse physiological signals, while the multi-modal design points to next-generation physiological signal processing with potential impact on wearable health monitoring, clinical diagnostics, and broader biomedical applications.
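One common way to realize "learnable weighted fusion" is to keep one scalar logit per modality, normalize the logits with a softmax, and take the weighted sum of the per-branch embeddings. The sketch below assumes that formulation; the abstract does not specify the exact fusion rule, and the names `softmax`, `weighted_fusion`, and the toy embeddings are hypothetical.

```python
import math

def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def weighted_fusion(embeddings, logits):
    """Fuse equal-length per-modality embeddings with softmax-normalized weights.

    In a trained model the logits would be learnable parameters; here they
    are plain numbers supplied by the caller (an assumption for illustration).
    """
    weights = softmax(logits)
    dim = len(embeddings[0])
    return [sum(w * emb[i] for w, emb in zip(weights, embeddings)) for i in range(dim)]

# Hypothetical 3-dimensional embeddings from the EMG, ECG, and EEG branches.
emg, ecg, eeg = [1.0, 0.0, 2.0], [0.0, 1.0, 2.0], [3.0, 3.0, 2.0]

# Equal logits give equal weights, so the result is the plain average.
fused = weighted_fusion([emg, ecg, eeg], logits=[0.0, 0.0, 0.0])
print(fused)
```

Because the weights sum to one, a branch whose signal is unreliable can be down-weighted during training without changing the scale of the fused representation.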

WaveFormer: A Lightweight Transformer Model for sEMG-based Gesture Recognition

Jun 12, 2025

Abstract: Human-machine interaction, particularly in prosthetic and robotic control, has seen progress with gesture recognition via surface electromyographic (sEMG) signals. However, classifying similar gestures that produce nearly identical muscle signals remains a challenge, often reducing classification accuracy. Traditional deep learning models for sEMG gesture recognition are large and computationally expensive, limiting their deployment on resource-constrained embedded systems. In this work, we propose WaveFormer, a lightweight transformer-based architecture tailored for sEMG gesture recognition. Our model integrates time-domain and frequency-domain features through a novel learnable wavelet transform, enhancing feature extraction. In particular, the WaveletConv module, a multi-level wavelet decomposition layer with depthwise separable convolution, ensures both efficiency and compactness. With just 3.1 million parameters, WaveFormer achieves 95% classification accuracy on the EPN612 dataset, outperforming larger models. Furthermore, when profiled on a laptop equipped with an Intel CPU, INT8 quantization achieves real-time deployment with a 6.75 ms inference latency.
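To make "multi-level wavelet decomposition" concrete, the sketch below uses a fixed Haar wavelet as a stand-in. This is not the WaveletConv module: the paper's filters are learnable and combined with depthwise separable convolution, whereas the filters here are hard-coded. The function names are hypothetical, and the input length is assumed to be divisible by $2^{\text{levels}}$.

```python
import math

def haar_step(signal):
    """One level of the Haar DWT: returns (approximation, detail) at half length."""
    s = 1.0 / math.sqrt(2.0)
    approx = [(signal[i] + signal[i + 1]) * s for i in range(0, len(signal) - 1, 2)]
    detail = [(signal[i] - signal[i + 1]) * s for i in range(0, len(signal) - 1, 2)]
    return approx, detail

def haar_decompose(signal, levels):
    """Multi-level decomposition: returns [detail_1, ..., detail_L, approx_L].

    Each level splits the current approximation into a coarser approximation
    (low-pass) and a detail band (high-pass), halving the length each time.
    """
    bands = []
    approx = list(signal)
    for _ in range(levels):
        approx, detail = haar_step(approx)
        bands.append(detail)
    bands.append(approx)
    return bands

# Toy single-channel signal, length 8, decomposed over 2 levels.
x = [4.0, 4.0, 2.0, 2.0, 0.0, 0.0, 6.0, 6.0]
bands = haar_decompose(x, levels=2)
for band in bands:
    print(band)
```

The orthonormal scaling by $1/\sqrt{2}$ preserves signal energy across bands, which is a convenient sanity check when replacing the fixed filters with learned ones.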

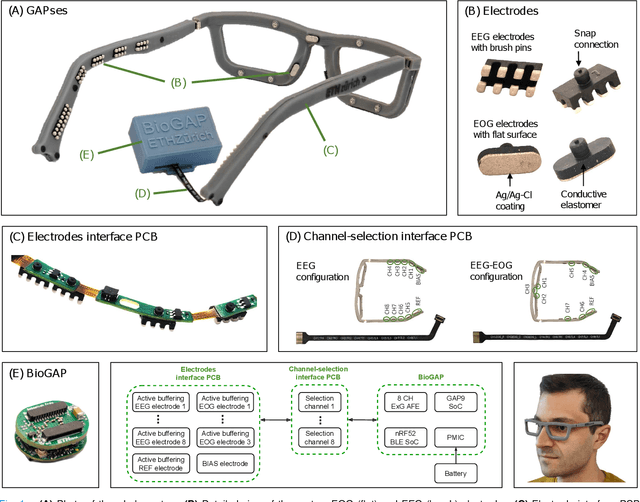

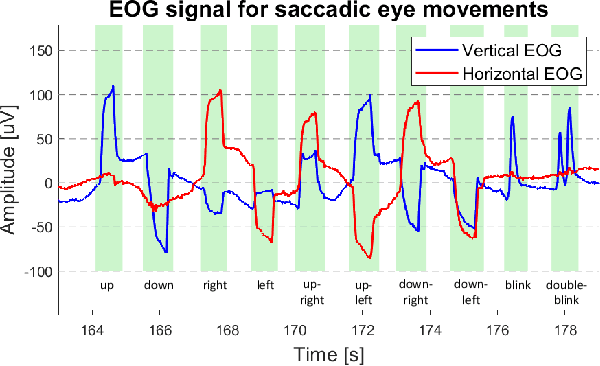

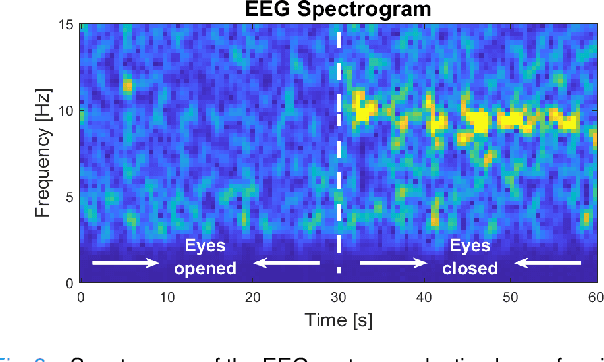

GAPses: Versatile smart glasses for comfortable and fully-dry acquisition and parallel ultra-low-power processing of EEG and EOG

Jun 12, 2024

Abstract: Recent advancements in head-mounted wearable technology are revolutionizing the field of biopotential measurement, but the integration of these technologies into practical, user-friendly devices remains challenging due to issues with design intrusiveness, comfort, and data privacy. To address these challenges, this paper presents GAPSES, a novel smart glasses platform designed for unobtrusive, comfortable, and secure acquisition and processing of electroencephalography (EEG) and electrooculography (EOG) signals. We introduce a direct electrode-electronics interface with custom fully dry soft electrodes to enhance comfort for long wear. An integrated parallel ultra-low-power RISC-V processor (GAP9, Greenwaves Technologies) processes data at the edge, thereby eliminating the need for continuous data streaming through a wireless link, enhancing privacy, and increasing system reliability in adverse channel conditions. We demonstrate the broad applicability of the designed prototype through validation in a number of EEG-based interaction tasks, including alpha waves, steady-state visual evoked potential analysis, and motor movement classification. Furthermore, we demonstrate an EEG-based biometric subject recognition task, where we reach a sensitivity and specificity of 98.87% and 99.86% respectively, with only 8 EEG channels and an energy consumption per inference on the edge as low as 121 µJ. Moreover, in an EOG-based eye movement classification task, we reach an accuracy of 96.68% on 11 classes, resulting in an information transfer rate of 94.78 bit/min, which can be further increased to 161.43 bit/min by reducing the accuracy to 81.43%. The deployed implementation has an energy consumption of 24 µJ per inference and a total system power of only 16.28 mW, allowing for continuous operation of more than 12 h with a small 75 mAh battery.
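The trade-off between accuracy and information transfer rate (ITR) mentioned above can be explored with the widely used Wolpaw definition of ITR. The sketch below assumes that definition; the abstract does not say which ITR formula or which decision window the authors used, so `seconds_per_selection` is a hypothetical parameter and the value passed to it is a placeholder.

```python
import math

def wolpaw_bits_per_selection(n_classes, accuracy):
    """Bits conveyed per selection under the Wolpaw ITR definition.

    B = log2(N) + P*log2(P) + (1 - P)*log2((1 - P) / (N - 1))
    """
    n, p = n_classes, accuracy
    bits = math.log2(n)
    if p > 0.0:
        bits += p * math.log2(p)
    if p < 1.0:
        bits += (1.0 - p) * math.log2((1.0 - p) / (n - 1))
    return bits

def itr_bits_per_min(n_classes, accuracy, seconds_per_selection):
    """ITR in bit/min for a given time per selection (an assumed parameter)."""
    return wolpaw_bits_per_selection(n_classes, accuracy) * 60.0 / seconds_per_selection

# 11 classes at the two accuracy levels quoted in the abstract.
high_acc_bits = wolpaw_bits_per_selection(11, 0.9668)
low_acc_bits = wolpaw_bits_per_selection(11, 0.8143)
print(high_acc_bits, low_acc_bits)

# Placeholder decision time; the real value is not given in the abstract.
print(itr_bits_per_min(11, 0.9668, seconds_per_selection=1.0))
```

Under this definition each selection carries fewer bits at the lower accuracy, so a higher bit rate at 81.43% accuracy is only possible if selections are made more often, for example with a shorter decision window.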

A Heterogeneous RISC-V based SoC for Secure Nano-UAV Navigation

Jan 07, 2024

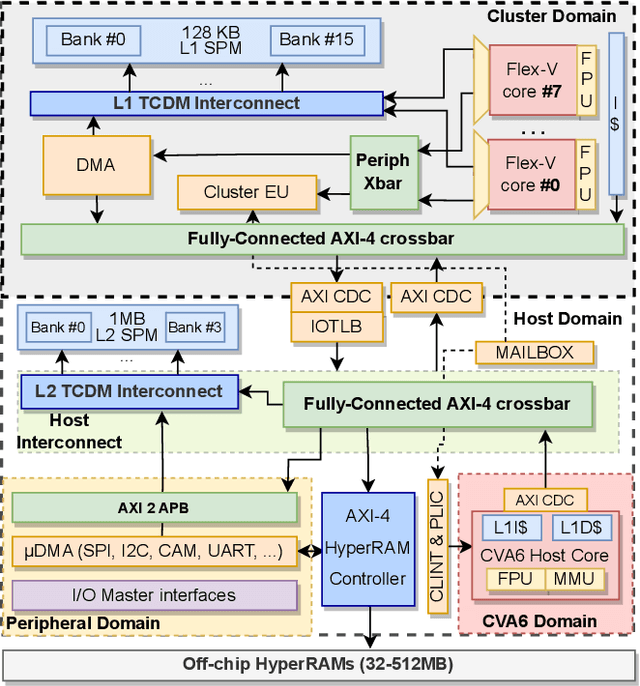

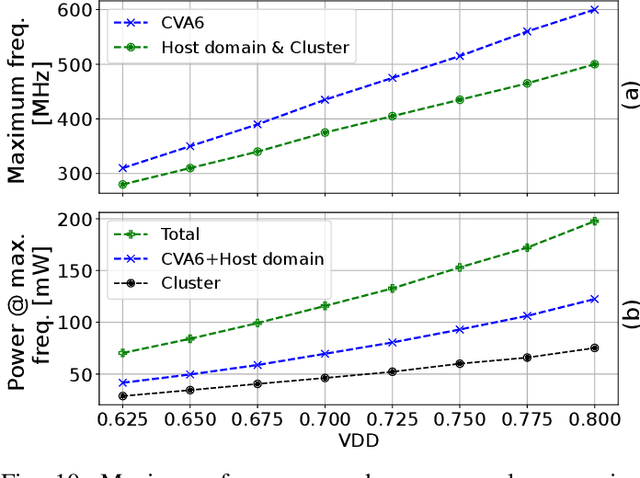

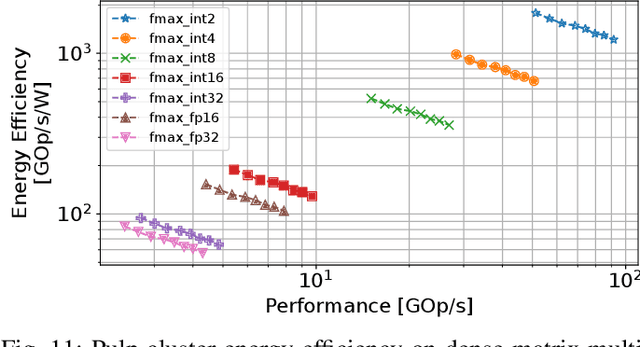

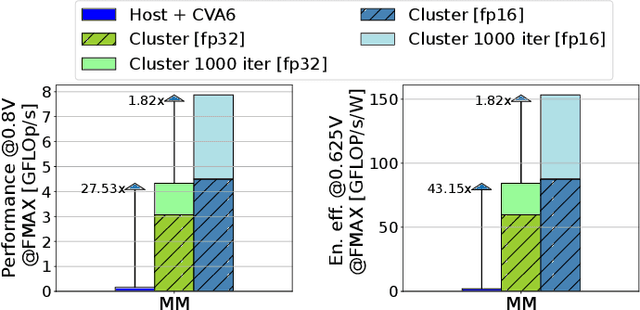

Abstract: The rapid advancement of energy-efficient parallel ultra-low-power (ULP) microcontroller units (MCUs) is enabling the development of autonomous nano-sized unmanned aerial vehicles (nano-UAVs). These sub-10 cm drones represent the next generation of unobtrusive robotic helpers and ubiquitous smart sensors. However, nano-UAVs face significant power and payload constraints while requiring advanced computing capabilities akin to standard drones, including real-time Machine Learning (ML) performance and the safe co-existence of general-purpose and real-time OSs. Although some advanced parallel ULP MCUs offer the necessary ML computing capabilities within the prescribed power limits, they rely on small main memories (<1 MB) and microcontroller-class CPUs with no virtualization or security features, and hence only support simple bare-metal runtimes. In this work, we present Shaheen, a 9 mm$^2$, 200 mW SoC implemented in 22 nm FDX technology. Unlike state-of-the-art MCUs, Shaheen integrates a Linux-capable RV64 core, compliant with the v1.0 ratified Hypervisor extension and equipped with timing channel protection, along with a low-cost and low-power memory controller exposing up to 512 MB of off-chip low-cost low-power HyperRAM directly to the CPU. At the same time, it integrates a fully programmable energy- and area-efficient multi-core cluster of RV32 cores optimized for general-purpose DSP as well as reduced- and mixed-precision ML. To the best of the authors' knowledge, it is the first silicon prototype of a ULP SoC coupling the RV64 and RV32 cores in a heterogeneous host+accelerator architecture fully based on the RISC-V ISA. We demonstrate the capabilities of the proposed SoC on a wide range of benchmarks relevant to nano-UAV applications. The cluster can deliver up to 90 GOp/s and up to 1.8 TOp/s/W on 2-bit integer kernels and up to 7.9 GFLOp/s and up to 150 GFLOp/s/W on 16-bit FP kernels.

A Wearable Ultra-Low-Power sEMG-Triggered Ultrasound System for Long-Term Muscle Activity Monitoring

Sep 14, 2023

Abstract: Surface electromyography (sEMG) is a well-established approach to monitor muscular activity on wearable and resource-constrained devices. However, when measuring deeper muscles, its low signal-to-noise ratio (SNR), high signal attenuation, and crosstalk degrade sensing performance. Ultrasound (US) complements sEMG effectively with its higher SNR at high penetration depths. In fact, combining US and sEMG improves the accuracy of muscle dynamic assessment, compared to using only one modality. However, the power envelope of US hardware is considerably higher than that of sEMG, thus inflating energy consumption and reducing the battery life. This work proposes a wearable solution that integrates both modalities and utilizes an EMG-driven wake-up approach to achieve ultra-low power consumption as needed for wearable long-term monitoring. We integrate two wearable state-of-the-art (SoA) US and ExG biosignal acquisition devices to acquire time-synchronized measurements of the short head of the biceps. To minimize power consumption, the US probe is kept in a sleep state when there is no muscle activity. sEMG data are processed on the probe (filtering, envelope extraction and thresholding) to identify muscle activity and generate a trigger to wake up the US counterpart. The US acquisition starts before muscle fascicle displacement thanks to a triggering time faster than the electromechanical delay (30-100 ms) between the neuromuscular junction stimulation and the muscle contraction. Assuming a muscle contraction of 200 ms at a contraction rate of 1 Hz, the proposed approach enables more than 59% energy saving (with a full-system average power consumption of 12.2 mW) as compared to operating both sEMG and US continuously.
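The wake-up chain described above (envelope extraction followed by thresholding) can be sketched as follows. This is a simplified illustration under stated assumptions: the band-pass filtering stage is omitted, the envelope is a rectified causal moving average, and the window length, threshold, and function names are hypothetical rather than taken from the paper.

```python
def moving_average_envelope(samples, window):
    """Rectify the signal, then smooth it with a causal moving average."""
    rectified = [abs(s) for s in samples]
    envelope = []
    running = 0.0
    for i, value in enumerate(rectified):
        running += value
        if i >= window:
            running -= rectified[i - window]  # drop the sample leaving the window
        envelope.append(running / min(i + 1, window))
    return envelope

def first_trigger_index(samples, window, threshold):
    """Index of the first sample whose envelope exceeds the threshold, else None."""
    for i, level in enumerate(moving_average_envelope(samples, window)):
        if level > threshold:
            return i
    return None

def active_duty_cycle(contraction_s, rate_hz):
    """Fraction of time the muscle is contracting."""
    return contraction_s * rate_hz

# Toy sEMG trace: 10 quiet samples followed by an alternating burst.
emg = [0.0] * 10 + [1.0, -1.0] * 5
print(first_trigger_index(emg, window=4, threshold=0.5))

# The scenario in the abstract: 200 ms contractions at 1 Hz.
print(active_duty_cycle(0.2, 1.0))
```

The trigger latency grows with the averaging window, so the window has to stay short enough for the wake-up to complete within the 30-100 ms electromechanical delay quoted in the abstract.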

EpiDeNet: An Energy-Efficient Approach to Seizure Detection for Embedded Systems

Aug 28, 2023

Abstract: Epilepsy is a prevalent neurological disorder that affects millions of individuals globally, and continuous monitoring coupled with automated seizure detection is a necessity for effective patient treatment. To enable long-term care in daily-life conditions, comfortable and smart wearable devices with long battery life are required, which in turn set the demand for resource-constrained and energy-efficient computing solutions. In this context, the development of machine learning algorithms for seizure detection faces the challenge of heavily imbalanced datasets. This paper introduces EpiDeNet, a new lightweight seizure detection network, and Sensitivity-Specificity Weighted Cross-Entropy (SSWCE), a new loss function that incorporates sensitivity and specificity, to address the challenge of heavily imbalanced datasets. The proposed EpiDeNet-SSWCE approach demonstrates the successful detection of 91.16% and 92.00% seizure events on two different datasets (CHB-MIT and PEDESITE, respectively), with only four EEG channels. A three-window majority voting-based smoothing scheme combined with the SSWCE loss achieves 3x reduction of false positives to 1.18 FP/h. EpiDeNet is well suited for implementation on low-power embedded platforms, and we evaluate its performance on two ARM Cortex-based platforms (M4F/M7) and two parallel ultra-low power (PULP) systems (GAP8, GAP9). The most efficient implementation (GAP9) achieves an energy efficiency of 40 GMAC/s/W, with an energy consumption per inference of only 0.051 mJ at high performance (726.46 MMAC/s), outperforming the best ARM Cortex-based solutions by approximately 160x in energy efficiency. The EpiDeNet-SSWCE method demonstrates effective and accurate seizure detection performance on heavily imbalanced datasets, while being suited for implementation on energy-constrained platforms.
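A three-window majority vote can be sketched as a causal filter over the per-window binary predictions. The version below assumes the vote is taken over the current window and the two preceding ones, with missing history counted as "no seizure"; the abstract does not state whether the paper's scheme is causal or centered, and the function name is hypothetical.

```python
def majority_vote_smooth(predictions, k=3):
    """Smooth binary window-level predictions with a causal k-window majority vote.

    A window is flagged only if more than half of the last k predictions
    (including the current one) are positive. Windows before the start of the
    recording count as negative, so the first k // 2 outputs are always 0.
    """
    smoothed = []
    for i in range(len(predictions)):
        recent = predictions[max(0, i - k + 1): i + 1]
        smoothed.append(1 if 2 * sum(recent) > k else 0)
    return smoothed

# Toy classifier output: one isolated false alarm, then a sustained event.
raw = [0, 1, 0, 0, 1, 1, 0, 1, 1, 1]
smoothed = majority_vote_smooth(raw)
print(smoothed)
```

Isolated single-window positives are suppressed, which reduces false alarms at the cost of delaying detection by one window.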

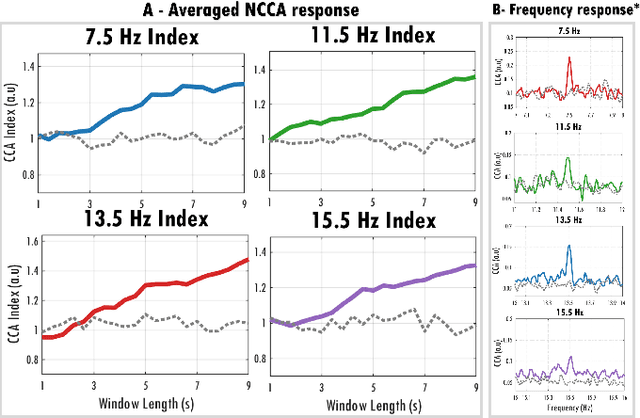

Long-term stable Electromyography classification using Canonical Correlation Analysis

Jan 23, 2023

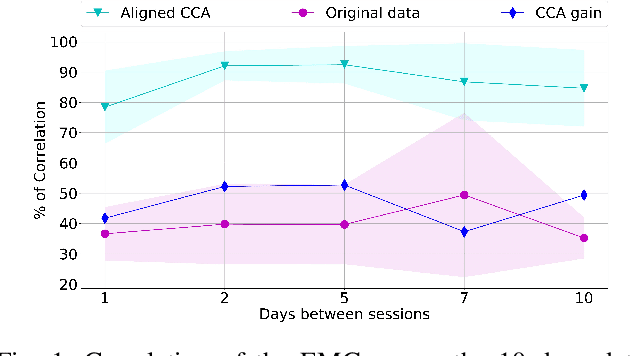

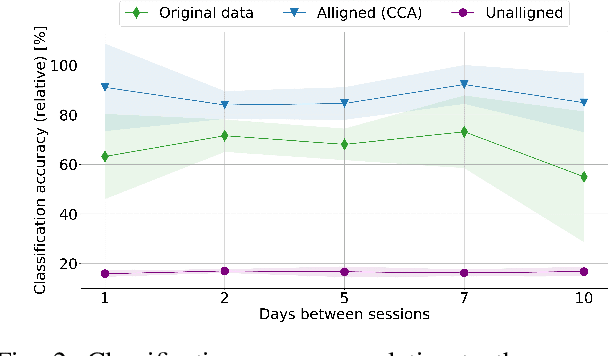

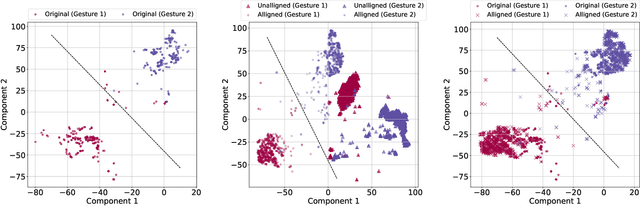

Abstract: Discrimination of hand gestures based on the decoding of surface electromyography (sEMG) signals is a well-established approach for controlling prosthetic devices and for Human-Machine Interfaces (HMI). However, despite the promising results achieved by this approach in well-controlled experimental conditions, its deployment in long-term real-world application scenarios is still hindered by several challenges. One of the most critical challenges is maintaining high EMG data classification performance across multiple days without retraining the decoding system. The drop in performance is mostly due to the high EMG variability caused by electrode shift, muscle artifacts, fatigue, user adaptation, or skin-electrode interfacing issues. Here we propose a novel statistical method based on canonical correlation analysis (CCA) that stabilizes EMG classification performance across multiple days for long-term control of prosthetic devices. We show how CCA can dramatically decrease the performance drop of standard classifiers observed across days, by maximizing the correlation among multiple-day acquisition data sets. Our results show how the performance of a classifier trained on EMG data acquired only on the first day of the experiment maintains 90% relative accuracy across multiple days, compensating for the EMG data variability that occurs over long-term periods, using the CCA transformation on data obtained from a small number of gestures. This approach eliminates the need for large data sets and multiple or periodic training sessions, which currently hamper the usability of conventional pattern-recognition-based approaches.

Energy-Efficient Tree-Based EEG Artifact Detection

Apr 19, 2022

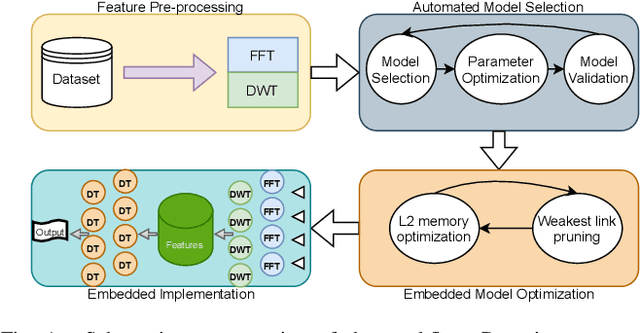

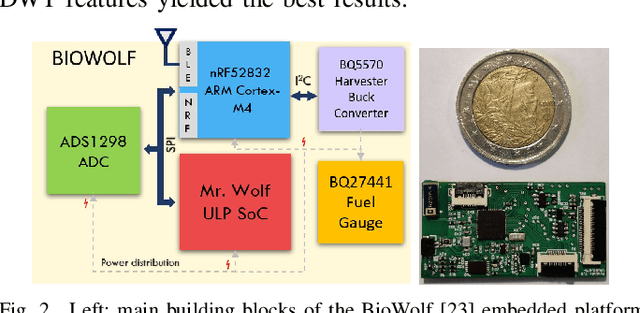

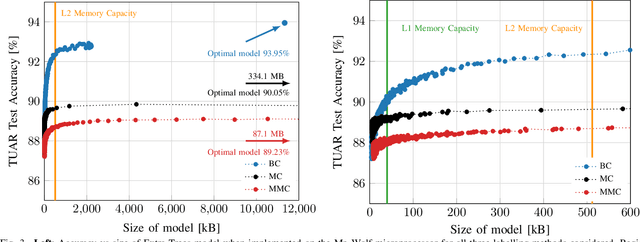

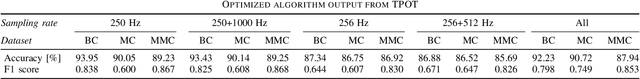

Abstract: In the context of epilepsy monitoring, EEG artifacts are often mistaken for seizures due to their morphological similarity in both amplitude and frequency, making seizure detection systems susceptible to higher false alarm rates. In this work we present the implementation of an artifact detection algorithm based on a minimal number of EEG channels on a parallel ultra-low-power (PULP) embedded platform. The analyses are based on the TUH EEG Artifact Corpus dataset and focus on the temporal electrodes. First, we extract optimal feature models in the frequency domain using an automated machine learning framework, achieving a 93.95% accuracy, with a 0.838 F1 score for a 4 temporal EEG channel setup. The achieved accuracy levels surpass state-of-the-art by nearly 20%. Then, these algorithms are parallelized and optimized for a PULP platform, achieving a 5.21 times improvement in energy efficiency compared to state-of-the-art low-power implementations of artifact detection frameworks. Combining this model with a low-power seizure detection algorithm would allow for 300h of continuous monitoring on a 300 mAh battery in a wearable form factor and power budget. These results pave the way for implementing affordable, wearable, long-term epilepsy monitoring solutions with low false-positive rates and high sensitivity, meeting both patients' and caregivers' requirements.
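Taking the battery figures in the abstract at face value, the implied average current budget follows from dividing capacity by runtime. This is a back-of-the-envelope reading that ignores converter losses and battery derating, neither of which is detailed in the abstract:

$$\bar{I} = \frac{Q}{t} = \frac{300\ \text{mAh}}{300\ \text{h}} = 1\ \text{mA}$$

In other words, the combined artifact and seizure detection pipeline, together with acquisition, would have to average about 1 mA of battery current over the whole monitoring period.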

Robust and Energy-efficient PPG-based Heart-Rate Monitoring

Mar 28, 2022

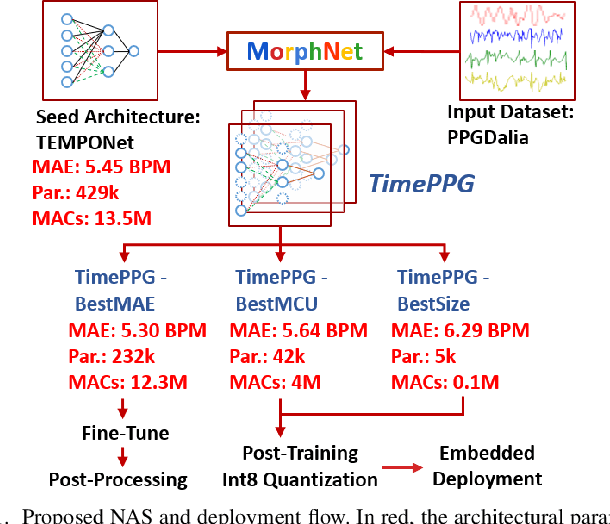

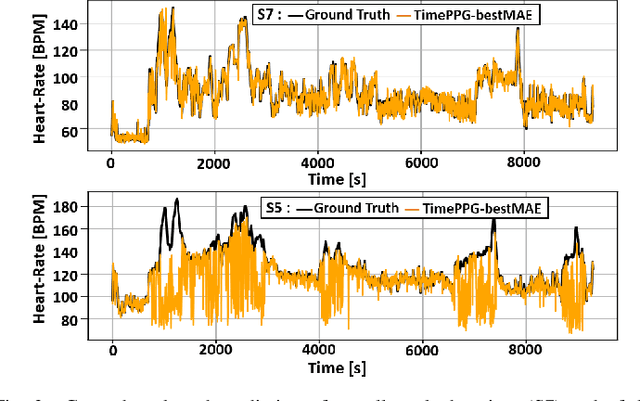

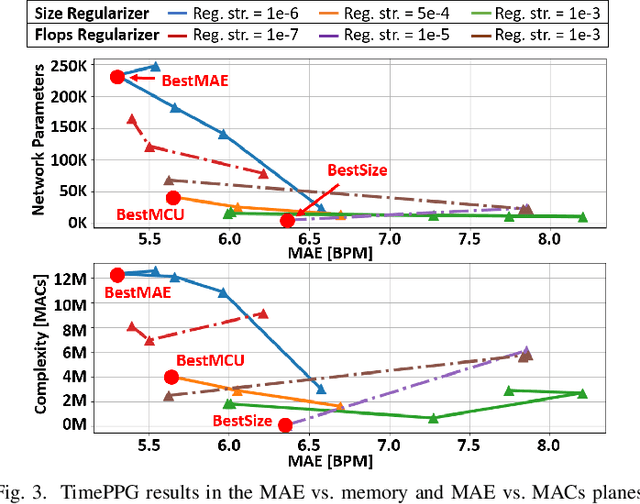

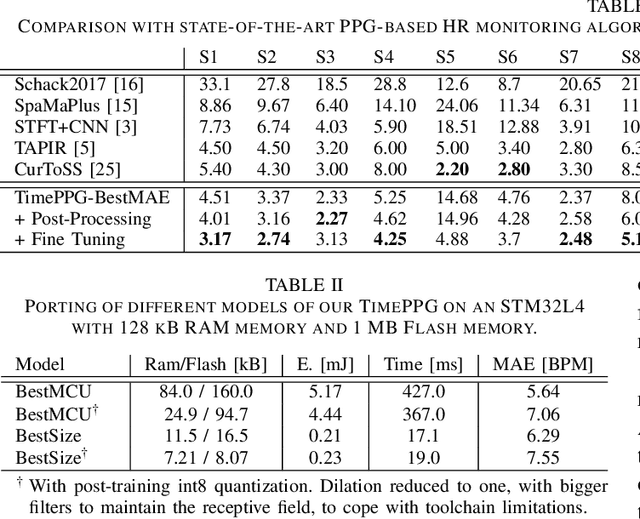

Abstract: A wrist-worn PPG sensor coupled with a lightweight algorithm can run on an MCU to enable non-invasive and comfortable monitoring, but ensuring robust PPG-based heart-rate monitoring in the presence of motion artifacts is still an open challenge. Recent state-of-the-art algorithms combine PPG and inertial signals to mitigate the effect of motion artifacts. However, these approaches suffer from limited generality. Moreover, their deployment on MCU-based edge nodes has not been investigated. In this work, we tackle both the aforementioned problems by proposing the use of hardware-friendly Temporal Convolutional Networks (TCN) for PPG-based heart-rate estimation. Starting from a single "seed" TCN, we leverage an automatic Neural Architecture Search (NAS) approach to derive a rich family of models. Among them, we obtain a TCN that outperforms the previous state-of-the-art on the largest PPG dataset available (PPGDalia), achieving a Mean Absolute Error (MAE) of just 3.84 Beats Per Minute (BPM). Furthermore, we also tested a set of smaller yet still accurate (MAE of 5.64 - 6.29 BPM) networks that can be deployed on a commercial MCU (STM32L4), which require as few as 5k parameters and reach a latency of 17.1 ms while consuming just 0.21 mJ per inference.
