Giacomo Indiveri

Institute of Neuroinformatics, University of Zurich and ETH Zurich

Training slow silicon neurons to control extremely fast robots with spiking reinforcement learning

Jan 29, 2026

Abstract: Air hockey demands split-second decisions at high puck velocities, a challenge we address with a compact network of spiking neurons running on a mixed-signal analog/digital neuromorphic processor. By co-designing hardware and learning algorithms, we train the system to achieve successful puck interactions through reinforcement learning in a remarkably small number of trials. The network leverages fixed random connectivity to capture the task's temporal structure and adopts a local e-prop learning rule in the readout layer to exploit event-driven activity for fast and efficient learning. The result is real-time learning with a setup comprising a computer and the neuromorphic chip in the loop, enabling practical training of spiking neural networks for robotic autonomous systems. This work bridges neuroscience-inspired hardware with real-world robotic control, showing that brain-inspired approaches can tackle fast-paced interaction tasks while supporting always-on learning in intelligent machines.

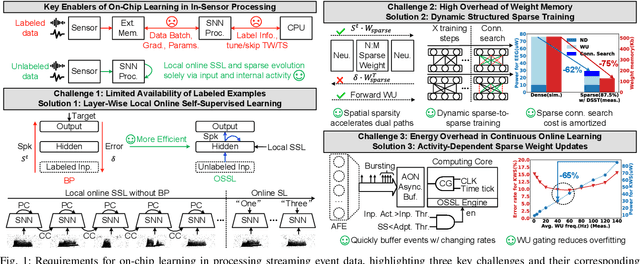

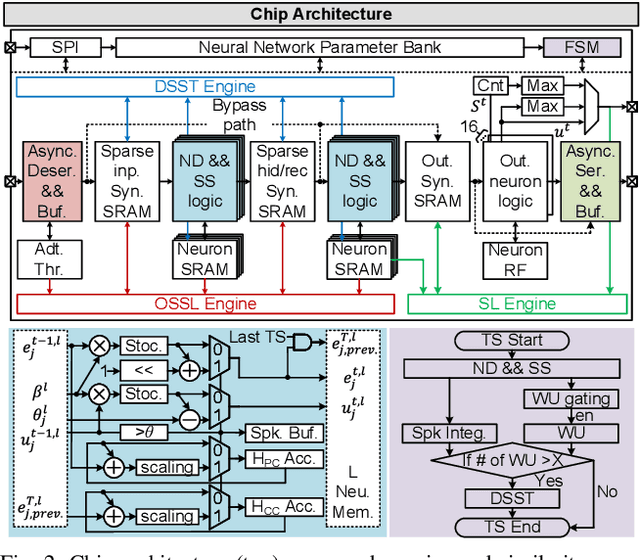

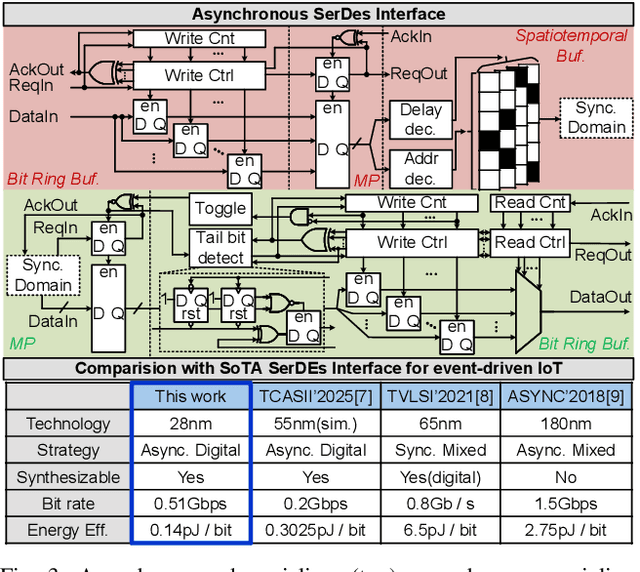

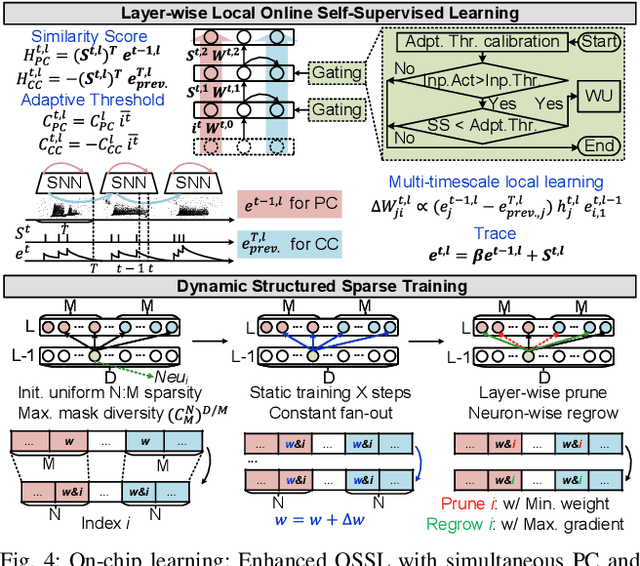

ElfCore: A 28nm Neural Processor Enabling Dynamic Structured Sparse Training and Online Self-Supervised Learning with Activity-Dependent Weight Update

Dec 24, 2025

Abstract: In this paper, we present ElfCore, a 28nm digital spiking neural network processor tailored for event-driven sensory signal processing. ElfCore is the first to efficiently integrate: (1) a local online self-supervised learning engine that enables multi-layer temporal learning without labeled inputs; (2) a dynamic structured sparse training engine that supports high-accuracy sparse-to-sparse learning; and (3) an activity-dependent sparse weight update mechanism that selectively updates weights based solely on input activity and network dynamics. Demonstrated on tasks including gesture recognition, speech, and biomedical signal processing, ElfCore outperforms state-of-the-art solutions with up to 16X lower power consumption, 3.8X reduced on-chip memory requirements, and 5.9X greater network capacity efficiency.

* This paper has been published in the proceedings of the 2025 IEEE European Solid-State Electronics Research Conference (ESSERC)

Algorithm-hardware co-design of neuromorphic networks with dual memory pathways

Dec 11, 2025

Abstract: Spiking neural networks excel at event-driven sensing. Yet maintaining task-relevant context over long timescales, both algorithmically and in hardware, while respecting tight energy and memory budgets, remains a core challenge in the field. We address this challenge through a novel algorithm-hardware co-design effort. At the algorithm level, inspired by the cortical fast-slow organization in the brain, we introduce a neural network with an explicit slow memory pathway that, combined with fast spiking activity, enables a dual memory pathway (DMP) architecture in which each layer maintains a compact low-dimensional state that summarizes recent activity and modulates spiking dynamics. This explicit memory stabilizes learning while preserving event-driven sparsity, achieving competitive accuracy on long-sequence benchmarks with 40-60% fewer parameters than equivalent state-of-the-art spiking neural networks. At the hardware level, we introduce a near-memory-compute architecture that fully leverages the advantages of the DMP architecture by retaining its compact shared state while optimizing dataflow across heterogeneous sparse-spike and dense-memory pathways. We show experimental results that demonstrate more than a 4x increase in throughput and over a 5x improvement in energy efficiency compared with state-of-the-art implementations. Together, these contributions demonstrate that biological principles can guide functional abstractions that are both algorithmically effective and hardware-efficient, establishing a scalable co-design paradigm for real-time neuromorphic computation and learning.

A neuromorphic continuous soil monitoring system for precision irrigation

Sep 17, 2025

Abstract: Sensory processing at the edge requires ultra-low power stand-alone computing technologies. This is particularly true for modern agriculture and precision irrigation systems, which aim to optimize water usage by continuously monitoring key environmental observables using distributed, efficient embedded processing elements. Neuromorphic processing systems are emerging as a promising technology for extreme edge-computing applications that need to run on resource-constrained hardware. As such, they are a very good candidate for implementing efficient water management systems based on data measured from soil and plants across large fields. In this work, we present a fully energy-efficient neuromorphic irrigation control system that operates autonomously without any need for data transmission or remote processing. Leveraging the properties of a biologically realistic spiking neural network, our system performs computation and decision-making locally. We validate this approach using real-world soil moisture data from apple and kiwi orchards applied to a mixed-signal neuromorphic processor, and show that the generated irrigation commands closely match those derived from conventional methods across different soil depths. Our results show that local neuromorphic inference can maintain decision accuracy, paving the way for autonomous, sustainable irrigation solutions at scale.

Spiking Neural Network Decoders of Finger Forces from High-Density Intramuscular Microelectrode Arrays

Sep 04, 2025

Abstract: Restoring naturalistic finger control in assistive technologies requires the continuous decoding of motor intent with high accuracy, efficiency, and robustness. Here, we present a spike-based decoding framework that integrates spiking neural networks (SNNs) with motor unit activity extracted from high-density intramuscular microelectrode arrays. We demonstrate simultaneous and proportional decoding of individual finger forces from motor unit spike trains during isometric contractions at 15% of maximum voluntary contraction using SNNs. We systematically evaluated alternative SNN decoder configurations and compared two possible input modalities: physiologically grounded motor unit spike trains and spike-encoded intramuscular EMG signals. Through this comparison, we quantified trade-offs between decoding accuracy, memory footprint, and robustness to input errors. The results showed that shallow SNNs can reliably decode finger-level motor intent with competitive accuracy and minimal latency, while operating with reduced memory requirements and without the need for external preprocessing buffers. This work provides a practical blueprint for integrating SNNs into finger-level force decoding systems, demonstrating how the choice of input representation can be strategically tailored to meet application-specific requirements for accuracy, robustness, and memory efficiency.

Waves and symbols in neuromorphic hardware: from analog signal processing to digital computing on the same computational substrate

Feb 27, 2025

Abstract: Neural systems use the same underlying computational substrate to carry out analog filtering and signal processing operations, as well as discrete symbol manipulation and digital computation. Inspired by the computational principles of canonical cortical microcircuits, we propose a framework for using recurrent spiking neural networks to seamlessly and robustly switch between analog signal processing and categorical and discrete computation. We provide theoretical analysis and practical neural network design tools to formally determine the conditions for inducing this switch. We demonstrate the robustness of this framework experimentally with hardware soft Winner-Take-All and mixed-feedback recurrent spiking neural networks, implemented by appropriately configuring the analog neuron and synapse circuits of a mixed-signal neuromorphic processor chip.

An Efficient Multicast Addressing Encoding Scheme for Multi-Core Neuromorphic Processors

Nov 18, 2024

Abstract: Multi-core neuromorphic processors are becoming increasingly significant due to their energy-efficient local computing and scalable modular architecture, particularly for event-based processing applications. However, minimizing the cost of inter-core communication, which accounts for the majority of energy usage, remains a challenging issue. Beyond optimizing circuit design at lower abstraction levels, an efficient multicast addressing scheme is crucial. We propose a hierarchical bit string encoding scheme that greatly expands the addressing capability of state-of-the-art symbol-based schemes for the same number of routing bits. When put to work on a real neuromorphic task, this hierarchical bit string encoding reduces area cost by approximately 29% and energy consumption by about 50%.

Genetic Motifs as a Blueprint for Mismatch-Tolerant Neuromorphic Computing

Oct 25, 2024

Abstract: Mixed-signal implementations of SNNs offer a promising solution to edge computing applications that require low-power and compact embedded processing systems. However, device mismatch in the analog circuits of these neuromorphic processors poses a significant challenge to the deployment of robust processing in these systems. Here we introduce a novel architectural solution inspired by biological development to address this issue. Specifically, we propose to implement architectures that incorporate network motifs found in developed brains through a differentiable re-parameterization of weight matrices based on gene expression patterns and genetic rules. Because the proposed method is compatible with gradient descent optimization, we can apply the robustness of biological neural development to neuromorphic computing. To validate this approach, we benchmark it using the Yin-Yang classification dataset and compare its performance with that of standard multilayer perceptrons trained with a state-of-the-art hardware-aware training method. Our results demonstrate that the proposed method mitigates mismatch-induced noise without requiring precise device mismatch measurements, effectively outperforming alternative hardware-aware techniques proposed in the literature, and providing a more general solution for improving the robustness of SNNs in neuromorphic hardware.

TEXEL: A neuromorphic processor with on-chip learning for beyond-CMOS device integration

Oct 21, 2024

Abstract: Recent advances in memory technologies, devices and materials have shown great potential for integration into neuromorphic electronic systems. However, a significant gap remains between the development of these materials and the realization of large-scale, fully functional systems. One key challenge is determining which devices and materials are best suited for specific functions and how they can be paired with CMOS circuitry. To address this, we introduce TEXEL, a mixed-signal neuromorphic architecture designed to explore the integration of on-chip learning circuits and novel two- and three-terminal devices. TEXEL serves as an accessible platform to bridge the gap between CMOS-based neuromorphic computation and the latest advancements in emerging devices. In this paper, we demonstrate the readiness of TEXEL for device integration through comprehensive chip measurements and simulations. TEXEL provides a practical system for testing bio-inspired learning algorithms alongside emerging devices, establishing a tangible link between brain-inspired computation and cutting-edge device research.

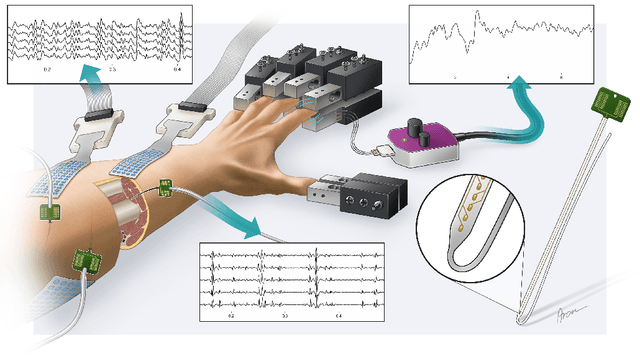

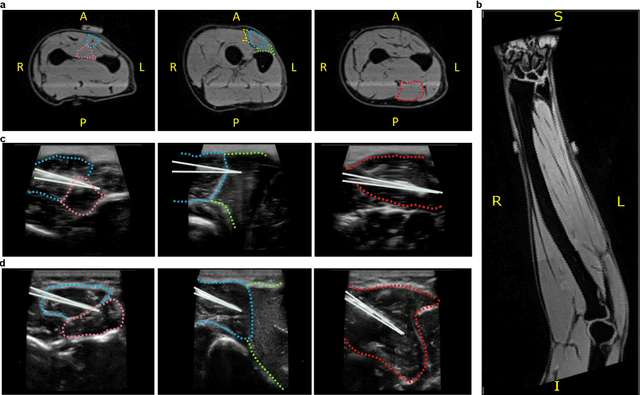

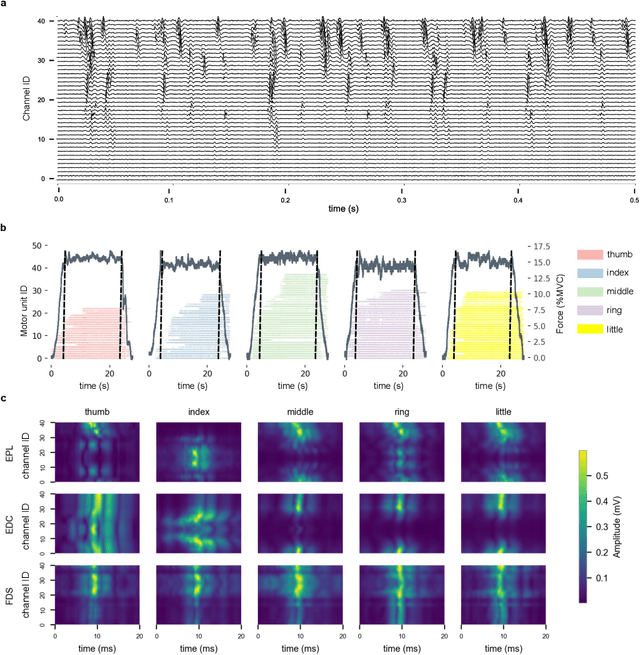

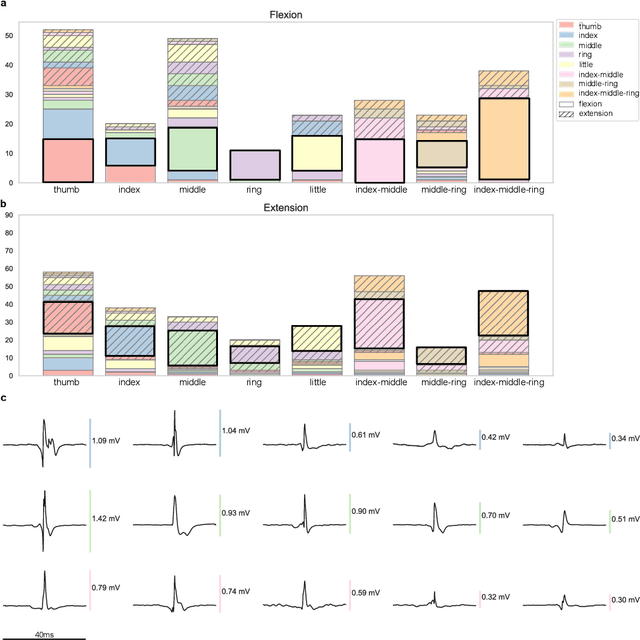

Intramuscular High-Density Micro-Electrode Arrays Enable High-Precision Decoding and Mapping of Spinal Motor Neurons to Reveal Hand Control

Oct 14, 2024

Abstract: Decoding nervous system activity is a key challenge in neuroscience and neural interfacing. In this study, we propose a novel neural decoding system that enables unprecedented large-scale sampling of muscle activity. Using micro-electrode arrays with more than 100 channels embedded within the forearm muscles, we recorded high-density signals that captured multi-unit motor neuron activity. This extensive sampling was complemented by advanced methods for neural decomposition, analysis, and classification, allowing us to accurately detect and interpret the spiking activity of spinal motor neurons that innervate hand muscles. We evaluated this system in two healthy participants, each implanted with three electromyogram (EMG) micro-electrode arrays (comprising 40 electrodes each) in the forearm. These arrays recorded muscle activity during both single- and multi-digit isometric contractions. For the first time under controlled conditions, we demonstrate that multi-digit tasks elicit unique patterns of motor neuron recruitment specific to each task, rather than employing combinations of recruitment patterns from single-digit tasks. This observation led us to hypothesize that hand tasks could be classified with high precision based on the decoded neural activity. We achieved perfect classification accuracy (100%) across 12 distinct single- and multi-digit tasks, and consistently high accuracy (>96%) across all conditions and subjects, for up to 16 task classes. These results significantly outperformed conventional EMG classification methods. The exceptional performance of this system paves the way for developing advanced neural interfaces based on invasive high-density EMG technology. This innovation could greatly enhance human-computer interaction and lead to substantial improvements in assistive technologies, offering new possibilities for restoring motor function in clinical applications.
