Elisabetta Chicca

An Asynchronous Mixed-Signal Resonate-and-Fire Neuron

Dec 08, 2025

Abstract:Analog computing at the edge is an emerging strategy to limit data storage and transmission requirements, as well as energy consumption, and its practical implementation is in its initial stages of development. Translating properties of biological neurons into hardware offers a pathway towards low-power, real-time edge processing. Specifically, resonator neurons offer selectivity to specific frequencies as a potential solution for temporal signal processing. Here, we show a fabricated Complementary Metal-Oxide-Semiconductor (CMOS) mixed-signal Resonate-and-Fire (R&F) neuron circuit implementation that emulates the behavior of these neural cells responsible for controlling oscillations within the central nervous system. We integrate the design with asynchronous handshake capabilities, perform comprehensive variability analyses, and characterize its frequency detection functionality. Our results demonstrate the feasibility of large-scale integration within neuromorphic systems, thereby advancing the exploitation of bio-inspired circuits for efficient edge temporal signal processing.
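The frequency selectivity mentioned in the abstract can be illustrated with the textbook resonate-and-fire model, a damped complex oscillator that spikes when its imaginary part crosses a threshold. The sketch below is only that abstract model, not the CMOS circuit from the paper; the function name `resonate_and_fire` and every parameter value (eigenfrequency, damping, pulse amplitude, threshold) are assumptions chosen for illustration.

```python
import numpy as np

def resonate_and_fire(pulse_period, f0=10.0, b=-2.0, threshold=1.0,
                      pulse_amp=0.45, n_pulses=5, dt=1e-4, t_end=1.0):
    """Count spikes of a resonate-and-fire neuron driven by a pulse train.

    State z follows dz/dt = (b + i*omega) * z between input pulses.
    All parameter values are illustrative assumptions.
    """
    omega = 2.0 * np.pi * f0
    # Exact one-step propagator of the damped oscillation.
    decay = np.exp((b + 1j * omega) * dt)
    period_steps = int(round(pulse_period / dt))
    z = 0j
    spikes = 0
    for k in range(int(round(t_end / dt))):
        z *= decay
        # Each input pulse kicks the real part of the state.
        if k % period_steps == 0 and k // period_steps < n_pulses:
            z += pulse_amp
        # Spike and reset when the imaginary part reaches threshold.
        if z.imag >= threshold:
            spikes += 1
            z = 0j
    return spikes

# Pulses at the eigenperiod (0.1 s) add up in phase; pulses at half
# that period arrive in antiphase and largely cancel each other.
print("resonant input:", resonate_and_fire(0.1))
print("off-resonant input:", resonate_and_fire(0.05))
```

With these assumed values a single pulse is subthreshold, so the neuron responds only when successive pulses arrive in phase with its own oscillation.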

Learning in Spiking Neural Networks with a Calcium-based Hebbian Rule for Spike-timing-dependent Plasticity

Apr 09, 2025

Abstract:Understanding how biological neural networks are shaped via local plasticity mechanisms can lead to energy-efficient and self-adaptive information processing systems, which promise to mitigate some of the current roadblocks in edge computing systems. While biology uses spikes to seamlessly exploit both spike timing and mean firing rate to modulate synaptic strength, most models focus on one of the two. In this work, we present a Hebbian local learning rule that models synaptic modification as a function of calcium traces tracking neuronal activity. We show how the rule reproduces results from spike time and spike rate protocols from neuroscientific studies. Moreover, we use the model to train spiking neural networks on MNIST digit recognition to show and explain what sort of mechanisms are needed to learn real-world patterns. We show how our model is sensitive to correlated spiking activity and how this enables it to modulate the learning rate of the network without altering the mean firing rate of the neurons or the hyperparameters of the learning rule. To the best of our knowledge, this is the first work that showcases how spike timing and rate can be complementary in their role of shaping the connectivity of spiking neural networks.

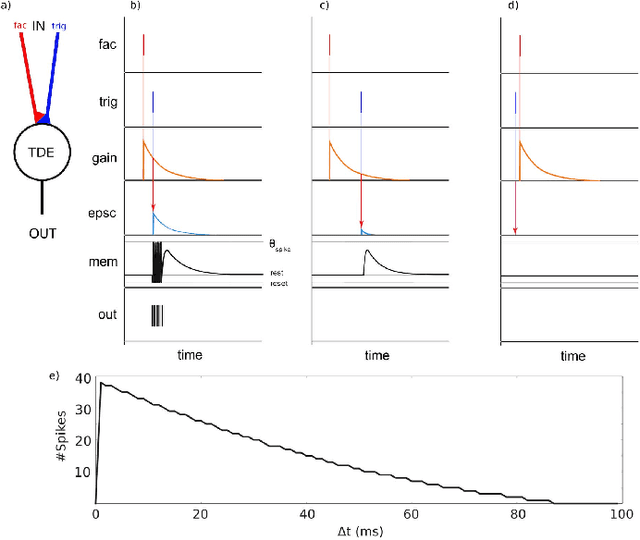

Towards efficient keyword spotting using spike-based time difference encoders

Mar 19, 2025

Abstract:Keyword spotting in edge devices is becoming increasingly important as voice-activated assistants are widely used. However, its deployment is often limited by the extreme low-power constraints of the target embedded systems. Here, we explore the Temporal Difference Encoder (TDE) performance in keyword spotting. This recent neuron model encodes the time difference in instantaneous frequency and spike count to perform efficient keyword spotting with neuromorphic processors. We use the TIdigits dataset of spoken digits with a formant decomposition and rate-based encoding into spikes. We compare three Spiking Neural Network (SNN) architectures to learn and classify spatio-temporal signals. The proposed SNN architectures are made of three layers and differ in their hidden layer, which is composed of either (1) feedforward TDE, (2) feedforward Current-Based Leaky Integrate-and-Fire (CuBa-LIF), or (3) recurrent CuBa-LIF neurons. We first show that the spike trains of the frequency-converted spoken digits have a large amount of information in the temporal domain, reinforcing the importance of better exploiting temporal encoding for such a task. We then train the three SNNs with the same number of synaptic weights to quantify and compare their performance based on the accuracy and synaptic operations. The resulting accuracy of the feedforward TDE network (89%) is higher than that of the feedforward CuBa-LIF network (71%) and close to that of the recurrent CuBa-LIF network (91%). However, the feedforward TDE-based network performs 92% fewer synaptic operations than the recurrent CuBa-LIF network with the same number of synapses. In addition, the results of the TDE network are highly interpretable and correlated with the frequency and timescale features of the spoken keywords in the dataset. Our findings suggest that the TDE is a promising neuron model for scalable event-driven processing of spatio-temporal patterns.

Event-based vision for egomotion estimation using precise event timing

Jan 20, 2025

Abstract:Egomotion estimation is crucial for applications such as autonomous navigation and robotics, where accurate and real-time motion tracking is required. However, traditional methods relying on inertial sensors are highly sensitive to external conditions, and suffer from drifts leading to large inaccuracies over long distances. Vision-based methods, particularly those utilising event-based vision sensors, provide an efficient alternative by capturing data only when changes are perceived in the scene. This approach minimises power consumption while delivering high-speed, low-latency feedback. In this work, we propose a fully event-based pipeline for egomotion estimation that processes the event stream directly within the event-based domain. This method eliminates the need for frame-based intermediaries, allowing for low-latency and energy-efficient motion estimation. We construct a shallow spiking neural network using a synaptic gating mechanism to convert precise event timing into bursts of spikes. These spikes encode local optical flow velocities, and the network provides an event-based readout of egomotion. We evaluate the network's performance on a dedicated chip, demonstrating strong potential for low-latency, low-power motion estimation. Additionally, simulations of larger networks show that the system achieves state-of-the-art accuracy in egomotion estimation tasks with event-based cameras, making it a promising solution for real-time, power-constrained robotics applications.

A scalable event-driven spatiotemporal feature extraction circuit

Jan 17, 2025

Abstract:Event-driven sensors, which produce data only when there is a change in the input signal, are increasingly used in applications that require low-latency and low-power real-time sensing, such as robotics and edge devices. To fully achieve the latency and power advantages on offer, however, similarly event-driven data processing methods are required. A promising solution is the TDE: an event-based processing element which encodes the time difference between events on different channels into an output event stream. In this work, we introduce a novel TDE implementation in CMOS. The circuit is robust to device mismatch and allows the linear integration of input events. This is crucial for enabling a high-density implementation of many TDEs on the same die, and for realising real-time parallel processing of the high-event-rate data produced by event-driven sensors.
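The encoding principle described above, mapping the time difference between events on two channels to an output event stream, can be sketched in software. The following is a simplified behavioural model and not the CMOS circuit from the paper: a "facilitatory" event opens an exponentially decaying gain, a later "trigger" event injects a current scaled by the remaining gain, and a leaky integrate-and-fire stage turns that current into spikes. The function name `tde_spike_count`, the channel naming, the assumption that a trigger arriving before the facilitatory event produces no output, and all parameter values are assumptions made for illustration.

```python
import math

def tde_spike_count(delta_t, tau_fac=20e-3, tau_trig=10e-3, tau_mem=10e-3,
                    weight=400.0, threshold=1.0, dt=1e-4, t_sim=0.2):
    """Spikes emitted for a trigger event arriving delta_t seconds after
    a facilitatory event. All parameter values are illustrative."""
    if delta_t < 0:
        # Assumed behaviour: a trigger before the facilitatory event
        # sees no gain and produces no output.
        return 0
    # Gain left over from the facilitatory event at trigger time.
    gain = math.exp(-delta_t / tau_fac)
    # Trigger event injects a current proportional to that gain.
    current = weight * gain
    v = 0.0
    spikes = 0
    for _ in range(int(round(t_sim / dt))):
        # Leaky integration of the decaying input current (forward Euler).
        v += dt * (-v / tau_mem + current)
        current -= dt * current / tau_trig
        if v >= threshold:
            spikes += 1
            v = 0.0  # reset the membrane after each output event
    return spikes

# Shorter time differences leave more gain and yield more output spikes.
for delta_ms in (1, 10, 50):
    print(delta_ms, "ms ->", tde_spike_count(delta_ms * 1e-3), "spikes")
```

In this sketch the output spike count falls as the time difference grows, which is the property that lets a downstream network read out inter-channel timing from spike counts.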

TEXEL: A neuromorphic processor with on-chip learning for beyond-CMOS device integration

Oct 21, 2024

Abstract:Recent advances in memory technologies, devices and materials have shown great potential for integration into neuromorphic electronic systems. However, a significant gap remains between the development of these materials and the realization of large-scale, fully functional systems. One key challenge is determining which devices and materials are best suited for specific functions and how they can be paired with CMOS circuitry. To address this, we introduce TEXEL, a mixed-signal neuromorphic architecture designed to explore the integration of on-chip learning circuits and novel two- and three-terminal devices. TEXEL serves as an accessible platform to bridge the gap between CMOS-based neuromorphic computation and the latest advancements in emerging devices. In this paper, we demonstrate the readiness of TEXEL for device integration through comprehensive chip measurements and simulations. TEXEL provides a practical system for testing bio-inspired learning algorithms alongside emerging devices, establishing a tangible link between brain-inspired computation and cutting-edge device research.

Distributed Representations Enable Robust Multi-Timescale Computation in Neuromorphic Hardware

May 02, 2024

Abstract:Programming recurrent spiking neural networks (RSNNs) to robustly perform multi-timescale computation remains a difficult challenge. To address this, we show how the distributed approach offered by vector symbolic architectures (VSAs), which uses high-dimensional random vectors as the smallest units of representation, can be leveraged to embed robust multi-timescale dynamics into attractor-based RSNNs. We embed finite state machines into the RSNN dynamics by superimposing a symmetric autoassociative weight matrix and asymmetric transition terms. The transition terms are formed by the VSA binding of an input and heteroassociative outer-products between states. Our approach is validated through simulations with highly non-ideal weights; an experimental closed-loop memristive hardware setup; and on Loihi 2, where it scales seamlessly to large state machines. This work demonstrates the effectiveness of VSA representations for embedding robust computation with recurrent dynamics into neuromorphic hardware, without requiring parameter fine-tuning or significant platform-specific optimisation. This advances VSAs as a high-level representation-invariant abstract language for cognitive algorithms in neuromorphic hardware.

ETLP: Event-based Three-factor Local Plasticity for online learning with neuromorphic hardware

Jan 24, 2023

Abstract:Neuromorphic perception with event-based sensors, asynchronous hardware and spiking neurons is showing promising results for real-time and energy-efficient inference in embedded systems. The next promise of brain-inspired computing is to enable adaptation to changes at the edge with online learning. However, the parallel and distributed architectures of neuromorphic hardware based on co-localized compute and memory impose locality constraints on the on-chip learning rules. In this work, we propose the Event-based Three-factor Local Plasticity (ETLP) rule that uses (1) the pre-synaptic spike trace, (2) the post-synaptic membrane voltage and (3) a third factor in the form of projected labels with no error calculation, that also serve as update triggers. We apply ETLP with feedforward and recurrent spiking neural networks on visual and auditory event-based pattern recognition, and compare it to Back-Propagation Through Time (BPTT) and eProp. We show a competitive performance in accuracy with a clear advantage in the computational complexity for ETLP. We also show that when using local plasticity, threshold adaptation in spiking neurons and a recurrent topology are necessary to learn spatio-temporal patterns with a rich temporal structure. Finally, we provide a proof-of-concept hardware implementation of ETLP on FPGA to highlight the simplicity of its computational primitives and how they can be mapped into neuromorphic hardware for online learning with low energy consumption and real-time interaction.

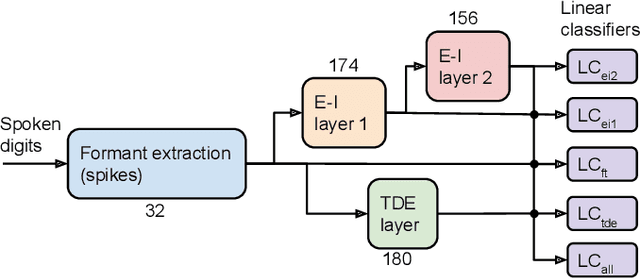

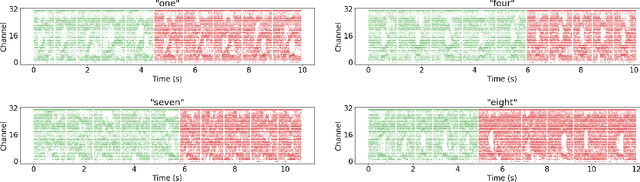

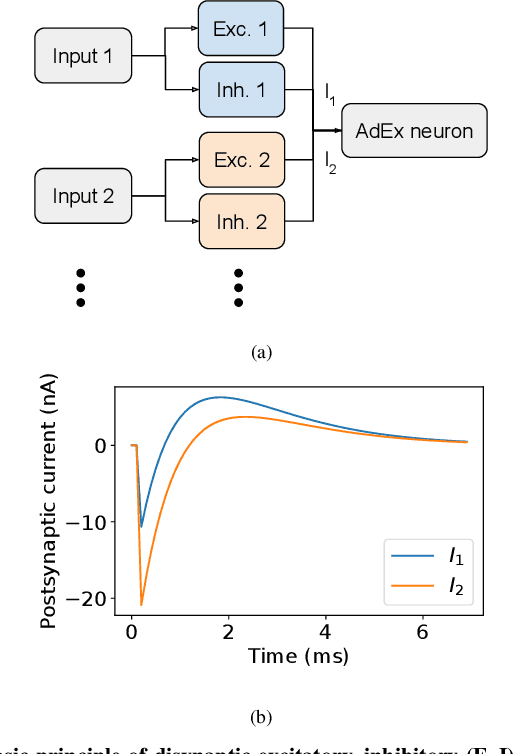

A Comparison of Temporal Encoders for Neuromorphic Keyword Spotting with Few Neurons

Jan 24, 2023

Abstract:With the expansion of AI-powered virtual assistants, there is a need for low-power keyword spotting systems providing a "wake-up" mechanism for subsequent computationally expensive speech recognition. One promising approach is the use of neuromorphic sensors and spiking neural networks (SNNs) implemented in neuromorphic processors for sparse event-driven sensing. However, this requires resource-efficient SNN mechanisms for temporal encoding, which need to consider that these systems process information in a streaming manner, with physical time being an intrinsic property of their operation. In this work, two candidate neurocomputational elements for temporal encoding and feature extraction in SNNs described in recent literature - the spiking time-difference encoder (TDE) and disynaptic excitatory-inhibitory (E-I) elements - are comparatively investigated in a keyword-spotting task on formants computed from spoken digits in the TIDIGITS dataset. While both encoders improve performance over direct classification of the formant features in the training data, enabling a complete binary classification with a logistic regression model, they show no clear improvements on the test set. Resource-efficient keyword spotting applications may benefit from the use of these encoders, but further work on methods for learning the time constants and weights is required to investigate their full potential.

Vector Symbolic Finite State Machines in Attractor Neural Networks

Dec 02, 2022

Abstract:Hopfield attractor networks are robust distributed models of human memory. We propose construction rules such that an attractor network may implement an arbitrary finite state machine (FSM), where states and stimuli are represented by high-dimensional random bipolar vectors, and all state transitions are enacted by the attractor network's dynamics. Numerical simulations show the capacity of the model, in terms of the maximum size of implementable FSM, to be linear in the size of the attractor network. We show that the model is robust to imprecise and noisy weights, and so a prime candidate for implementation with high-density but unreliable devices. By endowing attractor networks with the ability to emulate arbitrary FSMs, we propose a plausible path by which FSMs may exist as a distributed computational primitive in biological neural networks.
