Arianna Rubino

TEXEL: A neuromorphic processor with on-chip learning for beyond-CMOS device integration

Oct 21, 2024

Abstract: Recent advances in memory technologies, devices and materials have shown great potential for integration into neuromorphic electronic systems. However, a significant gap remains between the development of these materials and the realization of large-scale, fully functional systems. One key challenge is determining which devices and materials are best suited for specific functions and how they can be paired with CMOS circuitry. To address this, we introduce TEXEL, a mixed-signal neuromorphic architecture designed to explore the integration of on-chip learning circuits and novel two- and three-terminal devices. TEXEL serves as an accessible platform to bridge the gap between CMOS-based neuromorphic computation and the latest advancements in emerging devices. In this paper, we demonstrate the readiness of TEXEL for device integration through comprehensive chip measurements and simulations. TEXEL provides a practical system for testing bio-inspired learning algorithms alongside emerging devices, establishing a tangible link between brain-inspired computation and cutting-edge device research.

SPAIC: A sub-μW/Channel, 16-Channel General-Purpose Event-Based Analog Front-End with Dual-Mode Encoders

Aug 31, 2023

Abstract: Low-power event-based analog front-ends (AFE) are a crucial component required to build efficient end-to-end neuromorphic processing systems for edge computing. Although several neuromorphic chips have been developed for implementing spiking neural networks (SNNs) and solving a wide range of sensory processing tasks, there are only a few general-purpose analog front-end devices that can be used to convert analog sensory signals into spikes and be interfaced to neuromorphic processors. In this work, we present a novel, highly configurable analog front-end chip, denoted as SPAIC (signal-to-spike converter for analog AI computation), that offers a general-purpose dual-mode analog signal-to-spike encoding with delta modulation and pulse frequency modulation, with tunable frequency bands. The ASIC is designed in a 180 nm process. It supports and encodes a wide variety of signals spanning 4 orders of magnitude in frequency, and provides an event-based output that is compatible with existing neuromorphic processors. We validated the ASIC for its functions and present initial silicon measurement results characterizing the basic building blocks of the chip.
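
For readers unfamiliar with delta-modulation signal-to-spike encoding, the general idea can be illustrated with a short software sketch. This is only a discrete-time approximation under simple assumptions and is not the SPAIC circuit, which is analog and event-driven; the function names `delta_encode` and `delta_decode` and the `threshold` parameter are hypothetical and do not come from the paper.

```python
def delta_encode(samples, threshold):
    """Encode a sampled signal as (sample_index, polarity) events.

    Assumption: an UP event (+1) is emitted each time the signal rises
    `threshold` above an internal reference, and a DN event (-1) each time
    it falls `threshold` below it; the reference then moves by one step.
    """
    if threshold <= 0:
        raise ValueError("threshold must be positive")
    events = []
    if not samples:
        return events
    ref = samples[0]  # reference starts at the first sample
    for i, x in enumerate(samples[1:], start=1):
        while x - ref >= threshold:   # signal moved up by at least one step
            ref += threshold
            events.append((i, +1))
        while ref - x >= threshold:   # signal moved down by at least one step
            ref -= threshold
            events.append((i, -1))
    return events


def delta_decode(events, n_samples, start, threshold):
    """Rebuild a staircase approximation of the signal from the events."""
    steps = [0] * n_samples
    for i, polarity in events:
        steps[i] += polarity
    out, level = [], start
    for s in steps:
        level += s * threshold
        out.append(level)
    return out


if __name__ == "__main__":
    import math

    signal = [math.sin(2 * math.pi * k / 50) for k in range(200)]
    spikes = delta_encode(signal, threshold=0.1)
    approx = delta_decode(spikes, len(signal), signal[0], threshold=0.1)
    print(len(spikes), max(abs(a - b) for a, b in zip(signal, approx)))
```

In this sketch the event rate tracks how quickly the input changes, and the staircase reconstruction stays within one threshold step of the original samples.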

Neuromorphic analog circuits for robust on-chip always-on learning in spiking neural networks

Jul 12, 2023

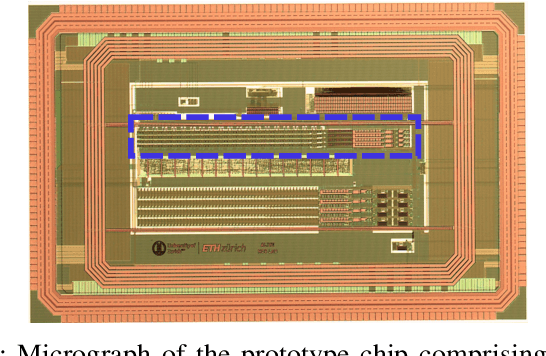

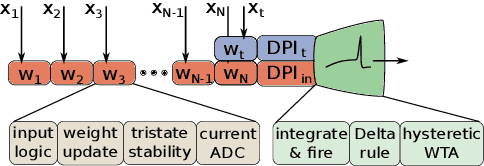

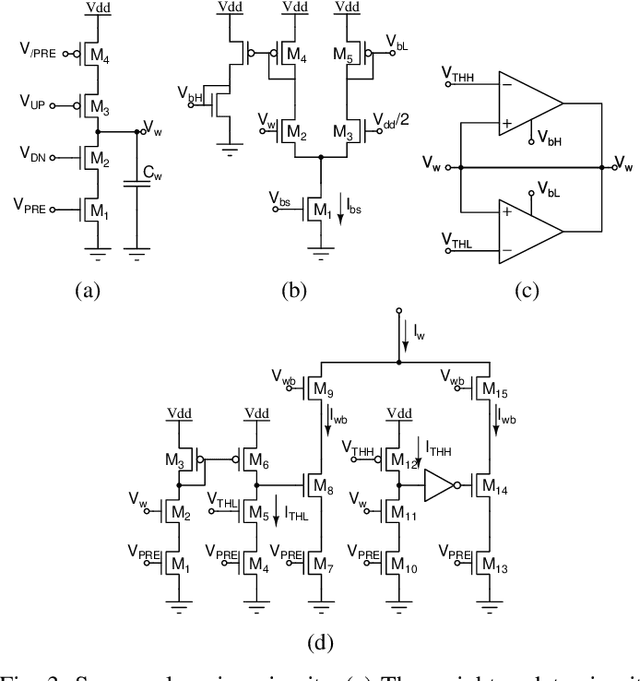

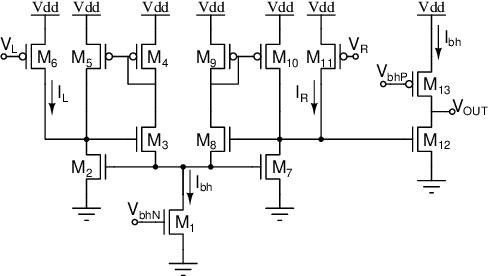

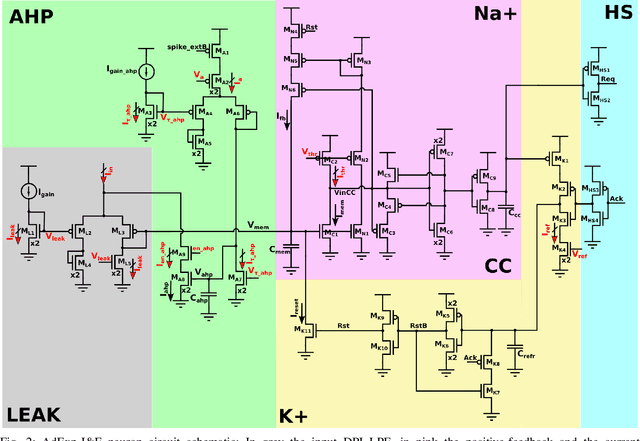

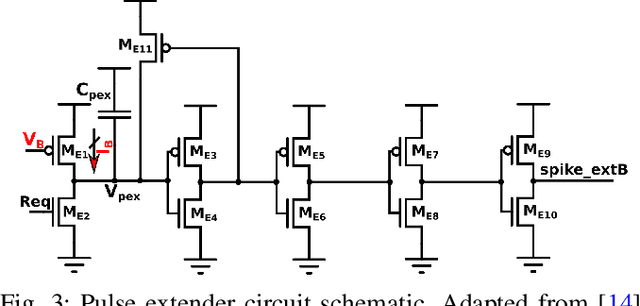

Abstract: Mixed-signal neuromorphic systems represent a promising solution for solving extreme-edge computing tasks without relying on external computing resources. Their spiking neural network circuits are optimized for processing sensory data on-line in continuous-time. However, their low precision and high variability can severely limit their performance. To address this issue and improve their robustness to inhomogeneities and noise in both their internal state variables and external input signals, we designed on-chip learning circuits with short-term analog dynamics and long-term tristate discretization mechanisms. An additional hysteretic stop-learning mechanism is included to improve stability and automatically disable weight updates when necessary, to enable continuous always-on learning. We designed a spiking neural network with these learning circuits in a prototype chip using a 180 nm CMOS technology. Simulation and silicon measurement results from the prototype chip are presented. These circuits enable the construction of large-scale spiking neural networks with online learning capabilities for real-world edge computing tasks.

Spike-based local synaptic plasticity: A survey of computational models and neuromorphic circuits

Sep 30, 2022

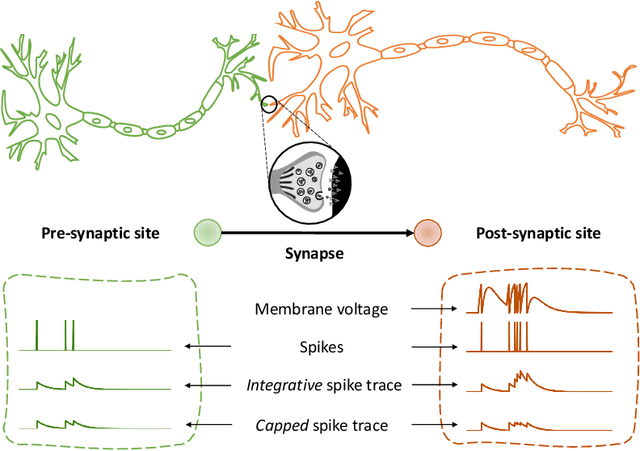

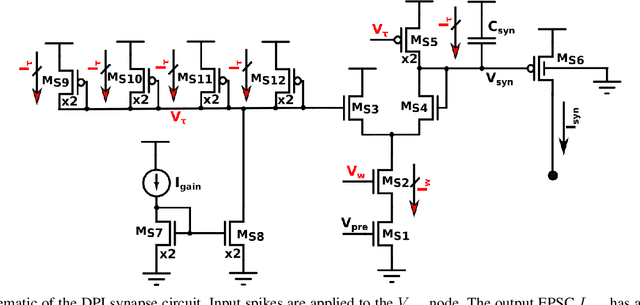

Abstract: Understanding how biological neural networks carry out learning using spike-based local plasticity mechanisms can lead to the development of powerful, energy-efficient, and adaptive neuromorphic processing systems. A large number of spike-based learning models have recently been proposed following different approaches. However, it is difficult to assess if and how they could be mapped onto neuromorphic hardware, and to compare their features and ease of implementation. To this end, in this survey, we provide a comprehensive overview of representative brain-inspired synaptic plasticity models and mixed-signal CMOS neuromorphic circuits within a unified framework. We review historical, bottom-up, and top-down approaches to modeling synaptic plasticity, and we identify computational primitives that can support low-latency and low-power hardware implementations of spike-based learning rules. We provide a common definition of a locality principle based on pre- and post-synaptic neuron information, which we propose as a fundamental requirement for physical implementations of synaptic plasticity. Based on this principle, we compare the properties of these models within the same framework, and describe the mixed-signal electronic circuits that implement their computing primitives, pointing out how these building blocks enable efficient on-chip and online learning in neuromorphic processing systems.

Ultra-Low-Power FDSOI Neural Circuits for Extreme-Edge Neuromorphic Intelligence

Jul 14, 2020

Abstract: Recent years have seen an increasing interest in the development of artificial intelligence circuits and systems for edge computing applications. In-memory computing mixed-signal neuromorphic architectures provide promising ultra-low-power solutions for edge-computing sensory-processing applications, thanks to their ability to emulate spiking neural networks in real-time. The fine-grain parallelism offered by this approach allows such neural circuits to process the sensory data efficiently by adapting their dynamics to the ones of the sensed signals, without having to resort to the time-multiplexed computing paradigm of von Neumann architectures. To reduce power consumption even further, we present a set of mixed-signal analog/digital circuits that exploit the features of advanced Fully-Depleted Silicon on Insulator (FDSOI) integration processes. Specifically, we explore the options of advanced FDSOI technologies to address analog design issues and optimize the design of the synapse integrator and of the adaptive neuron circuits accordingly. We present circuit simulation results and demonstrate the circuit's ability to produce biologically plausible neural dynamics with compact designs, optimized for the realization of large-scale spiking neural networks in neuromorphic processors.
