Ning Qiao

SynSense AG, Switzerland, SynSense, PR China

AnchorDP3: 3D Affordance Guided Sparse Diffusion Policy for Robotic Manipulation

Jun 24, 2025

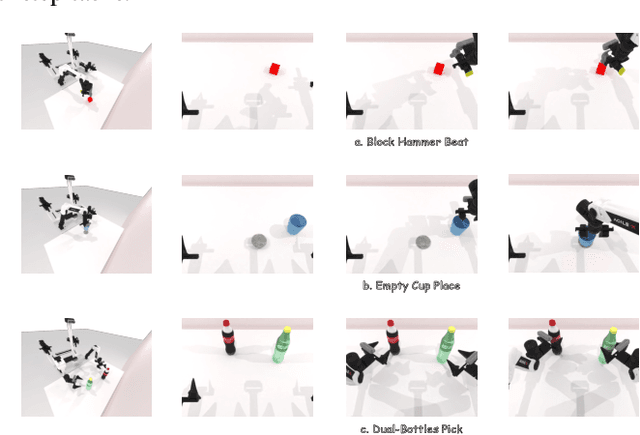

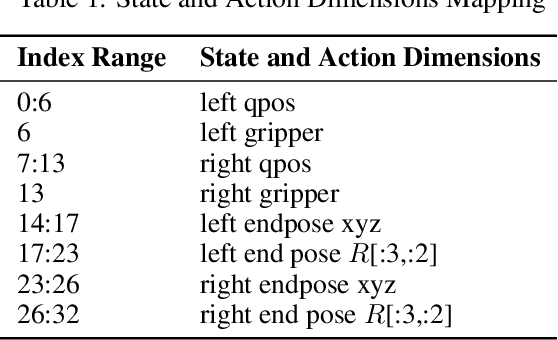

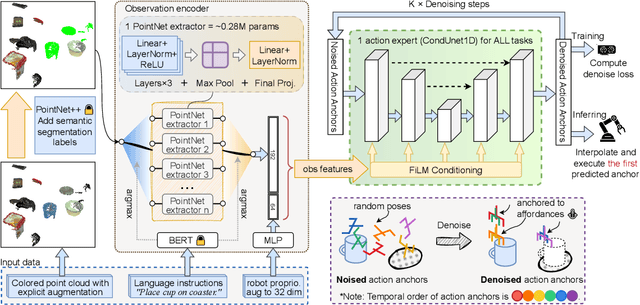

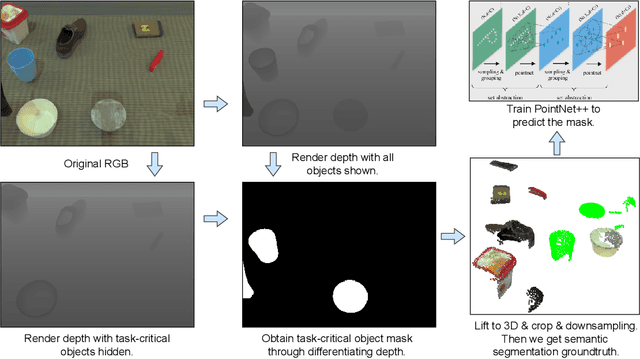

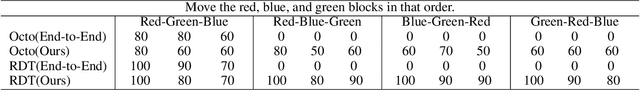

Abstract: We present AnchorDP3, a diffusion policy framework for dual-arm robotic manipulation that achieves state-of-the-art performance in highly randomized environments. AnchorDP3 integrates three key innovations: (1) Simulator-Supervised Semantic Segmentation, using rendered ground truth to explicitly segment task-critical objects within the point cloud, which provides strong affordance priors; (2) Task-Conditioned Feature Encoders, lightweight modules processing augmented point clouds per task, enabling efficient multi-task learning through a shared diffusion-based action expert; (3) Affordance-Anchored Keypose Diffusion with Full State Supervision, replacing dense trajectory prediction with sparse, geometrically meaningful action anchors, i.e., keyposes (such as the pre-grasp pose and grasp pose) directly anchored to affordances, drastically simplifying the prediction space; the action expert is forced to predict both robot joint angles and end-effector poses simultaneously, which exploits geometric consistency to accelerate convergence and boost accuracy. Trained on large-scale, procedurally generated simulation data, AnchorDP3 achieves a 98.7% average success rate in the RoboTwin benchmark across diverse tasks under extreme randomization of objects, clutter, table height, lighting, and backgrounds. This framework, when integrated with the RoboTwin real-to-sim pipeline, has the potential to enable fully autonomous generation of deployable visuomotor policies from only a scene and an instruction, eliminating human demonstrations from learning manipulation skills.

An Atomic Skill Library Construction Method for Data-Efficient Embodied Manipulation

Jan 25, 2025

Abstract: Embodied manipulation is a fundamental ability in the realm of embodied artificial intelligence. Although current embodied manipulation models show some generalization in specific settings, they struggle in new environments and tasks due to the complexity and diversity of real-world scenarios. The traditional end-to-end approach to data collection and training leads to significant data demands, which we call "data explosion". To address the issue, we introduce a three-wheel data-driven method to build an atomic skill library. We divide tasks into subtasks using Vision-Language Planning (VLP). Then, atomic skill definitions are formed by abstracting the subtasks. Finally, an atomic skill library is constructed via data collection and Vision-Language-Action (VLA) fine-tuning. As the atomic skill library expands dynamically with the three-wheel update strategy, the range of tasks it can cover grows naturally. In this way, our method shifts focus from end-to-end tasks to atomic skills, significantly reducing data costs while maintaining high performance and enabling efficient adaptation to new tasks. Extensive experiments in real-world settings demonstrate the effectiveness and efficiency of our approach.

Efficient 3D Recognition with Event-driven Spike Sparse Convolution

Dec 10, 2024

Abstract: Spiking Neural Networks (SNNs) provide an energy-efficient way to extract 3D spatio-temporal features. Point clouds are sparse 3D spatial data, which suggests that SNNs should be well-suited for processing them. However, when applied to point clouds, SNNs often exhibit limited performance and support few application scenarios. We attribute this to inappropriate preprocessing and feature extraction methods. To address this issue, we first introduce the Spike Voxel Coding (SVC) scheme, which encodes the 3D point clouds into a sparse spike train space, reducing the storage requirements and saving time on point cloud preprocessing. Then, we propose a Spike Sparse Convolution (SSC) model for efficiently extracting 3D sparse point cloud features. Combining SVC and SSC, we design an efficient 3D SNN backbone (E-3DSNN), which is friendly to neuromorphic hardware. For instance, SSC can be implemented on neuromorphic chips with only minor modifications to the addressing function of vanilla spike convolution. Experiments on ModelNet40, KITTI, and Semantic KITTI datasets demonstrate that E-3DSNN achieves state-of-the-art (SOTA) results with remarkable efficiency. Notably, our E-3DSNN (1.87M) obtained 91.7% top-1 accuracy on ModelNet40, surpassing the current best SNN baselines (14.3M) by 3.0%. To the best of our knowledge, it is the first directly trained 3D SNN backbone that can simultaneously handle various 3D computer vision tasks (e.g., classification, detection, and segmentation) with an event-driven nature. Code is available at https://github.com/bollossom/E-3DSNN/.

Real-time Sub-milliwatt Epilepsy Detection Implemented on a Spiking Neural Network Edge Inference Processor

Oct 22, 2024

Abstract: Analyzing electroencephalogram (EEG) signals to detect the epileptic seizure status of a subject presents a challenge to existing technologies aimed at providing timely and efficient diagnosis. In this study, we aimed to detect interictal and ictal periods of epileptic seizures using a spiking neural network (SNN). Our proposed approach provides an online and real-time preliminary diagnosis of epileptic seizures and helps to detect possible pathological conditions. To validate our approach, we conducted experiments using multiple datasets. We utilized a trained SNN to identify the presence of epileptic seizures and compared our results with those of related studies. The SNN model was deployed on Xylo, a digital SNN neuromorphic processor designed to process temporal signals. Xylo efficiently simulates spiking leaky integrate-and-fire neurons with exponential input synapses. Xylo has much lower energy requirements than traditional approaches to signal processing, making it an ideal platform for developing low-power seizure detection systems. Our proposed method achieves high test accuracies of 93.3% and 92.9% when classifying ictal and interictal periods. At the same time, the application has an average power consumption of 87.4 µW (IO power) + 287.9 µW (computational power) when deployed to Xylo. Our method demonstrates excellent low-latency performance when tested on multiple datasets. Our work provides a new solution for seizure detection, and it is expected to be widely used in portable and wearable devices in the future.
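The neuron model named in this abstract, a leaky integrate-and-fire (LIF) neuron with an exponential input synapse, can be illustrated with a minimal discrete-time sketch. This is a generic textbook-style formulation, not Xylo's actual implementation: the function name `simulate_lif`, the time constants, the weight, the threshold, and the reset-by-subtraction rule are all assumptions chosen for illustration.

```python
import math


def simulate_lif(input_spikes, dt=1e-3, tau_syn=5e-3, tau_mem=20e-3,
                 weight=1.0, threshold=1.0):
    """Simulate one LIF neuron driven through an exponential input synapse.

    All parameter values are illustrative assumptions, not Xylo settings.
    Returns a list with one output spike (0 or 1) per input time step.
    """
    # Per-step exponential decay factors for the two state variables.
    alpha_syn = math.exp(-dt / tau_syn)
    alpha_mem = math.exp(-dt / tau_mem)

    i_syn = 0.0  # synaptic current
    v_mem = 0.0  # membrane potential
    output = []
    for spike in input_spikes:
        # Synaptic current decays, then jumps by `weight` on an input spike.
        i_syn = alpha_syn * i_syn + weight * spike
        # Membrane potential leaks, then integrates the synaptic current.
        v_mem = alpha_mem * v_mem + i_syn
        if v_mem >= threshold:
            output.append(1)
            v_mem -= threshold  # assumed reset rule: subtract the threshold
        else:
            output.append(0)
    return output


if __name__ == "__main__":
    # A short burst of input spikes followed by silence.
    spikes_in = [1, 1, 1, 0, 0, 0, 0, 0]
    print(simulate_lif(spikes_in, weight=0.3))
```

For reference, the two power figures quoted in the abstract sum to 375.3 µW, which is consistent with the "sub-milliwatt" wording in the title.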

High-Performance Temporal Reversible Spiking Neural Networks with $O(L)$ Training Memory and $O(1)$ Inference Cost

May 26, 2024

Abstract: Multi-timestep simulation of brain-inspired Spiking Neural Networks (SNNs) boosts memory requirements during training and increases inference energy cost. Current training methods cannot simultaneously solve both training and inference dilemmas. This work proposes a novel Temporal Reversible architecture for SNNs (T-RevSNN) to jointly address the training and inference challenges by altering the forward propagation of SNNs. We turn off the temporal dynamics of most spiking neurons and design multi-level temporal reversible interactions at temporal turn-on spiking neurons, resulting in an $O(L)$ training memory. Combined with the temporal reversible nature, we redesign the input encoding and network organization of SNNs to achieve $O(1)$ inference energy cost. Then, we finely adjust the internal units and residual connections of the basic SNN block to ensure the effectiveness of sparse temporal information interaction. T-RevSNN achieves excellent accuracy on ImageNet, while the memory efficiency, training time acceleration, and inference energy efficiency are improved by $8.6 \times$, $2.0 \times$, and $1.6 \times$, respectively. This work is expected to break the technical bottleneck of significantly increasing memory cost and training time for large-scale SNNs while maintaining high performance and low inference energy cost. Source code and models are available at: https://github.com/BICLab/T-RevSNN.

Vector-Symbolic Architecture for Event-Based Optical Flow

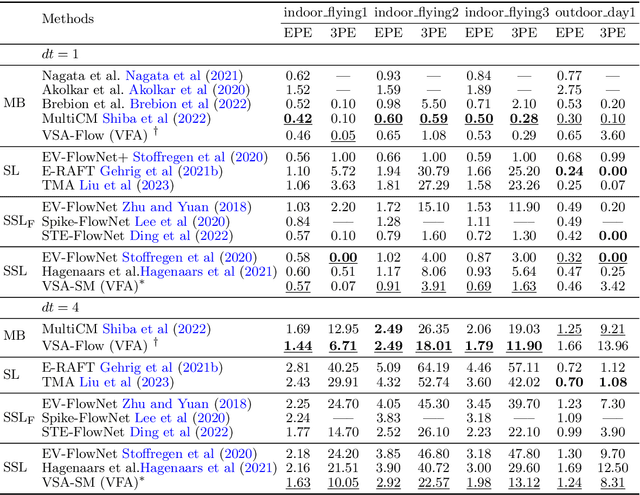

May 15, 2024

Abstract: From the perspective of feature matching, optical flow estimation for event cameras involves identifying event correspondences by comparing feature similarity across accompanying event frames. In this work, we introduce an effective and robust high-dimensional (HD) feature descriptor for event frames, utilizing Vector Symbolic Architectures (VSA). The topological similarity among neighboring variables within VSA contributes to the enhanced representation similarity of feature descriptors for flow-matching points, while its structured symbolic representation capacity facilitates feature fusion from both event polarities and multiple spatial scales. Based on this HD feature descriptor, we propose a novel feature matching framework for event-based optical flow, encompassing both model-based (VSA-Flow) and self-supervised learning (VSA-SM) methods. In VSA-Flow, accurate optical flow estimation validates the effectiveness of HD feature descriptors. In VSA-SM, a novel similarity maximization method based on the HD feature descriptor is proposed to learn optical flow in a self-supervised way from events alone, eliminating the need for auxiliary grayscale images. Evaluation results demonstrate that our VSA-based method achieves superior accuracy in comparison to both model-based and self-supervised learning methods on the DSEC benchmark, while remaining competitive among both methods on the MVSEC benchmark. This contribution marks a significant advancement in event-based optical flow within the feature matching methodology.

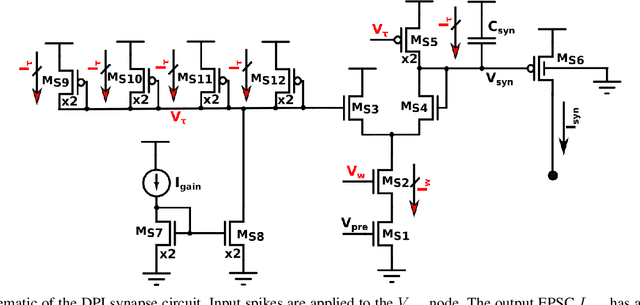

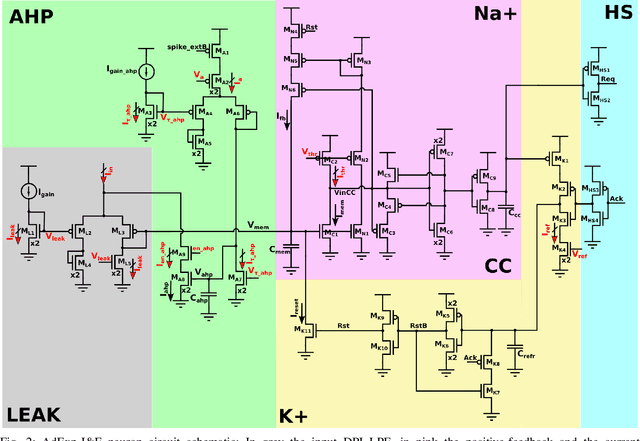

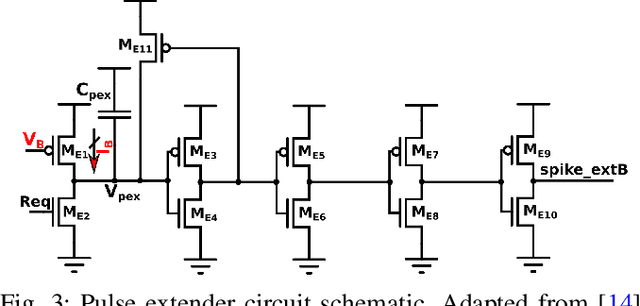

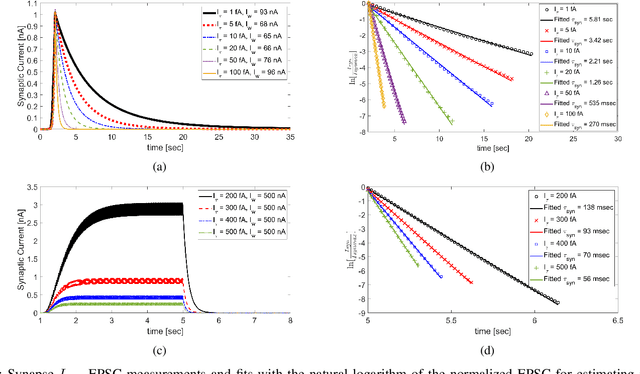

DYNAP-SE2: a scalable multi-core dynamic neuromorphic asynchronous spiking neural network processor

Oct 01, 2023

Abstract: With the remarkable progress that technology has made, the need for processing data near the sensors at the edge has increased dramatically. The electronic systems used in these applications must process data continuously, in real-time, and extract relevant information using the smallest possible energy budgets. A promising approach for implementing always-on processing of sensory signals that supports on-demand, sparse, and edge-computing is to take inspiration from biological nervous systems. Following this approach, we present a brain-inspired platform for prototyping real-time event-based Spiking Neural Networks (SNNs). The proposed system supports the direct emulation of dynamic and realistic neural processing phenomena such as short-term plasticity, NMDA gating, AMPA diffusion, homeostasis, spike frequency adaptation, conductance-based dendritic compartments and spike transmission delays. The analog circuits that implement such primitives are paired with low-latency asynchronous digital circuits for routing and mapping events. This asynchronous infrastructure enables the definition of different network architectures, and provides direct event-based interfaces to convert and encode data from event-based and continuous-signal sensors. Here we describe the overall system architecture, characterize the mixed-signal analog-digital circuits that emulate neural dynamics, demonstrate their features with experimental measurements, and present a low- and high-level software ecosystem that can be used for configuring the system. The flexibility to emulate different biologically plausible neural networks, and the chip's ability to monitor both population and single neuron signals in real-time, make it possible to develop and validate complex models of neural processing for both basic research and edge-computing applications.

"Seeing" Electric Network Frequency from Events

May 04, 2023

Abstract: Most artificial lights fluctuate in response to the grid's alternating current and exhibit subtle variations in terms of both intensity and spectrum, providing the potential to estimate the Electric Network Frequency (ENF) from conventional frame-based videos. Nevertheless, the performance of Video-based ENF (V-ENF) estimation largely relies on the imaging quality and thus may suffer from significant interference caused by non-ideal sampling, motion, and extreme lighting conditions. In this paper, we show that the ENF can be extracted without the above limitations from a new modality provided by the so-called event camera, a neuromorphic sensor that encodes the light intensity variations and asynchronously emits events with extremely high temporal resolution and high dynamic range. Specifically, we first formulate and validate the physical mechanism for the ENF captured in events, and then propose a simple yet robust Event-based ENF (E-ENF) estimation method through mode filtering and harmonic enhancement. Furthermore, we build an Event-Video ENF Dataset (EV-ENFD) that records both events and videos in diverse scenes. Extensive experiments on EV-ENFD demonstrate that our proposed E-ENF method can extract more accurate ENF traces, outperforming the conventional V-ENF by a large margin, especially in challenging environments with object motions and extreme lighting conditions. The code and dataset are available at https://xlx-creater.github.io/E-ENF.

Speck: A Smart event-based Vision Sensor with a low latency 327K Neuron Convolutional Neuronal Network Processing Pipeline

Apr 13, 2023

Abstract: Edge computing solutions that enable the extraction of high-level information from a variety of sensors are in increasingly high demand. This is due to the increasing number of smart devices that require sensory processing for their application on the edge. To tackle this problem, we present a smart vision sensor System on Chip (SoC), featuring an event-based camera and a low-power asynchronous spiking Convolutional Neuronal Network (sCNN) computing architecture embedded on a single chip. By combining both sensor and processing on a single die, we can lower unit production costs significantly. Moreover, the simple end-to-end nature of the SoC facilitates small stand-alone applications as well as functioning as an edge node in larger systems. The event-driven nature of the vision sensor delivers high-speed signals in a sparse data stream. This is reflected in the processing pipeline, which focuses on optimising highly sparse computation and minimising latency for 9 sCNN layers to $3.36\mu s$. Overall, this results in an extremely low-latency visual processing pipeline deployed on a small form factor with a low energy budget and sensor cost. We present the asynchronous architecture, the individual blocks, the sCNN processing principle and benchmark against other sCNN capable processors.

Ultra-Low-Power FDSOI Neural Circuits for Extreme-Edge Neuromorphic Intelligence

Jul 14, 2020

Abstract: Recent years have seen an increasing interest in the development of artificial intelligence circuits and systems for edge computing applications. In-memory computing mixed-signal neuromorphic architectures provide promising ultra-low-power solutions for edge-computing sensory-processing applications, thanks to their ability to emulate spiking neural networks in real-time. The fine-grain parallelism offered by this approach allows such neural circuits to process the sensory data efficiently by adapting their dynamics to those of the sensed signals, without having to resort to the time-multiplexed computing paradigm of von Neumann architectures. To reduce power consumption even further, we present a set of mixed-signal analog/digital circuits that exploit the features of advanced Fully-Depleted Silicon on Insulator (FDSOI) integration processes. Specifically, we explore the options of advanced FDSOI technologies to address analog design issues and optimize the design of the synapse integrator and of the adaptive neuron circuits accordingly. We present circuit simulation results and demonstrate the circuit's ability to produce biologically plausible neural dynamics with compact designs, optimized for the realization of large-scale spiking neural networks in neuromorphic processors.
