Dongjiang Li

AnchorDP3: 3D Affordance Guided Sparse Diffusion Policy for Robotic Manipulation

Jun 24, 2025

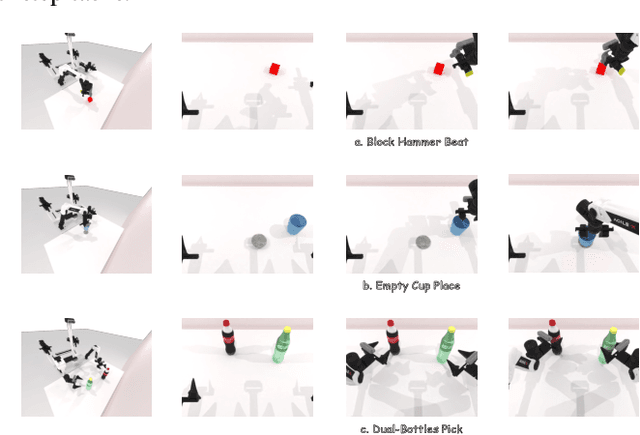

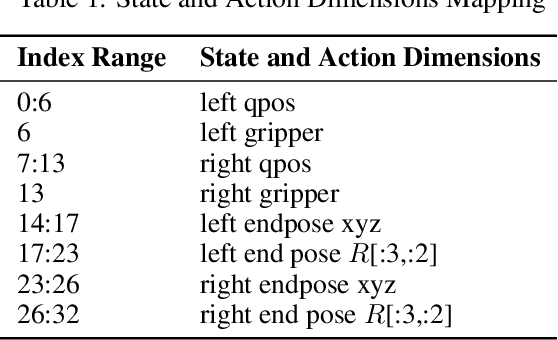

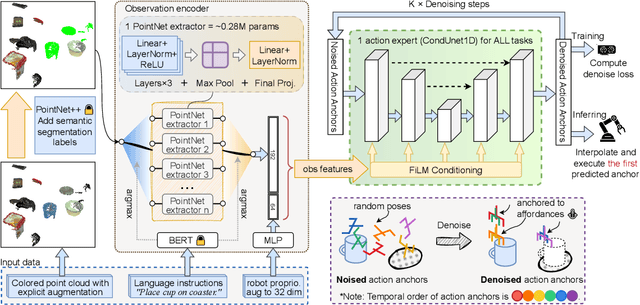

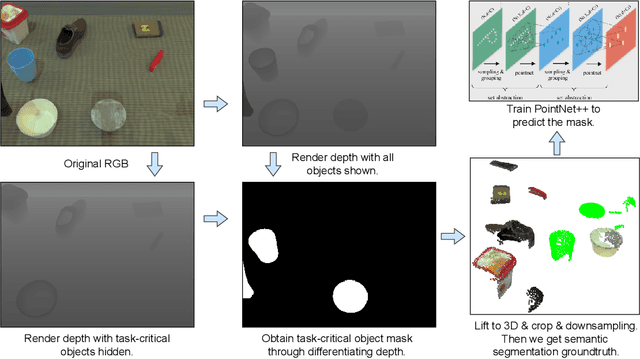

Abstract: We present AnchorDP3, a diffusion policy framework for dual-arm robotic manipulation that achieves state-of-the-art performance in highly randomized environments. AnchorDP3 integrates three key innovations: (1) Simulator-Supervised Semantic Segmentation, which uses rendered ground truth to explicitly segment task-critical objects within the point cloud and thereby provides strong affordance priors; (2) Task-Conditioned Feature Encoders, lightweight modules that process augmented point clouds per task and enable efficient multi-task learning through a shared diffusion-based action expert; (3) Affordance-Anchored Keypose Diffusion with Full State Supervision, which replaces dense trajectory prediction with sparse, geometrically meaningful action anchors (keyposes such as the pre-grasp pose and grasp pose) anchored directly to affordances, drastically simplifying the prediction space. The action expert is required to predict both robot joint angles and end-effector poses simultaneously, which exploits geometric consistency to accelerate convergence and boost accuracy. Trained on large-scale, procedurally generated simulation data, AnchorDP3 achieves a 98.7% average success rate in the RoboTwin benchmark across diverse tasks under extreme randomization of objects, clutter, table height, lighting, and backgrounds. When integrated with the RoboTwin real-to-sim pipeline, this framework has the potential to enable fully autonomous generation of deployable visuomotor policies from only a scene and an instruction, eliminating human demonstrations from the learning of manipulation skills.

End-to-End HOI Reconstruction Transformer with Graph-based Encoding

Mar 08, 2025

Abstract: With the diversification of human-object interaction (HOI) applications and the success of capturing human meshes, HOI reconstruction has gained widespread attention. Existing mainstream HOI reconstruction methods often rely on explicitly modeling interactions between humans and objects. However, this approach creates a natural conflict between 3D mesh reconstruction, which emphasizes global structure, and fine-grained contact reconstruction, which focuses on local details. To address the limitations of explicit modeling, we propose the End-to-End HOI Reconstruction Transformer with Graph-based Encoding (HOI-TG). It implicitly learns the interaction between humans and objects by leveraging self-attention mechanisms. Within the transformer architecture, we devise graph residual blocks to aggregate the topology among vertices of different spatial structures. This dual focus effectively balances global and local representations. Without bells and whistles, HOI-TG achieves state-of-the-art performance on the BEHAVE and InterCap datasets. In particular, on the challenging InterCap dataset, our method improves the reconstruction results for human and object meshes by 8.9% and 8.6%, respectively.

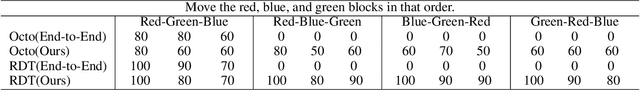

An Atomic Skill Library Construction Method for Data-Efficient Embodied Manipulation

Jan 25, 2025

Abstract: Embodied manipulation is a fundamental ability in the realm of embodied artificial intelligence. Although current embodied manipulation models show some generalization in specific settings, they struggle in new environments and tasks due to the complexity and diversity of real-world scenarios. The traditional end-to-end approach to data collection and training leads to significant data demands, which we call "data explosion". To address this issue, we introduce a three-wheeled data-driven method to build an atomic skill library. We divide tasks into subtasks using Vision-Language Planning (VLP). Then, atomic skill definitions are formed by abstracting the subtasks. Finally, an atomic skill library is constructed via data collection and Vision-Language-Action (VLA) fine-tuning. As the atomic skill library expands dynamically with the three-wheel update strategy, the range of tasks it can cover grows naturally. In this way, our method shifts the focus from end-to-end tasks to atomic skills, significantly reducing data costs while maintaining high performance and enabling efficient adaptation to new tasks. Extensive experiments in real-world settings demonstrate the effectiveness and efficiency of our approach.

Empowering Embodied Manipulation: A Bimanual-Mobile Robot Manipulation Dataset for Household Tasks

May 29, 2024

Abstract: As Embodied AI advances, it increasingly enables robots to handle the complexity of household manipulation tasks more effectively. However, the application of robots in these settings remains limited due to the scarcity of bimanual-mobile robot manipulation datasets. Existing datasets either focus solely on simple grasping tasks using single-arm robots without mobility, or collect sensor data limited to a narrow range of sensory inputs. As a result, these datasets often fail to capture the intricate and dynamic nature of the real-world tasks that bimanual-mobile robots are expected to perform. To address these limitations, we introduce BRMData, a Bimanual-mobile Robot Manipulation Dataset designed specifically for household applications. The dataset includes 10 diverse household tasks, ranging from simple single-arm manipulation to more complex dual-arm and mobile manipulations. It is collected using multi-view and depth-sensing data acquisition strategies. Human-robot interactions and multi-object manipulations are integrated into the task designs to closely simulate real-world household applications. Moreover, we present a Manipulation Efficiency Score (MES) metric to evaluate both the precision and efficiency of robot manipulation methods. BRMData aims to drive the development of versatile robot manipulation technologies, specifically focusing on advancing imitation learning methods from human demonstrations. The dataset is now open-sourced and available at https://embodiedrobot.github.io/ to support research and development in the field of Embodied Manipulation.

Preferred-Action-Optimized Diffusion Policies for Offline Reinforcement Learning

May 29, 2024

Abstract: Offline reinforcement learning (RL) aims to learn optimal policies from previously collected datasets. Recently, due to their powerful representational capabilities, diffusion models have shown significant potential as policy models for offline RL problems. However, previous offline RL algorithms based on diffusion policies generally adopt weighted regression to improve the policy. This approach optimizes the policy using only the collected actions and is sensitive to Q-values, which limits the potential for further performance enhancement. To this end, we propose a novel preferred-action-optimized diffusion policy for offline RL. In particular, an expressive conditional diffusion model is utilized to represent the diverse distribution of a behavior policy. Meanwhile, based on the diffusion model, preferred actions within the same behavior distribution are automatically generated through the critic function. Moreover, an anti-noise preference optimization is designed to achieve policy improvement by using the preferred actions, which can adapt to noise-preferred actions for stable training. Extensive experiments demonstrate that the proposed method provides competitive or superior performance compared to previous state-of-the-art offline RL methods, particularly in sparse-reward tasks such as Kitchen and AntMaze. Additionally, we empirically demonstrate the effectiveness of anti-noise preference optimization.
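
The sensitivity of weighted regression to Q-values can be illustrated with a minimal sketch. It assumes the common exponentiated-advantage weighting, exp((Q - baseline) / temperature), which the abstract does not specify; the function name `regression_weights`, the baseline choice, and the sample Q-values are hypothetical and are not taken from the paper.

```python
import math
from typing import List


def regression_weights(q_values: List[float], baseline: float,
                       temperature: float) -> List[float]:
    """Normalized exponentiated-advantage weights for dataset actions.

    Assumption: weight_i is proportional to exp((Q_i - baseline) / temperature).
    This is one common form of weighted regression, used here for illustration.
    """
    raw = [math.exp((q - baseline) / temperature) for q in q_values]
    total = sum(raw)
    return [w / total for w in raw]


# Three dataset actions whose (hypothetical) Q-values differ only slightly.
q = [1.0, 1.1, 0.9]
baseline = sum(q) / len(q)

# A high temperature spreads the weight almost evenly over the actions.
soft = regression_weights(q, baseline, temperature=1.0)

# A low temperature puts nearly all weight on the action with the largest Q,
# so a small error in the Q estimate can change which action dominates.
sharp = regression_weights(q, baseline, temperature=0.01)

print([round(w, 3) for w in soft])
print([round(w, 3) for w in sharp])
```

In both cases only the collected actions receive weight, which is the limitation the abstract points to.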

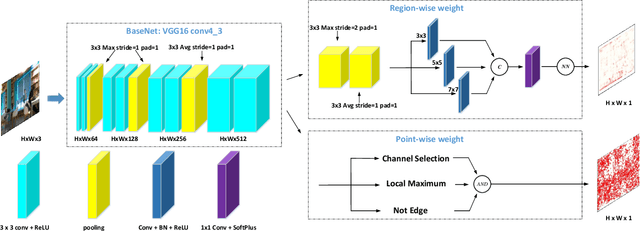

RaP-Net: A Region-wise and Point-wise Weighting Network to Extract Robust Keypoints for Indoor Localization

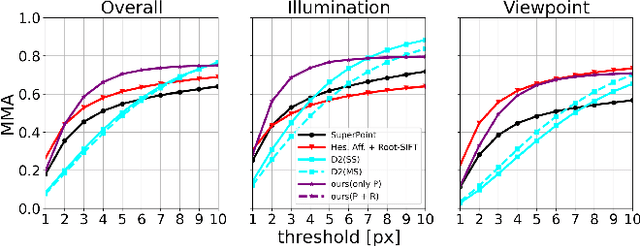

Dec 01, 2020

Abstract: Image keypoint extraction is an important step for visual localization. Localization in indoor environments is challenging because there may be many unreliable features on dynamic or repetitive objects. This kind of reliability cannot be learned well by existing Convolutional Neural Network (CNN) based feature extractors. We propose a novel network, RaP-Net, which explicitly addresses feature invariability with a region-wise predictor and combines it with a point-wise predictor to select reliable keypoints in an image. We also build a new dataset, OpenLORIS-Location, to train this network. The dataset contains 1553 indoor images with location labels. There are various scene changes between images of the same location, which can help a network learn the invariability in typical indoor scenes. Experimental results show that the proposed RaP-Net trained with the OpenLORIS-Location dataset significantly outperforms existing CNN-based keypoint extraction algorithms for indoor localization. The code and data are available at https://github.com/ivipsourcecode/RaP-Net.
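
One way to picture combining a region-wise predictor with a point-wise predictor is sketched below. The abstract does not state how the two outputs are combined, so the element-wise product, the top-k selection, the function name `select_keypoints`, and the toy score maps are all assumptions made for illustration, not the RaP-Net implementation.

```python
from typing import List, Tuple


def select_keypoints(point_scores: List[List[float]],
                     region_weights: List[List[float]],
                     k: int) -> List[Tuple[int, int, float]]:
    """Return the k pixels with the highest combined score.

    Assumption: the combined score is point_score * region_weight, so a
    strong corner inside an unreliable region is suppressed.
    """
    scored = []
    for r, (p_row, w_row) in enumerate(zip(point_scores, region_weights)):
        for c, (p, w) in enumerate(zip(p_row, w_row)):
            scored.append((r, c, p * w))
    # Sort by combined score, highest first, and keep the top k.
    scored.sort(key=lambda item: item[2], reverse=True)
    return scored[:k]


# Toy 2x2 maps. Pixel (0, 0) has the strongest point-wise response but lies
# in a region with low reliability (for example, a movable object).
point_scores = [[0.9, 0.2],
                [0.8, 0.7]]
region_weights = [[0.1, 1.0],
                  [1.0, 0.5]]

keypoints = select_keypoints(point_scores, region_weights, k=2)
print(keypoints)
```

In this toy example the pixel with the strongest point-wise response is not selected, because its region weight is low.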

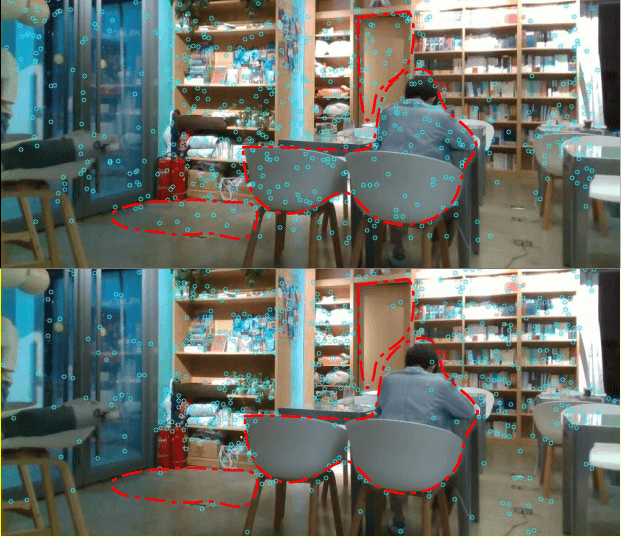

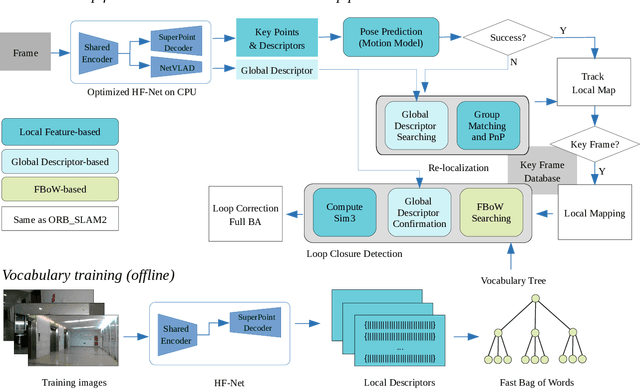

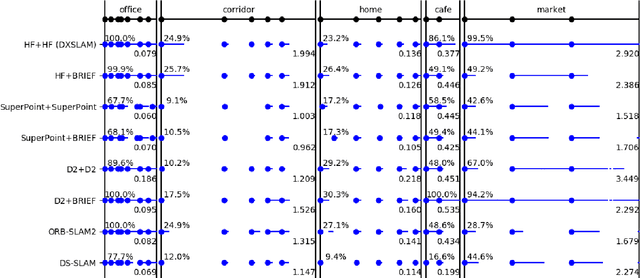

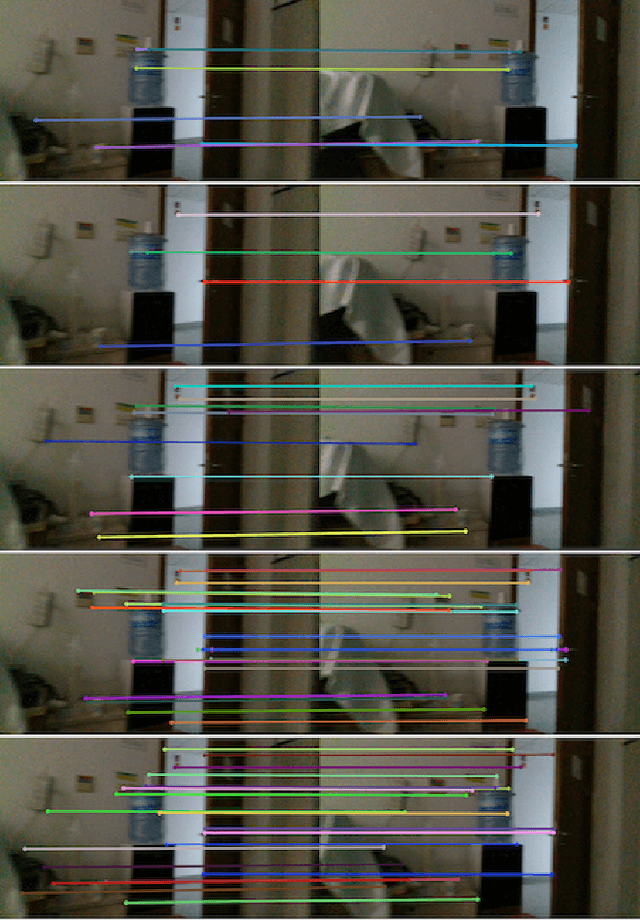

DXSLAM: A Robust and Efficient Visual SLAM System with Deep Features

Aug 12, 2020

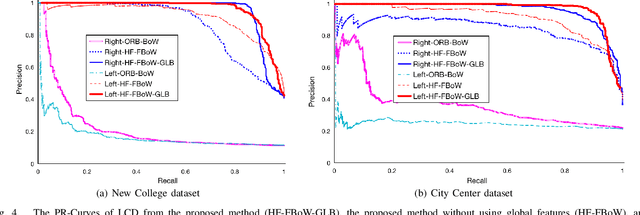

Abstract: A robust and efficient Simultaneous Localization and Mapping (SLAM) system is essential for robot autonomy. For visual SLAM algorithms, although the theoretical framework has been well established for most aspects, feature extraction and association are still empirically designed in most cases and can be vulnerable in complex environments. This paper shows that feature extraction with deep convolutional neural networks (CNNs) can be seamlessly incorporated into a modern SLAM framework. The proposed SLAM system utilizes a state-of-the-art CNN to detect keypoints in each image frame and to produce not only keypoint descriptors but also a global descriptor of the whole image. These local and global features are then used by different SLAM modules, resulting in much greater robustness against environmental and viewpoint changes compared with hand-crafted features. We also train a visual vocabulary of local features with a Bag of Words (BoW) method. Based on the local features, global features, and the vocabulary, a highly reliable loop closure detection method is built. Experimental results show that all the proposed modules significantly outperform the baseline, and the full system achieves much lower trajectory errors and much higher correct rates on all evaluated data. Furthermore, by optimizing the CNN with the Intel OpenVINO toolkit and utilizing the Fast BoW library, the system benefits greatly from the SIMD (single-instruction-multiple-data) techniques in modern CPUs. The full system can run in real time without any GPU or other accelerators. The code is public at https://github.com/ivipsourcecode/dxslam.

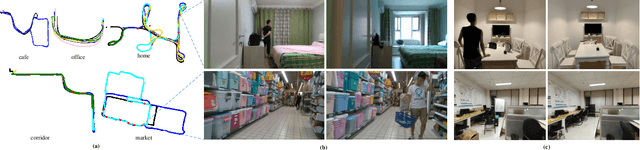

Are We Ready for Service Robots? The OpenLORIS-Scene Datasets for Lifelong SLAM

Nov 13, 2019

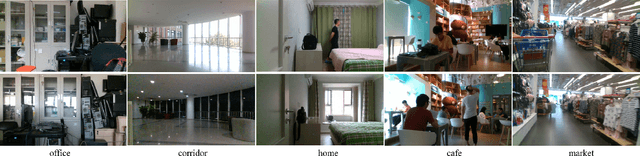

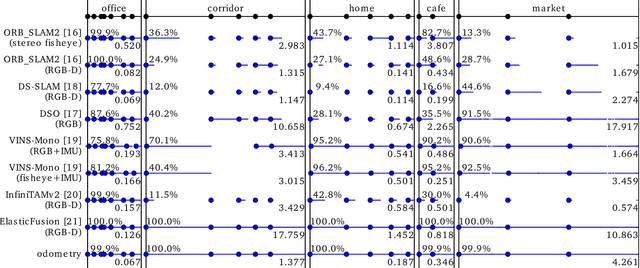

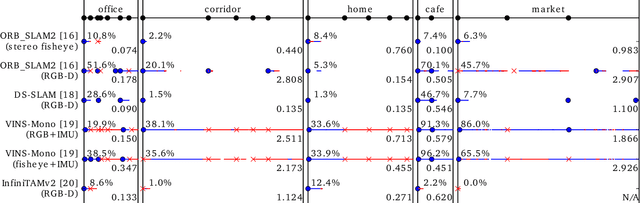

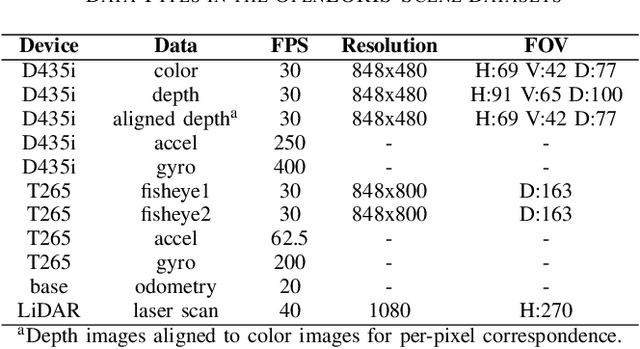

Abstract: Service robots should be able to operate autonomously in dynamic and daily changing environments over an extended period of time. While Simultaneous Localization And Mapping (SLAM) is one of the most fundamental problems for robotic autonomy, most existing SLAM works are evaluated with data sequences that are recorded in a short period of time. In real-world deployment, there can be out-of-sight scene changes caused by both natural factors and human activities. For example, in home scenarios, most objects may be movable, replaceable or deformable, and the visual features of the same place may differ significantly across successive days. Such out-of-sight dynamics pose great challenges to the robustness of pose estimation, and hence to a robot's long-term deployment and operation. To differentiate the aforementioned problem from conventional works, which are usually evaluated in a static setting in a single run, the term lifelong SLAM is used here to address SLAM problems in an ever-changing environment over a long period of time. To accelerate lifelong SLAM research, we release the OpenLORIS-Scene datasets. The data are collected in real-world indoor scenes, multiple times in each place, to include scene changes in real life. We also design benchmarking metrics for lifelong SLAM, with which the robustness and accuracy of pose estimation are evaluated separately. The datasets and benchmark are available online at https://lifelong-robotic-vision.github.io/dataset/scene.
