Pengpeng Zhao

Soochow University

How Do Graph Signals Affect Recommendation: Unveiling the Mystery of Low and High-Frequency Graph Signals

Dec 10, 2025

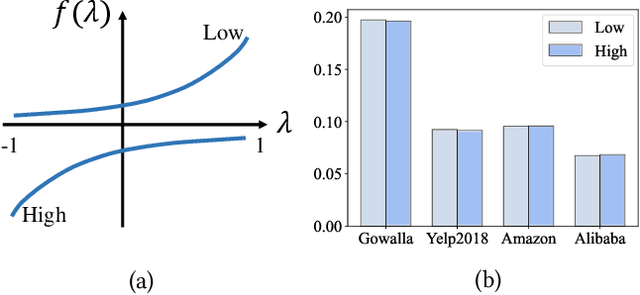

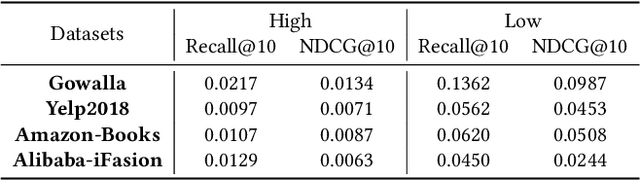

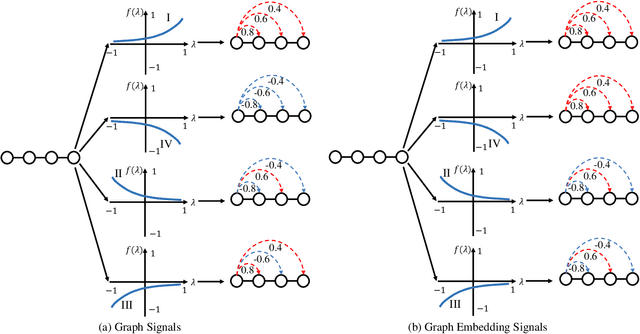

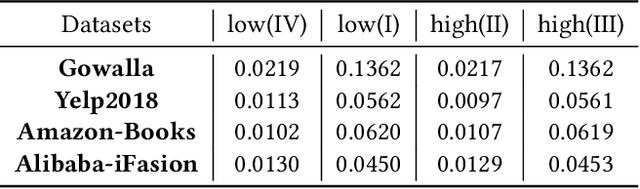

Abstract: Spectral graph neural networks (GNNs) are highly effective in modeling graph signals, with their success in recommendation often attributed to low-pass filtering. However, recent studies highlight the importance of high-frequency signals. The role of low-frequency and high-frequency graph signals in recommendation remains unclear. This paper aims to bridge this gap by investigating the influence of graph signals on recommendation performance. We theoretically prove that the effects of low-frequency and high-frequency graph signals are equivalent in recommendation tasks, as both contribute by smoothing the similarities between user-item pairs. To leverage this insight, we propose a frequency signal scaler, a plug-and-play module that adjusts the graph signal filter function to fine-tune the smoothness between user-item pairs, making it compatible with any GNN model. Additionally, we identify and prove that graph embedding-based methods cannot fully capture the characteristics of graph signals. To address this limitation, a space flip method is introduced to restore the expressive power of graph embeddings. Remarkably, we demonstrate that either low-frequency or high-frequency graph signals alone are sufficient for effective recommendations. Extensive experiments on four public datasets validate the effectiveness of our proposed methods. Code is available at https://github.com/mojosey/SimGCF.
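
To make the notion of low-pass filtering on a graph concrete, the following is a minimal sketch and not the paper's method. It assumes a symmetric, unweighted adjacency matrix with no isolated nodes and uses the filter (I + Â)/2, one simple low-pass choice, where Â is the symmetrically normalized adjacency. All function names are hypothetical. Smoothness is measured by the Laplacian quadratic form xᵀ(I − Â)x, which the filter cannot increase.

```python
import math


def normalized_adjacency(adj):
    """Return A_hat = D^{-1/2} A D^{-1/2} for a symmetric 0/1 adjacency matrix."""
    n = len(adj)
    deg = [sum(row) for row in adj]
    return [
        [adj[i][j] / math.sqrt(deg[i] * deg[j]) if adj[i][j] else 0.0
         for j in range(n)]
        for i in range(n)
    ]


def matvec(m, x):
    """Plain matrix-vector product."""
    return [sum(m[i][j] * x[j] for j in range(len(x))) for i in range(len(m))]


def low_pass(a_hat, x):
    """Apply the filter (I + A_hat) / 2, an assumed low-pass choice."""
    ax = matvec(a_hat, x)
    return [(xi + axi) / 2.0 for xi, axi in zip(x, ax)]


def laplacian_energy(a_hat, x):
    """Return x^T (I - A_hat) x; smaller values mean a smoother signal."""
    ax = matvec(a_hat, x)
    return sum(xi * (xi - axi) for xi, axi in zip(x, ax))


# Path graph 0-1-2-3 carrying a rapidly alternating (high-frequency) signal.
adj = [[0, 1, 0, 0], [1, 0, 1, 0], [0, 1, 0, 1], [0, 0, 1, 0]]
a_hat = normalized_adjacency(adj)
signal = [1.0, -1.0, 1.0, -1.0]
smoothed = low_pass(a_hat, signal)
print(laplacian_energy(a_hat, signal), laplacian_energy(a_hat, smoothed))
```

The frequency response of this filter is (1 + λ)/2 for each eigenvalue λ of Â, so components near λ = −1 (the highest graph frequencies) are suppressed most strongly.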

Wavelet Enhanced Adaptive Frequency Filter for Sequential Recommendation

Nov 10, 2025

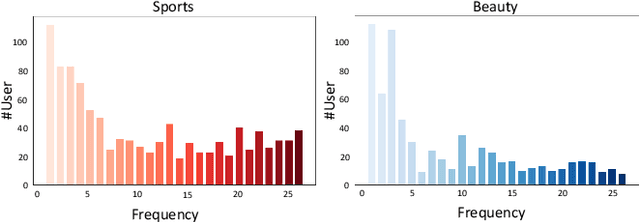

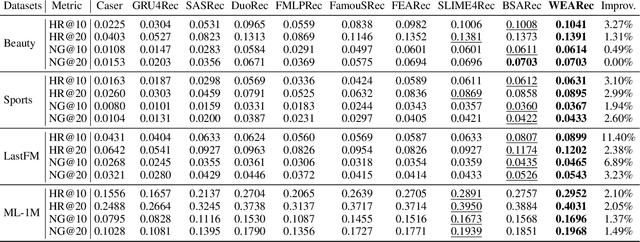

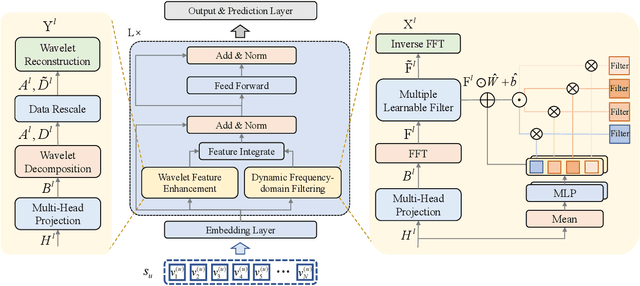

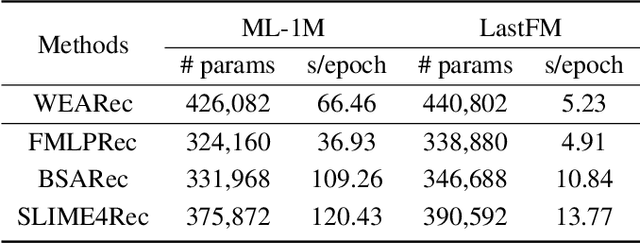

Abstract: Sequential recommendation has garnered significant attention for its ability to capture dynamic preferences by mining users' historical interaction data. Given that users' complex and intertwined periodic preferences are difficult to disentangle in the time domain, recent research is exploring frequency-domain analysis to identify these hidden patterns. However, current frequency-domain-based methods suffer from two key limitations: (i) they primarily employ static filters with fixed characteristics, overlooking the personalized nature of behavioral patterns; (ii) while the global discrete Fourier transform excels at modeling long-range dependencies, it can blur non-stationary signals and short-term fluctuations. To overcome these limitations, we propose a novel method called Wavelet Enhanced Adaptive Frequency Filter for Sequential Recommendation. Specifically, it consists of two vital modules: dynamic frequency-domain filtering and wavelet feature enhancement. The former dynamically adjusts filtering operations based on behavioral sequences to extract personalized global information, and the latter integrates the wavelet transform to reconstruct sequences, enhancing blurred non-stationary signals and short-term fluctuations. Finally, these two modules work together to achieve comprehensive performance and efficiency optimization in long sequential recommendation scenarios. Extensive experiments on four widely used benchmark datasets demonstrate the superiority of our work.
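
As a rough illustration of how a wavelet transform can emphasize short-term fluctuations before a sequence is reconstructed, here is a minimal one-level Haar sketch. The abstract does not name the wavelet or the enhancement rule, so the Haar basis, the `gain` parameter, and all function names below are assumptions made only for this example.

```python
import math

SQRT2 = math.sqrt(2.0)


def haar_forward(x):
    """One-level orthonormal Haar transform of an even-length sequence.

    Returns (approximation, detail): pairwise scaled sums capture the local
    trend, pairwise scaled differences capture short-term fluctuations.
    """
    if len(x) % 2 != 0:
        raise ValueError("sequence length must be even")
    approx = [(x[i] + x[i + 1]) / SQRT2 for i in range(0, len(x), 2)]
    detail = [(x[i] - x[i + 1]) / SQRT2 for i in range(0, len(x), 2)]
    return approx, detail


def haar_inverse(approx, detail):
    """Invert haar_forward exactly (up to floating-point error)."""
    out = []
    for a, d in zip(approx, detail):
        out.extend([(a + d) / SQRT2, (a - d) / SQRT2])
    return out


def enhance_fluctuations(x, gain):
    """Reconstruct x after scaling the detail coefficients by `gain`.

    gain = 1.0 returns the input unchanged; gain > 1.0 amplifies
    local, short-term changes (a hypothetical enhancement rule).
    """
    approx, detail = haar_forward(x)
    return haar_inverse(approx, [gain * d for d in detail])


sequence = [4.0, 2.0, 5.0, 5.0]
print(enhance_fluctuations(sequence, gain=2.0))
```

Unlike a global Fourier basis, each Haar detail coefficient depends on only two neighboring positions, which is why localized changes stay localized in the transformed representation.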

GBV-SQL: Guided Generation and SQL2Text Back-Translation Validation for Multi-Agent Text2SQL

Sep 16, 2025

Abstract: While Large Language Models have significantly advanced Text2SQL generation, a critical semantic gap persists where syntactically valid queries often misinterpret user intent. To mitigate this challenge, we propose GBV-SQL, a novel multi-agent framework that introduces Guided Generation with SQL2Text Back-translation Validation. This mechanism uses a specialized agent to translate the generated SQL back into natural language, which verifies its logical alignment with the original question. Critically, our investigation reveals that current evaluation is undermined by a systemic issue: the poor quality of the benchmarks themselves. We introduce a formal typology for "Gold Errors", which are pervasive flaws in the ground-truth data, and demonstrate how they obscure true model performance. On the challenging BIRD benchmark, GBV-SQL achieves 63.23% execution accuracy, a 5.8% absolute improvement. After removing flawed examples, GBV-SQL achieves 96.5% (dev) and 97.6% (test) execution accuracy on the Spider benchmark. Our work offers both a robust framework for semantic validation and a critical perspective on benchmark integrity, highlighting the need for more rigorous dataset curation.

CADRL: Category-aware Dual-agent Reinforcement Learning for Explainable Recommendations over Knowledge Graphs

Aug 06, 2024

Abstract: Knowledge graphs (KGs) have been widely adopted to mitigate data sparsity and address cold-start issues in recommender systems. While existing KG-based recommendation methods can predict user preferences and demands, they fall short in generating explicit recommendation paths and lack explainability. As a step beyond the above methods, recent advancements utilize reinforcement learning (RL) to find suitable items for a given user via explainable recommendation paths. However, the performance of these solutions is still limited by two issues: (1) a lack of ability to capture contextual dependencies from neighboring information, and (2) excessive reliance on short recommendation paths due to efficiency concerns. To surmount these challenges, we propose a category-aware dual-agent reinforcement learning (CADRL) model for explainable recommendations over KGs. Specifically, our model comprises two components: (1) a category-aware gated graph neural network that jointly captures context-aware item representations from neighboring entities and categories, and (2) a dual-agent RL framework where two agents efficiently traverse long paths to search for suitable items. Finally, experimental results show that CADRL outperforms state-of-the-art models in terms of both effectiveness and efficiency on large-scale datasets.

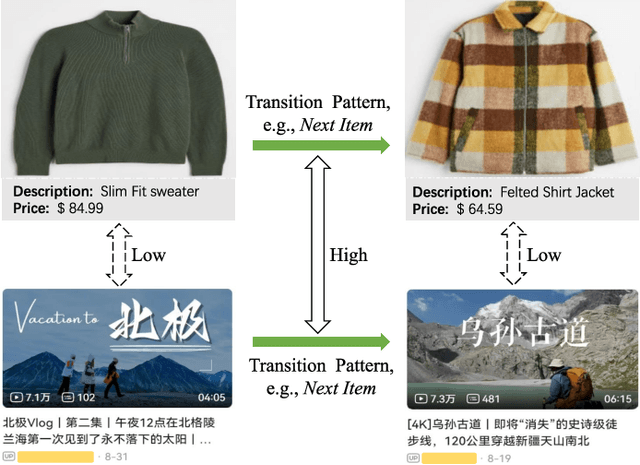

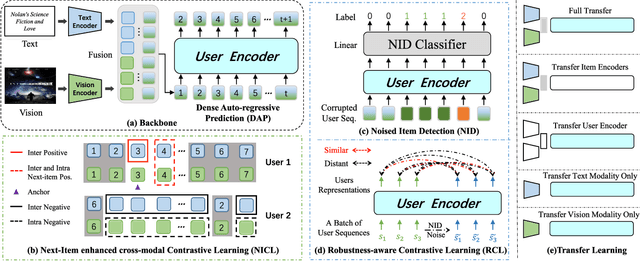

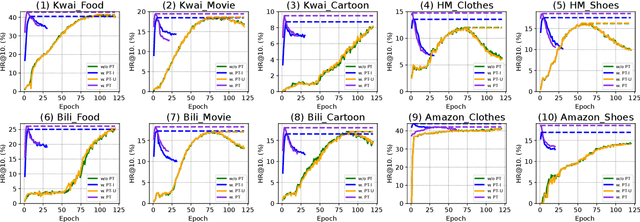

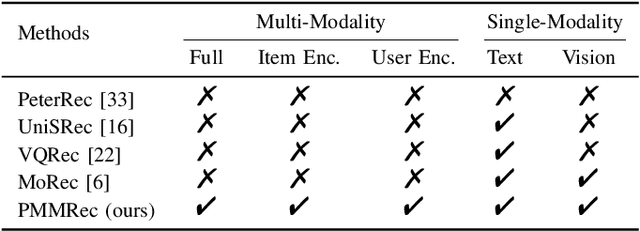

Multi-Modality is All You Need for Transferable Recommender Systems

Dec 18, 2023

Abstract: ID-based Recommender Systems (RecSys), where each item is assigned a unique identifier and subsequently converted into an embedding vector, have dominated the design of RecSys. Though prevalent, such an ID-based paradigm is not suitable for developing transferable RecSys and is also susceptible to the cold-start issue. In this paper, we break through the boundaries of the ID-based paradigm and propose a Pure Multi-Modality based Recommender system (PMMRec), which relies solely on the multi-modal contents of the items (e.g., texts and images) and learns transition patterns general enough to transfer across domains and platforms. Specifically, we design a plug-and-play framework architecture consisting of multi-modal item encoders, a fusion module, and a user encoder. To align the cross-modal item representations, we propose a novel next-item enhanced cross-modal contrastive learning objective, which is equipped with both inter- and intra-modality negative samples and explicitly incorporates the transition patterns of user behaviors into the item encoders. To ensure the robustness of user representations, we propose a novel noised item detection objective and a robustness-aware contrastive learning objective, which work together to denoise user sequences in a self-supervised manner. PMMRec is designed to be loosely coupled, so after being pre-trained on the source data, each component can be transferred alone, or in conjunction with other components, allowing PMMRec to achieve versatility under both multi-modality and single-modality transfer learning settings. Extensive experiments on 4 source and 10 target datasets demonstrate that PMMRec surpasses the state-of-the-art recommenders in both recommendation performance and transferability. Our code and dataset are available at: https://github.com/ICDE24/PMMRec.

Intent Contrastive Learning with Cross Subsequences for Sequential Recommendation

Oct 31, 2023

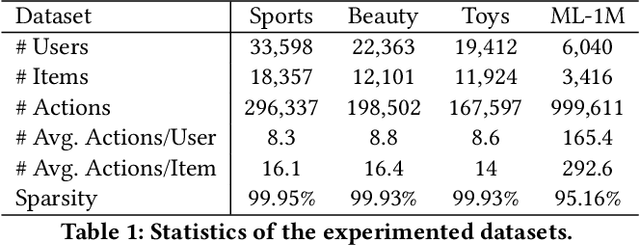

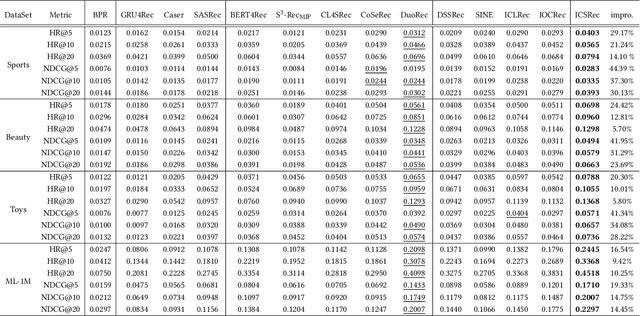

Abstract: Users' purchase behaviors are mainly influenced by their intentions (e.g., buying clothes for decoration, buying brushes for painting, etc.). Modeling a user's latent intention can significantly improve the performance of recommendations. Previous works model users' intentions by considering the predefined label in auxiliary information or introducing stochastic data augmentation to learn purposes in the latent space. However, the auxiliary information is sparse and not always available for recommender systems, and stochastic data augmentation may introduce noise and thus change the intentions hidden in the sequence. Therefore, leveraging user intentions for sequential recommendation (SR) can be challenging because they are frequently varied and unobserved. In this paper, Intent contrastive learning with Cross Subsequences for sequential Recommendation (ICSRec) is proposed to model users' latent intentions. Specifically, ICSRec first segments a user's sequential behaviors into multiple subsequences by using a dynamic sliding operation and feeds these subsequences into the encoder to generate the representations for the user's intentions. To tackle the problem of no explicit labels for purposes, ICSRec assumes different subsequences with the same target item may represent the same intention and proposes a coarse-grain intent contrastive learning to push these subsequences closer. Then, fine-grain intent contrastive learning is introduced to capture the fine-grain intentions of subsequences in sequential behaviors. Extensive experiments conducted on four real-world datasets demonstrate the superior performance of the proposed ICSRec model compared with baseline methods.
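
The segmentation and grouping idea can be sketched as follows. This is one plausible reading of the "dynamic sliding operation", not the authors' implementation: every position after the first becomes a target item, and the preceding items (capped at a hypothetical `max_len`) form its subsequence. Subsequences that share a target are then collected as candidate positives for the coarse-grain contrastive objective. Function names and the `max_len` parameter are assumptions.

```python
from collections import defaultdict


def split_subsequences(seq, max_len):
    """Return (subsequence, target) pairs from one interaction sequence.

    For each position t >= 1, the target is seq[t] and the subsequence is
    the window of at most max_len items immediately before it.
    """
    pairs = []
    for t in range(1, len(seq)):
        start = max(0, t - max_len)
        pairs.append((seq[start:t], seq[t]))
    return pairs


def group_by_target(user_sequences, max_len):
    """Map each target item to all subsequences (across users) that precede it."""
    groups = defaultdict(list)
    for seq in user_sequences:
        for subseq, target in split_subsequences(seq, max_len):
            groups[target].append(subseq)
    return groups


# Two toy users whose histories both lead to item 3.
sequences = [[1, 2, 3], [5, 3]]
groups = group_by_target(sequences, max_len=2)
print(groups[3])  # subsequences treated as sharing an intention
```

Any two subsequences in the same group would form a positive pair, while subsequences from other groups could serve as negatives in a contrastive loss.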

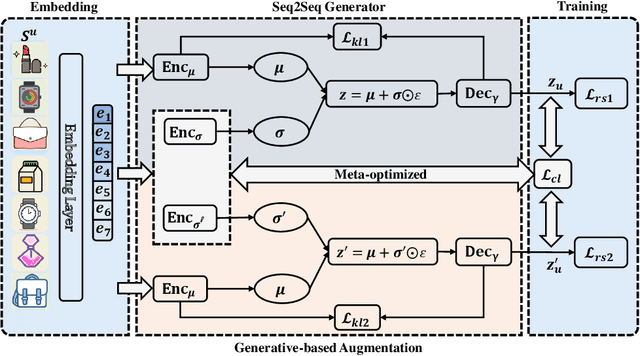

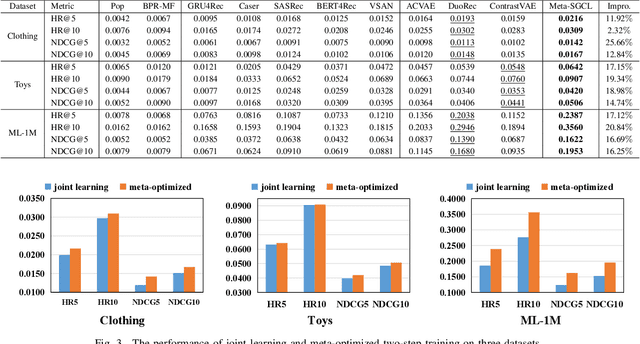

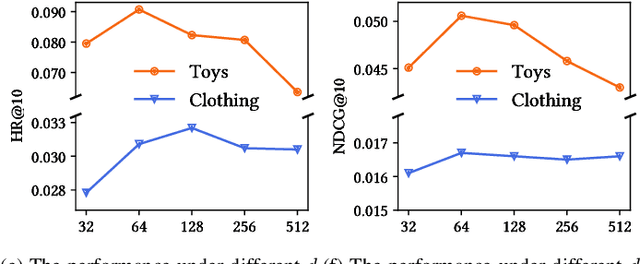

Meta-optimized Joint Generative and Contrastive Learning for Sequential Recommendation

Oct 21, 2023

Abstract: Sequential Recommendation (SR) has received increasing attention due to its ability to capture users' dynamic preferences. Recently, Contrastive Learning (CL) has provided an effective approach for sequential recommendation by learning invariance from different views of an input. However, most existing data or model augmentation methods may destroy semantic sequential interaction characteristics and often rely on the hand-crafted property of their contrastive view-generation strategies. In this paper, we propose a Meta-optimized Seq2Seq Generator and Contrastive Learning (Meta-SGCL) for sequential recommendation, which applies a meta-optimized two-step training strategy to adaptively generate contrastive views. Specifically, Meta-SGCL first introduces a simple yet effective augmentation method called the Sequence-to-Sequence (Seq2Seq) generator, which treats the Variational AutoEncoder (VAE) as the view generator and can constitute contrastive views while preserving the original sequence's semantics. Next, the model employs a meta-optimized two-step training strategy, which aims to adaptively generate contrastive views without relying on manually designed view-generation techniques. Finally, we evaluate our proposed method Meta-SGCL using three public real-world datasets. Compared with the state-of-the-art methods, our experimental results demonstrate the effectiveness of our model, and the code is available.

Contrastive Enhanced Slide Filter Mixer for Sequential Recommendation

May 07, 2023

Abstract: Sequential recommendation (SR) aims to model user preferences by capturing behavior patterns from their historical item interaction data. Most existing methods model user preference in the time domain, omitting the fact that users' behaviors are also influenced by various frequency patterns that are difficult to separate in the entangled chronological items. However, few attempts have been made to train SR in the frequency domain, and it is still unclear how to use the frequency components to learn an appropriate representation for the user. To solve this problem, we shift the viewpoint to the frequency domain and propose a novel Contrastive Enhanced SLIde Filter MixEr for Sequential Recommendation, named SLIME4Rec. Specifically, we design a frequency ramp structure to allow the learnable filter to slide on the frequency spectrum across different layers to capture different frequency patterns. Moreover, a Dynamic Frequency Selection (DFS) and a Static Frequency Split (SFS) module are proposed to replace the self-attention module for effectively extracting frequency information in two ways. DFS is used to select helpful frequency components dynamically, and SFS is combined with the dynamic frequency selection module to provide a more fine-grained frequency division. Finally, contrastive learning is utilized to improve the quality of user embeddings learned from the frequency domain. Extensive experiments conducted on five widely used benchmark datasets demonstrate that our proposed model performs significantly better than the state-of-the-art approaches. Our code is available at https://github.com/sudaada/SLIME4Rec.
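
The idea of a filter window that slides over the spectrum from layer to layer can be illustrated with a small sketch. It is not the SLIME4Rec implementation: the filter here is a fixed 0/1 band mask rather than a learnable one, the linear window schedule is a guess at what a "frequency ramp" might look like, and every function name is hypothetical. A naive DFT is used so the example needs only the standard library.

```python
import cmath


def dft(x):
    """Naive O(n^2) discrete Fourier transform."""
    n = len(x)
    return [
        sum(x[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))
        for k in range(n)
    ]


def idft(spectrum):
    """Naive inverse discrete Fourier transform."""
    n = len(spectrum)
    return [
        sum(spectrum[k] * cmath.exp(2j * cmath.pi * k * t / n) for k in range(n)) / n
        for t in range(n)
    ]


def band_filter(x, lo, hi):
    """Keep frequencies f with lo <= f < hi, where f = min(k, n - k).

    Masking bins k and n - k together keeps the output real-valued.
    """
    n = len(x)
    spectrum = dft(x)
    kept = [spectrum[k] if lo <= min(k, n - k) < hi else 0 for k in range(n)]
    return [value.real for value in idft(kept)]


def sliding_windows(num_bins, num_layers, width):
    """Assumed schedule: the window start moves linearly across the bins."""
    if num_layers == 1:
        return [(0, width)]
    step = (num_bins - width) / (num_layers - 1)
    return [(round(i * step), round(i * step) + width) for i in range(num_layers)]


signal = [1.0, 0.0, -1.0, 0.0, 1.0, 0.0, -1.0, 0.0]
num_bins = len(signal) // 2 + 1  # distinct frequencies of a real signal
for lo, hi in sliding_windows(num_bins, num_layers=3, width=3):
    print((lo, hi), [round(v, 3) for v in band_filter(signal, lo, hi)])
```

In a learnable variant, the 0/1 mask inside each window would be replaced by trainable complex weights, so each layer attends to a different part of the spectrum.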

Ensemble Modeling with Contrastive Knowledge Distillation for Sequential Recommendation

May 04, 2023

Abstract: Sequential recommendation aims to capture users' dynamic interests and predict the next item a user will prefer. Most sequential recommendation methods use a deep neural network as a sequence encoder to generate user and item representations. Existing works mainly center upon designing a stronger sequence encoder. However, few attempts have been made at training an ensemble of networks as sequence encoders, which is more powerful than a single network because an ensemble of parallel networks can yield diverse prediction results and hence better accuracy. In this paper, we present Ensemble Modeling with contrastive Knowledge Distillation for sequential recommendation (EMKD). Our framework adopts multiple parallel networks as an ensemble of sequence encoders and recommends items based on the output distributions of all these networks. To facilitate knowledge transfer between parallel networks, we propose a novel contrastive knowledge distillation approach, which performs knowledge transfer at the representation level via Intra-network Contrastive Learning (ICL) and Cross-network Contrastive Learning (CCL), as well as Knowledge Distillation (KD) at the logits level by minimizing the Kullback-Leibler divergence between the output distributions of the teacher network and the student network. To leverage contextual information, we train the primary masked item prediction task alongside the auxiliary attribute prediction task as a multi-task learning scheme. Extensive experiments on public benchmark datasets show that EMKD achieves a significant improvement compared with the state-of-the-art methods. Besides, we demonstrate that our ensemble method is a generalized approach that can also improve the performance of other sequential recommenders. Our code is available at this link: https://github.com/hw-du/EMKD.
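
The logits-level distillation term can be written down directly. The sketch below computes KL(teacher ‖ student) over softmax distributions for a single prediction. The `temperature` argument is a common distillation convention and an assumption here, since the abstract does not mention it. The function names are also hypothetical.

```python
import math


def softmax(logits, temperature=1.0):
    """Numerically stable softmax over a list of logits."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p, q):
    """KL(p || q) for two discrete distributions with q > 0 wherever p > 0."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def distillation_loss(teacher_logits, student_logits, temperature=1.0):
    """KL divergence from the teacher's item distribution to the student's."""
    teacher = softmax(teacher_logits, temperature)
    student = softmax(student_logits, temperature)
    return kl_divergence(teacher, student)


# Scores over three candidate items from two parallel encoders.
teacher_scores = [2.0, 1.0, 0.1]
student_scores = [1.5, 1.2, 0.3]
print(distillation_loss(teacher_scores, student_scores))
```

With several parallel networks, one natural arrangement is to let each network act as teacher for the others in turn and sum the resulting terms. Whether EMKD does exactly this is not stated in the abstract.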

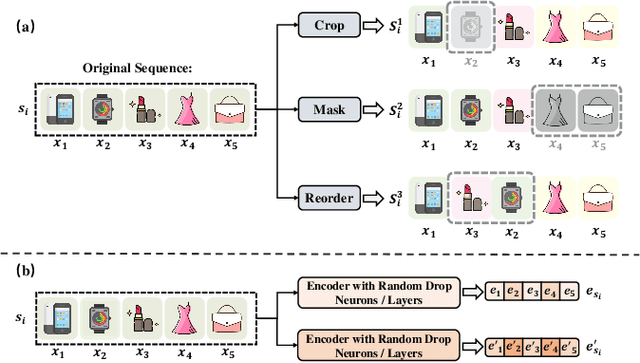

Meta-optimized Contrastive Learning for Sequential Recommendation

Apr 26, 2023

Abstract: Contrastive Learning (CL) has emerged as a promising approach to address the challenge of sparse and noisy recommendation data. Despite promising results, most existing CL methods perform only hand-crafted data augmentation or only model augmentation to generate contrastive pairs, and must search for a proper augmentation operation for each dataset, which makes the model hard to generalize. Additionally, since insufficient input data may lead the encoder to learn collapsed embeddings, these CL methods require a relatively large amount of training data (e.g., a large batch size or memory bank) to contrast. However, not all contrastive pairs are informative and discriminative enough for the training process. Therefore, a more general CL-based recommendation model called Meta-optimized Contrastive Learning for sequential Recommendation (MCLRec) is proposed in this work. By applying both data augmentation and learnable model augmentation operations, this work extends the standard CL framework by contrasting data and model augmented views to adaptively capture the informative features hidden in stochastic data augmentation. Moreover, MCLRec uses meta-learning to guide the updating of the model augmenters, which helps to improve the quality of contrastive pairs without enlarging the amount of input data. Finally, a contrastive regularization term is introduced to encourage the augmentation model to generate more informative augmented views and to avoid overly similar contrastive pairs during the meta updating. The experimental results on commonly used datasets validate the effectiveness of MCLRec.
