Chang Li

PACE: Pretrained Audio Continual Learning

Feb 03, 2026

Abstract:Audio is a fundamental modality for analyzing speech, music, and environmental sounds. Although pretrained audio models have significantly advanced audio understanding, they remain fragile in real-world settings where data distributions shift over time. In this work, we present the first systematic benchmark for audio continual learning (CL) with pretrained models (PTMs), together with a comprehensive analysis of its unique challenges. Unlike in vision, where parameter-efficient fine-tuning (PEFT) has proven effective for CL, directly transferring such strategies to audio leads to poor performance. This stems from a fundamental property of audio backbones: they focus on low-level spectral details rather than structured semantics, causing severe upstream-downstream misalignment. Through extensive empirical study, we identify analytic classifiers with first-session adaptation (FSA) as a promising direction, but also reveal two major limitations: representation saturation in coarse-grained scenarios and representation drift in fine-grained scenarios. To address these challenges, we propose PACE, a novel method that enhances FSA via a regularized analytic classifier and enables multi-session adaptation through adaptive subspace-orthogonal PEFT for improved semantic alignment. In addition, we introduce spectrogram-based boundary-aware perturbations to mitigate representation overlap and improve stability. Experiments on six diverse audio CL benchmarks demonstrate that PACE substantially outperforms state-of-the-art baselines, marking an important step toward robust and scalable audio continual learning with PTMs.
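
As a rough illustration of what an analytic classifier on frozen features can look like, the sketch below fits a ridge-regularized linear head in closed form and updates it session by session from accumulated statistics. This is a generic textbook formulation, not the PACE method itself: the class name `RidgeAnalyticClassifier`, the `ridge` strength, and the toy features are all assumptions made for illustration, and the abstract does not specify how PACE regularizes its classifier.

```python
import numpy as np


class RidgeAnalyticClassifier:
    """Hypothetical closed-form linear head over frozen backbone features.

    Keeps running sums of X^T X and X^T Y so each new session can be
    absorbed without revisiting earlier data.
    """

    def __init__(self, feat_dim, num_classes, ridge=1.0):
        self.gram = np.zeros((feat_dim, feat_dim))      # running X^T X
        self.cross = np.zeros((feat_dim, num_classes))  # running X^T Y
        self.ridge = ridge                              # assumed regularization strength
        self.weights = np.zeros((feat_dim, num_classes))

    def update(self, feats, labels):
        # One-hot encode the integer labels of the current session.
        onehot = np.eye(self.cross.shape[1])[np.asarray(labels)]
        self.gram += feats.T @ feats
        self.cross += feats.T @ onehot
        # Ridge solution: W = (X^T X + ridge * I)^{-1} X^T Y.
        reg = self.ridge * np.eye(self.gram.shape[0])
        self.weights = np.linalg.solve(self.gram + reg, self.cross)

    def predict(self, feats):
        return (feats @ self.weights).argmax(axis=1)


# Toy two-session run: classes 0 and 1 arrive first, class 2 arrives later.
session1 = np.array([[1.0, 0.0, 0.0], [0.9, 0.1, 0.0],
                     [0.0, 1.0, 0.0], [0.1, 0.9, 0.0]])
session2 = np.array([[0.0, 0.0, 1.0], [0.0, 0.1, 0.9]])

clf = RidgeAnalyticClassifier(feat_dim=3, num_classes=3, ridge=0.1)
clf.update(session1, [0, 0, 1, 1])
clf.update(session2, [2, 2])
predictions = clf.predict(np.eye(3))
```

Because only the two running sums are stored, the weights after the second session match what a single ridge fit on all the data would give, which is why this family of classifiers is attractive when earlier sessions cannot be replayed.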

Exploiting the Prior of Generative Time Series Imputation

Dec 29, 2025

Abstract:Time series imputation, i.e., filling in the missing values of a time recording, has applications in electricity, finance, and weather modelling. Previous methods have introduced generative models such as diffusion probabilistic models and Schrödinger bridge models to conditionally generate the missing values from Gaussian noise or directly from linear interpolation results. However, because their prior is not informative about the ground-truth target, their generation process inevitably suffers from an increased burden and limited imputation accuracy. In this work, we present Bridge-TS, which builds a data-to-data generation process for generative time series imputation and exploits the prior through two novel designs. First, we propose an expert prior, leveraging a pretrained transformer-based module as an expert to fill the missing values with a deterministic estimate, and then taking the result as the prior for the ground-truth target. Second, we explore compositional priors, using several pretrained models to provide different estimates and then combining them in the data-to-data generation process to achieve a compositional priors-to-target imputation process. Experiments conducted on several benchmark datasets such as ETT, Exchange, and Weather show that Bridge-TS reaches a new record of imputation accuracy in terms of mean squared error and mean absolute error, demonstrating the benefit of improving the prior for generative time series imputation.
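
For context, the linear interpolation prior that the abstract attributes to earlier methods can be sketched in a few lines. This is a minimal illustration of that baseline only, not of Bridge-TS or its expert prior; the function name `linear_interpolation_prior` and the choice to copy the nearest observed value into leading and trailing gaps are assumptions.

```python
import math


def linear_interpolation_prior(series):
    """Fill NaN entries by linear interpolation between observed neighbours.

    Gaps at the start or end copy the nearest observed value (an assumed
    convention; other edge treatments are possible).
    """
    observed = [i for i, v in enumerate(series) if not math.isnan(v)]
    if not observed:
        raise ValueError("series has no observed values")

    filled = list(series)
    # Leading and trailing gaps: repeat the closest observation.
    for i in range(observed[0]):
        filled[i] = series[observed[0]]
    for i in range(observed[-1] + 1, len(series)):
        filled[i] = series[observed[-1]]
    # Interior gaps: straight line between the two surrounding observations.
    for left, right in zip(observed, observed[1:]):
        for i in range(left + 1, right):
            t = (i - left) / (right - left)
            filled[i] = series[left] + t * (series[right] - series[left])
    return filled


nan = float("nan")
prior = linear_interpolation_prior([1.0, nan, 3.0, nan, nan, 6.0, nan])
```

A prior like this ignores everything except the two nearest observations, which is one way to read the abstract's remark that such priors are not very informative about the target.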

AI Meets Brain: Memory Systems from Cognitive Neuroscience to Autonomous Agents

Dec 29, 2025

Abstract:Memory serves as the pivotal nexus bridging past and future, providing both humans and AI systems with invaluable concepts and experience to navigate complex tasks. Recent research on autonomous agents has increasingly focused on designing efficient memory workflows by drawing on cognitive neuroscience. However, constrained by interdisciplinary barriers, existing works struggle to assimilate the essence of human memory mechanisms. To bridge this gap, we systematically synthesize interdisciplinary knowledge of memory, connecting insights from cognitive neuroscience with LLM-driven agents. Specifically, we first elucidate the definition and function of memory along a progressive trajectory from cognitive neuroscience through LLMs to agents. We then provide a comparative analysis of memory taxonomy, storage mechanisms, and the complete management lifecycle from both biological and artificial perspectives. Subsequently, we review the mainstream benchmarks for evaluating agent memory. Additionally, we explore memory security from the dual perspectives of attack and defense. Finally, we envision future research directions, with a focus on multimodal memory systems and skill acquisition.

Unsupervised Single-Channel Audio Separation with Diffusion Source Priors

Dec 23, 2025

Abstract:Single-channel audio separation aims to separate individual sources from a single-channel mixture. Most existing methods rely on supervised learning with synthetically generated paired data. However, obtaining high-quality paired data in real-world scenarios is often difficult. This data scarcity can degrade model performance under unseen conditions and limit generalization ability. To this end, in this work, we approach this problem from an unsupervised perspective, framing it as a probabilistic inverse problem. Our method requires only diffusion priors trained on individual sources. Separation is then achieved by iteratively guiding an initial state toward the solution through reconstruction guidance. Importantly, we introduce an advanced inverse problem solver specifically designed for separation, which mitigates gradient conflicts caused by interference between the diffusion prior and reconstruction guidance during inverse denoising. This design ensures high-quality and balanced separation performance across individual sources. Additionally, we find that initializing the denoising process with an augmented mixture instead of pure Gaussian noise provides an informative starting point that significantly improves the final performance. To further enhance audio prior modeling, we design a novel time-frequency attention-based network architecture that demonstrates strong audio modeling capability. Collectively, these improvements lead to significant performance gains, as validated across speech-sound event, sound event, and speech separation tasks.

U(PM)$^2$: Unsupervised polygon matching with pre-trained models for challenging stereo images

Nov 08, 2025

Abstract:Stereo image matching is a fundamental task in computer vision, photogrammetry and remote sensing, but polygon matching remains an almost unexplored field that faces the following challenges: disparity discontinuity, scale variation, training requirement, and generalization. To address these issues, this paper proposes U(PM)$^2$, a low-cost unsupervised polygon matching method with pre-trained models that unites automatically learned and handcrafted features. Its pipeline is as follows: first, the detector leverages the pre-trained Segment Anything Model to obtain masks; second, the vectorizer converts the masks to polygons and graphic structure; third, the global matcher addresses challenges from global viewpoint changes and scale variation based on a bidirectional-pyramid strategy with pre-trained LoFTR; finally, the local matcher further overcomes local disparity discontinuity and topology inconsistency of polygon matching through a local-joint geometry and multi-feature matching strategy with the Hungarian algorithm. We benchmark U(PM)$^2$ on the ScanNet and SceneFlow datasets using our proposed new metric; it achieves state-of-the-art accuracy at a competitive speed and satisfactory generalization performance at low cost without any training requirement.

Learning Temporal Abstractions via Variational Homomorphisms in Option-Induced Abstract MDPs

Jul 24, 2025

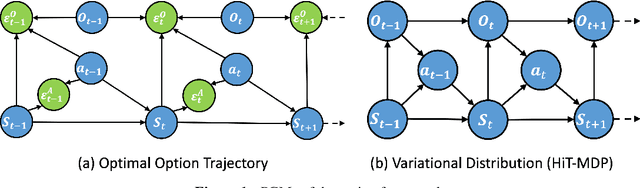

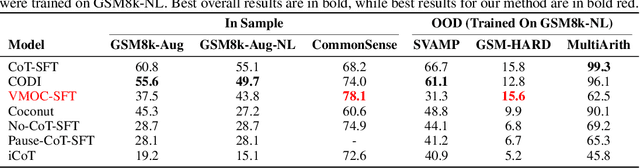

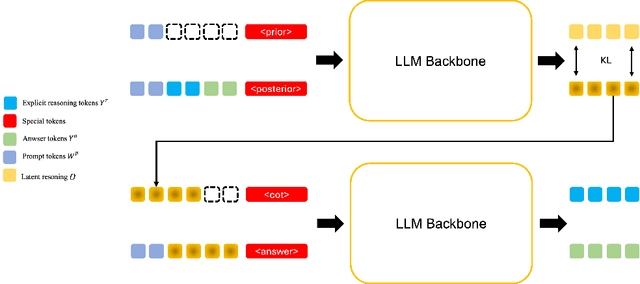

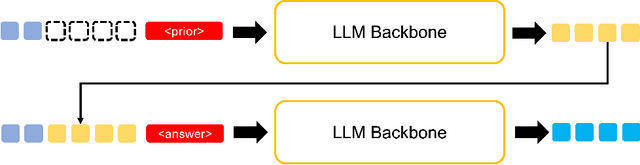

Abstract:Large Language Models (LLMs) have shown remarkable reasoning ability through explicit Chain-of-Thought (CoT) prompting, but generating these step-by-step textual explanations is computationally expensive and slow. To overcome this, we aim to develop a framework for efficient, implicit reasoning, where the model "thinks" in a latent space without generating explicit text for every step. We propose that these latent thoughts can be modeled as temporally-extended abstract actions, or options, within a hierarchical reinforcement learning framework. To effectively learn a diverse library of options as latent embeddings, we first introduce the Variational Markovian Option Critic (VMOC), an off-policy algorithm that uses variational inference within the HiT-MDP framework. To provide a rigorous foundation for using these options as an abstract reasoning space, we extend the theory of continuous MDP homomorphisms. This proves that learning a policy in the simplified, abstract latent space, for which VMOC is suited, preserves the optimality of the solution to the original, complex problem. Finally, we propose a cold-start procedure that leverages supervised fine-tuning (SFT) data to distill human reasoning demonstrations into this latent option space, providing a rich initialization for the model's reasoning capabilities. Extensive experiments demonstrate that our approach achieves strong performance on complex logical reasoning benchmarks and challenging locomotion tasks, validating our framework as a principled method for learning abstract skills for both language and control.

Latent Swap Joint Diffusion for Long-Form Audio Generation

Feb 07, 2025

Abstract:Previous work on long-form audio generation using global-view diffusion or iterative generation demands significant training or inference costs. While recent advancements in multi-view joint diffusion for panoramic generation provide an efficient option, they struggle with spectrum generation with severe overlap distortions and high cross-view consistency costs. We initially explore this phenomenon through the connectivity inheritance of latent maps and uncover that averaging operations excessively smooth the high-frequency components of the latent map. To address these issues, we propose Swap Forward (SaFa), a frame-level latent swap framework that synchronizes multiple diffusions to produce a globally coherent long audio with more spectrum details in a forward-only manner. At its core, the bidirectional Self-Loop Latent Swap is applied between adjacent views, leveraging stepwise diffusion trajectory to adaptively enhance high-frequency components without disrupting low-frequency components. Furthermore, to ensure cross-view consistency, the unidirectional Reference-Guided Latent Swap is applied between the reference and the non-overlap regions of each subview during the early stages, providing centralized trajectory guidance. Quantitative and qualitative experiments demonstrate that SaFa significantly outperforms existing joint diffusion methods and even training-based long audio generation models. Moreover, we find that it also adapts well to panoramic generation, achieving comparable state-of-the-art performance with greater efficiency and model generalizability. Project page is available at https://swapforward.github.io/.

An Atomic Skill Library Construction Method for Data-Efficient Embodied Manipulation

Jan 25, 2025

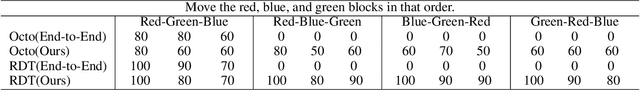

Abstract:Embodied manipulation is a fundamental ability in the realm of embodied artificial intelligence. Although current embodied manipulation models show certain generalizations in specific settings, they struggle in new environments and tasks due to the complexity and diversity of real-world scenarios. The traditional end-to-end data collection and training manner leads to significant data demands, which we call "data explosion". To address the issue, we introduce a three-wheeled data-driven method to build an atomic skill library. We divide tasks into subtasks using the Vision-Language Planning (VLP). Then, atomic skill definitions are formed by abstracting the subtasks. Finally, an atomic skill library is constructed via data collection and Vision-Language-Action (VLA) fine-tuning. As the atomic skill library expands dynamically with the three-wheel update strategy, the range of tasks it can cover grows naturally. In this way, our method shifts focus from end-to-end tasks to atomic skills, significantly reducing data costs while maintaining high performance and enabling efficient adaptation to new tasks. Extensive experiments in real-world settings demonstrate the effectiveness and efficiency of our approach.

Bridge-SR: Schrödinger Bridge for Efficient SR

Jan 14, 2025

Abstract:Speech super-resolution (SR), which generates a waveform at a higher sampling rate from its low-resolution version, is a long-standing critical task in speech restoration. Previous works have explored speech SR in different data spaces, but these methods either require additional compression networks or exhibit limited synthesis quality and inference speed. Motivated by recent advances in probabilistic generative models, we present Bridge-SR, a novel and efficient any-to-48kHz SR system in the speech waveform domain. Using tractable Schrödinger Bridge models, we leverage the observed low-resolution waveform as a prior, which is intrinsically informative for the high-resolution target. By optimizing a lightweight network to learn the score functions from the prior to the target, we achieve efficient waveform SR through a data-to-data generation process that fully exploits the instructive content contained in the low-resolution observation. Furthermore, we identify the importance of the noise schedule, data scaling, and auxiliary loss functions, which further improve the SR quality of bridge-based systems. The experiments conducted on the benchmark dataset VCTK demonstrate the efficiency of our system: (1) in terms of sample quality, Bridge-SR outperforms several strong baseline methods under different SR settings, using a lightweight network backbone (1.7M); (2) in terms of inference speed, our 4-step synthesis achieves better performance than the 8-step conditional diffusion counterpart (LSD: 0.911 vs 0.927). Demo at https://bridge-sr.github.io.

ΩSFormer: Dual-Modal Ω-like Super-Resolution Transformer Network for Cross-scale and High-accuracy Terraced Field Vectorization Extraction

Nov 26, 2024

Abstract:Terraced fields are a significant engineering practice for soil and water conservation (SWC). Terraced field extraction from remotely sensed imagery is the foundation for monitoring and evaluating SWC. This study is the first to propose a dual-modal Ω-like super-resolution Transformer network for intelligent terraced field vectorization extraction (TFVE), offering the following advantages: (1) reducing the edge segmentation error of a conventional multi-scale downsampling encoder by fusing original high-resolution features with downsampled features at each step of the encoder and leveraging a multi-head attention mechanism; (2) improving the accuracy of TFVE by proposing an Ω-like network structure, which fully integrates rich high-level features from both spectral and terrain data to form cross-scale super-resolution features; (3) validating an optimal fusion scheme for cross-modal and cross-scale (i.e., inconsistent spatial resolution between remotely sensed imagery and DEM) super-resolution feature extraction; (4) mitigating uncertainty between segmentation edge pixels by a coarse-to-fine and spatial topological semantic relationship optimization (STSRO) segmentation strategy; (5) leveraging a contour vibration neural network to continuously optimize parameters and iteratively vectorize terraced fields from semantic segmentation results. Moreover, a DMRVD for deep-learning-based TFVE was created for the first time, which covers nine study areas in four provinces of China, with a total coverage area of 22441 square kilometers. To assess the performance of ΩSFormer, classic and SOTA networks were compared. The mIOU of ΩSFormer improved by 0.165, 0.297 and 0.128, respectively, compared with the best-accuracy single-modal remotely sensed imagery, single-modal DEM and dual-modal results.
