Sadique Sheik

SynSense AG, Switzerland

A Low-Power Neuromorphic Approach for Efficient Eye-Tracking

Dec 01, 2023

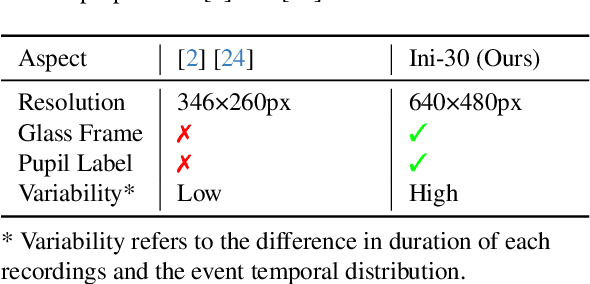

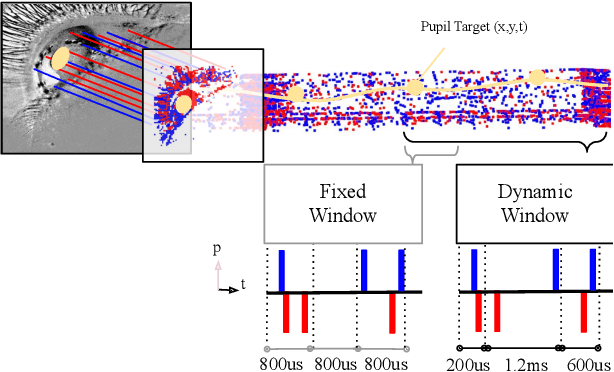

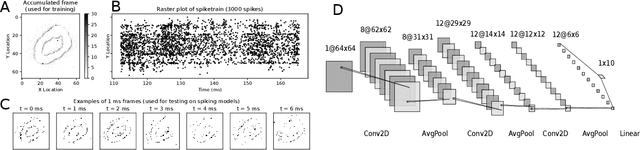

Abstract: This paper introduces a neuromorphic methodology for eye tracking, harnessing pure event data captured by a Dynamic Vision Sensor (DVS) camera. The framework integrates a directly trained Spiking Neural Network (SNN) regression model and leverages a state-of-the-art low-power edge neuromorphic processor, Speck, collectively aiming to advance the precision and efficiency of eye-tracking systems. First, we introduce a representative event-based eye-tracking dataset, "Ini-30", which was collected with two glass-mounted DVS cameras from thirty volunteers. Then, an SNN model named "Retina", based on Integrate And Fire (IAF) neurons, is described, featuring only 64k parameters (6.63x fewer than the latest) and achieving a pupil tracking error of only 3.24 pixels on a 64x64 DVS input. The continuous regression output is obtained by convolution, using a non-spiking temporal 1D filter slid across the output spiking layer. Finally, we evaluate Retina on the neuromorphic processor, showing an end-to-end power between 2.89-4.8 mW and a latency of 5.57-8.01 ms, depending on the time window. We also benchmark our model against the latest event-based eye-tracking method, "3ET", which was built upon event frames. Results show that Retina achieves superior precision, with 1.24px less pupil centroid error, and reduced computational complexity, with 35 times fewer MAC operations. We hope this work will open avenues for further investigation of closed-loop neuromorphic solutions and true event-based training pursuing edge performance.
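The readout described in this abstract (spikes from an output layer turned into a continuous value by a temporal 1D filter) can be illustrated with a minimal sketch. This is not the Retina implementation: the function names, the reset-by-subtraction rule, the threshold, and the kernel values are all assumptions chosen for illustration.

```python
def iaf_spikes(inputs, threshold=1.0):
    """Integrate-and-fire neuron: accumulate input and emit a spike at threshold.

    Reset by subtraction is an assumption; the abstract does not specify it.
    """
    v = 0.0
    spikes = []
    for x in inputs:
        v += x
        if v >= threshold:
            spikes.append(1)
            v -= threshold  # assumed reset rule
        else:
            spikes.append(0)
    return spikes


def temporal_filter(spikes, kernel):
    """Causal 1D convolution of a spike train with a non-spiking kernel."""
    out = []
    for t in range(len(spikes)):
        acc = 0.0
        for k, w in enumerate(kernel):
            if t - k >= 0:
                acc += w * spikes[t - k]
        out.append(acc)
    return out


# Hypothetical usage: a constant input current, then a two-tap averaging kernel.
spike_train = iaf_spikes([0.5] * 6)
smoothed = temporal_filter(spike_train, [0.5, 0.5])
```

The filter turns a binary spike train into a smooth, real-valued signal, which is what makes a regression target such as a pupil coordinate expressible from spiking outputs.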

Neuromorphic Intermediate Representation: A Unified Instruction Set for Interoperable Brain-Inspired Computing

Nov 24, 2023

Abstract: Spiking neural networks and neuromorphic hardware platforms that emulate neural dynamics are slowly gaining momentum and entering mainstream usage. Despite a well-established mathematical foundation for neural dynamics, the implementation details vary greatly across different platforms. Correspondingly, there are a plethora of software and hardware implementations with their own unique technology stacks. Consequently, neuromorphic systems typically diverge from the expected computational model, which challenges the reproducibility and reliability across platforms. Additionally, most neuromorphic hardware is limited by its access via a single software framework with a limited set of training procedures. Here, we establish a common reference frame for computations in neuromorphic systems, dubbed the Neuromorphic Intermediate Representation (NIR). NIR defines a set of computational primitives as idealized continuous-time hybrid systems that can be composed into graphs and mapped to and from various neuromorphic technology stacks. By abstracting away assumptions around discretization and hardware constraints, NIR faithfully captures the fundamental computation, while simultaneously exposing the exact differences between the evaluated implementation and the idealized mathematical formalism. We reproduce three NIR graphs across 7 neuromorphic simulators and 4 hardware platforms, demonstrating support for an unprecedented number of neuromorphic systems. With NIR, we decouple the evolution of neuromorphic hardware and software, ultimately increasing the interoperability between platforms and improving accessibility to neuromorphic technologies. We believe that NIR is an important step towards the continued study of brain-inspired hardware and bottom-up approaches aimed at an improved understanding of the computational underpinnings of nervous systems.

Ultra-low-power Image Classification on Neuromorphic Hardware

Sep 28, 2023

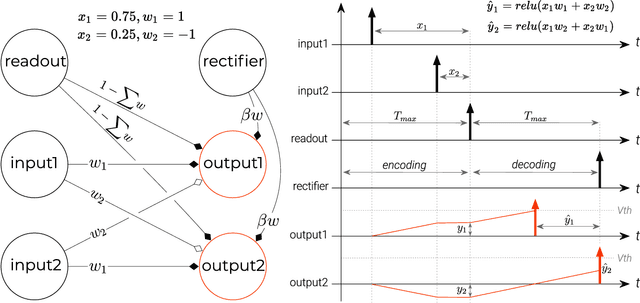

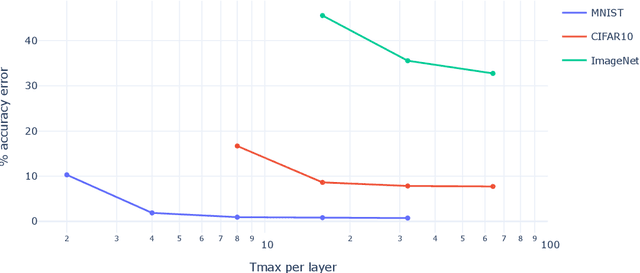

Abstract: Spiking neural networks (SNNs) promise ultra-low-power applications by exploiting temporal and spatial sparsity. The number of binary activations, called spikes, is proportional to the power consumed when executed on neuromorphic hardware. Training such SNNs using backpropagation through time for vision tasks that rely mainly on spatial features is computationally costly. Training a stateless artificial neural network (ANN) to then convert the weights to an SNN is a straightforward alternative when it comes to image recognition datasets. Most conversion methods rely on rate coding in the SNN to represent ANN activation, which uses enormous amounts of spikes and, therefore, energy to encode information. Recently, temporal conversion methods have shown promising results requiring significantly fewer spikes per neuron, but sometimes complex neuron models. We propose a temporal ANN-to-SNN conversion method, which we call Quartz, that is based on the time to first spike (TTFS). Quartz achieves high classification accuracy and can be easily implemented on neuromorphic hardware while using the least amount of synaptic operations and memory accesses. It incurs a cost of two additional synapses per neuron compared to previous temporal conversion methods, which are readily available on neuromorphic hardware. We benchmark Quartz on MNIST, CIFAR10, and ImageNet in simulation to show the benefits of our method and follow up with an implementation on Loihi, a neuromorphic chip by Intel. We provide evidence that temporal coding has advantages in terms of power consumption, throughput, and latency for similar classification accuracy. Our code and models are publicly available.
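To make the contrast between rate coding and time-to-first-spike (TTFS) coding concrete, here is a small sketch. It is not the Quartz conversion scheme: the linear mapping from activation to spike time, the window length `t_max`, and all function names are assumptions for illustration only.

```python
def clamp01(a):
    """Clamp an activation to the range [0, 1]."""
    return min(max(a, 0.0), 1.0)


def ttfs_encode(activation, t_max=100):
    """Assumed linear TTFS code: larger activations spike earlier, once."""
    return round((1.0 - clamp01(activation)) * t_max)


def ttfs_decode(spike_time, t_max=100):
    """Invert the assumed linear TTFS code."""
    return 1.0 - spike_time / t_max


def rate_spike_count(activation, t_max=100):
    """Rate code: the number of spikes in the window scales with activation."""
    return round(clamp01(activation) * t_max)


# Hypothetical usage: the same activation under both codes.
a = 0.75
spike_time = ttfs_encode(a)          # a single spike at this time step
spikes_needed = rate_spike_count(a)  # many spikes over the same window
```

Under these assumptions, a TTFS neuron emits one spike per inference regardless of the activation value, whereas the rate-coded neuron emits a number of spikes that grows with the activation, which is the source of the energy difference the abstract refers to.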

NeuroBench: Advancing Neuromorphic Computing through Collaborative, Fair and Representative Benchmarking

Apr 15, 2023

Abstract: The field of neuromorphic computing holds great promise in terms of advancing computing efficiency and capabilities by following brain-inspired principles. However, the rich diversity of techniques employed in neuromorphic research has resulted in a lack of clear standards for benchmarking, hindering effective evaluation of the advantages and strengths of neuromorphic methods compared to traditional deep-learning-based methods. This paper presents a collaborative effort, bringing together members from academia and the industry, to define benchmarks for neuromorphic computing: NeuroBench. The goals of NeuroBench are to be a collaborative, fair, and representative benchmark suite developed by the community, for the community. In this paper, we discuss the challenges associated with benchmarking neuromorphic solutions, and outline the key features of NeuroBench. We believe that NeuroBench will be a significant step towards defining standards that can unify the goals of neuromorphic computing and drive its technological progress. Please visit neurobench.ai for the latest updates on the benchmark tasks and metrics.

Speck: A Smart event-based Vision Sensor with a low latency 327K Neuron Convolutional Neuronal Network Processing Pipeline

Apr 13, 2023

Abstract: Edge computing solutions that enable the extraction of high-level information from a variety of sensors are in increasingly high demand. This is due to the increasing number of smart devices that require sensory processing for their application on the edge. To tackle this problem, we present a smart vision sensor System on Chip (SoC), featuring an event-based camera and a low-power asynchronous spiking Convolutional Neuronal Network (sCNN) computing architecture embedded on a single chip. By combining both sensor and processing on a single die, we can lower unit production costs significantly. Moreover, the simple end-to-end nature of the SoC facilitates small stand-alone applications as well as functioning as an edge node in a larger system. The event-driven nature of the vision sensor delivers high-speed signals in a sparse data stream. This is reflected in the processing pipeline, which focuses on optimising highly sparse computation and minimising latency for 9 sCNN layers to $3.36\mu s$. Overall, this results in an extremely low-latency visual processing pipeline deployed on a small form factor with a low energy budget and sensor cost. We present the asynchronous architecture, the individual blocks, the sCNN processing principle and benchmark against other sCNN capable processors.

EXODUS: Stable and Efficient Training of Spiking Neural Networks

May 20, 2022

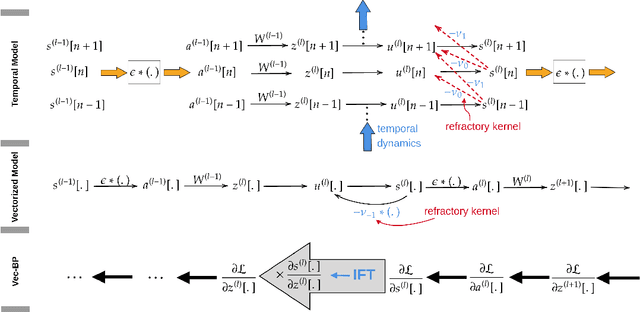

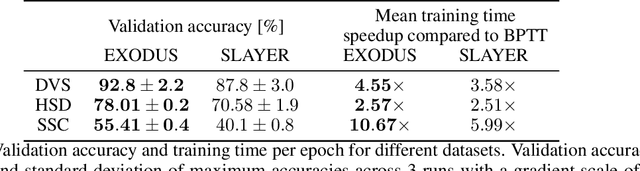

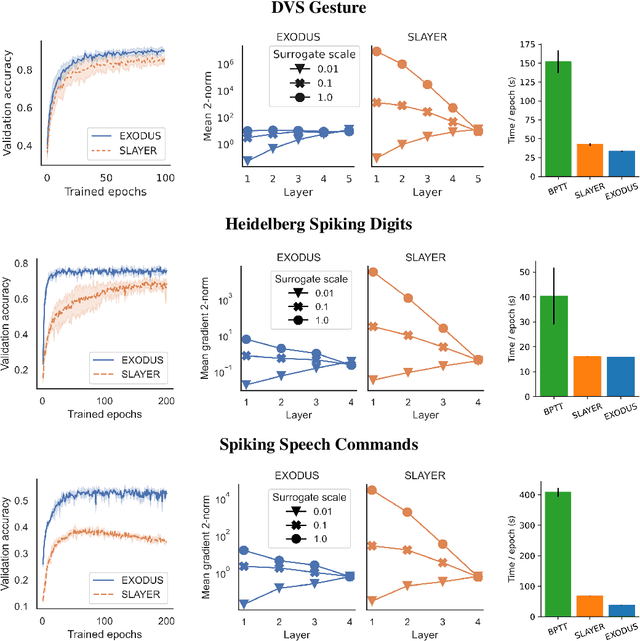

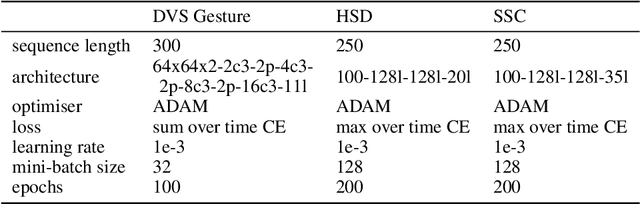

Abstract: Spiking Neural Networks (SNNs) are gaining significant traction in machine learning tasks where energy efficiency is of utmost importance. Training such networks using the state-of-the-art back-propagation through time (BPTT) is, however, very time-consuming. Previous work by Shrestha and Orchard [2018] employs an efficient GPU-accelerated back-propagation algorithm called SLAYER, which speeds up training considerably. SLAYER, however, does not take into account the neuron reset mechanism while computing the gradients, which we argue to be the source of numerical instability. To counteract this, SLAYER introduces a gradient scale hyperparameter across layers, which needs manual tuning. In this paper, (i) we modify SLAYER and design an algorithm called EXODUS that accounts for the neuron reset mechanism and applies the Implicit Function Theorem (IFT) to calculate the correct gradients (equivalent to those computed by BPTT), (ii) we eliminate the need for ad-hoc scaling of gradients, thus reducing the training complexity tremendously, and (iii) we demonstrate, via computer simulations, that EXODUS is numerically stable and achieves comparable or better performance than SLAYER, especially in various tasks with SNNs that rely on temporal features. Our code is available at https://github.com/synsense/sinabs-exodus.

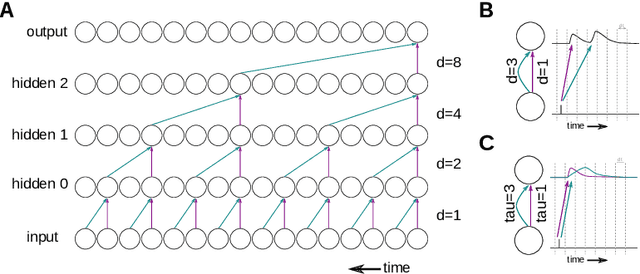

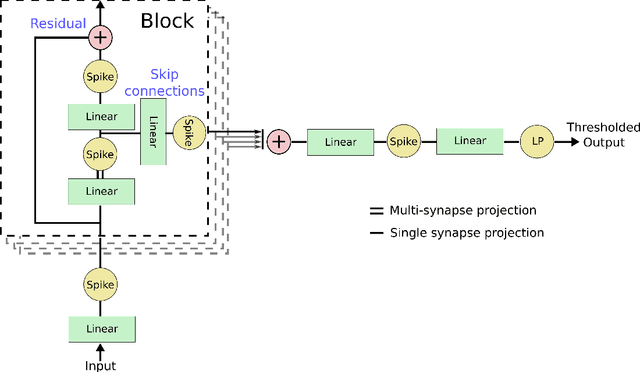

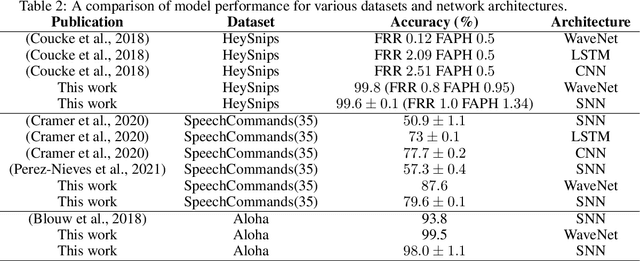

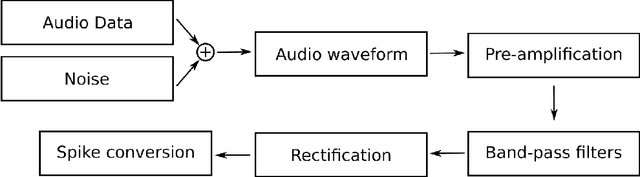

WaveSense: Efficient Temporal Convolutions with Spiking Neural Networks for Keyword Spotting

Nov 02, 2021

Abstract: Ultra-low power local signal processing is a crucial aspect for edge applications on always-on devices. Neuromorphic processors emulating spiking neural networks show great computational power while fulfilling the limited power budget as needed in this domain. In this work we propose spiking neural dynamics as a natural alternative to dilated temporal convolutions. We extend this idea to WaveSense, a spiking neural network inspired by the WaveNet architecture. WaveSense uses simple neural dynamics, fixed time-constants and a simple feed-forward architecture and hence is particularly well suited for a neuromorphic implementation. We test the capabilities of this model on several datasets for keyword-spotting. The results show that the proposed network beats the state of the art of other spiking neural networks and reaches near state-of-the-art performance of artificial neural networks such as CNNs and LSTMs.

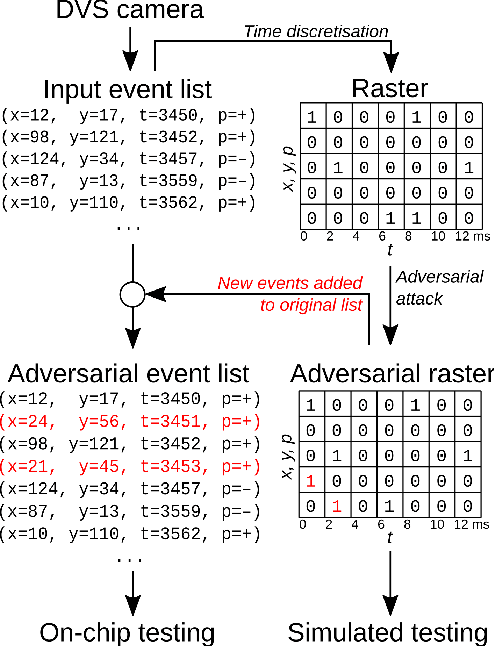

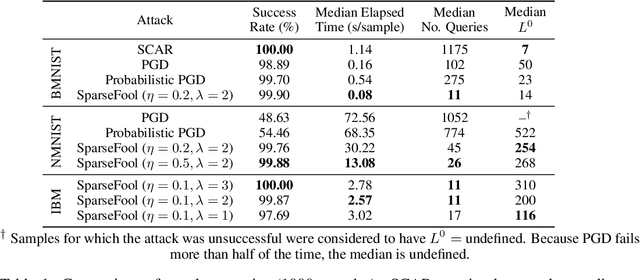

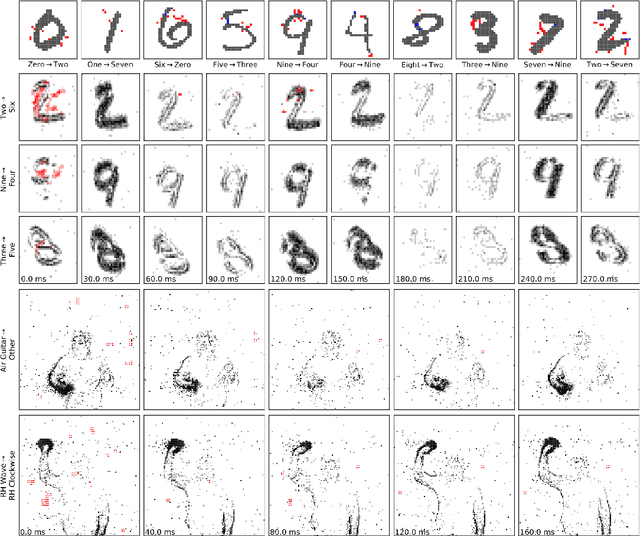

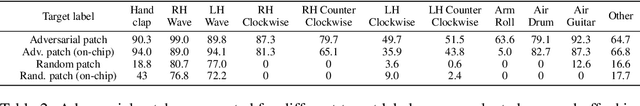

Adversarial Attacks on Spiking Convolutional Networks for Event-based Vision

Oct 06, 2021

Abstract: Event-based sensing using dynamic vision sensors is gaining traction in low-power vision applications. Spiking neural networks work well with the sparse nature of event-based data and suit deployment on low-power neuromorphic hardware. Because the field is nascent, the sensitivity of spiking neural networks to potentially malicious adversarial attacks has received very little attention so far. In this work, we show how white-box adversarial attack algorithms can be adapted to the discrete and sparse nature of event-based visual data, and to the continuous-time setting of spiking neural networks. We test our methods on the N-MNIST and IBM Gestures neuromorphic vision datasets and show that adversarial perturbations achieve a high success rate by injecting a relatively small number of appropriately placed events. We also verify, for the first time, the effectiveness of these perturbations directly on neuromorphic hardware. Finally, we discuss the properties of the resulting perturbations and possible future directions.

Optimizing the energy consumption of spiking neural networks for neuromorphic applications

Dec 03, 2019

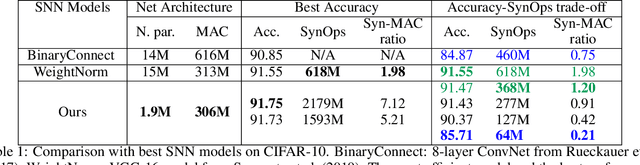

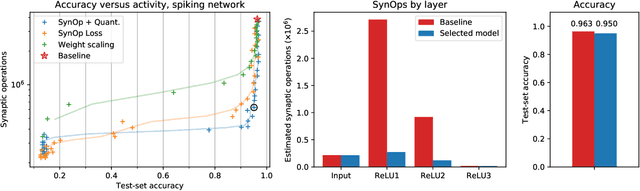

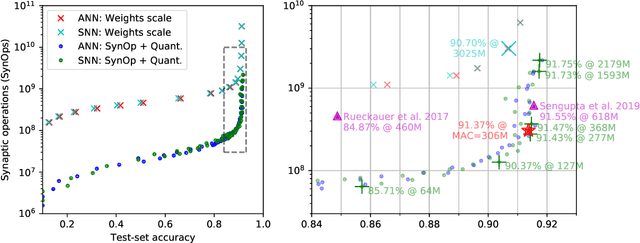

Abstract: In the last few years, spiking neural networks have been demonstrated to perform on par with regular convolutional neural networks. Several works have proposed methods to convert a pre-trained CNN to a Spiking CNN without a significant sacrifice of performance. We demonstrate first that quantization-aware training of CNNs leads to better accuracy in SNNs. One of the benefits of converting CNNs to spiking CNNs is to leverage the sparse computation of SNNs and consequently perform equivalent computation at a lower energy consumption. Here we propose an efficient optimization strategy to train spiking networks at lower energy consumption, while maintaining similar accuracy levels. We demonstrate results on the MNIST-DVS and CIFAR-10 datasets.
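One common way to reason about the energy of a spiking network is to count synaptic operations: each spike triggers one operation per outgoing connection. The sketch below assumes that proxy; the abstract does not state which energy measure the paper optimizes, and the function name, layer sizes, and spike counts here are hypothetical.

```python
def synaptic_operations(spike_counts, fanouts):
    """Total synaptic operations: spikes per layer times fan-out per neuron.

    spike_counts[i] is the number of spikes emitted by layer i during inference,
    fanouts[i] is the number of outgoing synapses per neuron in layer i.
    Both lists must have the same length.
    """
    if len(spike_counts) != len(fanouts):
        raise ValueError("spike_counts and fanouts must have the same length")
    return sum(s * f for s, f in zip(spike_counts, fanouts))


# Hypothetical two-layer network: a sparser first layer lowers the total.
dense_ops = synaptic_operations([10, 4], [100, 10])
sparse_ops = synaptic_operations([5, 4], [100, 10])
```

Under this proxy, reducing the spike count in layers with large fan-out has the largest effect on the estimated cost, which is why sparsity in early layers matters.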

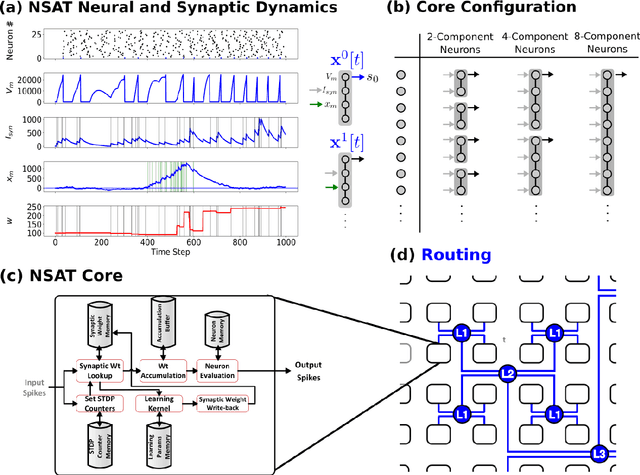

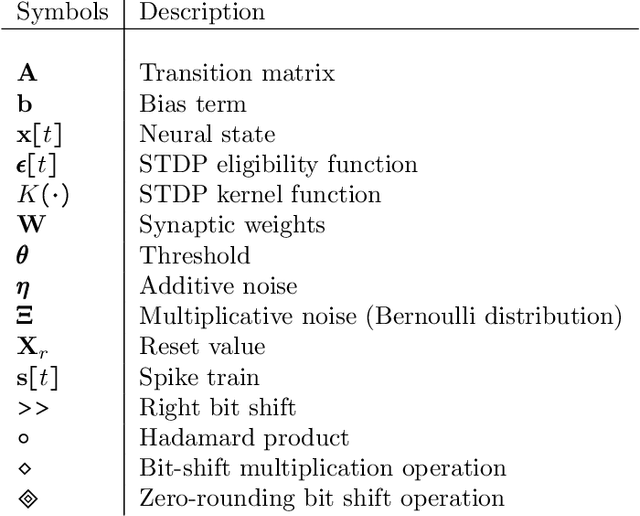

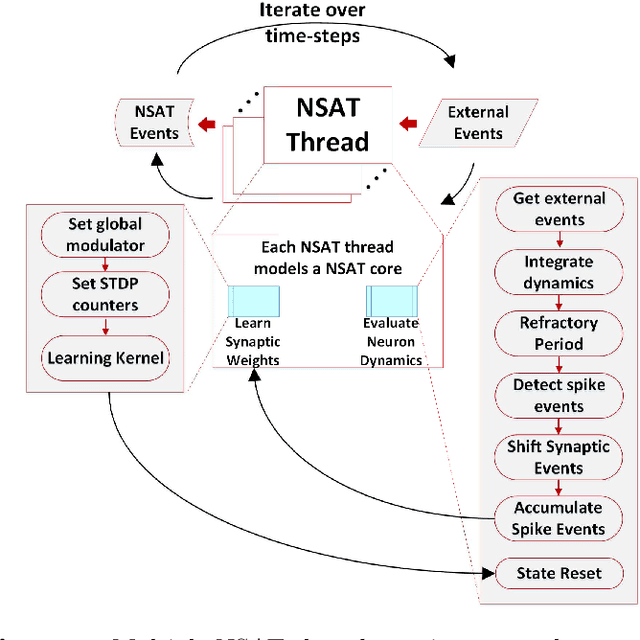

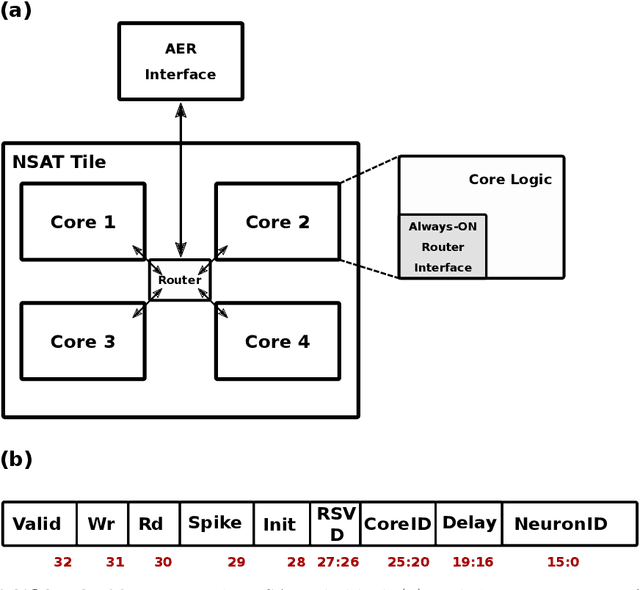

Neural and Synaptic Array Transceiver: A Brain-Inspired Computing Framework for Embedded Learning

Aug 08, 2018

Abstract: Embedded, continual learning for autonomous and adaptive behavior is a key application of neuromorphic hardware. However, neuromorphic implementations of embedded learning at large scales that are both flexible and efficient have been hindered by a lack of a suitable algorithmic framework. As a result, most neuromorphic hardware is trained off-line on large clusters of dedicated processors or GPUs and transferred post hoc to the device. We address this by introducing the neural and synaptic array transceiver (NSAT), a neuromorphic computational framework facilitating flexible and efficient embedded learning by matching algorithmic requirements and neural and synaptic dynamics. NSAT supports event-driven supervised, unsupervised and reinforcement learning algorithms including deep learning. We demonstrate the NSAT in a wide range of tasks, including the simulation of the Mihalas-Niebur neuron, dynamic neural fields, event-driven random back-propagation for event-based deep learning, event-based contrastive divergence for unsupervised learning, and voltage-based learning rules for sequence learning. We anticipate that this contribution will establish the foundation for a new generation of devices enabling adaptive mobile systems, wearable devices, and robots with data-driven autonomy.
