Jeffrey Krichmar

NeuroXplorer 1.0: An Extensible Framework for Architectural Exploration with Spiking Neural Networks

May 04, 2021

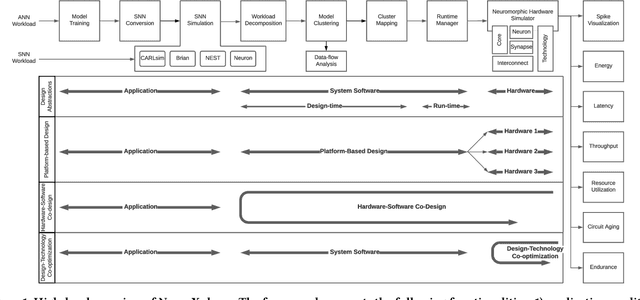

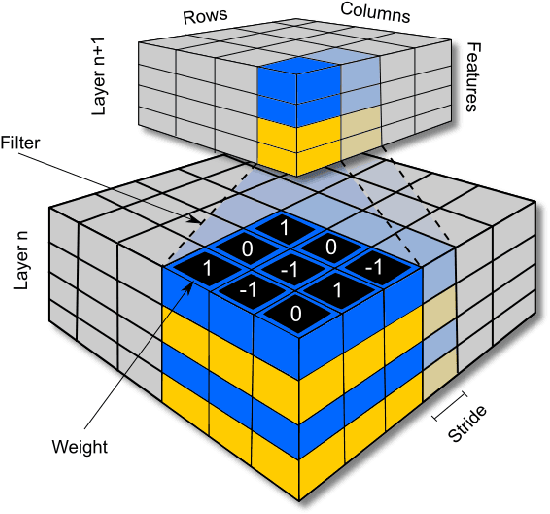

Abstract: Recently, both industry and academia have proposed many different neuromorphic architectures to execute applications that are designed with Spiking Neural Networks (SNNs). Consequently, there is a growing need for an extensible simulation framework that can perform architectural explorations with SNNs, including both platform-based design of today's hardware, and hardware-software co-design and design-technology co-optimization of the future. We present NeuroXplorer, a fast and extensible framework that is based on a generalized template for modeling a neuromorphic architecture that can be infused with the specific details of a given hardware and/or technology. NeuroXplorer can perform both low-level cycle-accurate architectural simulations and high-level analysis with data-flow abstractions. NeuroXplorer's optimization engine can incorporate hardware-oriented metrics such as energy, throughput, and latency, as well as SNN-oriented metrics such as inter-spike interval distortion and spike disorder, which directly impact SNN performance. We demonstrate the architectural exploration capabilities of NeuroXplorer through case studies with many state-of-the-art machine learning models.
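The abstract names inter-spike interval (ISI) distortion and spike disorder without defining them. The sketch below shows one plausible way to compute such metrics from spike timestamps; the definitions and the function names `isi_distortion` and `disorder_count` are assumptions for illustration, not NeuroXplorer's API.

```python
from typing import List, Sequence


def isi(times: Sequence[int]) -> List[int]:
    """Inter-spike intervals: gaps between consecutive spike times."""
    return [b - a for a, b in zip(times, times[1:])]


def isi_distortion(sent: Sequence[int], received: Sequence[int]) -> int:
    """Assumed definition: the largest absolute change in ISI between the
    spike train as sent and the same train as received."""
    if len(sent) != len(received):
        raise ValueError("spike trains must contain the same number of spikes")
    return max((abs(r - s) for s, r in zip(isi(sent), isi(received))), default=0)


def disorder_count(arrivals: Sequence[int]) -> int:
    """Assumed definition: number of adjacent spike pairs (listed in send
    order) where the later-sent spike arrives before the earlier one."""
    return sum(1 for a, b in zip(arrivals, arrivals[1:]) if b < a)


# Spikes sent every 10 time units; interconnect latency varies per spike.
sent = [0, 10, 20]
received = [2, 15, 22]
print(isi_distortion(sent, received))   # ISIs change from [10, 10] to [13, 7]
print(disorder_count([2, 15, 12]))      # the third spike overtakes the second
```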

Endurance-Aware Mapping of Spiking Neural Networks to Neuromorphic Hardware

Mar 09, 2021

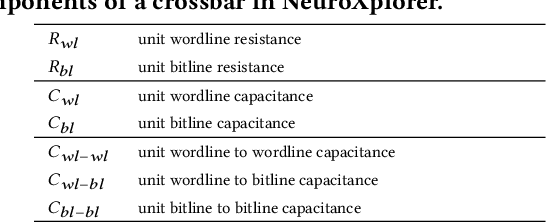

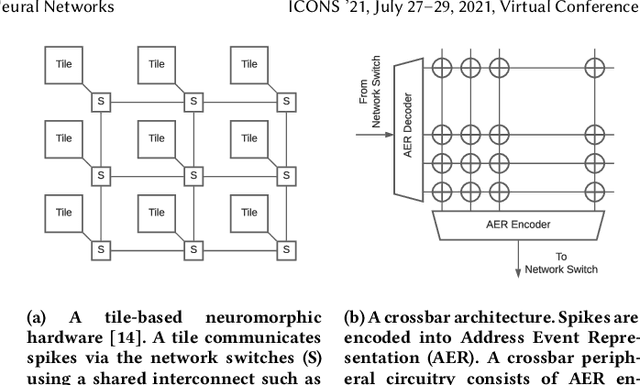

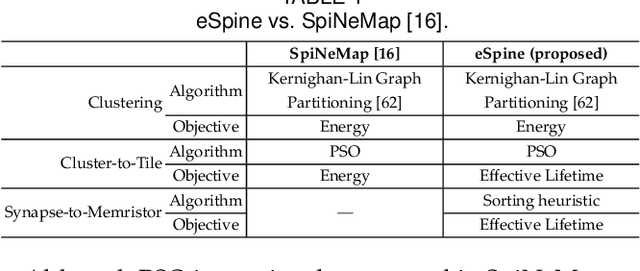

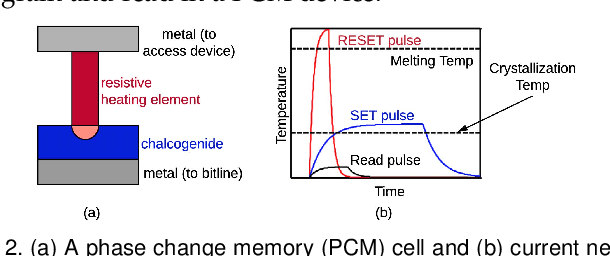

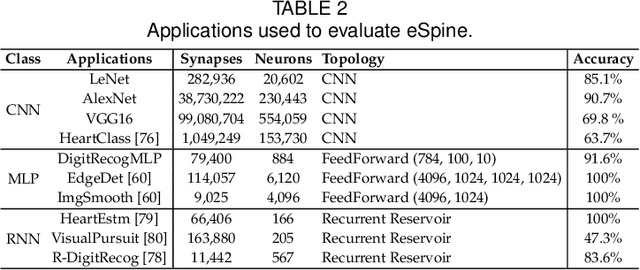

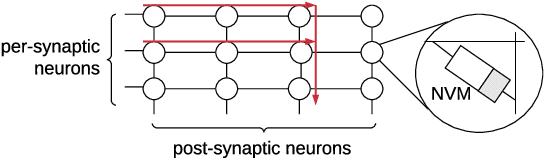

Abstract: Neuromorphic computing systems are embracing memristors to implement high-density, low-power synaptic storage as crossbar arrays in hardware. These systems are energy efficient in executing Spiking Neural Networks (SNNs). We observe that long bitlines and wordlines in a memristive crossbar are a major source of parasitic voltage drops, which create current asymmetry. Through circuit simulations, we show the significant endurance variation that results from this asymmetry. Therefore, if the critical memristors (those with lower endurance) are overutilized, the crossbar's lifetime may be reduced. We propose eSpine, a novel technique to improve lifetime by incorporating the endurance variation within each crossbar when mapping machine learning workloads, ensuring that synapses with higher activation are always implemented on memristors with higher endurance, and vice versa. eSpine works in two steps. First, it uses the Kernighan-Lin Graph Partitioning algorithm to partition a workload into clusters of neurons and synapses, where each cluster can fit in a crossbar. Second, it uses an instance of Particle Swarm Optimization (PSO) to map clusters to tiles, where the placement of synapses of a cluster to memristors of a crossbar is performed by analyzing their activation within the workload. We evaluate eSpine for a state-of-the-art neuromorphic hardware model with phase-change memory (PCM)-based memristors. Using 10 SNN workloads, we demonstrate a significant improvement in the effective lifetime.
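The placement rule stated in the abstract (higher-activation synapses go to higher-endurance memristors) can be illustrated for a single crossbar with a simple rank-matching sketch. This is not eSpine itself: it omits the Kernighan-Lin partitioning and the PSO cluster-to-tile mapping, and the names `endurance_aware_assignment` and `effective_lifetime`, as well as the lifetime model (endurance divided by activation count), are assumptions made for illustration.

```python
from typing import Dict


def endurance_aware_assignment(
    activations: Dict[str, float], endurances: Dict[str, float]
) -> Dict[str, str]:
    """Pair the most active synapses with the highest-endurance memristors."""
    if len(activations) > len(endurances):
        raise ValueError("more synapses than memristors in the crossbar")
    synapses = sorted(activations, key=activations.get, reverse=True)
    cells = sorted(endurances, key=endurances.get, reverse=True)
    return dict(zip(synapses, cells))


def effective_lifetime(
    assignment: Dict[str, str],
    activations: Dict[str, float],
    endurances: Dict[str, float],
) -> float:
    """Assumed model: the crossbar fails when its first memristor wears out,
    and a memristor lasts endurance / activation workload iterations."""
    return min(
        (endurances[cell] / activations[syn]
         for syn, cell in assignment.items() if activations[syn] > 0),
        default=float("inf"),
    )


# Hypothetical activation counts per synapse and endurance per memristor.
activations = {"s0": 10, "s1": 100, "s2": 1}
endurances = {"m0": 1e6, "m1": 1e8, "m2": 1e7}

aware = endurance_aware_assignment(activations, endurances)
naive = {"s0": "m0", "s1": "m1", "s2": "m2"}
print(aware)
print(effective_lifetime(aware, activations, endurances))
print(effective_lifetime(naive, activations, endurances))
```

In this toy example the rank-matched placement outlives the naive one because the weakest memristor is reserved for the least active synapse.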

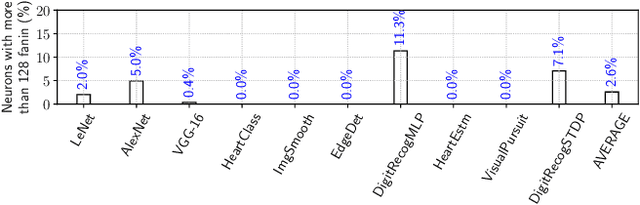

Enabling Resource-Aware Mapping of Spiking Neural Networks via Spatial Decomposition

Sep 19, 2020

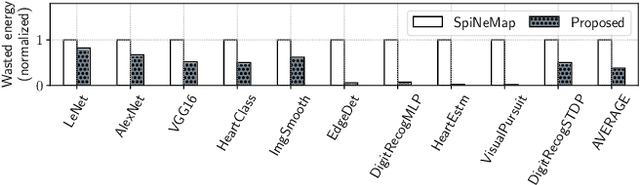

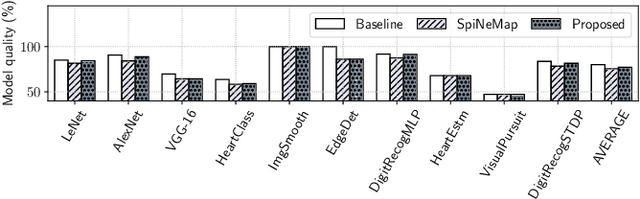

Abstract: With growing model complexity, mapping Spiking Neural Network (SNN)-based applications to tile-based neuromorphic hardware is becoming increasingly challenging. This is because the synaptic storage resources on a tile, viz. a crossbar, can accommodate only a fixed number of pre-synaptic connections per post-synaptic neuron. For complex SNN models that have many pre-synaptic connections per neuron, some connections may need to be pruned after training to fit onto the tile resources, leading to a loss in model quality, e.g., accuracy. In this work, we propose a novel unrolling technique that decomposes a neuron function with many pre-synaptic connections into a sequence of homogeneous neural units, where each neural unit is a function computation node with two pre-synaptic connections. This spatial decomposition technique significantly improves crossbar utilization and retains all pre-synaptic connections, avoiding the loss of model quality that connection pruning causes. We integrate the proposed technique within an existing SNN mapping framework and evaluate it using machine learning applications on the DYNAP-SE state-of-the-art neuromorphic hardware. Our results demonstrate an average 60% lower crossbar requirement, 9x higher synapse utilization, 62% lower wasted energy on the hardware, and between 0.8% and 4.6% increase in model quality.
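The unrolling idea can be sketched as a chain of two-input units that together reproduce a many-input neuron. The sketch below uses plain summation as the unit function and ignores spiking dynamics entirely; the helper names `unroll` and `evaluate` and the signal labels are hypothetical, not taken from the paper's framework.

```python
from typing import List, Sequence, Tuple


def unroll(n_inputs: int) -> List[Tuple[str, str]]:
    """Decompose an n-input neuron into a chain of two-input units.

    Each unit is a pair of source labels. The first unit reads two primary
    inputs; every later unit reads the previous unit's output plus one new
    primary input, so no unit has more than two pre-synaptic connections.
    """
    if n_inputs < 2:
        raise ValueError("need at least two inputs to form a unit")
    units = [("in0", "in1")]
    for i in range(2, n_inputs):
        units.append((f"u{len(units) - 1}", f"in{i}"))
    return units


def evaluate(units: Sequence[Tuple[str, str]], values: Sequence[float]) -> float:
    """Evaluate the chain, using addition as a stand-in unit function."""
    signals = {f"in{i}": v for i, v in enumerate(values)}
    for k, (left, right) in enumerate(units):
        signals[f"u{k}"] = signals[left] + signals[right]
    return signals[f"u{len(units) - 1}"]


inputs = [1, 2, 3, 4, 5]
chain = unroll(len(inputs))
print(chain)                    # four units, each with fan-in 2
print(evaluate(chain, inputs))  # matches sum(inputs)
```

Under these assumptions an n-input neuron needs n - 1 units, and every pre-synaptic connection is retained rather than pruned.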

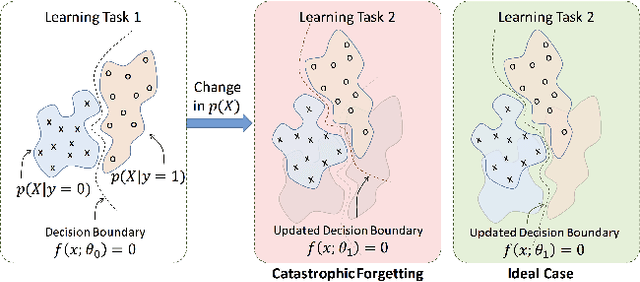

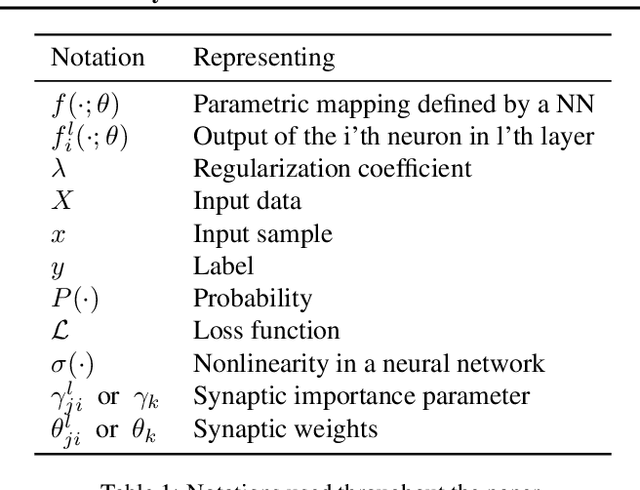

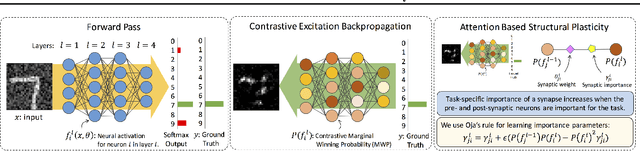

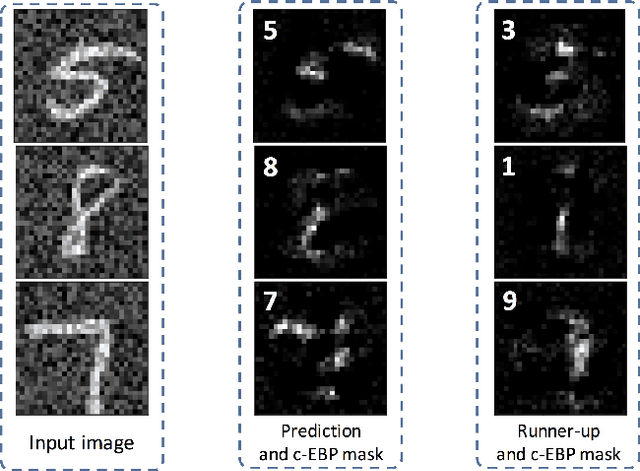

Attention-Based Structural-Plasticity

Mar 02, 2019

Abstract: Catastrophic forgetting/interference is a critical problem for lifelong learning machines: it prevents agents from retaining previously learned knowledge while learning new tasks. Neural networks, in particular, suffer severely from catastrophic forgetting. Recently, there have been several efforts toward overcoming catastrophic forgetting in neural networks. Here, we propose a biologically inspired method toward the same goal. Specifically, we define an attention-based selective plasticity of synapses based on the cholinergic neuromodulatory system in the brain. We define synaptic importance parameters in addition to synaptic weights and then use Hebbian learning in parallel with the backpropagation algorithm to learn synaptic importances in an online and seamless manner. We test our proposed method on benchmark tasks including the Permuted MNIST and the Split MNIST problems and show competitive performance compared to the state-of-the-art methods.

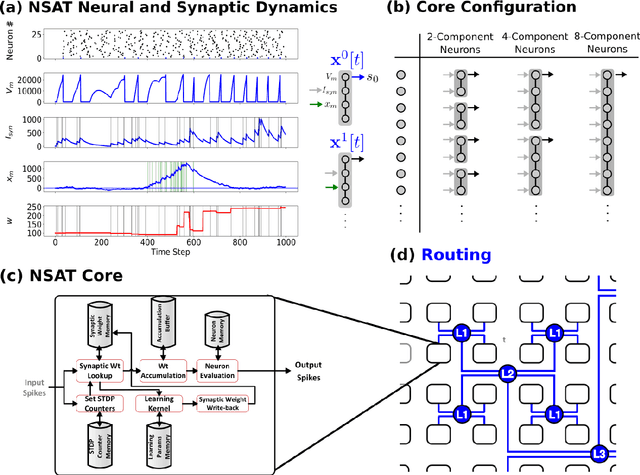

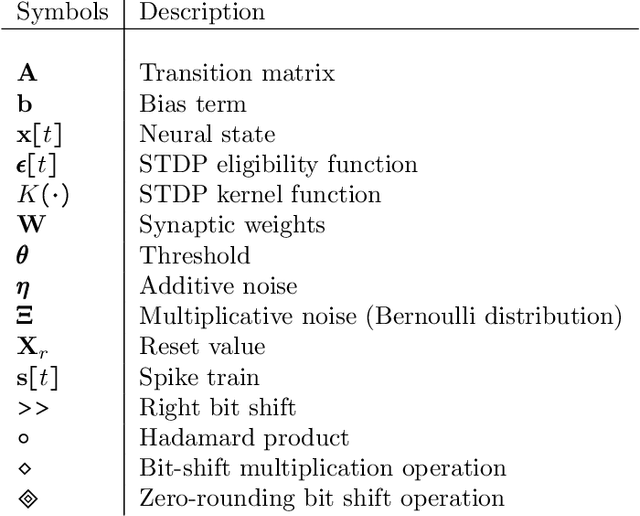

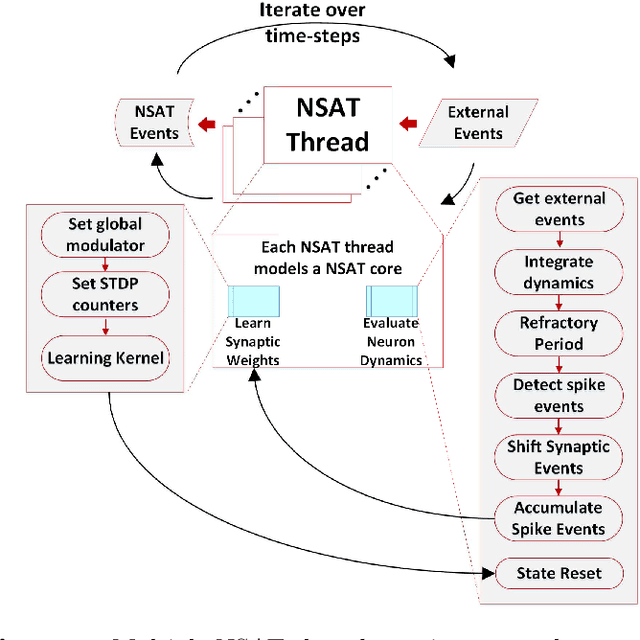

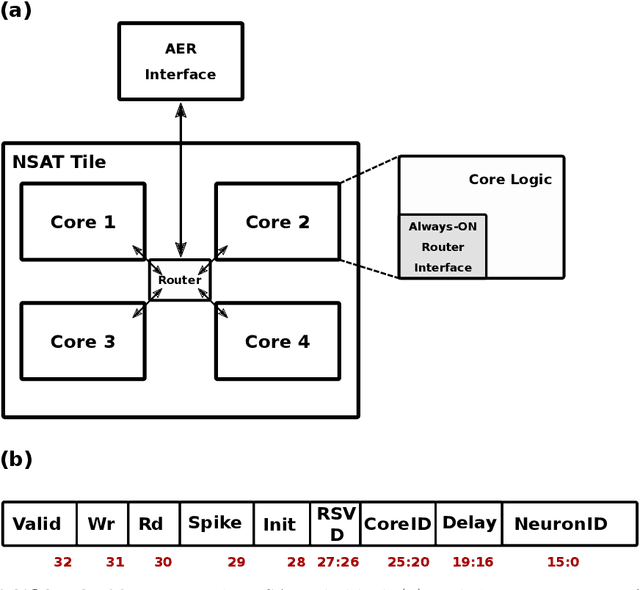

Neural and Synaptic Array Transceiver: A Brain-Inspired Computing Framework for Embedded Learning

Aug 08, 2018

Abstract: Embedded, continual learning for autonomous and adaptive behavior is a key application of neuromorphic hardware. However, neuromorphic implementations of embedded learning at large scales that are both flexible and efficient have been hindered by the lack of a suitable algorithmic framework. As a result, most neuromorphic hardware is trained off-line on large clusters of dedicated processors or GPUs and transferred post hoc to the device. We address this by introducing the neural and synaptic array transceiver (NSAT), a neuromorphic computational framework facilitating flexible and efficient embedded learning by matching algorithmic requirements and neural and synaptic dynamics. NSAT supports event-driven supervised, unsupervised and reinforcement learning algorithms including deep learning. We demonstrate the NSAT in a wide range of tasks, including the simulation of the Mihalas-Niebur neuron, dynamic neural fields, event-driven random back-propagation for event-based deep learning, event-based contrastive divergence for unsupervised learning, and voltage-based learning rules for sequence learning. We anticipate that this contribution will establish the foundation for a new generation of devices enabling adaptive mobile systems, wearable devices, and robots with data-driven autonomy.

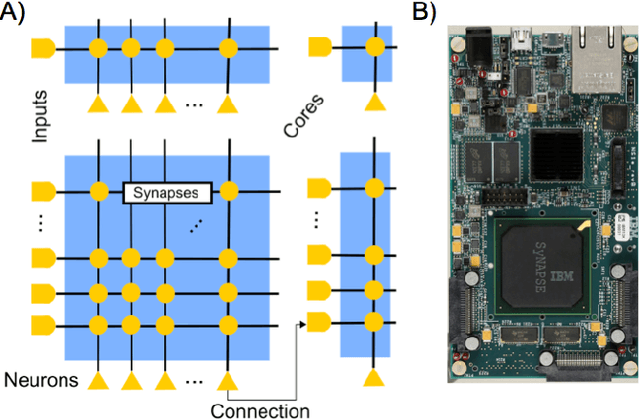

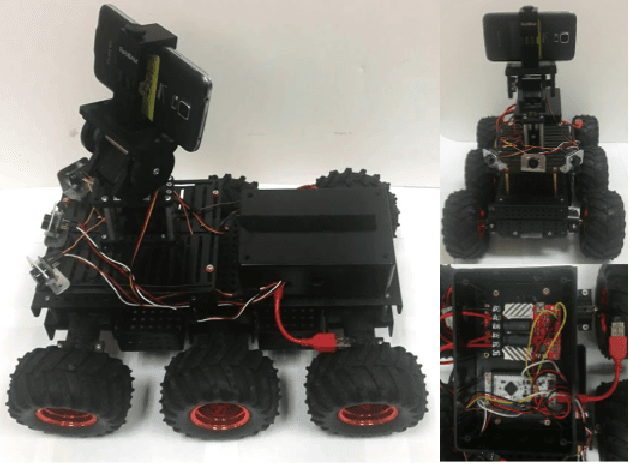

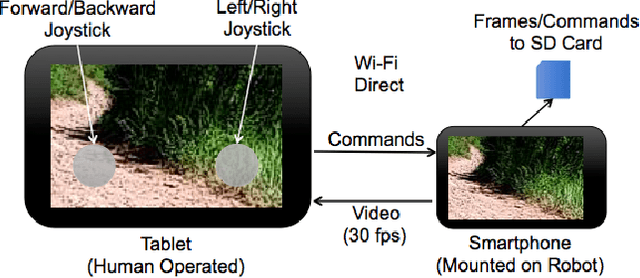

A Self-Driving Robot Using Deep Convolutional Neural Networks on Neuromorphic Hardware

Nov 04, 2016

Abstract: Neuromorphic computing is a promising solution for reducing the size, weight and power of mobile embedded systems. In this paper, we introduce a realization of such a system by creating the first closed-loop battery-powered communication system between an IBM TrueNorth NS1e and an autonomous Android-Based Robotics platform. Using this system, we constructed a dataset of path following behavior by manually driving the Android-Based robot along steep mountain trails and recording video frames from the camera mounted on the robot along with the corresponding motor commands. We used this dataset to train a deep convolutional neural network implemented on the TrueNorth NS1e. The NS1e, which was mounted on the robot and powered by the robot's battery, resulted in a self-driving robot that could successfully traverse a steep mountain path in real time. To our knowledge, this represents the first time the TrueNorth NS1e neuromorphic chip has been embedded on a mobile platform under closed-loop control.
