Emre Neftci

Dissecting Linear Recurrent Models: How Different Gating Strategies Drive Selectivity and Generalization

Jan 18, 2026

Abstract: Linear recurrent neural networks have emerged as efficient alternatives to the original Transformer's softmax attention mechanism, thanks to their highly parallelizable training and constant memory and computation requirements at inference. Iterative refinements of these models have introduced an increasing number of architectural mechanisms, leading to increased complexity and computational costs. Nevertheless, systematic direct comparisons among these models remain limited. Existing benchmark tasks are either too simplistic to reveal substantial differences or excessively resource-intensive for experimentation. In this work, we propose a refined taxonomy of linear recurrent models and introduce SelectivBench, a set of lightweight and customizable synthetic benchmark tasks for systematically evaluating sequence models. SelectivBench specifically evaluates selectivity in sequence models at small to medium scale, such as the capacity to focus on relevant inputs while ignoring context-based distractors. It employs rule-based grammars to generate sequences with adjustable complexity, incorporating irregular gaps that intentionally violate transition rules. Evaluations of linear recurrent models on SelectivBench reveal performance patterns consistent with results from large-scale language tasks. Our analysis clarifies the roles of essential architectural features: gating and rapid forgetting mechanisms facilitate recall, in-state channel mixing is unnecessary for selectivity, but critical for generalization, and softmax attention remains dominant due to its memory capacity scaling with sequence length. Our benchmark enables targeted, efficient exploration of linear recurrent models and provides a controlled setting for studying behaviors observed in large-scale evaluations. Code is available at https://github.com/symseqbench/selectivbench

Hybrid guided variational autoencoder for visual place recognition

Jan 14, 2026

Abstract: Autonomous agents such as cars, robots and drones need to precisely localize themselves in diverse environments, including in GPS-denied indoor environments. One approach for precise localization is visual place recognition (VPR), which estimates the place of an image based on previously seen places. State-of-the-art VPR models require large amounts of memory, making them unwieldy for mobile deployment, while more compact models lack robustness and generalization capabilities. This work overcomes these limitations for robotics using a combination of event-based vision sensors and a novel event-based guided variational autoencoder (VAE). The encoder part of our model is based on a spiking neural network model which is compatible with power-efficient low-latency neuromorphic hardware. The VAE successfully disentangles the visual features of 16 distinct places in our new indoor VPR dataset with a classification performance comparable to other state-of-the-art approaches, while also showing robust performance under various illumination conditions. When tested with novel visual inputs from unknown scenes, our model can distinguish between these places, which demonstrates a high generalization capability by learning the essential features of location. Our compact and robust guided VAE with generalization capabilities is a promising model for visual place recognition that can significantly enhance mobile robot navigation in known and unknown indoor environments.

SymSeqBench: a unified framework for the generation and analysis of rule-based symbolic sequences and datasets

Dec 31, 2025

Abstract: Sequential structure is a key feature of multiple domains of natural cognition and behavior, such as language, movement and decision-making. Likewise, it is also a central property of tasks to which we would like to apply artificial intelligence. It is therefore of great importance to develop frameworks that allow us to evaluate sequence learning and processing in a domain agnostic fashion, whilst simultaneously providing a link to formal theories of computation and computability. To address this need, we introduce two complementary software tools: SymSeq, designed to rigorously generate and analyze structured symbolic sequences, and SeqBench, a comprehensive benchmark suite of rule-based sequence processing tasks to evaluate the performance of artificial learning systems in cognitively relevant domains. In combination, SymSeqBench offers versatility in investigating sequential structure across diverse knowledge domains, including experimental psycholinguistics, cognitive psychology, behavioral analysis, neuromorphic computing and artificial intelligence. Due to its basis in Formal Language Theory (FLT), SymSeqBench provides researchers in multiple domains with a convenient and practical way to apply the concepts of FLT to conceptualize and standardize their experiments, thus advancing our understanding of cognition and behavior through shared computational frameworks and formalisms. The tool is modular, openly available and accessible to the research community.

QS4D: Quantization-aware training for efficient hardware deployment of structured state-space sequential models

Jul 08, 2025

Abstract: Structured State Space models (SSM) have recently emerged as a new class of deep learning models, particularly well-suited for processing long sequences. Their constant memory footprint, in contrast to the linearly scaling memory demands of Transformers, makes them attractive candidates for deployment on resource-constrained edge-computing devices. While recent works have explored the effect of quantization-aware training (QAT) on SSMs, they typically do not address its implications for specialized edge hardware, for example, analog in-memory computing (AIMC) chips. In this work, we demonstrate that QAT can significantly reduce the complexity of SSMs by up to two orders of magnitude across various performance metrics. We analyze the relation between model size and numerical precision, and show that QAT enhances robustness to analog noise and enables structural pruning. Finally, we integrate these techniques to deploy SSMs on a memristive analog in-memory computing substrate and highlight the resulting benefits in terms of computational efficiency.

Structured State Space Model Dynamics and Parametrization for Spiking Neural Networks

Jun 04, 2025

Abstract: Multi-state spiking neurons such as the adaptive leaky integrate-and-fire (AdLIF) neuron offer compelling alternatives to conventional deep learning models thanks to their sparse binary activations, second-order nonlinear recurrent dynamics, and efficient hardware realizations. However, such internal dynamics can cause instabilities during inference and training, often limiting performance and scalability. Meanwhile, state space models (SSMs) excel in long sequence processing using linear state-intrinsic recurrence resembling spiking neurons' subthreshold regime. Here, we establish a mathematical bridge between SSMs and second-order spiking neuron models. Based on structure and parametrization strategies of diagonal SSMs, we propose two novel spiking neuron models. The first extends the AdLIF neuron through timestep training and logarithmic reparametrization to facilitate training and improve final performance. The second additionally brings initialization and structure from complex-state SSMs, broadening the dynamical regime to oscillatory dynamics. Together, our two models achieve beyond or near state-of-the-art (SOTA) performances for reset-based spiking neuron models across both event-based and raw audio speech recognition datasets. We achieve this with a favorable number of parameters and required dynamic memory while maintaining high activity sparsity. Our models demonstrate enhanced scalability in network size and strike a favorable balance between performance and efficiency with respect to SSM models.

Contrastive Consolidation of Top-Down Modulations Achieves Sparsely Supervised Continual Learning

May 20, 2025

Abstract: Biological brains learn continually from a stream of unlabeled data, while integrating specialized information from sparsely labeled examples without compromising their ability to generalize. Meanwhile, machine learning methods are susceptible to catastrophic forgetting in this natural learning setting, as supervised specialist fine-tuning degrades performance on the original task. We introduce task-modulated contrastive learning (TMCL), which takes inspiration from the biophysical machinery in the neocortex, using predictive coding principles to integrate top-down information continually and without supervision. We follow the idea that these principles build a view-invariant representation space, and that this can be implemented using a contrastive loss. Then, whenever labeled samples of a new class occur, new affine modulations are learned that improve separation of the new class from all others, without affecting feedforward weights. By co-opting the view-invariance learning mechanism, we then train feedforward weights to match the unmodulated representation of a data sample to its modulated counterparts. This introduces modulation invariance into the representation space, and, by also using past modulations, stabilizes it. Our experiments show improvements in both class-incremental and transfer learning over state-of-the-art unsupervised approaches, as well as over comparable supervised approaches, using as few as 1% of available labels. Taken together, our work suggests that top-down modulations play a crucial role in balancing stability and plasticity.

A Grid Cell-Inspired Structured Vector Algebra for Cognitive Maps

Mar 11, 2025

Abstract: The entorhinal-hippocampal formation is the mammalian brain's navigation system, encoding both physical and abstract spaces via grid cells. This system is well-studied in neuroscience, and its efficiency and versatility make it attractive for applications in robotics and machine learning. While continuous attractor networks (CANs) successfully model entorhinal grid cells for encoding physical space, integrating both continuous spatial and abstract spatial computations into a unified framework remains challenging. Here, we attempt to bridge this gap by proposing a mechanistic model for versatile information processing in the entorhinal-hippocampal formation inspired by CANs and Vector Symbolic Architectures (VSAs), a neuro-symbolic computing framework. The novel grid-cell VSA (GC-VSA) model employs a spatially structured encoding scheme with 3D neuronal modules mimicking the discrete scales and orientations of grid cell modules, reproducing their characteristic hexagonal receptive fields. In experiments, the model demonstrates versatility in spatial and abstract tasks: (1) accurate path integration for tracking locations, (2) spatio-temporal representation for querying object locations and temporal relations, and (3) symbolic reasoning using family trees as a structured test case for hierarchical relationships.

A Truly Sparse and General Implementation of Gradient-Based Synaptic Plasticity

Jan 20, 2025

Abstract: Online synaptic plasticity rules derived from gradient descent achieve high accuracy on a wide range of practical tasks. However, their software implementation often requires tediously hand-derived gradients or the use of gradient backpropagation, which sacrifices the online capability of the rules. In this work, we present a custom automatic differentiation (AD) pipeline for sparse and online implementation of gradient-based synaptic plasticity rules that generalizes to arbitrary neuron models. Our work brings the programming ease of backpropagation-type methods to forward AD while remaining memory-efficient. To achieve this, we exploit the advantageous compute and memory scaling of online synaptic plasticity by providing an inherently sparse implementation of AD where expensive tensor contractions are replaced with simple element-wise multiplications if the tensors are diagonal. Gradient-based synaptic plasticity rules such as eligibility propagation (e-prop) have exactly this property and thus profit immensely from this feature. We demonstrate the alignment of our gradients with those of gradient backpropagation on a synthetic task where e-prop gradients are exact, as well as on audio speech classification benchmarks. We demonstrate how memory utilization scales with network size without dependence on the sequence length, as expected from forward AD methods.
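The replacement of tensor contractions by element-wise multiplications for diagonal tensors can be illustrated with a minimal sketch. It is not taken from the paper's AD pipeline; the function names `dense_contract` and `diagonal_contract` are hypothetical, and the example only shows the general identity that multiplying by a diagonal matrix equals an element-wise product with its diagonal.

```python
def dense_contract(J, e):
    """General case: full matrix-vector contraction, O(n^2) work and storage."""
    n = len(e)
    return [sum(J[i][k] * e[k] for k in range(n)) for i in range(len(J))]


def diagonal_contract(d, e):
    """Diagonal case: only the diagonal d is stored, O(n) work and storage."""
    return [d_i * e_i for d_i, e_i in zip(d, e)]


# Hypothetical example values: d is the diagonal of a Jacobian-like matrix J,
# e is a trace vector that the matrix is applied to.
d = [0.5, 2.0, -1.0]
e = [4.0, 3.0, 2.0]
J = [[d[i] if i == j else 0.0 for j in range(3)] for i in range(3)]

# Both routes give the same result; the diagonal route never builds J.
assert dense_contract(J, e) == diagonal_contract(d, e)
```

For a state of size n, the dense route touches n² entries while the diagonal route touches n, which is the kind of saving the abstract attributes to rules with diagonal structure.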

Optimal Gradient Checkpointing for Sparse and Recurrent Architectures using Off-Chip Memory

Dec 16, 2024

Abstract: Recurrent neural networks (RNNs) are valued for their computational efficiency and reduced memory requirements on tasks involving long sequence lengths but require high memory-processor bandwidth to train. Checkpointing techniques can reduce the memory requirements by only storing a subset of intermediate states, the checkpoints, but are still rarely used due to the computational overhead of the additional recomputation phase. This work addresses these challenges by introducing memory-efficient gradient checkpointing strategies tailored for the general class of sparse RNNs and Spiking Neural Networks (SNNs). SNNs are energy-efficient alternatives to RNNs thanks to their local, event-driven operation and potential neuromorphic implementation. We use the Intelligence Processing Unit (IPU) as an exemplary platform for architectures with distributed local memory. We exploit its suitability for sparse and irregular workloads to scale SNN training on long sequence lengths. We find that Double Checkpointing emerges as the most effective method, optimizing the use of local memory resources while minimizing recomputation overhead. This approach reduces dependency on slower large-scale memory access, enabling training on sequences over 10 times longer or 4 times larger networks than previously feasible, with only marginal time overhead. The presented techniques demonstrate significant potential to enhance scalability and efficiency in training sparse and recurrent networks across diverse hardware platforms, and highlight the benefits of sparse activations for scalable recurrent neural network training.
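The basic idea of storing only a subset of intermediate states and recomputing the rest can be sketched as follows. This is plain single-level checkpointing on a toy scalar recurrence, not the Double Checkpointing strategy or the IPU implementation described in the abstract; the recurrence `h = tanh(w * h + x)`, the loss (the final state), and all function names are assumptions chosen for illustration.

```python
import math


def step(w, h, x):
    """One step of a toy scalar RNN (assumed model, for illustration only)."""
    return math.tanh(w * h + x)


def grad_full(w, xs, h0=0.0):
    """Reference BPTT: stores every hidden state, memory O(T)."""
    hs = [h0]
    for x in xs:
        hs.append(step(w, hs[-1], x))
    dw, dh = 0.0, 1.0  # loss is the final hidden state, so dL/dh_T = 1
    for t in reversed(range(len(xs))):
        da = dh * (1.0 - hs[t + 1] ** 2)  # backprop through tanh
        dw += da * hs[t]
        dh = da * w
    return dw


def grad_checkpointed(w, xs, k, h0=0.0):
    """BPTT that stores only every k-th state and recomputes each segment."""
    ckpts = {0: h0}
    h = h0
    for t, x in enumerate(xs):
        h = step(w, h, x)
        if (t + 1) % k == 0:
            ckpts[t + 1] = h  # keep a checkpoint, drop everything else
    dw, dh = 0.0, 1.0
    end = len(xs)
    while end > 0:
        start = ((end - 1) // k) * k  # nearest checkpoint strictly before end
        hs = [ckpts[start]]
        for t in range(start, end):  # recomputation phase for this segment
            hs.append(step(w, hs[-1], xs[t]))
        for t in reversed(range(start, end)):
            da = dh * (1.0 - hs[t - start + 1] ** 2)
            dw += da * hs[t - start]
            dh = da * w
        end = start
    return dw


xs = [0.1 * i for i in range(10)]
assert abs(grad_full(0.7, xs) - grad_checkpointed(0.7, xs, k=3)) < 1e-12
```

With checkpoint spacing `k`, this sketch holds roughly `T / k` checkpoints plus at most `k` recomputed states at a time, at the cost of one extra forward pass over the sequence.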

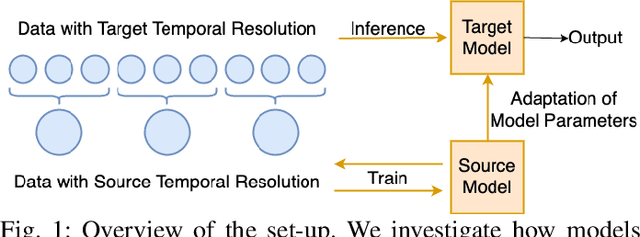

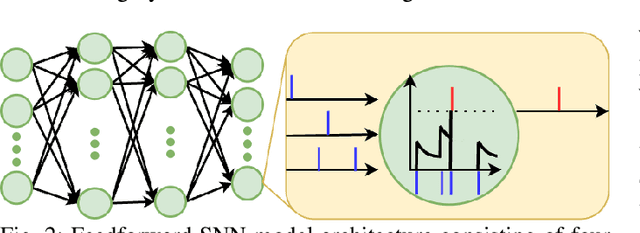

Zero-Shot Temporal Resolution Domain Adaptation for Spiking Neural Networks

Nov 07, 2024

Abstract: Spiking Neural Networks (SNNs) are biologically-inspired deep neural networks that efficiently extract temporal information while offering promising gains in terms of energy efficiency and latency when deployed on neuromorphic devices. However, SNN model parameters are sensitive to temporal resolution, leading to significant performance drops when the temporal resolution of target data at the edge differs from that of the pre-deployment source data used for training, especially when fine-tuning is not possible at the edge. To address this challenge, we propose three novel domain adaptation methods for adapting neuron parameters to account for the change in time resolution without re-training on the target time resolution. The proposed methods are based on a mapping between neuron dynamics in SNNs and State Space Models (SSMs), and are applicable to general neuron models. We evaluate the proposed methods on spatio-temporal data tasks, namely the audio keyword spotting datasets SHD and MSWC as well as the image classification NMNIST dataset. Our methods provide an alternative to, and in the majority of cases significantly outperform, the existing reference method that simply scales the time constant. Moreover, our results show that high accuracy on high temporal resolution data can be obtained by time-efficient training on lower temporal resolution data and model adaptation.
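The reference method mentioned above, scaling the time constant, can be sketched under the assumption that a leaky neuron's per-step decay factor is `alpha = exp(-dt / tau)`. The abstract does not state this parametrization, so it and the function name `rescale_decay` are illustrative assumptions; the three proposed adaptation methods are not shown here.

```python
import math


def rescale_decay(alpha, dt_src, dt_tgt):
    """Map a per-step decay factor from step size dt_src to dt_tgt.

    Assumes alpha = exp(-dt_src / tau), so the physical time constant tau
    stays fixed while the step size changes.
    """
    tau = -dt_src / math.log(alpha)
    return math.exp(-dt_tgt / tau)


# Hypothetical values: a neuron trained with 1 ms steps, deployed on 2 ms steps.
alpha_src = 0.9
alpha_tgt = rescale_decay(alpha_src, dt_src=1.0, dt_tgt=2.0)

# One coarse step should decay the state as much as two fine steps.
assert abs(alpha_tgt - alpha_src ** 2) < 1e-12
```

This rescaling only matches the input-free decay between the two resolutions; how inputs, thresholds, and resets behave at the new step size is outside what this sketch covers.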
