Michael Neumeier

EEvAct: Early Event-Based Action Recognition with High-Rate Two-Stream Spiking Neural Networks

Jul 10, 2025

Abstract: Recognizing human activities early is crucial for the safety and responsiveness of human-robot and human-machine interfaces. Due to their high temporal resolution and low latency, event-based vision sensors are a perfect match for this early recognition demand. However, most existing processing approaches accumulate events into low-rate frames or space-time voxels, which limits their early prediction capabilities. In contrast, spiking neural networks (SNNs) can process the events at a high rate for early predictions, but most works still fall short on final accuracy. In this work, we introduce a high-rate two-stream SNN which closes this gap by outperforming previous work by 2% in final accuracy on the large-scale THU EACT-50 dataset. We benchmark the SNNs within a novel early event-based recognition framework by reporting Top-1 and Top-5 recognition scores for growing observation time. Finally, we exemplify the impact of these methods on a real-world task of early action triggering for human motion capture in sports.

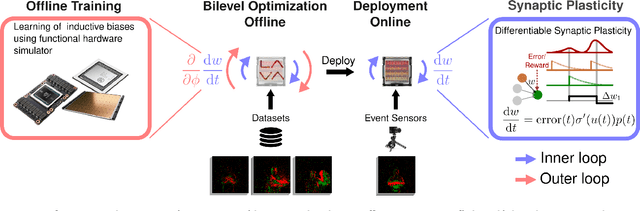

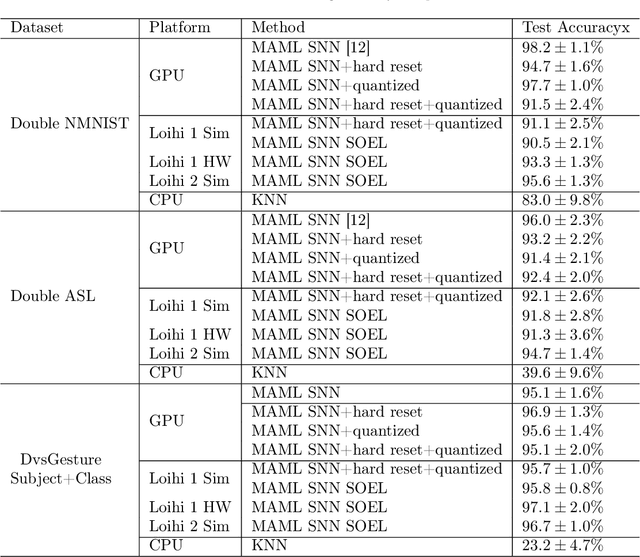

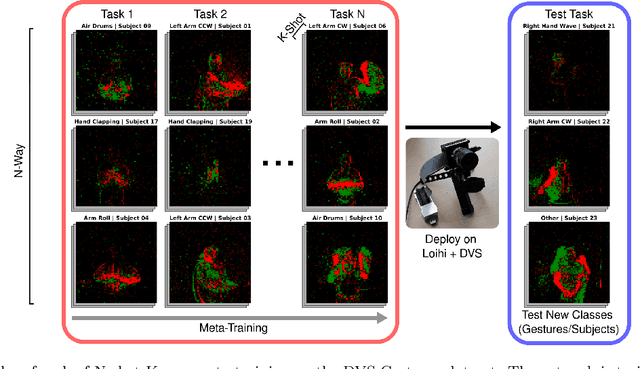

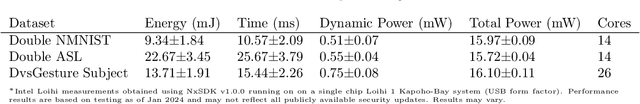

Emulating Brain-like Rapid Learning in Neuromorphic Edge Computing

Aug 28, 2024

Abstract: Achieving personalized intelligence at the edge with real-time learning capabilities holds enormous promise for enhancing our daily experiences and supporting decision making, planning, and sensing. However, efficient and reliable edge learning remains difficult with current technology due to the lack of personalized data, insufficient hardware capabilities, and inherent challenges posed by online learning. Over time and across multiple developmental stages, the brain has evolved to efficiently incorporate new knowledge by gradually building on previous knowledge. In this work, we emulate the multiple stages of learning with digital neuromorphic technology that simulates the neural and synaptic processes of the brain using two stages of learning. First, a meta-training stage trains the hyperparameters of synaptic plasticity for one-shot learning using a differentiable simulation of the neuromorphic hardware. This meta-training process refines a hardware-local three-factor synaptic plasticity rule and its associated hyperparameters to align with the trained task domain. In a subsequent deployment stage, these optimized hyperparameters enable fast, data-efficient, and accurate learning of new classes. We demonstrate our approach using event-driven vision sensor data and the Intel Loihi neuromorphic processor with its plasticity dynamics, achieving real-time one-shot learning of new classes that is vastly improved over transfer learning. Our methodology can be deployed with arbitrary plasticity models and can be applied to situations demanding quick learning and adaptation at the edge, such as navigating unfamiliar environments or learning unexpected categories of data through user engagement.
