Garrick Orchard

Emulating Brain-like Rapid Learning in Neuromorphic Edge Computing

Aug 28, 2024

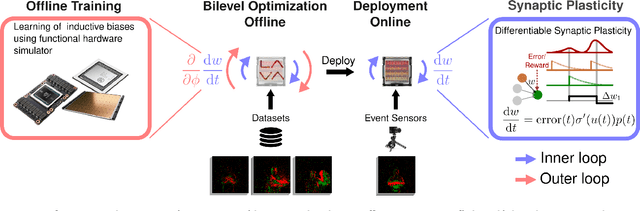

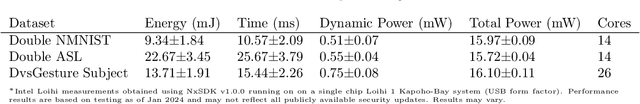

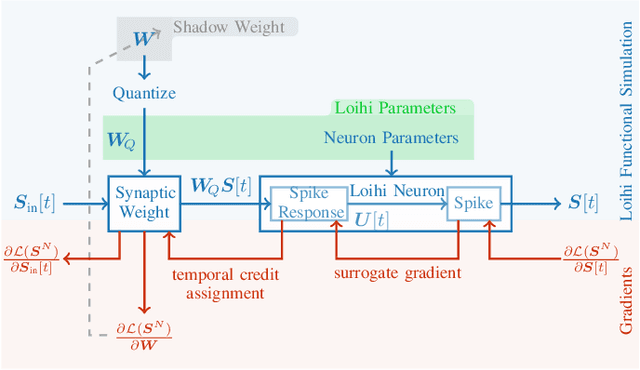

Abstract: Achieving personalized intelligence at the edge with real-time learning capabilities holds enormous promise in enhancing our daily experiences and helping decision making, planning, and sensing. However, efficient and reliable edge learning remains difficult with current technology due to the lack of personalized data, insufficient hardware capabilities, and inherent challenges posed by online learning. Over time and across multiple developmental stages, the brain has evolved to efficiently incorporate new knowledge by gradually building on previous knowledge. In this work, we emulate the multiple stages of learning with digital neuromorphic technology that simulates the neural and synaptic processes of the brain using two stages of learning. First, a meta-training stage trains the hyperparameters of synaptic plasticity for one-shot learning using a differentiable simulation of the neuromorphic hardware. This meta-training process refines a hardware-local three-factor synaptic plasticity rule and its associated hyperparameters to align with the trained task domain. In a subsequent deployment stage, these optimized hyperparameters enable fast, data-efficient, and accurate learning of new classes. We demonstrate our approach using event-driven vision sensor data and the Intel Loihi neuromorphic processor with its plasticity dynamics, achieving real-time one-shot learning of new classes that is vastly improved over transfer learning. Our methodology can be deployed with arbitrary plasticity models and can be applied to situations demanding quick learning and adaptation at the edge, such as navigating unfamiliar environments or learning unexpected categories of data through user engagement.

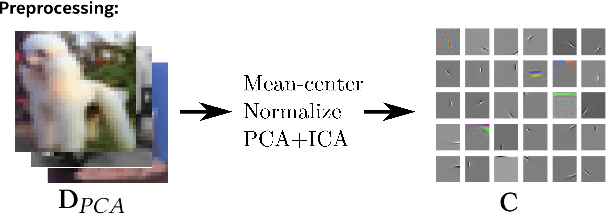

Ultra-low-power Image Classification on Neuromorphic Hardware

Sep 28, 2023

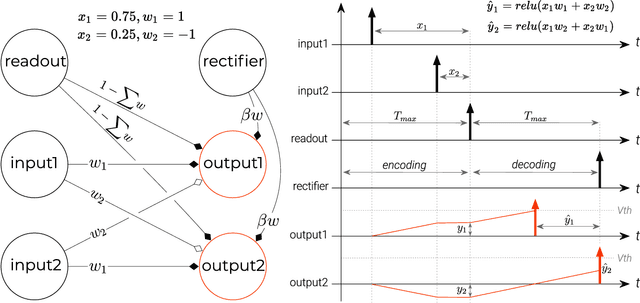

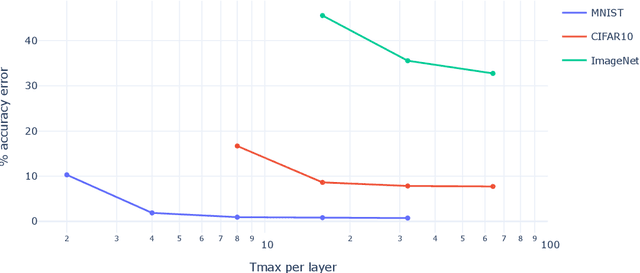

Abstract: Spiking neural networks (SNNs) promise ultra-low-power applications by exploiting temporal and spatial sparsity. The number of binary activations, called spikes, is proportional to the power consumed when executed on neuromorphic hardware. Training such SNNs using backpropagation through time for vision tasks that rely mainly on spatial features is computationally costly. Training a stateless artificial neural network (ANN) to then convert the weights to an SNN is a straightforward alternative when it comes to image recognition datasets. Most conversion methods rely on rate coding in the SNN to represent ANN activation, which uses enormous amounts of spikes and, therefore, energy to encode information. Recently, temporal conversion methods have shown promising results requiring significantly fewer spikes per neuron, but sometimes complex neuron models. We propose a temporal ANN-to-SNN conversion method, which we call Quartz, that is based on the time to first spike (TTFS). Quartz achieves high classification accuracy and can be easily implemented on neuromorphic hardware while using the least amount of synaptic operations and memory accesses. It incurs a cost of two additional synapses per neuron compared to previous temporal conversion methods, which are readily available on neuromorphic hardware. We benchmark Quartz on MNIST, CIFAR10, and ImageNet in simulation to show the benefits of our method and follow up with an implementation on Loihi, a neuromorphic chip by Intel. We provide evidence that temporal coding has advantages in terms of power consumption, throughput, and latency for similar classification accuracy. Our code and models are publicly available.
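To make the time-to-first-spike idea concrete, here is a minimal sketch of a generic TTFS code in which a larger activation produces an earlier spike. It is not the Quartz conversion scheme itself; the function names `ttfs_encode` and `ttfs_decode`, the window length `t_max`, and the linear mapping are all assumptions chosen for illustration.

```python
import numpy as np

def ttfs_encode(activations, t_max=1.0):
    """Map activations in [0, 1] to spike times; larger values spike earlier.

    Assumed linear code for illustration: t = t_max * (1 - a).
    """
    a = np.clip(np.asarray(activations, dtype=float), 0.0, 1.0)
    return t_max * (1.0 - a)

def ttfs_decode(spike_times, t_max=1.0):
    """Invert the assumed linear code: a = 1 - t / t_max."""
    t = np.asarray(spike_times, dtype=float)
    return 1.0 - t / t_max

acts = np.array([0.0, 0.25, 1.0])
times = ttfs_encode(acts)       # one spike per neuron, at most
recovered = ttfs_decode(times)  # matches acts up to floating-point error
```

Each value is carried by a single spike time, whereas a rate code would need many spikes per neuron to reach comparable precision.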

The Intel Neuromorphic DNS Challenge

Mar 17, 2023

Abstract: A critical enabler for progress in neuromorphic computing research is the ability to transparently evaluate different neuromorphic solutions on important tasks and to compare them to state-of-the-art conventional solutions. The Intel Neuromorphic Deep Noise Suppression Challenge (Intel N-DNS Challenge), inspired by the Microsoft DNS Challenge, tackles a ubiquitous and commercially relevant task: real-time audio denoising. Audio denoising is likely to reap the benefits of neuromorphic computing due to its low-bandwidth, temporal nature and its relevance for low-power devices. The Intel N-DNS Challenge consists of two tracks: a simulation-based algorithmic track to encourage algorithmic innovation, and a neuromorphic hardware (Loihi 2) track to rigorously evaluate solutions. For both tracks, we specify an evaluation methodology based on energy, latency, and resource consumption in addition to output audio quality. We make the Intel N-DNS Challenge dataset scripts and evaluation code freely accessible, encourage community participation with monetary prizes, and release a neuromorphic baseline solution which shows promising audio quality, high power efficiency, and low resource consumption when compared to Microsoft NsNet2 and a proprietary Intel denoising model used in production. We hope the Intel N-DNS Challenge will hasten innovation in neuromorphic algorithms research, especially in the area of training tools and methods for real-time signal processing. We expect the winners of the challenge will demonstrate that for problems like audio denoising, significant gains in power and resources can be realized on neuromorphic devices available today compared to conventional state-of-the-art solutions.

Efficient Neuromorphic Signal Processing with Loihi 2

Nov 05, 2021

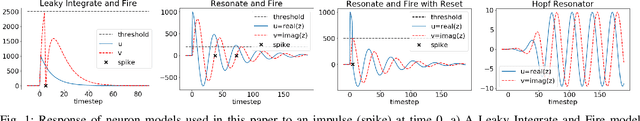

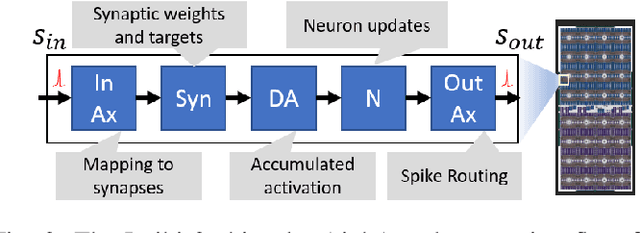

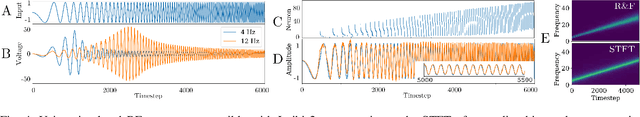

Abstract: The biologically inspired spiking neurons used in neuromorphic computing are nonlinear filters with dynamic state variables, very different from the stateless neuron models used in deep learning. The next version of Intel's neuromorphic research processor, Loihi 2, supports a wide range of stateful spiking neuron models with fully programmable dynamics. Here we showcase advanced spiking neuron models that can be used to efficiently process streaming data in simulation experiments on emulated Loihi 2 hardware. In one example, Resonate-and-Fire (RF) neurons are used to compute the Short Time Fourier Transform (STFT) with similar computational complexity but 47x less output bandwidth than the conventional STFT. In another example, we describe an algorithm for optical flow estimation using spatiotemporal RF neurons that requires over 90x fewer operations than a conventional DNN-based solution. We also demonstrate promising preliminary results using backpropagation to train RF neurons for audio classification tasks. Finally, we show that a cascade of Hopf resonators (a variant of the RF neuron) replicates novel properties of the cochlea and motivates an efficient spike-based spectrogram encoder.
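The frequency selectivity behind the RF-neuron STFT can be sketched with a single damped complex oscillator. This is a simplified illustration that omits spiking and reset, and it is not the Loihi 2 neuron model; the function name `rf_response`, the `decay` rate, and the discrete update rule are assumptions.

```python
import numpy as np

def rf_response(signal, freq_hz, dt, decay=5.0):
    """Drive one resonator tuned to freq_hz and return its peak state magnitude.

    Assumed update: z <- exp((-decay + 2j*pi*freq_hz) * dt) * z + dt * x
    """
    step = np.exp((-decay + 2j * np.pi * freq_hz) * dt)
    z = 0j
    peak = 0.0
    for x in signal:
        z = step * z + dt * x      # rotate and damp the state, then add input
        peak = max(peak, abs(z))
    return peak

dt = 1e-3
t = np.arange(0.0, 1.0, dt)
tone_10hz = np.sin(2 * np.pi * 10.0 * t)
tone_25hz = np.sin(2 * np.pi * 25.0 * t)

on_resonance = rf_response(tone_10hz, freq_hz=10.0, dt=dt)
off_resonance = rf_response(tone_25hz, freq_hz=10.0, dt=dt)
```

The state magnitude grows much larger when the input frequency matches the neuron's tuning, so a bank of such neurons with different `freq_hz` values behaves like a set of spectral channels.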

e-TLD: Event-based Framework for Dynamic Object Tracking

Sep 02, 2020

Abstract: This paper presents a long-term object tracking framework with a moving event camera under general tracking conditions. A first of its kind for these revolutionary cameras, the tracking framework uses a discriminative representation for the object with online learning, and detects and re-tracks the object when it comes back into the field-of-view. One of the key novelties is the use of an event-based local sliding window technique that tracks reliably in scenes with cluttered and textured background. In addition, Bayesian bootstrapping is used to assist real-time processing and boost the discriminative power of the object representation. On the other hand, when the object re-enters the field-of-view of the camera, a data-driven, global sliding window detector locates the object for subsequent tracking. Extensive experiments demonstrate the ability of the proposed framework to track and detect arbitrary objects of various shapes and sizes, including dynamic objects such as a human. This is a significant improvement compared to earlier works that simply track objects as long as they are visible under simpler background settings. Using the ground truth locations for five different objects under three motion settings, namely translation, rotation and 6-DOF, quantitative measurement is reported for the event-based tracking framework with critical insights on various performance issues. Finally, real-time implementation in C++ highlights tracking ability under scale, rotation, view-point and occlusion scenarios in a lab setting.

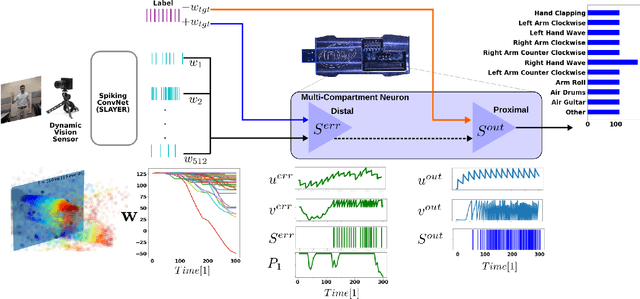

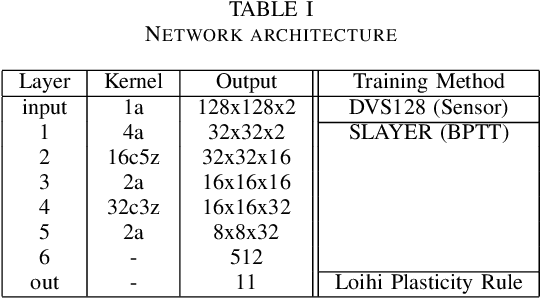

Online Few-shot Gesture Learning on a Neuromorphic Processor

Aug 03, 2020

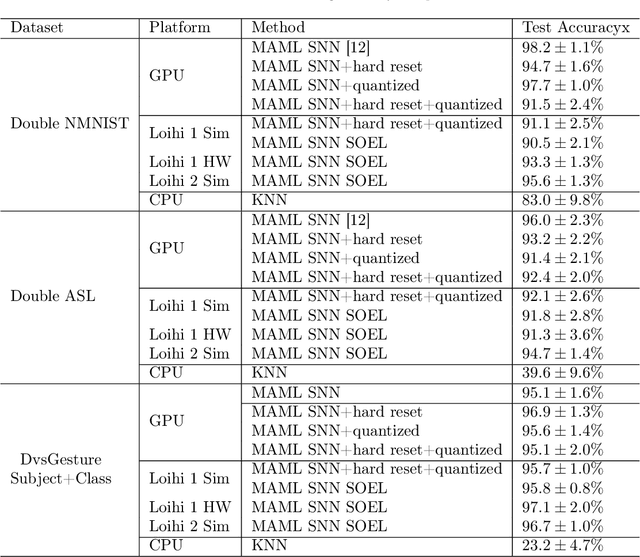

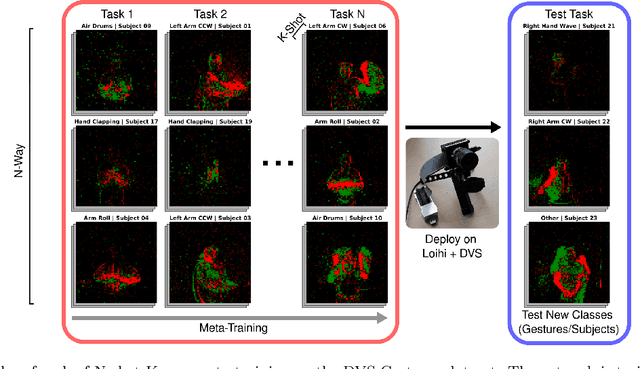

Abstract: We present the Surrogate-gradient Online Error-triggered Learning (SOEL) system for online few-shot learning on neuromorphic processors. The SOEL learning system uses a combination of transfer learning and principles of computational neuroscience and deep learning. We show that partially trained deep Spiking Neural Networks (SNNs) implemented on neuromorphic hardware can rapidly adapt online to new classes of data within a domain. SOEL updates trigger when an error occurs, enabling faster learning with fewer updates. Using gesture recognition as a case study, we show SOEL can be used for online few-shot learning of new classes of pre-recorded gesture data and rapid online learning of new gestures from data streamed live from a Dynamic Active-pixel Vision Sensor to an Intel Loihi neuromorphic research processor.

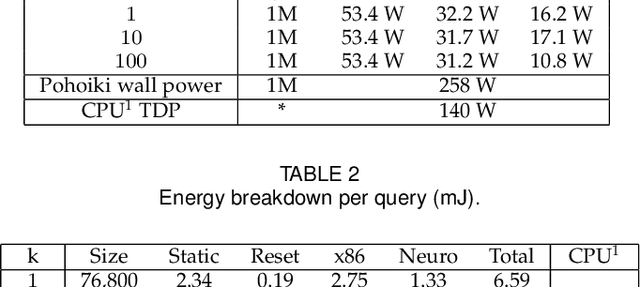

Neuromorphic Nearest-Neighbor Search Using Intel's Pohoiki Springs

Apr 27, 2020

Abstract: Neuromorphic computing applies insights from neuroscience to uncover innovations in computing technology. In the brain, billions of interconnected neurons perform rapid computations at extremely low energy levels by leveraging properties that are foreign to conventional computing systems, such as temporal spiking codes and finely parallelized processing units integrating both memory and computation. Here, we showcase the Pohoiki Springs neuromorphic system, a mesh of 768 interconnected Loihi chips that collectively implement 100 million spiking neurons in silicon. We demonstrate a scalable approximate k-nearest neighbor (k-NN) algorithm for searching large databases that exploits neuromorphic principles. Compared to state-of-the-art conventional CPU-based implementations, we achieve superior latency, index build time, and energy efficiency when evaluated on several standard datasets containing over 1 million high-dimensional patterns. Further, the system supports adding new data points to the indexed database online in O(1) time unlike all but brute force conventional k-NN implementations.

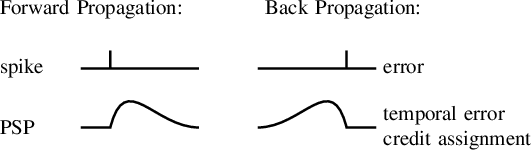

On-chip Few-shot Learning with Surrogate Gradient Descent on a Neuromorphic Processor

Nov 05, 2019

Abstract: Recent work suggests that synaptic plasticity dynamics in biological models of neurons and neuromorphic hardware are compatible with gradient-based learning (Neftci et al., 2019). Gradient-based learning requires iterating several times over a dataset, which is both time-consuming and constrains the training samples to be independently and identically distributed. This is incompatible with learning systems that do not have boundaries between training and inference, such as in neuromorphic hardware. One approach to overcome these constraints is transfer learning, where a portion of the network is pre-trained and mapped into hardware and the remaining portion is trained online. Transfer learning has the advantage that pre-training can be accelerated offline if the task domain is known, and few samples of each class are sufficient for learning the target task at reasonable accuracies. Here, we demonstrate on-line surrogate gradient few-shot learning on Intel's Loihi neuromorphic research processor using features pre-trained with spike-based gradient backpropagation-through-time. Our experimental results show that the Loihi chip can learn gestures online using a small number of shots and achieve results that are comparable to the models simulated on a conventional processor.

A low-power end-to-end hybrid neuromorphic framework for surveillance applications

Oct 25, 2019

Abstract: With the success of deep learning, object recognition systems that can be deployed for real-world applications are becoming commonplace. However, inference that needs to largely take place on the 'edge' (not processed on servers) is a highly compute- and memory-intensive workload, making it intractable for low-power mobile nodes and remote security applications. To address this challenge, this paper proposes a low-power (5W) end-to-end neuromorphic framework for object tracking and classification using event-based cameras that possess desirable properties such as low power consumption (5-14 mW) and high dynamic range (120 dB). Nonetheless, unlike traditional approaches of using event-by-event processing, this work uses a mixed frame and event approach to get energy savings with high performance. Using a frame-based region proposal method based on the density of foreground events, a hardware-friendly object tracking is implemented using the apparent object velocity while tackling occlusion scenarios. For low-power classification of the tracked objects, the event camera is interfaced to IBM TrueNorth, which is time-multiplexed to tackle up to eight instances for a traffic monitoring application. The frame-based object track input is converted back to spikes for TrueNorth classification via the energy efficient deep network (EEDN) pipeline. Using originally collected datasets, we train the TrueNorth model on the hardware track outputs, instead of using ground truth object locations as commonly done, and demonstrate the efficacy of our system to handle practical surveillance scenarios. Finally, we compare the proposed methodologies to state-of-the-art event-based systems for object tracking and classification, and demonstrate the use case of our neuromorphic approach for low-power applications without sacrificing on performance.

EBBIOT: A Low-complexity Tracking Algorithm for Surveillance in IoVT Using Stationary Neuromorphic Vision Sensors

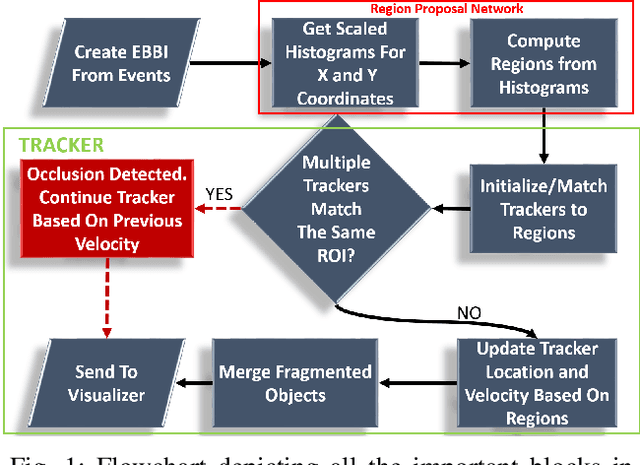

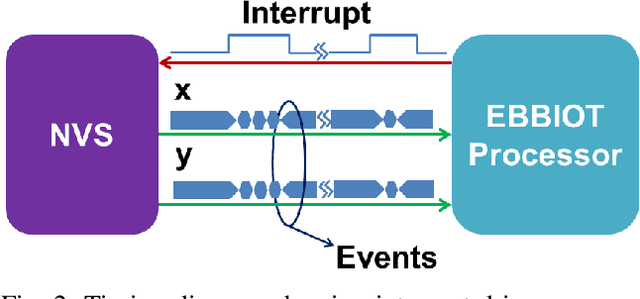

Oct 04, 2019

Abstract: In this paper, we present EBBIOT, a novel paradigm for object tracking using stationary neuromorphic vision sensors in low-power sensor nodes for the Internet of Video Things (IoVT). Different from fully event-based tracking or fully frame-based approaches, we propose a mixed approach where we create event-based binary images (EBBI) that can use memory-efficient noise filtering algorithms. We exploit the motion triggering aspect of neuromorphic sensors to generate region proposals based on event density counts with >1000X less memory and computes compared to frame-based approaches. We also propose a simple overlap-based tracker (OT) with prediction-based handling of occlusion. Our overall approach requires 7X less memory and 3X less computations than conventional noise filtering and event-based mean shift (EBMS) tracking. Finally, we show that our approach results in significantly higher precision and recall compared to the EBMS approach as well as a Kalman Filter tracker when evaluated over 1.1 hours of traffic recordings at two different locations.
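The event-based binary image and the density-based region proposal described above can be sketched as follows. This is a toy illustration under stated assumptions, not the authors' implementation: the function names, the `(x, y)` event format, the `min_count` threshold, and the restriction to a single bounding region are all hypothetical.

```python
import numpy as np

def events_to_binary_image(events, height, width):
    """Set a pixel to 1 if at least one event fired there in the frame window."""
    img = np.zeros((height, width), dtype=np.uint8)
    for x, y in events:
        img[y, x] = 1
    return img

def propose_region(img, min_count=2):
    """Bound the columns and rows whose active-pixel count reaches min_count.

    Returns (x_min, x_max, y_min, y_max), or None if nothing qualifies.
    """
    col_counts = img.sum(axis=0)   # active pixels per column
    row_counts = img.sum(axis=1)   # active pixels per row
    cols = np.flatnonzero(col_counts >= min_count)
    rows = np.flatnonzero(row_counts >= min_count)
    if cols.size == 0 or rows.size == 0:
        return None
    return int(cols[0]), int(cols[-1]), int(rows[0]), int(rows[-1])

# A 3x3 blob of events plus one isolated noise event at (0, 7).
events = [(x, y) for x in range(4, 7) for y in range(2, 5)] + [(0, 7)]
frame = events_to_binary_image(events, height=8, width=10)
box = propose_region(frame)
```

Because the proposal only needs two one-dimensional count arrays rather than a full intensity frame, the memory footprint stays small, and the isolated noise event falls below the count threshold.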
