Vandana Reddy Padala

A low-power end-to-end hybrid neuromorphic framework for surveillance applications

Oct 25, 2019

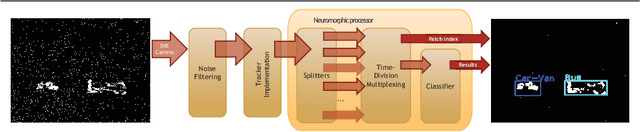

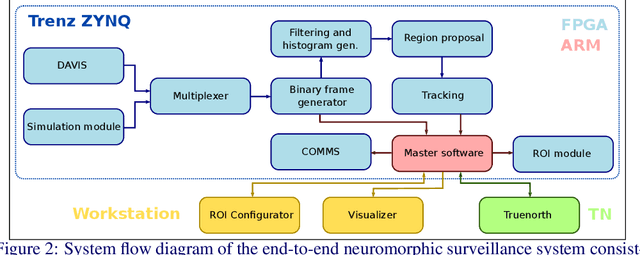

Abstract: With the success of deep learning, object recognition systems that can be deployed for real-world applications are becoming commonplace. However, inference that must largely take place on the 'edge' (rather than on servers) is a computation- and memory-intensive workload, making it intractable for low-power mobile nodes and remote security applications. To address this challenge, this paper proposes a low-power (5W) end-to-end neuromorphic framework for object tracking and classification using event-based cameras, which offer desirable properties such as low power consumption (5-14 mW) and high dynamic range (120 dB). Unlike traditional event-by-event processing approaches, this work uses a mixed frame and event approach to obtain energy savings with high performance. Using a frame-based region proposal method based on the density of foreground events, hardware-friendly object tracking is implemented using the apparent object velocity while handling occlusion scenarios. For low-power classification of the tracked objects, the event camera is interfaced to IBM TrueNorth, which is time-multiplexed to handle up to eight instances for a traffic monitoring application. The frame-based object track input is converted back to spikes for TrueNorth classification via the energy efficient deep network (EEDN) pipeline. Using originally collected datasets, we train the TrueNorth model on the hardware track outputs, instead of using ground truth object locations as is commonly done, and demonstrate the efficacy of our system in handling practical surveillance scenarios. Finally, we compare the proposed methodologies to state-of-the-art event-based systems for object tracking and classification, and demonstrate the use case of our neuromorphic approach for low-power applications without sacrificing performance.

EBBIOT: A Low-complexity Tracking Algorithm for Surveillance in IoVT Using Stationary Neuromorphic Vision Sensors

Oct 04, 2019

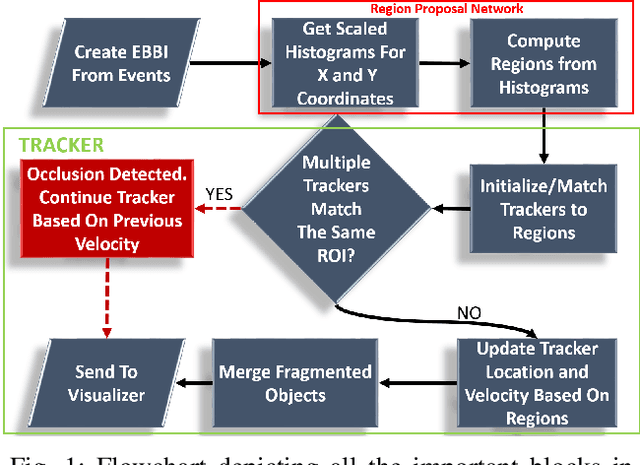

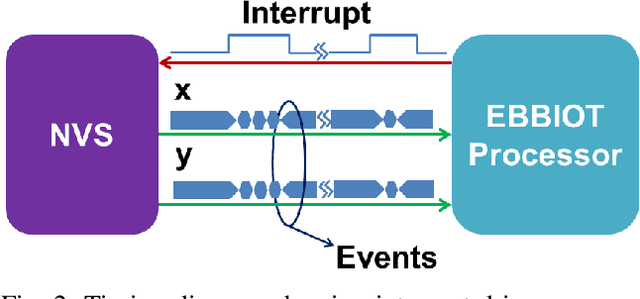

Abstract: In this paper, we present EBBIOT, a novel paradigm for object tracking using stationary neuromorphic vision sensors in low-power sensor nodes for the Internet of Video Things (IoVT). Unlike fully event based tracking or fully frame based approaches, we propose a mixed approach in which we create event-based binary images (EBBI) that can use memory efficient noise filtering algorithms. We exploit the motion triggering aspect of neuromorphic sensors to generate region proposals based on event density counts with >1000X less memory and computation than frame based approaches. We also propose a simple overlap based tracker (OT) with prediction based handling of occlusion. Our overall approach requires 7X less memory and 3X less computation than conventional noise filtering and event based mean shift (EBMS) tracking. Finally, we show that our approach results in significantly higher precision and recall than the EBMS approach as well as a Kalman filter tracker when evaluated over 1.1 hours of traffic recordings at two different locations.
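
The pipeline the abstract describes (events accumulated into a binary image, region proposals from event density counts, and overlap-based matching) can be illustrated with a minimal Python sketch. This is not the authors' implementation: the abstract does not specify how the density counts are computed, so the row/column histograms, the `threshold` parameter, the `(x, y, timestamp)` event layout, and all function names here are assumptions made for illustration only. Noise filtering and occlusion prediction are omitted.

```python
from typing import List, Tuple

Event = Tuple[int, int, float]      # assumed layout: (x, y, timestamp)
Box = Tuple[int, int, int, int]     # (x0, y0, x1, y1), end-exclusive


def events_to_ebbi(events: List[Event], width: int, height: int) -> List[List[int]]:
    """Accumulate one frame window of events into a binary image."""
    img = [[0] * width for _ in range(height)]
    for x, y, _t in events:
        if 0 <= x < width and 0 <= y < height:
            img[y][x] = 1           # a pixel is 1 if it fired at least once
    return img


def active_runs(hist: List[int], threshold: int) -> List[Tuple[int, int]]:
    """Return [start, end) index runs where hist >= threshold."""
    runs, start = [], None
    for i, count in enumerate(hist):
        if count >= threshold and start is None:
            start = i
        elif count < threshold and start is not None:
            runs.append((start, i))
            start = None
    if start is not None:
        runs.append((start, len(hist)))
    return runs


def propose_regions(img: List[List[int]], threshold: int = 2) -> List[Box]:
    """Propose boxes from 1-D event-count histograms (assumed scheme)."""
    height, width = len(img), len(img[0])
    col_hist = [sum(img[y][x] for y in range(height)) for x in range(width)]
    row_hist = [sum(row) for row in img]
    boxes = []
    for x0, x1 in active_runs(col_hist, threshold):
        for y0, y1 in active_runs(row_hist, threshold):
            # Keep a candidate only if it actually contains events.
            if any(img[y][x] for y in range(y0, y1) for x in range(x0, x1)):
                boxes.append((x0, y0, x1, y1))
    return boxes


def overlap(a: Box, b: Box) -> int:
    """Intersection area of two boxes; 0 if they do not intersect."""
    w = min(a[2], b[2]) - max(a[0], b[0])
    h = min(a[3], b[3]) - max(a[1], b[1])
    return max(w, 0) * max(h, 0)
```

In this sketch, a tracker would match each existing track to the proposal with the largest `overlap` value; an isolated noise event falls below `threshold` in both histograms and produces no proposal.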
