Deepak Singla

A 51.3 TOPS/W, 134.4 GOPS In-memory Binary Image Filtering in 65nm CMOS

Jul 29, 2021

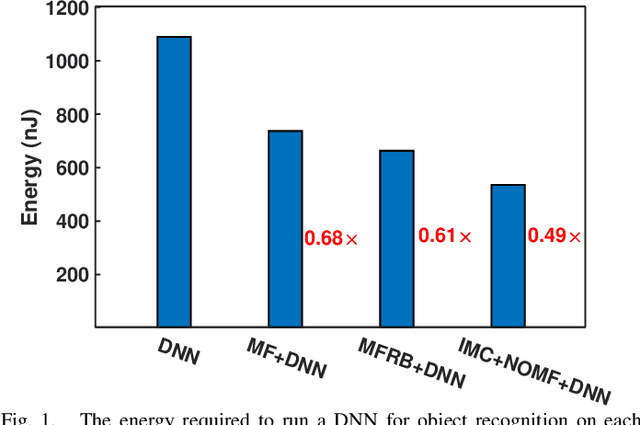

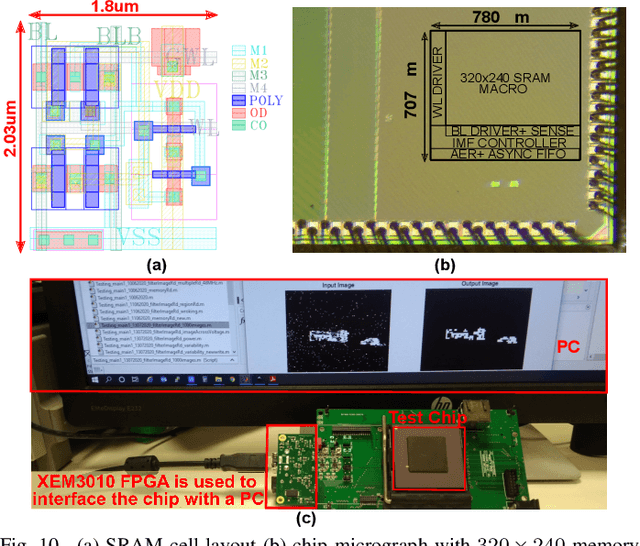

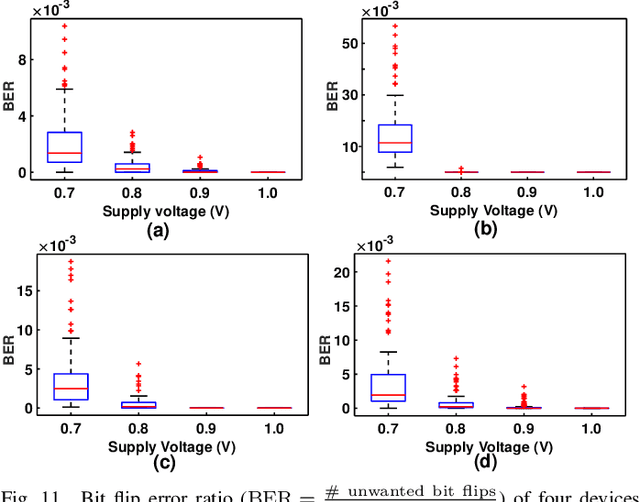

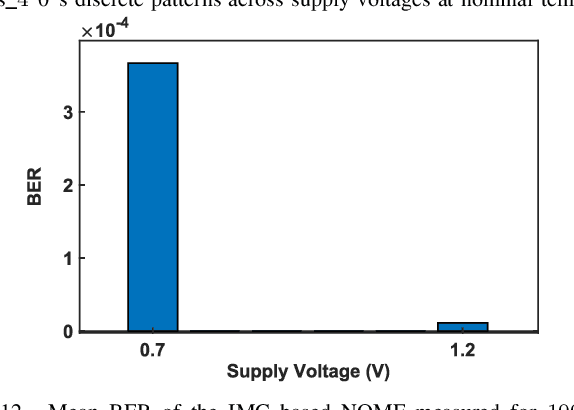

Abstract: Neuromorphic vision sensors (NVS) can enable energy savings due to their event-driven nature, which exploits the temporal redundancy in video streams from a stationary camera. However, noise-driven events lead to false triggering of the object recognition processor. Image denoising operations require memory-intensive processing, leading to a bottleneck in energy and latency. In this paper, we present in-memory filtering (IMF), a 6T-SRAM in-memory computing based image denoising scheme for event-based binary image (EBBI) frames from an NVS. We propose a non-overlap median filter (NOMF) for image denoising. An in-memory computing framework enables hardware implementation of NOMF by leveraging the inherent read disturb phenomenon of 6T-SRAM. To demonstrate the energy savings and effectiveness of the algorithm, we fabricated the proposed architecture in a 65nm CMOS process. Compared to a fully digital implementation, IMF enables > 70x energy savings and a > 3x improvement in processing time when tested with video recordings from a DAVIS sensor, and achieves a peak throughput of 134.4 GOPS. Furthermore, the peak energy efficiency of the NOMF is 51.3 TOPS/W, comparable with state-of-the-art in-memory processors. We also show that images obtained by NOMF provide comparable accuracy in tracking and classification applications when compared with images obtained by conventional median filtering.
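The abstract does not spell out how NOMF works, so the following is only a software sketch under stated assumptions: the binary frame is split into non-overlapping `patch` x `patch` blocks, and every pixel of a block is set to the block's median, which for binary pixels is a majority vote. The function name `nomf`, the default patch size of 3, and the choice to write the result back to the whole block are hypothetical; this is not the in-memory hardware implementation described in the paper.

```python
def nomf(image, patch=3):
    """Non-overlapping median filter on a binary image (list of lists of 0/1).

    Assumption: each patch x patch block is replaced by its majority value.
    Blocks at the right/bottom border may be smaller; ties resolve to 0.
    """
    rows, cols = len(image), len(image[0])
    out = [[0] * cols for _ in range(rows)]
    for r0 in range(0, rows, patch):
        for c0 in range(0, cols, patch):
            # Coordinates of the pixels in this block, clipped to the frame.
            block = [(r, c)
                     for r in range(r0, min(r0 + patch, rows))
                     for c in range(c0, min(c0 + patch, cols))]
            ones = sum(image[r][c] for r, c in block)
            # Median of binary values: 1 only if strictly more than half are 1.
            value = 1 if 2 * ones > len(block) else 0
            for r, c in block:
                out[r][c] = value
    return out


# An isolated noise pixel is removed; a mostly filled block is kept.
noisy = [[0, 0, 0], [0, 1, 0], [0, 0, 0]]
dense = [[1, 1, 0], [1, 1, 0], [1, 0, 0]]
print(nomf(noisy))
print(nomf(dense))
```

Because the blocks do not overlap, each pixel is read once per frame, in contrast to a conventional sliding-window median filter, where every pixel is read once for each window that covers it.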

A Hybrid Neuromorphic Object Tracking and Classification Framework for Real-time Systems

Jul 21, 2020

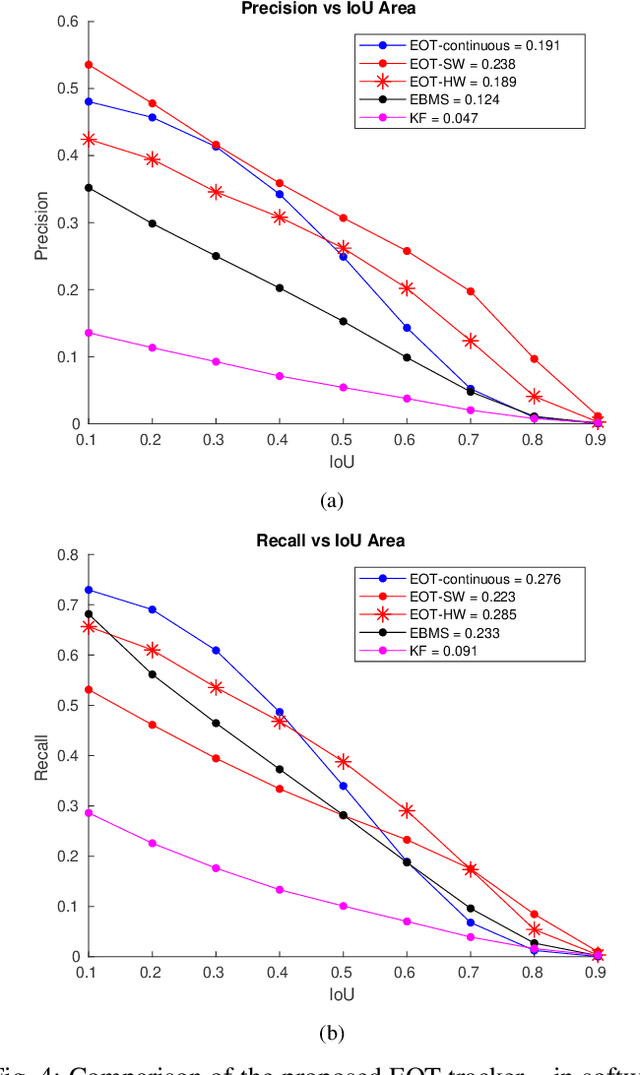

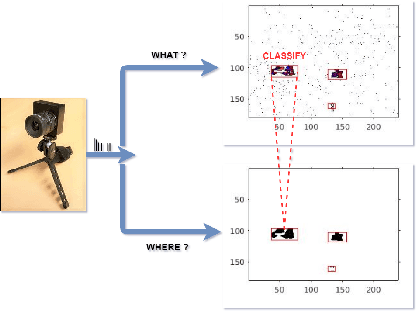

Abstract: Deep learning inference that needs to largely take place on the 'edge' is a highly compute- and memory-intensive workload, making it intractable for low-power, embedded platforms such as mobile nodes and remote security applications. To address this challenge, this paper proposes a real-time, hybrid neuromorphic framework for object tracking and classification using event-based cameras that possess properties such as low power consumption (5-14 mW) and high dynamic range (120 dB). Unlike traditional approaches that use event-by-event processing, this work uses a mixed frame and event approach to get energy savings with high performance. Using a frame-based region proposal method based on the density of foreground events, a hardware-friendly object tracking scheme is implemented using the apparent object velocity while tackling occlusion scenarios. The object track input is converted back to spikes for TrueNorth classification via the energy-efficient deep network (EEDN) pipeline. Using originally collected datasets, we train the TrueNorth model on the hardware track outputs, instead of using ground truth object locations as commonly done, and demonstrate the ability of our system to handle practical surveillance scenarios. As an optional paradigm, to exploit the low latency and asynchronous nature of neuromorphic vision sensors (NVS), we also propose a continuous-time tracker with a C++ implementation in which each event is processed individually. We extensively compare the proposed methodologies to state-of-the-art event-based and frame-based methods for object tracking and classification, and demonstrate the use case of our neuromorphic approach for real-time and embedded applications without sacrificing performance. Finally, we also showcase the efficacy of the proposed system relative to a standard RGB camera setup when evaluated over several hours of traffic recordings.

EBBINNOT: A Hardware Efficient Hybrid Event-Frame Tracker for Stationary Neuromorphic Vision Sensors

May 31, 2020

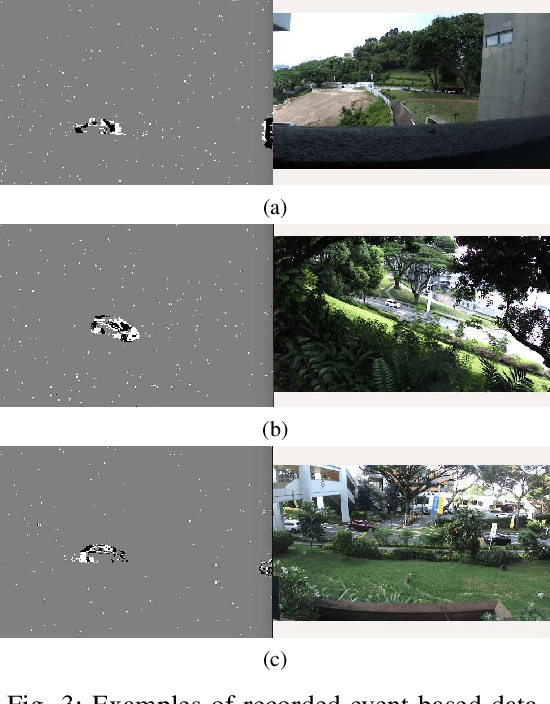

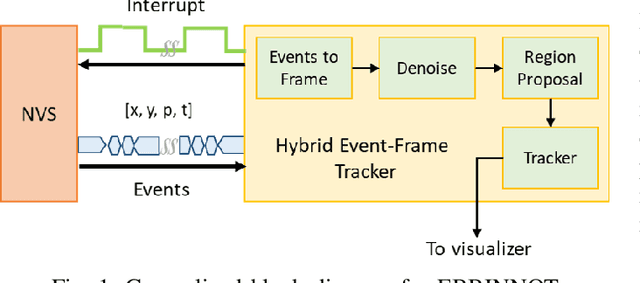

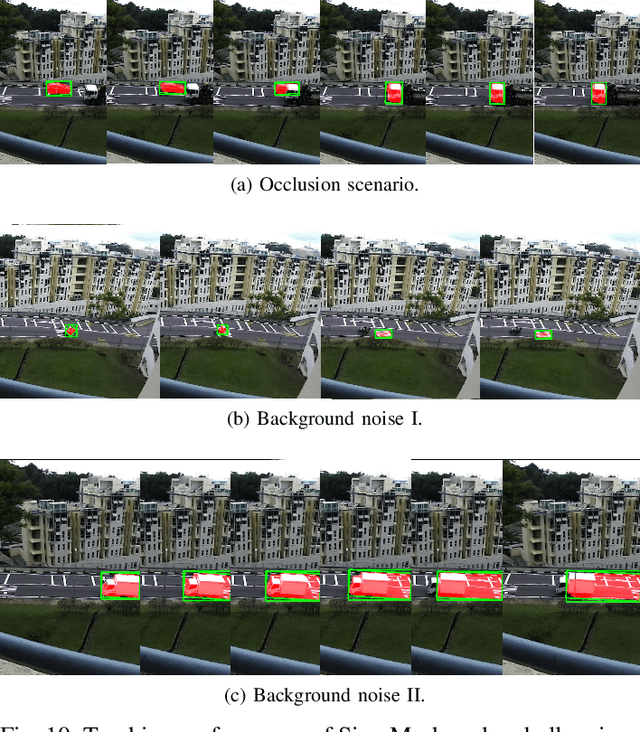

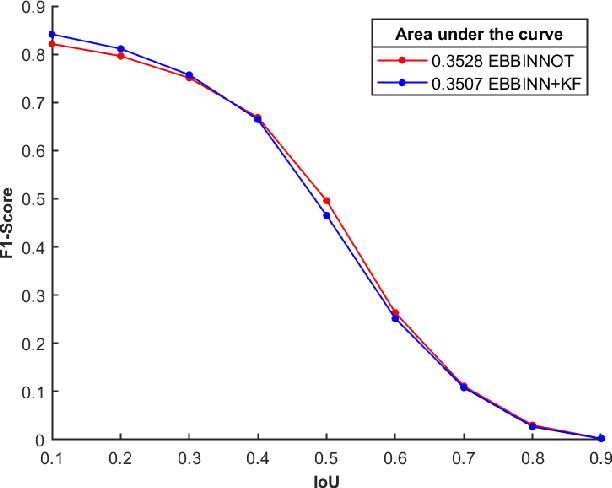

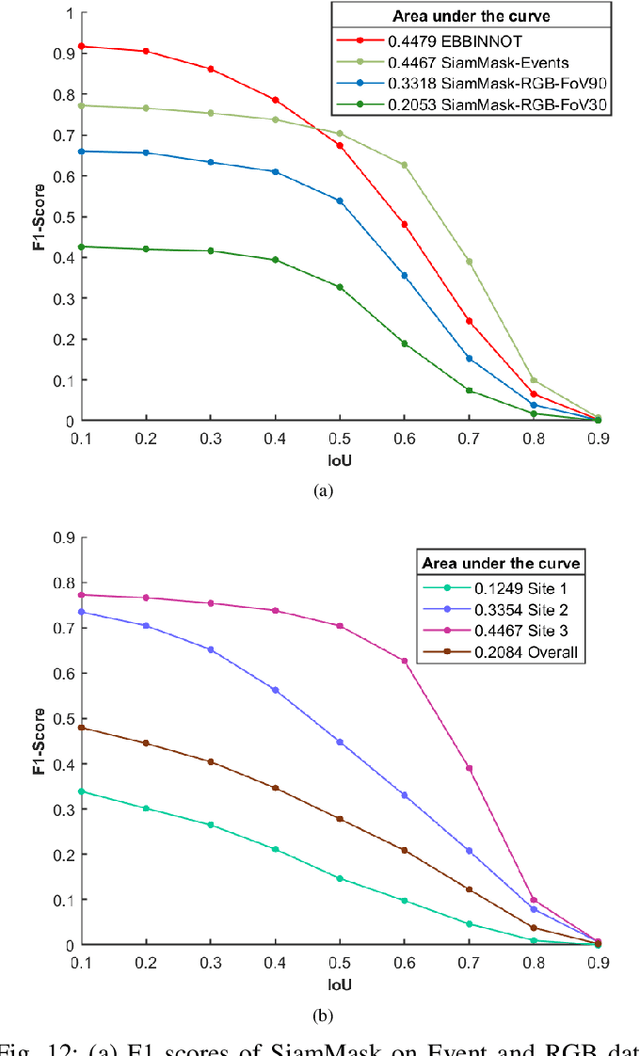

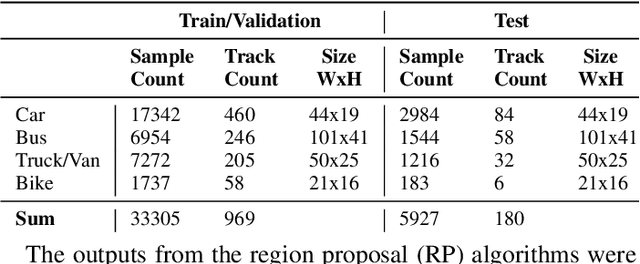

Abstract: In this paper, we present a hybrid event-frame approach for detecting and tracking objects recorded by a stationary neuromorphic vision sensor (NVS) used for traffic monitoring, with a hardware-efficient processing pipeline that optimizes memory and computational needs. An NVS has the advantage of rejecting the background, but the unique disadvantage of fragmenting objects because smooth areas such as glass windows generate few events. To exploit the background removal, we propose an event-based binary image (EBBI) creation that signals the presence or absence of events in a frame duration. This reduces the memory requirement and enables the use of simple algorithms such as median filtering and connected component labeling (CCL) for denoising and region proposal (RP), respectively. To overcome the fragmentation issue, we propose a YOLO-inspired neural network based detector and classifier (NNDC) that merges fragmented region proposals. Finally, a simplified version of the Kalman filter, termed overlap based tracker (OT), which exploits the overlap between detections and tracks, is proposed with heuristics to overcome occlusion. The proposed pipeline is evaluated using more than 5 hours of traffic recordings. Our proposed hybrid architecture outperformed (AUC = $0.45$) the deep learning (DL) based tracker SiamMask (AUC = $0.33$) operating on simultaneously recorded RGB frames while requiring $2200\times$ fewer computations. Compared to pure event-based mean shift (AUC = $0.31$), our approach requires $68\times$ more computations but provides much better performance. Finally, we also evaluated our performance on two different NVS, DAVIS and CeleX, and demonstrated similar gains. To the best of our knowledge, this is the first report in which an NVS-based solution is directly compared to a simultaneously recorded frame-based method, and it shows tremendous promise.
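The two simplest steps of this pipeline, EBBI creation and the overlap test behind the tracker, can be sketched as follows. This is a minimal illustration, not the authors' implementation: the event layout `(x, y, t)`, the half-open frame window, the box format `(x_min, y_min, x_max, y_max)` with exclusive maxima, and the function names `make_ebbi` and `overlap_area` are all assumptions introduced here.

```python
def make_ebbi(events, width, height, t_start, t_end):
    """Build an event-based binary image for one frame window.

    A pixel is 1 if at least one event occurred there with
    t_start <= t < t_end, and 0 otherwise (event counts are discarded).
    """
    frame = [[0] * width for _ in range(height)]
    for x, y, t in events:
        if t_start <= t < t_end and 0 <= x < width and 0 <= y < height:
            frame[y][x] = 1
    return frame


def overlap_area(box_a, box_b):
    """Intersection area of two axis-aligned boxes (x_min, y_min, x_max, y_max)."""
    w = min(box_a[2], box_b[2]) - max(box_a[0], box_b[0])
    h = min(box_a[3], box_b[3]) - max(box_a[1], box_b[1])
    return max(w, 0) * max(h, 0)


# Two events at the same pixel collapse to a single 1; the third event
# falls outside the 0..10 window and is ignored.
events = [(1, 0, 5), (1, 0, 7), (2, 1, 15)]
print(make_ebbi(events, width=3, height=2, t_start=0, t_end=10))

# A detection that overlaps an existing track box is a candidate match.
print(overlap_area((0, 0, 4, 4), (2, 2, 6, 6)))
```

Storing one bit per pixel, rather than an event count or timestamp, is the source of the memory reduction that the abstract attributes to the EBBI.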

HyNNA: Improved Performance for Neuromorphic Vision Sensor based Surveillance using Hybrid Neural Network Architecture

Mar 19, 2020

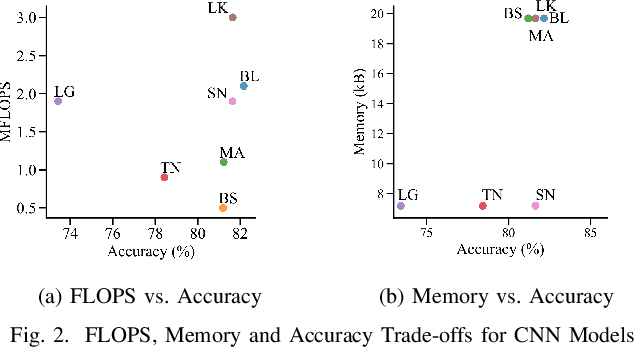

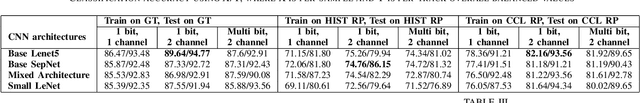

Abstract: Applications in the Internet of Video Things (IoVT) domain have very tight constraints with respect to power and area. While neuromorphic vision sensors (NVS) may offer advantages over traditional imagers in this domain, existing NVS systems either do not meet the power constraints or have not demonstrated end-to-end system performance. To address this, we improve on a recently proposed hybrid event-frame approach by using morphological image processing algorithms for region proposal, and address the low-power requirement for object detection and classification by exploring various convolutional neural network (CNN) architectures. Specifically, we compare the results obtained from our object detection framework against the state-of-the-art low-power NVS surveillance system and show an accuracy improvement from 63.1% to 82.16%. Moreover, we show that using multiple bits does not improve accuracy, and thus system designers can save power and area by using only single-bit event polarity information. In addition, we explore the CNN architecture space for object classification and offer insights into trading off accuracy for lower power by using less memory and fewer arithmetic operations.

A low-power end-to-end hybrid neuromorphic framework for surveillance applications

Oct 25, 2019

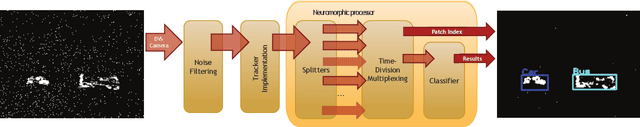

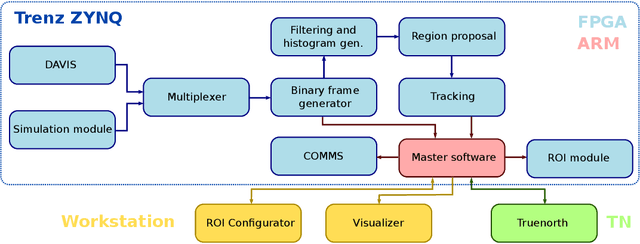

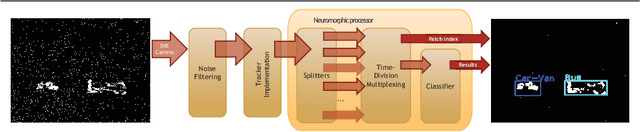

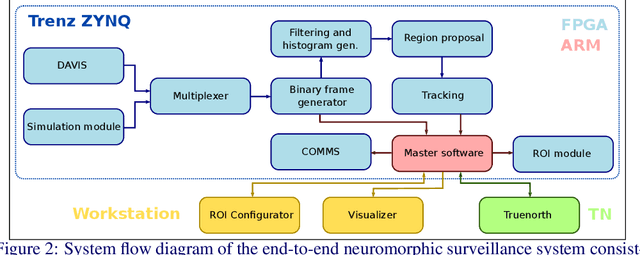

Abstract: With the success of deep learning, object recognition systems that can be deployed for real-world applications are becoming commonplace. However, inference that needs to largely take place on the 'edge' (not processed on servers) is a highly compute- and memory-intensive workload, making it intractable for low-power mobile nodes and remote security applications. To address this challenge, this paper proposes a low-power (5 W) end-to-end neuromorphic framework for object tracking and classification using event-based cameras that possess desirable properties such as low power consumption (5-14 mW) and high dynamic range (120 dB). Unlike traditional approaches that use event-by-event processing, this work uses a mixed frame and event approach to get energy savings with high performance. Using a frame-based region proposal method based on the density of foreground events, hardware-friendly object tracking is implemented using the apparent object velocity while tackling occlusion scenarios. For low-power classification of the tracked objects, the event camera is interfaced to IBM TrueNorth, which is time-multiplexed to tackle up to eight instances for a traffic monitoring application. The frame-based object track input is converted back to spikes for TrueNorth classification via the energy-efficient deep network (EEDN) pipeline. Using originally collected datasets, we train the TrueNorth model on the hardware track outputs, instead of using ground truth object locations as commonly done, and demonstrate the efficacy of our system in handling practical surveillance scenarios. Finally, we compare the proposed methodologies to state-of-the-art event-based systems for object tracking and classification, and demonstrate the use case of our neuromorphic approach for low-power applications without sacrificing performance.
