Arindam Basu

STEMNIST: Spiking Tactile Extended MNIST Neuromorphic Dataset

Jan 04, 2026

Abstract: Tactile sensing is essential for robotic manipulation, prosthetics and assistive technologies, yet neuromorphic tactile datasets remain limited compared to their visual counterparts. We introduce STEMNIST, a large-scale neuromorphic tactile dataset extending ST-MNIST from 10 digits to 35 alphanumeric classes (uppercase letters A--Z and digits 1--9), providing a challenging benchmark for event-based haptic recognition. The dataset comprises 7,700 samples collected from 34 participants using a custom \(16\times 16\) tactile sensor array operating at 120 Hz, encoded as 1,005,592 spike events through adaptive temporal differentiation. Following EMNIST's visual character recognition protocol, STEMNIST addresses the critical gap between simplified digit classification and real-world tactile interaction scenarios requiring alphanumeric discrimination. Baseline experiments using conventional CNNs (90.91% test accuracy) and spiking neural networks (89.16%) establish performance benchmarks. The dataset's event-based format, unrestricted spatial variability and rich temporal structure make it suitable for testing neuromorphic hardware and bio-inspired learning algorithms. STEMNIST enables reproducible evaluation of tactile recognition systems and provides a foundation for advancing energy-efficient neuromorphic perception in robotics, biomedical engineering and human-machine interfaces. The dataset, documentation and code are publicly available to accelerate research in neuromorphic tactile computing.

1024-Channel 0.8V 23.9-nW/Channel Event-based Compute In-memory Neural Spike Detector

Dec 09, 2025

Abstract: The increasing data rate has become a major issue confronting next-generation intracortical brain-machine interfaces (iBMIs). The growing number of recording sites requires complex analog wiring and leads to huge digitization power consumption. Compressive event-based neural frontends have been used in high-density neural implants to support the simultaneous recording of more channels. Event-based frontends (EBFs) convert recorded signals into asynchronous digital events via delta modulation and can inherently achieve considerable compression. However, EBFs are prone to false events that do not correspond to neural spikes. Spike detection (SPD) is a key process in the iBMI pipeline to detect neural spikes and further reduce the data rate. Conventional digital SPD suffers from increasing buffer size and frequent memory access power, and conventional spike emphasizers are not compatible with EBFs. In this work, we introduce an event-based spike detection (Ev-SPD) algorithm for scalable compressive EBFs. To implement the algorithm effectively, we propose a novel low-power 10-T eDRAM-SRAM hybrid random-access memory in-memory computing (IMC) bitcell for event processing. We fabricated the proposed 1024-channel IMC SPD macro in a 65nm process and tested it with both a synthetic dataset and Neuropixel recordings. The macro achieved a spike detection accuracy of 96.06% on the synthetic dataset, and 95.08% similarity and 0.05 firing pattern MAE on Neuropixel recordings. Our event-based IMC SPD macro consumes 23.9 nW per channel for spike detection and occupies 375 um^2 per channel. Our work presents an SPD scheme compatible with compressive EBFs for high-density iBMIs, achieving ultra-low power consumption with an IMC architecture while maintaining considerable accuracy.
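To make the delta-modulation step concrete, the following is a minimal Python sketch of how a sampled signal could be turned into UP/DOWN events. It is an illustration only: the function names `delta_modulate` and `reconstruct`, the fixed step size `delta`, and the sample values are assumptions, and the abstract does not specify how the actual frontend circuit behaves.

```python
def delta_modulate(samples, delta):
    """Convert a sampled signal into (sample_index, polarity) events.

    Assumed behavior: an event is emitted each time the input moves at
    least `delta` away from a running reference level, and the reference
    then steps by `delta` in the same direction.
    """
    events = []
    reference = samples[0]
    for i, x in enumerate(samples[1:], start=1):
        while x - reference >= delta:      # signal rose by one step
            reference += delta
            events.append((i, +1))
        while reference - x >= delta:      # signal fell by one step
            reference -= delta
            events.append((i, -1))
    return events


def reconstruct(events, n_samples, start, delta):
    """Rebuild a staircase approximation of the signal from its events."""
    out = []
    level = start
    k = 0
    for i in range(n_samples):
        while k < len(events) and events[k][0] == i:
            level += events[k][1] * delta
            k += 1
        out.append(level)
    return out


signal = [0.0, 0.1, 0.5, 1.2, 0.4, 0.0]
events = delta_modulate(signal, delta=0.5)
approx = reconstruct(events, len(signal), start=signal[0], delta=0.5)
```

Slowly varying stretches of the signal produce no events at all, which is where the compression comes from. Noise that crosses the step size also produces events, which is one way the false events mentioned above can arise.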

Event-based Neural Spike Detection Using Spiking Neural Networks for Neuromorphic iBMI Systems

May 10, 2025

Abstract: Implantable brain-machine interfaces (iBMIs) are evolving to record from thousands of neurons wirelessly but face challenges in data bandwidth, power consumption, and implant size. We propose a novel Spiking Neural Network Spike Detector (SNN-SPD) that processes event-based neural data generated via delta modulation and pulse count modulation, converting signals into sparse events. By leveraging the temporal dynamics and inherent sparsity of spiking neural networks, our method improves spike detection performance while maintaining low computational overhead suitable for implantable devices. Our experimental results demonstrate that the proposed SNN-SPD achieves an accuracy of 95.72% at high noise levels (standard deviation 0.2), which is about 2% higher than the existing Artificial Neural Network Spike Detector (ANN-SPD). Moreover, SNN-SPD requires only 0.41% of the computation and about 26.62% of the weight parameters compared to ANN-SPD, with zero multiplications. This approach balances efficiency and performance, enabling effective data compression and power savings for next-generation iBMIs.

Architectural Exploration of Hybrid Neural Decoders for Neuromorphic Implantable BMI

May 09, 2025

Abstract: This work presents an efficient decoding pipeline for neuromorphic implantable brain-machine interfaces (Neu-iBMI), leveraging sparse neural event data from an event-based neural sensing scheme. We introduce a tunable event filter (EvFilter), which also functions as a spike detector (EvFilter-SPD), significantly reducing the number of events processed for decoding by 192X and 554X, respectively. The proposed pipeline achieves high decoding performance, up to R^2=0.73, with ANN- and SNN-based decoders, eliminating the need for signal recovery, spike detection, or sorting, commonly performed in conventional iBMI systems. The SNN-Decoder reduces computations and memory required by 5-23X compared to NN- and LSTM-Decoders, while the ST-NN-Decoder delivers similar performance to an LSTM-Decoder while requiring 2.5X fewer resources. This streamlined approach significantly reduces computational and memory demands, making it ideal for low-power, on-implant, or wearable iBMIs.

SPACE: SPike-Aware Consistency Enhancement for Test-Time Adaptation in Spiking Neural Networks

Apr 03, 2025

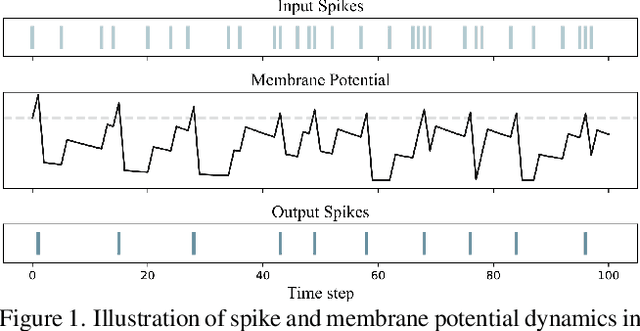

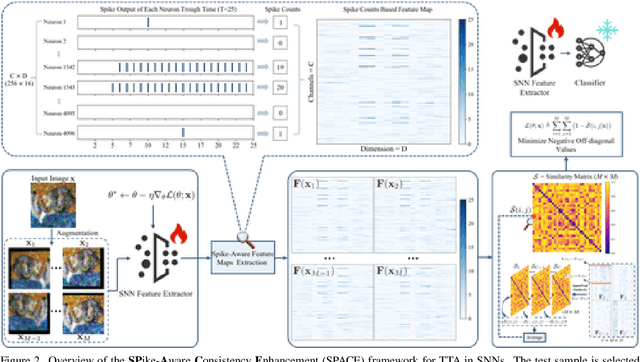

Abstract: Spiking Neural Networks (SNNs), as a biologically plausible alternative to Artificial Neural Networks (ANNs), have demonstrated advantages in energy efficiency and temporal processing. However, SNNs are highly sensitive to distribution shifts, which can significantly degrade their performance in real-world scenarios. Traditional test-time adaptation (TTA) methods designed for ANNs often fail to address the unique computational dynamics of SNNs, such as sparsity and temporal spiking behavior. To address these challenges, we propose $\textbf{SP}$ike-$\textbf{A}$ware $\textbf{C}$onsistency $\textbf{E}$nhancement (SPACE), the first source-free and single-instance TTA method specifically designed for SNNs. SPACE leverages the inherent spike dynamics of SNNs to maximize the consistency of spike-behavior-based local feature maps across augmented versions of a single test sample, enabling robust adaptation without requiring source data. We evaluate SPACE on multiple datasets, including CIFAR-10-C, CIFAR-100-C, Tiny-ImageNet-C and DVS Gesture-C. Furthermore, SPACE demonstrates strong generalization across different model architectures, achieving consistent performance improvements on both VGG9 and ResNet11. Experimental results show that SPACE outperforms state-of-the-art methods, highlighting its effectiveness and robustness in real-world settings.

Hybrid Event-Frame Neural Spike Detector for Neuromorphic Implantable BMI

May 14, 2024

Abstract: This work introduces two novel neural spike detection schemes intended for use in next-generation neuromorphic implantable brain-machine interfaces (iBMIs). The first, an Event-based Spike Detector (Ev-SPD), which examines the temporal neighborhood of a neural event for spike detection, is designed for in-vivo processing and offers high sensitivity and decent accuracy (94-97%). The second, a Neural Network-based Spike Detector (NN-SPD), which operates on hybrid temporal event frames, provides an off-implant solution using shallow neural networks with impressive detection accuracy (96-99%) and minimal false detections. These methods are evaluated using a synthetic dataset with varying noise levels and validated through comparison with ground truth data. The results highlight their potential in next-gen neuromorphic iBMI systems and emphasize the need to explore this direction further to understand their resource-efficient and high-performance capabilities for practical iBMI settings.
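The idea of examining the temporal neighborhood of an event can be illustrated with a short Python sketch. This is one plausible reading and not the published Ev-SPD algorithm: the function `detect_spikes`, the density rule, and the parameters `window`, `min_events` and `refractory` are all hypothetical, and the timestamps are made-up integers in microseconds.

```python
from bisect import bisect_left, bisect_right


def detect_spikes(event_times, window, min_events, refractory):
    """Flag an event as a spike when its neighborhood is dense enough.

    event_times : sorted event timestamps (assumed integer microseconds)
    window      : half-width of the neighborhood around each event
    min_events  : events required inside the neighborhood (hypothetical rule)
    refractory  : minimum spacing between two reported detections
    """
    detections = []
    for t in event_times:
        lo = bisect_left(event_times, t - window)
        hi = bisect_right(event_times, t + window)
        dense = (hi - lo) >= min_events
        if dense and (not detections or t - detections[-1] >= refractory):
            detections.append(t)
    return detections


# Two bursts of events (likely spikes) among isolated noise events.
times = [100, 500, 1000, 1010, 1020, 1030, 2400, 3000, 3010, 3020, 3030]
spikes = detect_spikes(times, window=50, min_events=4, refractory=1000)
```

Isolated events are rejected because too few neighbors fall inside the window, while each burst is reported once at its first dense event. Working directly on event timestamps avoids reconstructing the analog signal.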

A Low-Power Spike Detector Using In-Memory Computing for Event-based Neural Frontend

May 14, 2024

Abstract: With the sensor scaling of next-generation Brain-Machine Interface (BMI) systems, the massive A/D conversion and analog multiplexing at the neural frontend pose a challenge in terms of power and data rates for wireless and implantable BMIs. While previous works have reported neuromorphic compression of neural signals, further compression requires integration of spike detectors on chip. In this work, we propose an efficient HRAM-based spike detector using in-memory computing for compressive event-based neural frontends. Our proposed method detects spikes from event pulses without reconstructing the signal and uses a 10T hybrid in-memory computing bitcell for the accumulation and thresholding operations. We show that our method achieves a spike detection accuracy of 92-99% for neural signal inputs while consuming only 13.8 nW per channel in 65 nm CMOS.

Memory Efficient Corner Detection for Event-driven Dynamic Vision Sensors

Jan 18, 2024

Abstract: Event cameras offer low latency and data compression for visual applications through event-driven operation, which can be exploited for edge processing in tiny autonomous agents. Robust, accurate and low-latency extraction of highly informative features such as corners is key for most visual processing. While several corner detection algorithms have been proposed, state-of-the-art performance is achieved by luvHarris. However, this algorithm requires a high number of memory accesses per event, making it less than ideal for low-latency, low-energy implementation in tiny edge processors. In this paper, we propose a new event-driven corner detection implementation tailored for edge computing devices, which requires far fewer memory accesses than luvHarris while also improving accuracy. Our method trades computation for memory access, which is more expensive for large memories. For a DAVIS346 camera, our method requires ~3.8X less memory and ~36.6X fewer memory accesses with only ~2.3X more computation.

ANN vs SNN: A case study for Neural Decoding in Implantable Brain-Machine Interfaces

Dec 26, 2023

Abstract: While it is important to make implantable brain-machine interfaces (iBMI) wireless to increase patient comfort and safety, the trend of increased channel count in recent neural probes poses a challenge due to the concomitant increase in the data rate. Extracting information from raw data at the source by using edge computing is a promising solution to this problem, with integrated intention decoders providing the best compression ratio. In this work, we compare different neural networks (NN) for motor decoding in terms of accuracy and implementation cost. We further show that combining traditional signal processing techniques with machine learning ones delivers surprisingly good performance even with simple NNs. Adding a block Bidirectional Bessel filter provided maximum gains of $\approx 0.05$, $0.04$ and $0.03$ in $R^2$ for ANN\_3D, SNN\_3D and ANN models, while the gains were lower ($\approx 0.02$ or less) for LSTM and SNN\_streaming models. Increasing training data helped improve the $R^2$ of all models by $0.03-0.04$, indicating they have more capacity for future improvement. In general, LSTM and SNN\_streaming models occupy the high and low ends of the Pareto curves (for accuracy vs. memory/operations) respectively, while SNN\_3D and ANN\_3D occupy intermediate positions. Our work presents state-of-the-art results for this dataset and paves the way for decoder-integrated implants of the future.
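For readers unfamiliar with the $R^2$ metric quoted above, here is a small Python sketch of the standard coefficient of determination, $R^2 = 1 - SS_{res}/SS_{tot}$. The function name `r2_score` and the sample values are illustrative, and the paper may aggregate the score differently (for example, averaged over output dimensions).

```python
def r2_score(y_true, y_pred):
    """Standard coefficient of determination for one output dimension."""
    mean = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))  # residual error
    ss_tot = sum((t - mean) ** 2 for t in y_true)               # variance around the mean
    return 1.0 - ss_res / ss_tot


perfect = r2_score([1.0, 2.0, 3.0], [1.0, 2.0, 3.0])    # decoder matches the target exactly
baseline = r2_score([1.0, 2.0, 3.0], [2.0, 2.0, 2.0])   # decoder always predicts the mean
```

A score of 1 means the decoded trajectory matches the target exactly, and a score of 0 means the decoder does no better than predicting the mean, so gains of 0.03 to 0.05 are changes in the fraction of target variance explained.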

Towards Neuromorphic Compression based Neural Sensing for Next-Generation Wireless Implantable Brain Machine Interface

Dec 15, 2023

Abstract: This work introduces a neuromorphic-compression-based neural sensing architecture with an address-event-representation-inspired readout protocol for massively parallel, next-gen wireless iBMI. The architectural trade-offs and implications of the proposed method are quantitatively analyzed in terms of compression ratio and spike information preservation. For the latter, we use metrics such as root-mean-square error and correlation coefficient between the original and recovered signal to assess the effect of neuromorphic compression on spike shape. Furthermore, we use accuracy, sensitivity, and false detection rate to understand the effect of compression on downstream iBMI tasks, specifically, spike detection. We demonstrate that a data compression ratio of $50-100$ can be achieved, $5-18\times$ more than prior work, by selective transmission of event pulses corresponding to neural spikes. We obtain a correlation coefficient of $\approx0.9$ and a spike detection accuracy of over $90\%$ for the worst-case analysis involving a $10K$-channel simulated recording and for the typical analysis using $100$- or $384$-channel real neural recordings. We also analyze the collision handling capability and scalability of the proposed pipeline.
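The signal-fidelity and compression metrics named above can be sketched in a few lines of Python. The root-mean-square error and Pearson correlation follow their standard definitions. The `compression_ratio` helper uses one assumed definition (raw sample bits divided by transmitted event bits), and its arguments and the numbers in the example are hypothetical, not taken from the paper.

```python
import math


def rmse(original, recovered):
    """Root-mean-square error between two equal-length signals."""
    n = len(original)
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(original, recovered)) / n)


def correlation(original, recovered):
    """Pearson correlation coefficient between two equal-length signals."""
    n = len(original)
    mean_o = sum(original) / n
    mean_r = sum(recovered) / n
    cov = sum((x - mean_o) * (y - mean_r) for x, y in zip(original, recovered))
    var_o = sum((x - mean_o) ** 2 for x in original)
    var_r = sum((y - mean_r) ** 2 for y in recovered)
    return cov / math.sqrt(var_o * var_r)


def compression_ratio(n_samples, bits_per_sample, n_events, bits_per_event):
    """Assumed definition: raw data volume over transmitted event volume."""
    return (n_samples * bits_per_sample) / (n_events * bits_per_event)


# Hypothetical example: 1000 ten-bit samples sent as 20 five-bit events.
ratio = compression_ratio(1000, 10, 20, 5)
```

With these made-up numbers the ratio is 100, the upper end of the range quoted above. Transmitting only the event pulses that correspond to spikes lowers `n_events`, which is how selective transmission raises the ratio.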
