Jyotibdha Acharya

A Hybrid Neuromorphic Object Tracking and Classification Framework for Real-time Systems

Jul 21, 2020

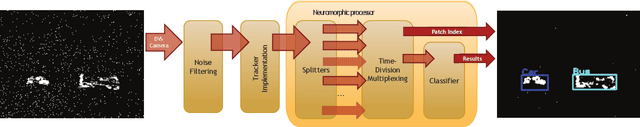

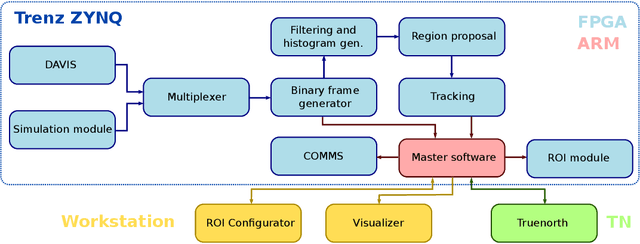

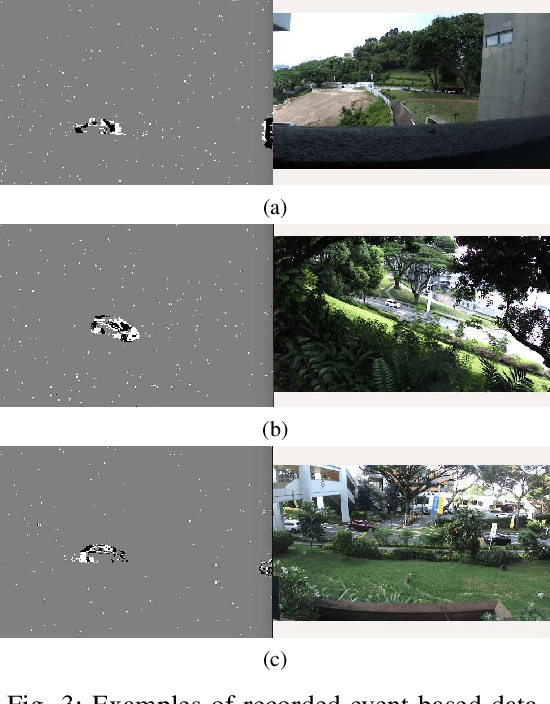

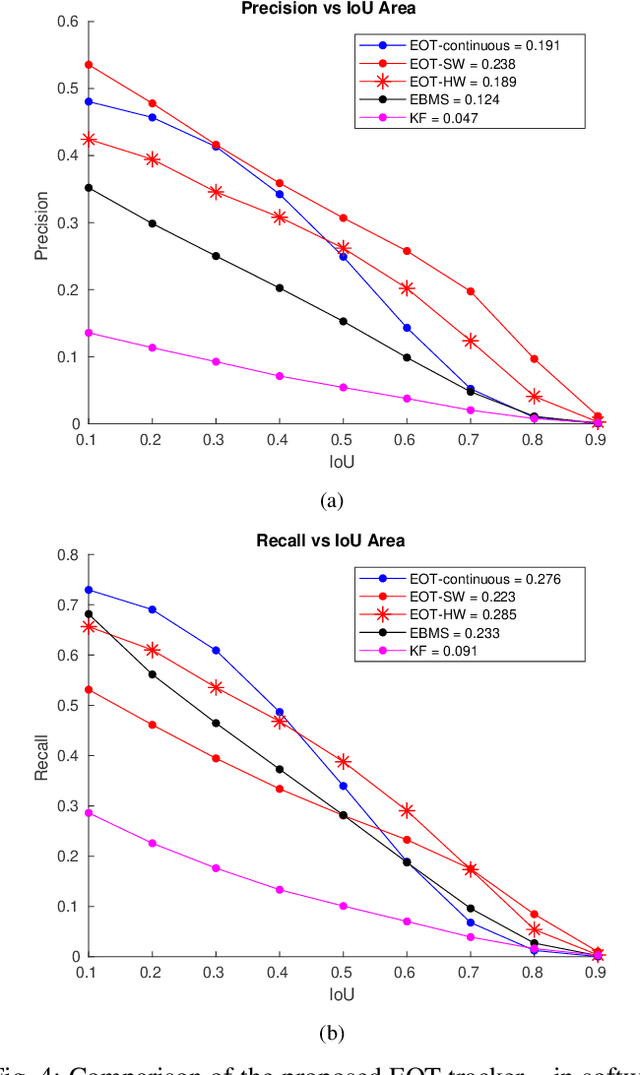

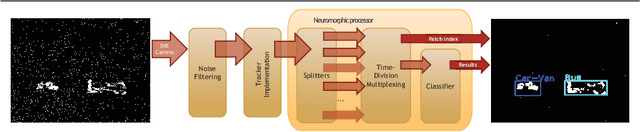

Abstract:Deep learning inference that needs to largely take place on the 'edge' is a highly computational and memory intensive workload, making it intractable for low-power, embedded platforms such as mobile nodes and remote security applications. To address this challenge, this paper proposes a real-time, hybrid neuromorphic framework for object tracking and classification using event-based cameras that possess properties such as low-power consumption (5-14 mW) and high dynamic range (120 dB). Nonetheless, unlike traditional approaches of using event-by-event processing, this work uses a mixed frame and event approach to get energy savings with high performance. Using a frame-based region proposal method based on the density of foreground events, a hardware-friendly object tracking scheme is implemented using the apparent object velocity while tackling occlusion scenarios. The object track input is converted back to spikes for TrueNorth classification via the energy-efficient deep network (EEDN) pipeline. Using originally collected datasets, we train the TrueNorth model on the hardware track outputs, instead of using ground truth object locations as commonly done, and demonstrate the ability of our system to handle practical surveillance scenarios. As an optional paradigm, to exploit the low latency and asynchronous nature of neuromorphic vision sensors (NVS), we also propose a continuous-time tracker with C++ implementation where each event is processed individually. Thereby, we extensively compare the proposed methodologies to state-of-the-art event-based and frame-based methods for object tracking and classification, and demonstrate the use case of our neuromorphic approach for real-time and embedded applications without sacrificing performance. Finally, we also showcase the efficacy of the proposed system to a standard RGB camera setup when evaluated over several hours of traffic recordings.

Deep Neural Network for Respiratory Sound Classification in Wearable Devices Enabled by Patient Specific Model Tuning

Apr 16, 2020

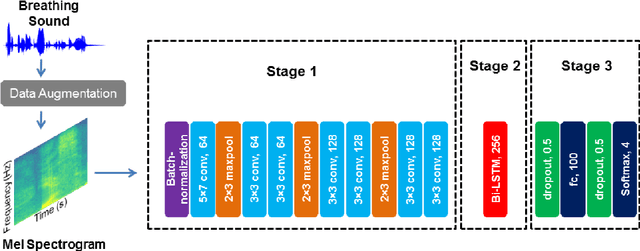

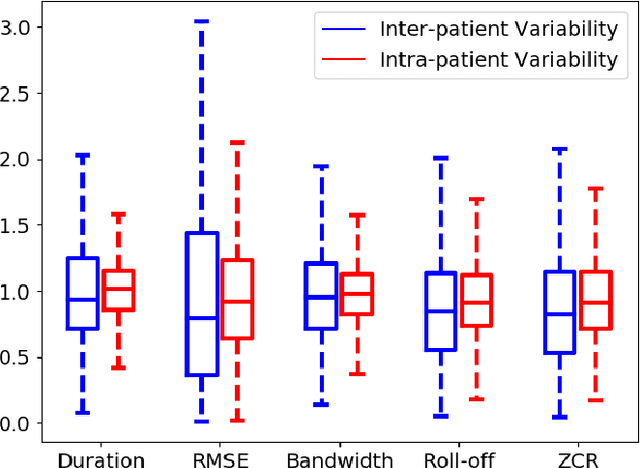

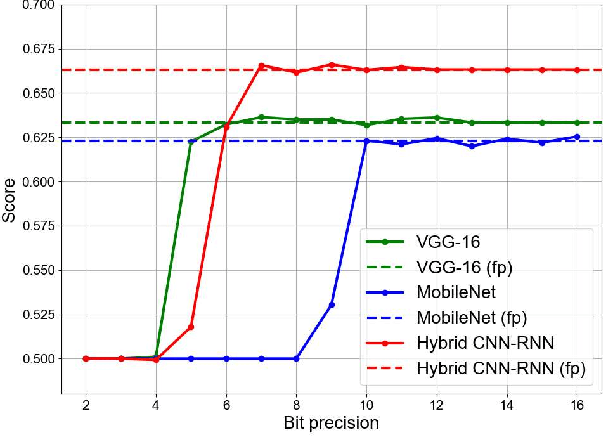

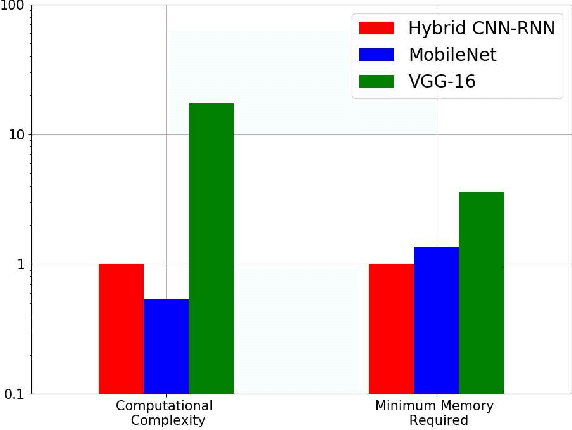

Abstract:The primary objective of this paper is to build classification models and strategies to identify breathing sound anomalies (wheeze, crackle) for automated diagnosis of respiratory and pulmonary diseases. In this work we propose a deep CNN-RNN model that classifies respiratory sounds based on Mel-spectrograms. We also implement a patient specific model tuning strategy that first screens respiratory patients and then builds patient specific classification models using limited patient data for reliable anomaly detection. Moreover, we devise a local log quantization strategy for model weights to reduce the memory footprint for deployment in memory constrained systems such as wearable devices. The proposed hybrid CNN-RNN model achieves a score of 66.31% on four-class classification of breathing cycles for ICBHI'17 scientific challenge respiratory sound database. When the model is re-trained with patient specific data, it produces a score of 71.81% for leave-one-out validation. The proposed weight quantization technique achieves ~4X reduction in total memory cost without loss of performance. The main contribution of the paper is as follows: Firstly, the proposed model is able to achieve state of the art score on the ICBHI'17 dataset. Secondly, deep learning models are shown to successfully learn domain specific knowledge when pre-trained with breathing data and produce significantly superior performance compared to generalized models. Finally, local log quantization of trained weights is shown to be able to reduce the memory requirement significantly. This type of patient-specific re-training strategy can be very useful in developing reliable long-term automated patient monitoring systems particularly in wearable healthcare solutions.

Is my Neural Network Neuromorphic? Taxonomy, Recent Trends and Future Directions in Neuromorphic Engineering

Feb 27, 2020

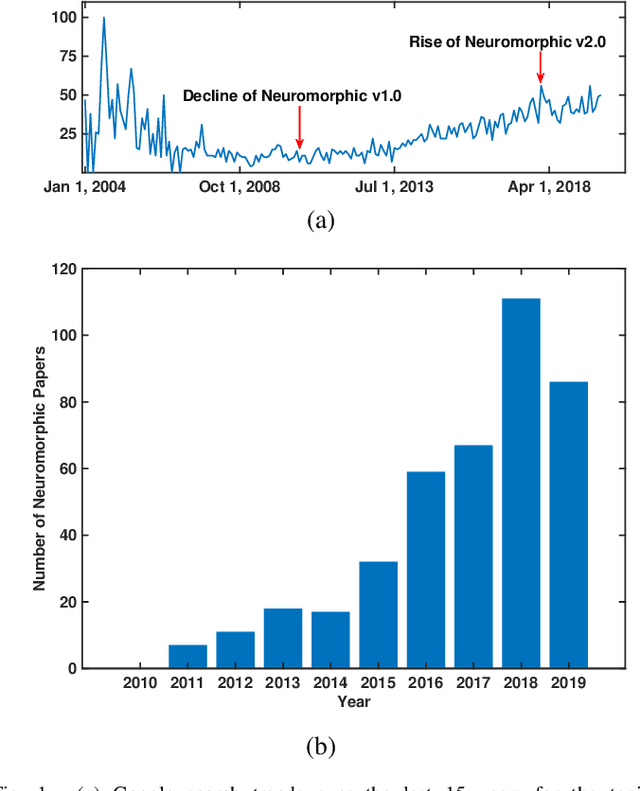

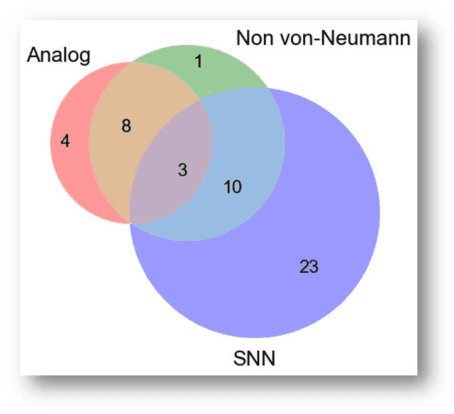

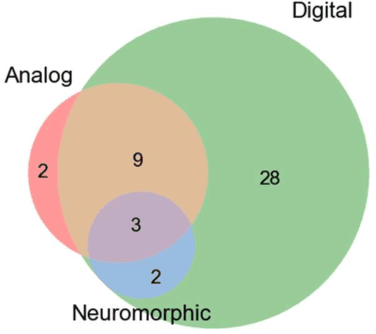

Abstract:In this paper, we review recent work published over the last 3 years under the umbrella of Neuromorphic engineering to analyze what are the common features among such systems. We see that there is no clear consensus but each system has one or more of the following features:(1) Analog computing (2) Non vonNeumann Architecture and low-precision digital processing (3) Spiking Neural Networks (SNN) with components closely related to biology. We compare recent machine learning accelerator chips to show that indeed analog processing and reduced bit precision architectures have best throughput, energy and area efficiencies. However, pure digital architectures can also achieve quite high efficiencies by just adopting a non von-Neumann architecture. Given the design automation tools for digital hardware design, it raises a question on the likelihood of adoption of analog processing in the near future for industrial designs. Next, we argue about the importance of defining standards and choosing proper benchmarks for the progress of neuromorphic system designs and propose some desired characteristics of such benchmarks. Finally, we show brain-machine interfaces as a potential task that fulfils all the criteria of such benchmarks.

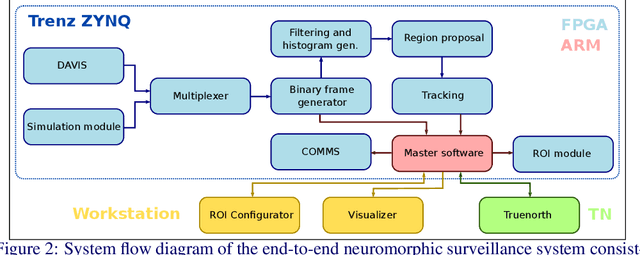

A low-power end-to-end hybrid neuromorphic framework for surveillance applications

Oct 25, 2019

Abstract:With the success of deep learning, object recognition systems that can be deployed for real-world applications are becoming commonplace. However, inference that needs to largely take place on the `edge' (not processed on servers), is a highly computational and memory intensive workload, making it intractable for low-power mobile nodes and remote security applications. To address this challenge, this paper proposes a low-power (5W) end-to-end neuromorphic framework for object tracking and classification using event-based cameras that possess desirable properties such as low power consumption (5-14 mW) and high dynamic range (120 dB). Nonetheless, unlike traditional approaches of using event-by-event processing, this work uses a mixed frame and event approach to get energy savings with high performance. Using a frame-based region proposal method based on the density of foreground events, a hardware-friendly object tracking is implemented using the apparent object velocity while tackling occlusion scenarios. For low-power classification of the tracked objects, the event camera is interfaced to IBM TrueNorth, which is time-multiplexed to tackle up to eight instances for a traffic monitoring application. The frame-based object track input is converted back to spikes for Truenorth classification via the energy efficient deep network (EEDN) pipeline. Using originally collected datasets, we train the TrueNorth model on the hardware track outputs, instead of using ground truth object locations as commonly done, and demonstrate the efficacy of our system to handle practical surveillance scenarios. Finally, we compare the proposed methodologies to state-of-the-art event-based systems for object tracking and classification, and demonstrate the use case of our neuromorphic approach for low-power applications without sacrificing on performance.

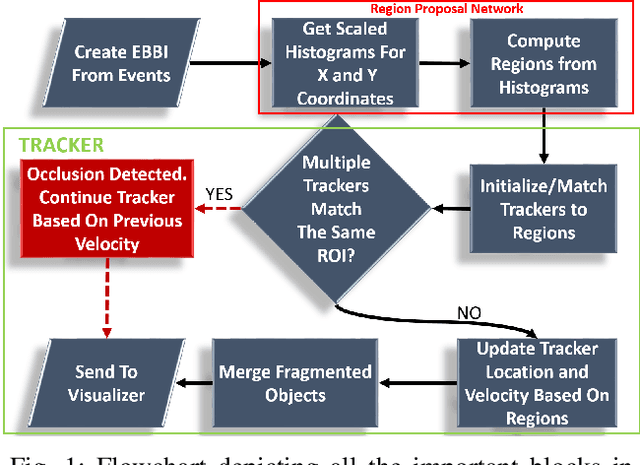

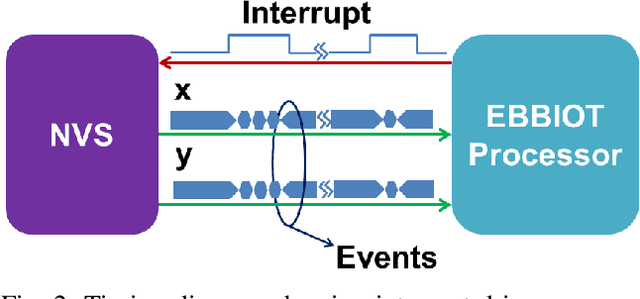

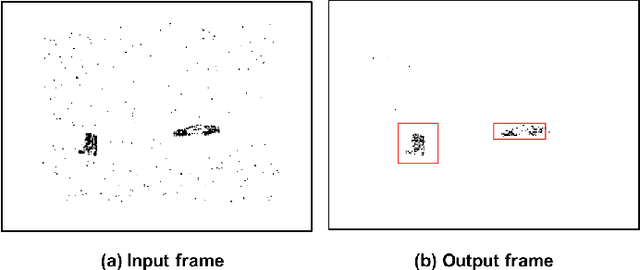

EBBIOT: A Low-complexity Tracking Algorithm for Surveillance in IoVT Using Stationary Neuromorphic Vision Sensors

Oct 04, 2019

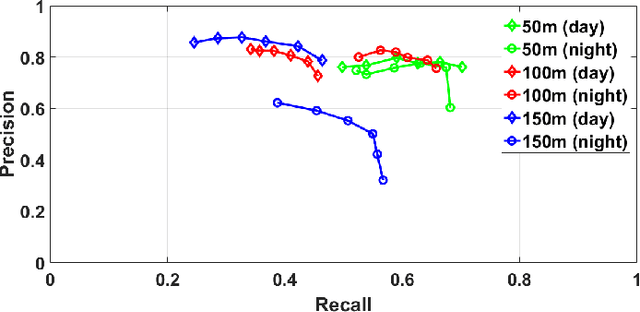

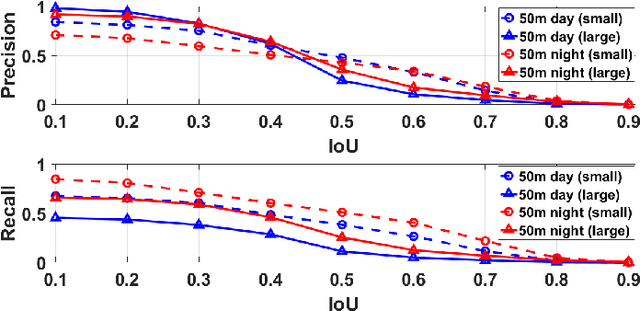

Abstract:In this paper, we present EBBIOT-a novel paradigm for object tracking using stationary neuromorphic vision sensors in low-power sensor nodes for the Internet of Video Things (IoVT). Different from fully event based tracking or fully frame based approaches, we propose a mixed approach where we create event-based binary images (EBBI) that can use memory efficient noise filtering algorithms. We exploit the motion triggering aspect of neuromorphic sensors to generate region proposals based on event density counts with >1000X less memory and computes compared to frame based approaches. We also propose a simple overlap based tracker (OT) with prediction based handling of occlusion. Our overall approach requires 7X less memory and 3X less computations than conventional noise filtering and event based mean shift (EBMS) tracking. Finally, we show that our approach results in significantly higher precision and recall compared to EBMS approach as well as Kalman Filter tracker when evaluated over 1.1 hours of traffic recordings at two different locations.

Spiking Neural Network based Region Proposal Networks for Neuromorphic Vision Sensors

Feb 26, 2019

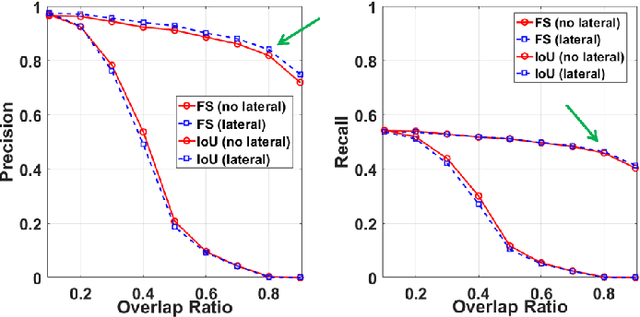

Abstract:This paper presents a three layer spiking neural network based region proposal network operating on data generated by neuromorphic vision sensors. The proposed architecture consists of refractory, convolution and clustering layers designed with bio-realistic leaky integrate and fire (LIF) neurons and synapses. The proposed algorithm is tested on traffic scene recordings from a DAVIS sensor setup. The performance of the region proposal network has been compared with event based mean shift algorithm and is found to be far superior (~50% better) in recall for similar precision (~85%). Computational and memory complexity of the proposed method are also shown to be similar to that of event based mean shift

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge