Sumon Kumar Bose

A 51.3 TOPS/W, 134.4 GOPS In-memory Binary Image Filtering in 65nm CMOS

Jul 29, 2021

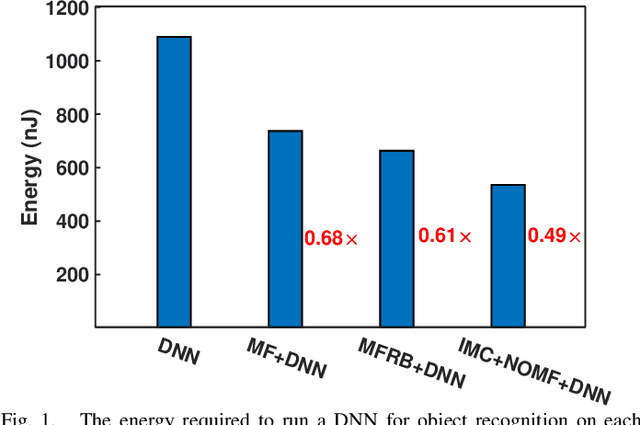

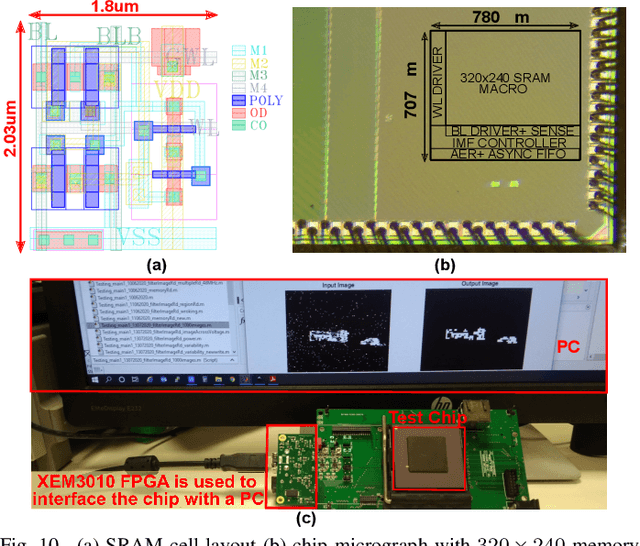

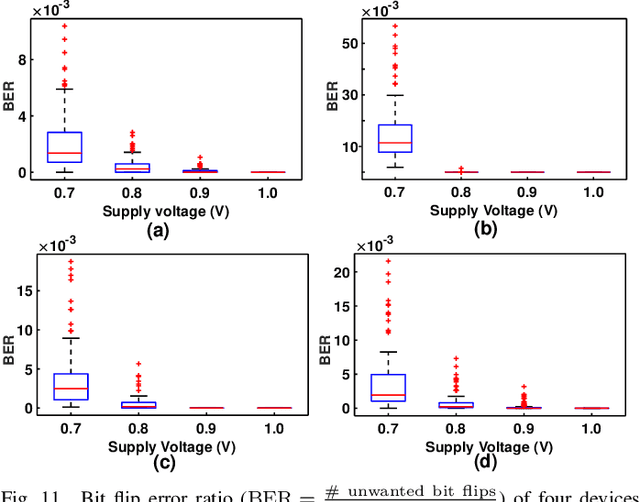

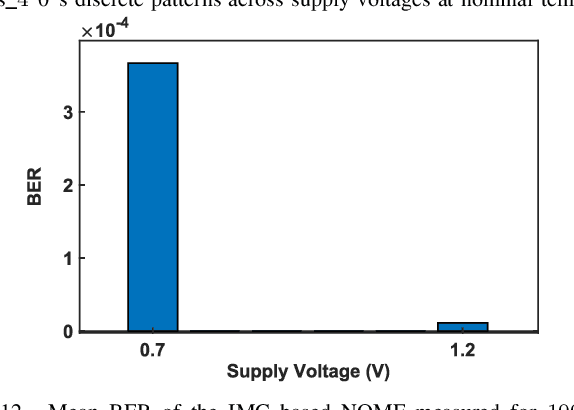

Abstract: Neuromorphic vision sensors (NVS) can enable energy savings due to their event-driven operation, which exploits the temporal redundancy in video streams from a stationary camera. However, noise-driven events lead to false triggering of the object recognition processor. Image denoising operations require memory-intensive processing, leading to a bottleneck in energy and latency. In this paper, we present in-memory filtering (IMF), a 6T-SRAM in-memory computing based image denoising scheme for event-based binary image (EBBI) frames from an NVS. We propose a non-overlap median filter (NOMF) for image denoising. An in-memory computing framework enables hardware implementation of NOMF by leveraging the inherent read disturb phenomenon of 6T-SRAM. To demonstrate the energy savings and effectiveness of the algorithm, we fabricated the proposed architecture in a 65nm CMOS process. Compared to a fully digital implementation, IMF enables > 70x energy savings and a > 3x improvement in processing time when tested with video recordings from a DAVIS sensor, and achieves a peak throughput of 134.4 GOPS. Furthermore, the peak energy efficiency of the NOMF is 51.3 TOPS/W, comparable with state-of-the-art in-memory processors. We also show that the images obtained by NOMF provide comparable accuracy in tracking and classification applications when compared with images obtained by conventional median filtering.
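The abstract does not spell out how NOMF works, so the following is only a software sketch of one plausible reading: the binary frame is tiled into non-overlapping patches, and every pixel in a patch is set to the patch median, which for 0/1 data is a majority vote. The function name `nomf`, the default patch size of 3, and the tie-breaking rule (ties go to 0) are assumptions made for illustration, not details taken from the paper, and the sketch says nothing about the 6T-SRAM read-disturb implementation.

```python
def nomf(image, patch=3):
    """Sketch of a non-overlap median filter on a binary image.

    `image` is a list of rows, each a list of 0/1 pixels.
    Assumption: each non-overlapping patch is replaced by its median,
    which for binary data equals a majority vote (ties resolve to 0).
    """
    rows, cols = len(image), len(image[0])
    out = [[0] * cols for _ in range(rows)]
    for r0 in range(0, rows, patch):
        for c0 in range(0, cols, patch):
            # Collect the coordinates of this patch, clipped at the border.
            cells = [
                (r, c)
                for r in range(r0, min(r0 + patch, rows))
                for c in range(c0, min(c0 + patch, cols))
            ]
            ones = sum(image[r][c] for r, c in cells)
            value = 1 if 2 * ones > len(cells) else 0
            for r, c in cells:
                out[r][c] = value
    return out


# Usage: a 6x6 frame with one isolated noise pixel and one solid
# 3x3 object that has a single missing pixel.
frame = [[0] * 6 for _ in range(6)]
frame[1][1] = 1                      # noise event
for r in range(3, 6):
    for c in range(3, 6):
        frame[r][c] = 1              # object
frame[4][4] = 0                      # hole inside the object
filtered = nomf(frame)
```

Because patches do not overlap, each pixel is read once per frame, whereas a sliding-window median reads each pixel once per window that covers it. That reduction in memory accesses is the likely motivation for the non-overlap variant, though the abstract does not state it explicitly.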

ADIC: Anomaly Detection Integrated Circuit in 65nm CMOS utilizing Approximate Computing

Aug 21, 2020

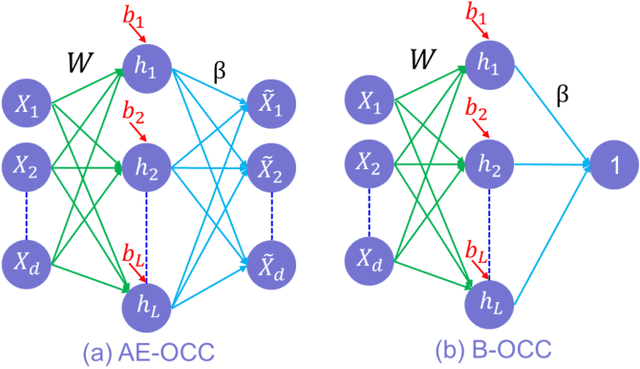

Abstract: In this paper, we present a low-power anomaly detection integrated circuit (ADIC) based on a one-class classifier (OCC) neural network. The ADIC achieves low-power operation through a combination of (a) careful choice of algorithm for online learning and (b) approximate computing techniques to lower average energy. In particular, the online pseudoinverse update method (OPIUM) is used to train a randomized neural network for quick and resource-efficient learning. An additional 42% energy saving can be achieved when a lighter version of the OPIUM method is used for training with the same number of data samples, with no significant compromise on the quality of inference. Instead of a single classifier with a large number of neurons, an ensemble of K base learners is chosen to reduce learning memory by a factor of K. This also enables approximate computing by dynamically varying the neural network size based on anomaly detection. Fabricated in 65nm CMOS, the ADIC has K = 7 base learners (BL) with 32 neurons in each BL and dissipates 11.87pJ/OP and 3.35pJ/OP during learning and inference, respectively, at Vdd = 0.75V when all 7 BLs are enabled. Further, evaluated on the NASA bearing dataset, approximately 80% of the chip can be shut down for 99% of the lifetime, leading to an energy efficiency of 0.48pJ/OP, an 18.5 times reduction over full-precision computing running at Vdd = 1.2V throughout the lifetime.
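The factor-of-K memory reduction can be motivated with a short calculation. This is a sketch under an assumption not stated in the abstract: that the learning memory of a pseudoinverse-based learner is dominated by a square matrix whose side equals the number of hidden neurons. Here $L$ denotes the total neuron count and $M$ the number of stored matrix entries; both symbols are introduced only for this illustration.

```latex
% Single classifier with L hidden neurons: one L x L matrix.
M_{\text{single}} = L^{2}

% Ensemble of K base learners with L/K neurons each: K matrices of size (L/K) x (L/K).
M_{\text{ensemble}} = K \left(\frac{L}{K}\right)^{2} = \frac{L^{2}}{K}

% Ratio, and the numbers for the reported configuration (K = 7, 32 neurons per BL, L = 224).
\frac{M_{\text{single}}}{M_{\text{ensemble}}} = K,
\qquad
\frac{224^{2}}{7 \cdot 32^{2}} = \frac{50176}{7168} = 7
```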

Is my Neural Network Neuromorphic? Taxonomy, Recent Trends and Future Directions in Neuromorphic Engineering

Feb 27, 2020

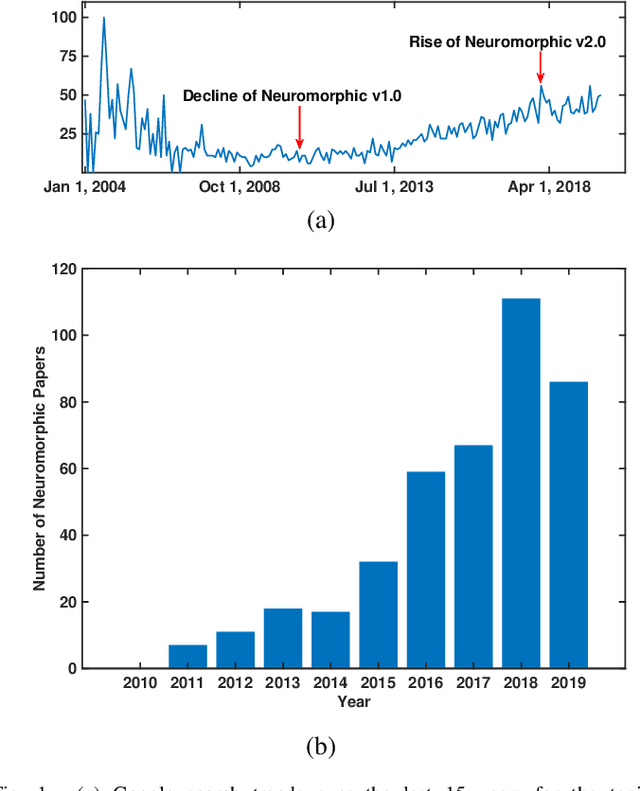

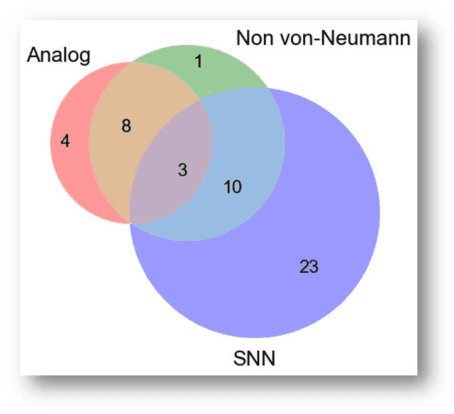

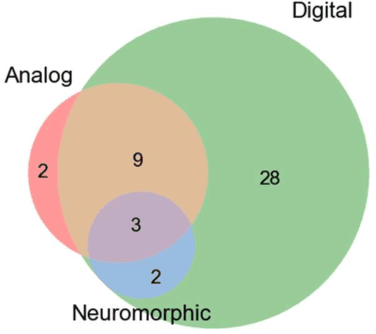

Abstract: In this paper, we review recent work published over the last 3 years under the umbrella of neuromorphic engineering to analyze the common features among such systems. We see that there is no clear consensus, but each system has one or more of the following features: (1) analog computing, (2) non-von Neumann architecture and low-precision digital processing, (3) spiking neural networks (SNN) with components closely related to biology. We compare recent machine learning accelerator chips to show that analog processing and reduced bit precision architectures indeed have the best throughput, energy and area efficiencies. However, pure digital architectures can also achieve quite high efficiencies just by adopting a non-von Neumann architecture. Given the design automation tools available for digital hardware design, this raises a question about the likelihood of adoption of analog processing in the near future for industrial designs. Next, we argue for the importance of defining standards and choosing proper benchmarks for the progress of neuromorphic system designs, and propose some desired characteristics of such benchmarks. Finally, we show brain-machine interfaces as a potential task that fulfils all the criteria of such benchmarks.

ADEPOS: A Novel Approximate Computing Framework for Anomaly Detection Systems and its Implementation in 65nm CMOS

Dec 04, 2019

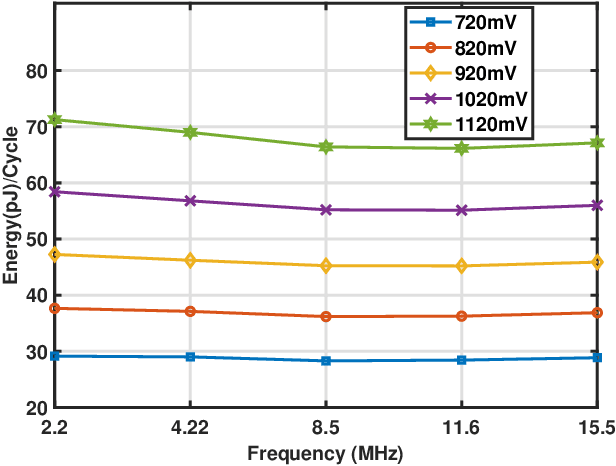

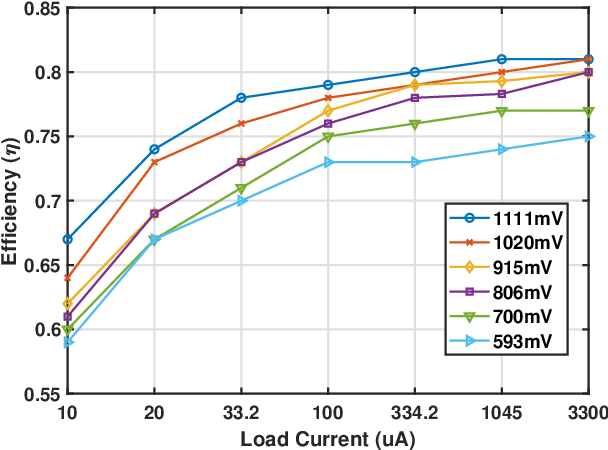

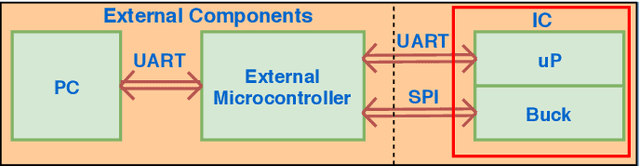

Abstract: To overcome the energy and bandwidth limitations of traditional IoT systems, edge computing, or information extraction at the sensor node, has become popular. This makes it important to create very low energy information extraction or pattern recognition systems. In this paper, we present an approximate computing method to reduce the computation energy of a specific type of IoT system used for anomaly detection (e.g. in predictive maintenance, epileptic seizure detection, etc.). Termed Anomaly Detection Based Power Savings (ADEPOS), our proposed method uses low-precision computing and low-complexity neural networks at the beginning, when it is easy to distinguish healthy data. However, on the detection of anomalies, the complexity of the network and the computing precision are adaptively increased for accurate predictions. We show that ensemble approaches are well suited for adaptively changing network size. To validate our proposed scheme, a chip has been fabricated in a UMC 65nm process that includes an MSP430 microprocessor along with an on-chip switching mode DC-DC converter for dynamic voltage and frequency scaling. Using the NASA bearing dataset for machine health monitoring, we show that with ADEPOS we can achieve an 8.95X saving of energy over the lifetime without losing any detection accuracy. The energy savings are obtained by reducing the execution time of the neural network on the microprocessor.

* 14 pages
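The adaptive idea in the ADEPOS abstract above, starting with a small network and growing it only when data looks anomalous, can be sketched roughly as follows. This is a hypothetical illustration, not the authors' algorithm: the function name `adepos_style_decision`, the doubling schedule, the mean-score decision rule, and the threshold are all assumptions, and the sketch models only ensemble size, not precision or voltage scaling.

```python
def adepos_style_decision(learner_scores, threshold, k_start=1):
    """Escalate the number of active base learners only when needed.

    `learner_scores` holds one anomaly score per base learner for a
    single input sample (higher means more anomalous).
    Returns (is_anomaly, learners_used).
    Assumptions: scores are averaged over the active learners, and the
    active count doubles on each suspicious result, capped at the total.
    """
    k_total = len(learner_scores)
    k = k_start
    while True:
        mean_score = sum(learner_scores[:k]) / k
        if mean_score <= threshold:
            return False, k          # looks healthy with the cheap model
        if k == k_total:
            return True, k           # anomaly confirmed by the full ensemble
        k = min(2 * k, k_total)      # suspicious: enable more learners


# Usage with 7 base learners and a hypothetical threshold of 0.5.
healthy = adepos_style_decision([0.1] * 7, threshold=0.5)
false_alarm = adepos_style_decision([0.9, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0], threshold=0.5)
faulty = adepos_style_decision([0.9] * 7, threshold=0.5)
```

In this sketch a healthy sample is resolved by a single learner, a spurious high score from one learner is overruled after a second learner is enabled, and only a persistent anomaly engages all learners. Under the assumption that most samples are healthy, most of the ensemble stays idle for most of the lifetime.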

A Stacked Autoencoder Neural Network based Automated Feature Extraction Method for Anomaly detection in On-line Condition Monitoring

Oct 19, 2018

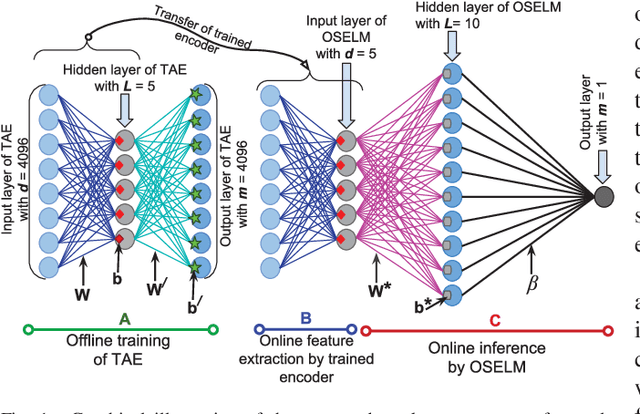

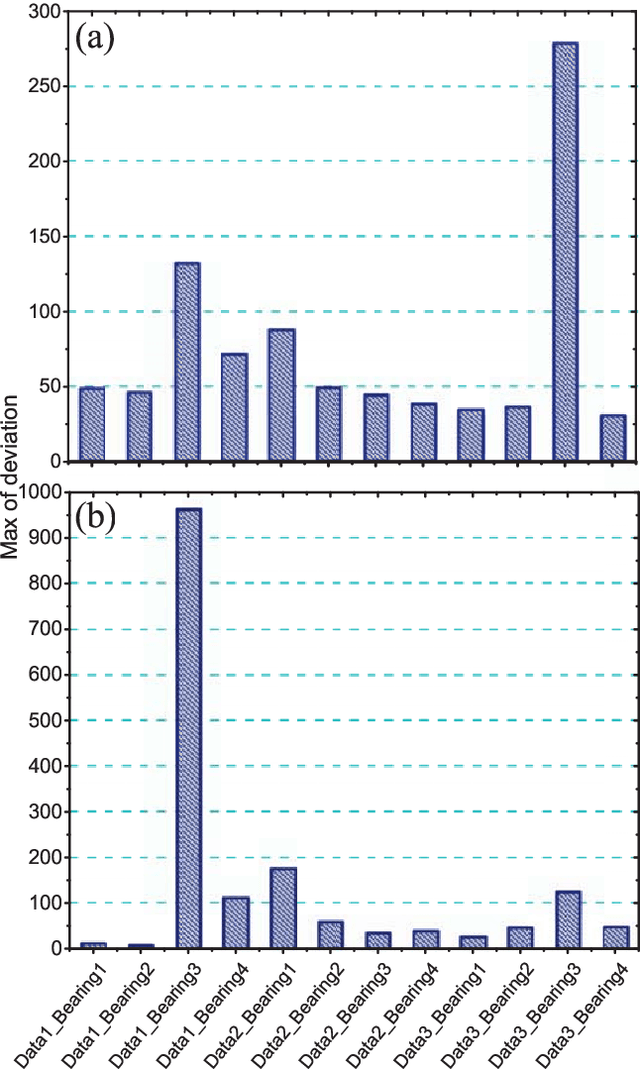

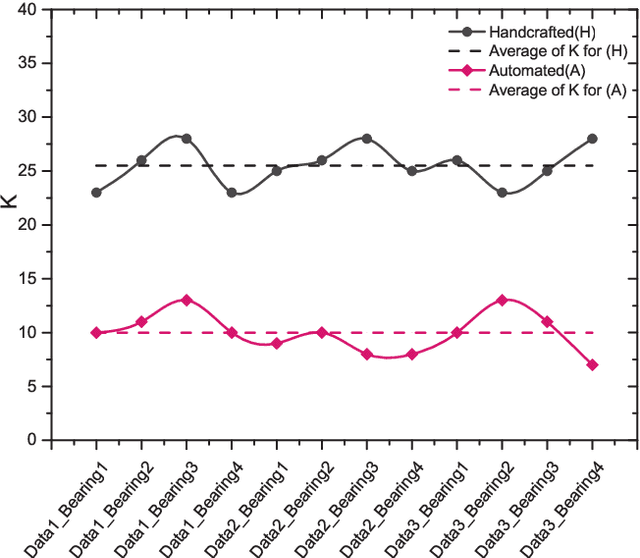

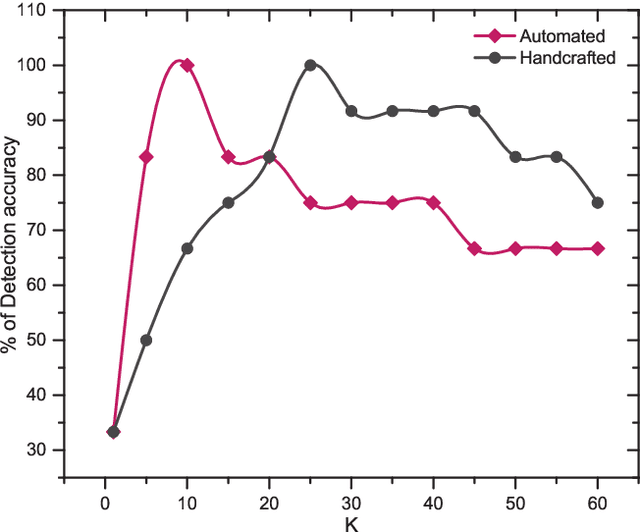

Abstract: Condition monitoring is one of the routine tasks in all major process industries. Mechanical parts such as motors, gears and bearings are the major components of a process industry, and any fault in them may cause a total shutdown of the whole process, which may result in serious losses. Therefore, it is crucial to predict approaching defects before they occur. Several methods exist for this purpose, and much research is being carried out on better and more efficient models. However, most of them are based on the processing of raw sensor signals, which is tedious and expensive. Recently, there has been an increase in feature-based condition monitoring, where only the useful features are extracted from the raw signals and interpreted for the prediction of the fault. Most of these are handcrafted features, obtained manually based on the nature of the raw data. This requires prior knowledge of the nature of the data and related processes, which limits the feature extraction process. Recent developments in autoencoder-based feature extraction provide an alternative to the traditional handcrafted approaches, but they have mostly been confined to the areas of image and audio processing. In this work, we have developed an automated feature extraction method for on-line condition monitoring based on a stack of a traditional autoencoder and an on-line sequential extreme learning machine (OSELM) network. The performance of this method is comparable to that of the traditional feature extraction approaches. The method can achieve 100% detection accuracy for determining the bearing health states of the NASA bearing dataset. The simple design of this method is promising for easy hardware implementation of Internet of Things (IoT) based prognostics solutions.
