Mohendra Roy

Artificial Intelligence in Material Engineering: A review on applications of AI in Material Engineering

Sep 15, 2022

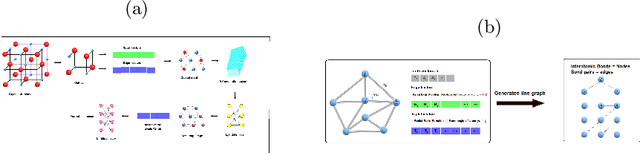

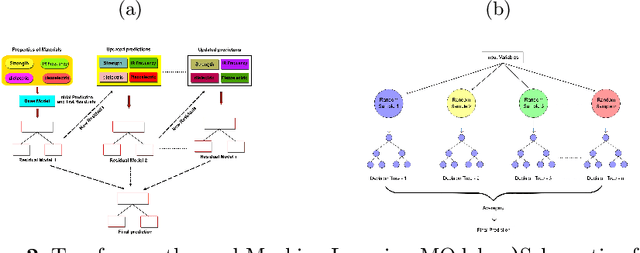

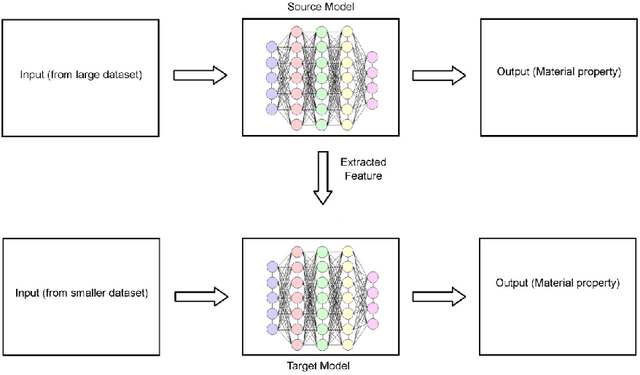

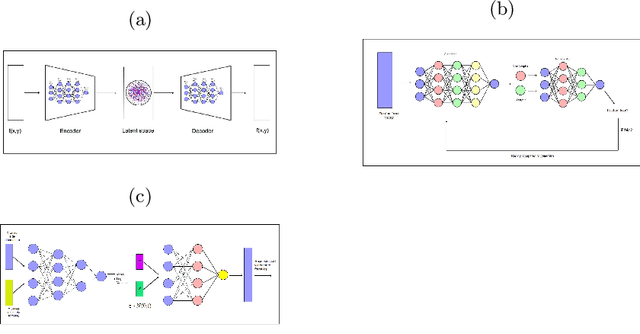

Abstract: Recently, artificial intelligence (AI) has been used extensively in the field of material engineering. This can be attributed to the development of high-performance computing, which makes it feasible to test deep learning models with large numbers of parameters. In this article, we review some of the latest developments in the applications of AI in material engineering.

A Hybrid CNN-LSTM model for Video Deepfake Detection by Leveraging Optical Flow Features

Jul 28, 2022

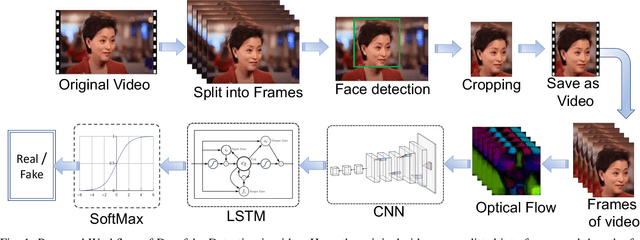

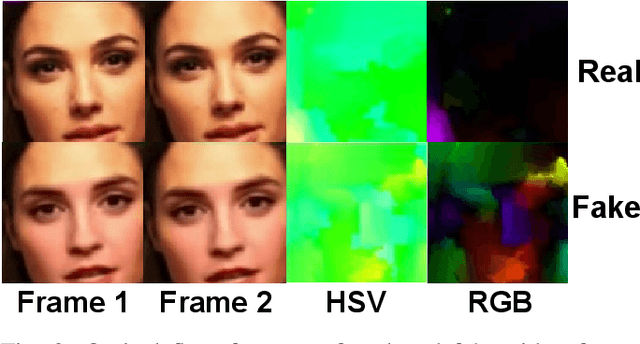

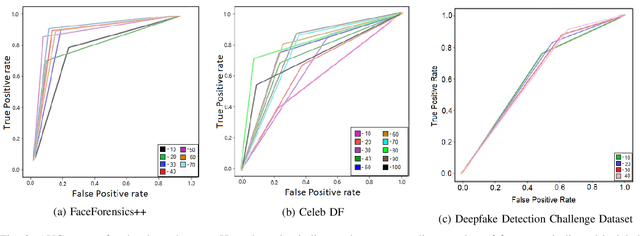

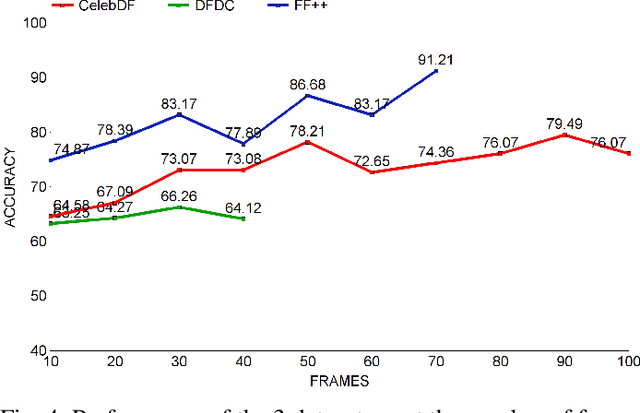

Abstract: Deepfakes are synthesized digital media created to produce ultra-realistic fake videos that trick the viewer. Deep generative algorithms, such as Generative Adversarial Networks (GANs), are widely used to accomplish such tasks. This approach synthesizes pseudo-realistic content that is very difficult to distinguish with traditional detection methods. In most cases, Convolutional Neural Network (CNN) based discriminators are used to detect such synthesized media. However, they focus primarily on the spatial attributes of individual video frames and thereby fail to learn the temporal information in their inter-frame relations. In this paper, we use an optical-flow-based feature extraction approach to extract the temporal features, which are then fed to a hybrid model for classification. This hybrid model combines CNN and recurrent neural network (RNN) architectures. The hybrid model performs effectively on open-source datasets such as DFDC, FF++, and Celeb-DF. The proposed method achieves accuracies of 66.26%, 91.21%, and 79.49% on DFDC, FF++, and Celeb-DF, respectively, with a much reduced sample size of approximately 100 samples (frames). This promises earlier detection of fake content compared with existing modalities.

Modelling Social Context for Fake News Detection: A Graph Neural Network Based Approach

Jul 27, 2022

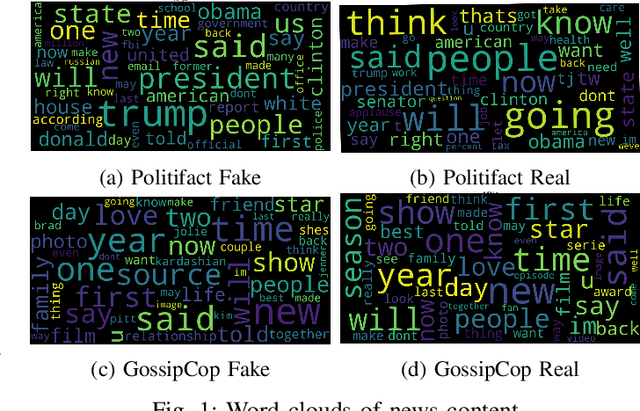

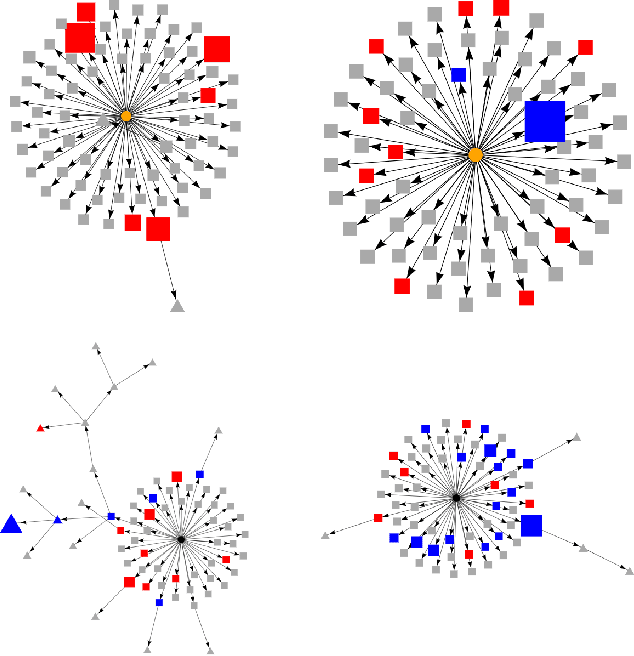

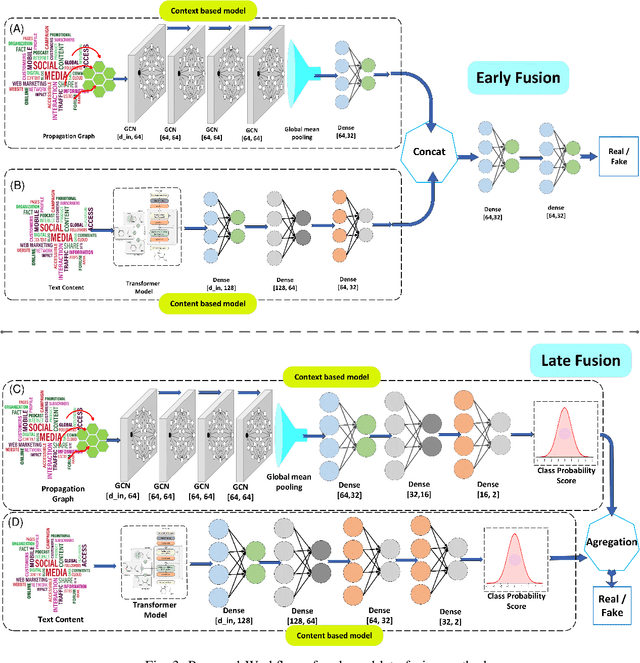

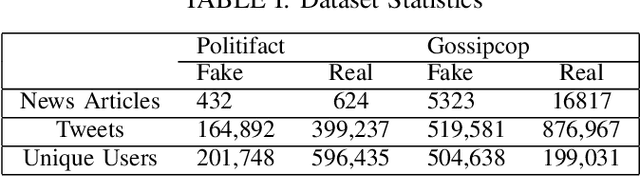

Abstract: Detection of fake news is crucial to ensure the authenticity of information and maintain the news ecosystem's reliability. Recently, fake news content has increased due to the proliferation of social media and fake content generation techniques such as deepfakes. The majority of existing fake news detection methods focus on content-based approaches. However, most of these techniques fail to deal with ultra-realistic synthesized media produced by generative models. Our recent studies find that the propagation characteristics of authentic and fake news are distinguishable, irrespective of their modalities. In this regard, we have investigated auxiliary information based on social context to detect fake news. This paper analyzes the social context of fake news detection with a hybrid graph neural network based approach. The hybrid model integrates a graph neural network on the propagation of news with a Bidirectional Encoder Representations from Transformers (BERT) model on news content to learn the text features. The proposed approach thus learns both the content and the context features and is hence able to outperform the baseline models, with an F1 score of 0.91 on the PolitiFact dataset and 0.93 on the GossipCop dataset.

Machine learning based lens-free imaging technique for field-portable cytometry

Mar 03, 2022

Abstract: Lens-free Shadow Imaging Technique (LSIT) is a well-established technique for the characterization of microparticles and biological cells. Owing to its simplicity and cost-effectiveness, various low-cost solutions have evolved, such as automatic analysis of complete blood count (CBC), cell viability, 2D cell morphology, and 3D cell tomography. The auto-characterization algorithm developed so far for this custom-developed LSIT cytometer was based on hand-crafted features of the cell diffraction patterns, determined from our empirical findings on thousands of samples of individual cell types. This limits the system when a new cell type is to be introduced for auto classification or characterization. Further, its performance suffers from poor image (cell diffraction pattern) signatures caused by small signals or background noise. In this work, we address these issues with an artificial-intelligence-powered automatic signal enhancement scheme, such as a denoising autoencoder, and an adaptive cell characterization technique based on transfer learning in deep neural networks. Our proposed method shows improved accuracy (>98%) along with a signal enhancement of >5 dB for most cell types, such as Red Blood Cells (RBC) and White Blood Cells (WBC). Furthermore, the model adapts to new types of samples within a few learning iterations and successfully classifies the newly introduced sample along with the existing sample types.

* Published in Biosensors Journal

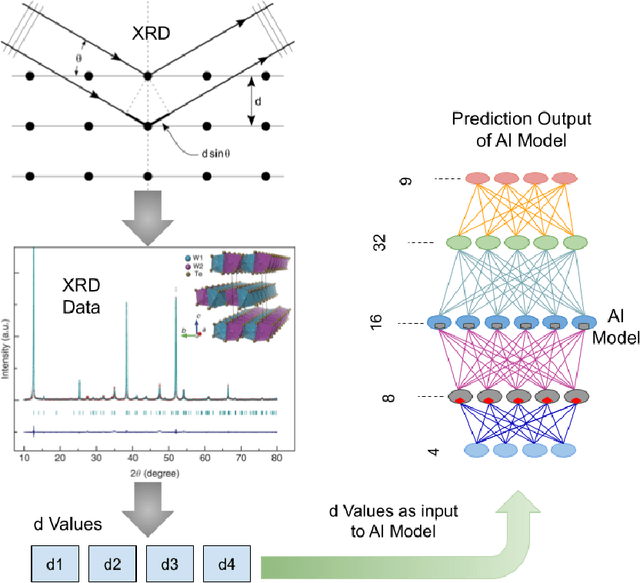

Artificial Intelligence Powered Material Search Engine

Jan 19, 2022

Abstract: Many data-driven applications in material science have been made possible by recent breakthroughs in artificial intelligence (AI). The use of AI in material engineering is becoming more viable as the amount of material data, such as X-ray diffraction, spectroscopy, and microscopy data, grows. In this work, we report a material search engine that uses the interatomic spacing (d value) from X-ray diffraction to provide material information. We investigated various techniques for predicting prospective materials from X-ray diffraction data, using the Random Forest, Naive Bayes (Gaussian), and Neural Network algorithms. These algorithms have average accuracies of 88.50%, 100.0%, and 88.89%, respectively. Finally, we combined all these techniques into an ensemble approach to make the prediction more generic. This ensemble method has an accuracy of ~100%. Furthermore, we are designing a graph neural network (GNN)-based architecture to improve interpretability and accuracy. We thus aim to reduce the computational and time complexity of traditional dictionary-based and metadata-based material search engines and to provide a more generic prediction.
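As an illustration of the ensemble idea in this abstract, here is a minimal Python sketch that combines the labels of several classifiers by hard majority voting. The abstract does not state how the three models are combined, so majority voting is an assumption, and `model_a`, `model_b`, `model_c`, the thresholds, and the material labels are hypothetical stand-ins rather than the paper's trained Random Forest, Gaussian Naive Bayes, and neural network.

```python
from collections import Counter


def majority_vote(labels):
    """Return the most frequent label; ties go to the label that appears first."""
    counts = Counter(labels)
    top = max(counts.values())
    return next(label for label in labels if counts[label] == top)


def ensemble_predict(models, d_values):
    """Ask every model for a label and combine the answers by majority vote."""
    return majority_vote([model(d_values) for model in models])


# Hypothetical stand-ins for three trained classifiers. Each maps a list of
# d values to a material label; the thresholds are arbitrary.
def model_a(d_values):
    return "material_A" if min(d_values) < 2.0 else "material_B"


def model_b(d_values):
    return "material_A" if sum(d_values) / len(d_values) < 2.5 else "material_B"


def model_c(d_values):
    return "material_B"


prediction = ensemble_predict([model_a, model_b, model_c], [1.8, 2.4, 3.1])
print(prediction)
```

Hard voting only needs each model's final label, which keeps the sketch independent of any particular library; a weighted or probability-averaging scheme would be an equally plausible reading of "ensemble approach".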

* 4 pages

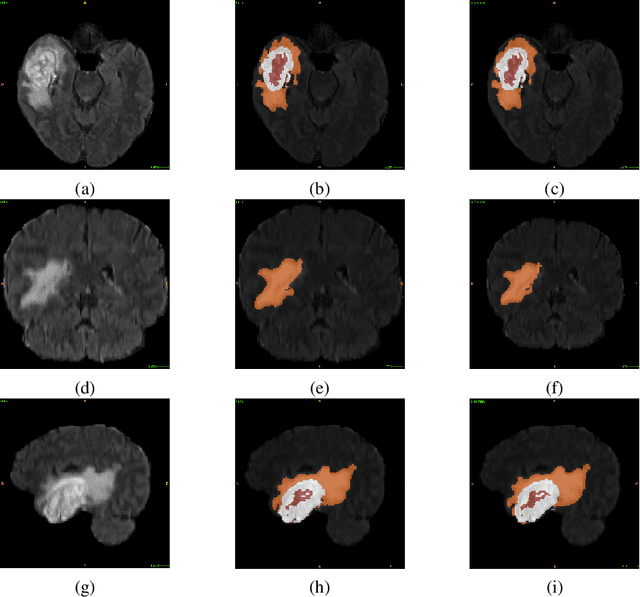

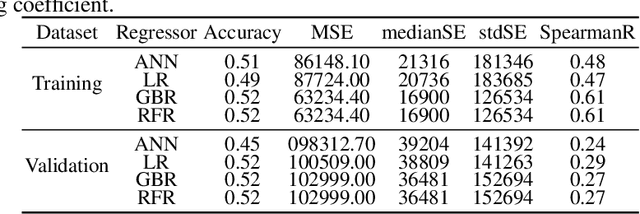

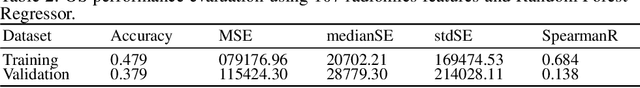

Glioblastoma Multiforme Patient Survival Prediction

Jan 26, 2021

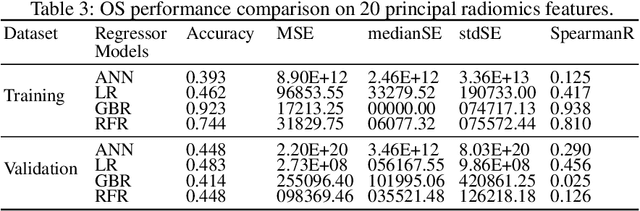

Abstract: Glioblastoma Multiforme is a very aggressive type of brain tumor. Due to spatial and temporal intra-tissue inhomogeneity and the location and extent of the cancer tissue, it is difficult to detect and dissect the tumor regions. In this paper, we propose survival prognosis models using four regressors operating on handcrafted image-based and radiomics features. We hypothesize that the radiomics shape features have the highest correlation with survival prediction. The proposed approaches were assessed on the Brain Tumor Segmentation (BraTS-2020) challenge dataset. The highest accuracy of the random forest regressor with image features was 51.5% on the training dataset and 51.7% on the validation dataset. The gradient boosting regressor with shape features gave accuracies of 91.5% and 62.1% on the training and validation datasets, respectively. This is better than the BraTS 2020 survival prediction challenge winners on the training and validation datasets. Our work shows that handcrafted features exhibit a strong correlation with survival prediction. The consensus-based regressor with gradient boosting and radiomics shape features is the best combination for survival prediction.

* 10 pages, 9 figures

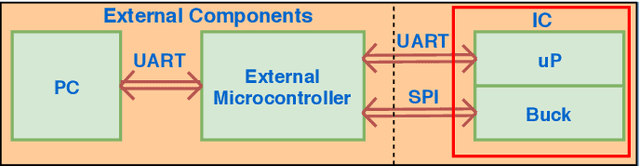

ADIC: Anomaly Detection Integrated Circuit in 65nm CMOS utilizing Approximate Computing

Aug 21, 2020

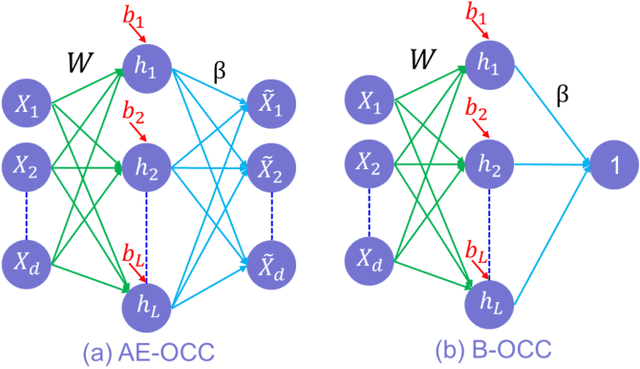

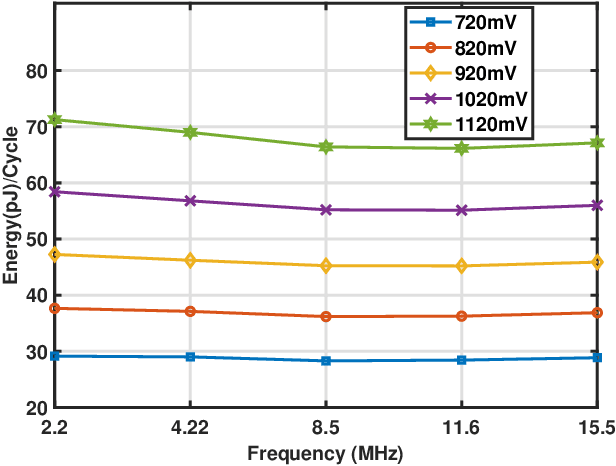

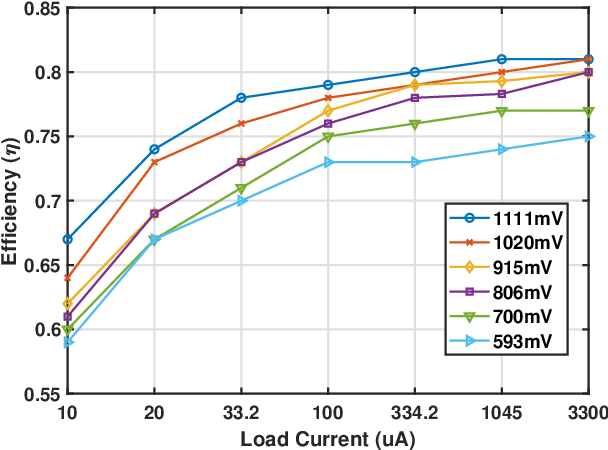

Abstract: In this paper, we present a low-power anomaly detection integrated circuit (ADIC) based on a one-class classifier (OCC) neural network. The ADIC achieves low-power operation through a combination of (a) careful choice of algorithm for online learning and (b) approximate computing techniques to lower average energy. In particular, the online pseudoinverse update method (OPIUM) is used to train a randomized neural network for quick and resource-efficient learning. An additional 42% energy saving can be achieved when a lighter version of the OPIUM method is used for training with the same number of data samples, with no significant compromise in the quality of inference. Instead of a single classifier with a large number of neurons, an ensemble of K base learners is chosen to reduce learning memory by a factor of K. This also enables approximate computing by dynamically varying the neural network size based on anomaly detection. Fabricated in 65nm CMOS, the ADIC has K = 7 Base Learners (BL) with 32 neurons in each BL and dissipates 11.87pJ/OP and 3.35pJ/OP during learning and inference, respectively, at Vdd = 0.75V when all 7 BLs are enabled. Further, evaluated on the NASA bearing dataset, approximately 80% of the chip can be shut down for 99% of the lifetime, leading to an energy efficiency of 0.48pJ/OP, an 18.5 times reduction over full-precision computing running at Vdd = 1.2V throughout the lifetime.

ADEPOS: A Novel Approximate Computing Framework for Anomaly Detection Systems and its Implementation in 65nm CMOS

Dec 04, 2019

Abstract: To overcome the energy and bandwidth limitations of traditional IoT systems, edge computing, or information extraction at the sensor node, has become popular. This makes it important to create very low-energy information extraction or pattern recognition systems. In this paper, we present an approximate computing method to reduce the computation energy of a specific type of IoT system used for anomaly detection (e.g., in predictive maintenance or epileptic seizure detection). Termed Anomaly Detection Based Power Savings (ADEPOS), our proposed method uses low-precision computing and low-complexity neural networks at the beginning, when it is easy to distinguish healthy data. On the detection of anomalies, however, the complexity of the network and the computing precision are adaptively increased for accurate predictions. We show that ensemble approaches are well suited for adaptively changing network size. To validate our proposed scheme, a chip has been fabricated in the UMC 65nm process that includes an MSP430 microprocessor along with an on-chip switching-mode DC-DC converter for dynamic voltage and frequency scaling. Using the NASA bearing dataset for machine health monitoring, we show that ADEPOS can achieve an 8.95X saving of energy over the lifetime without losing any detection accuracy. The energy savings are obtained by reducing the execution time of the neural network on the microprocessor.
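The adaptive behaviour described in this abstract can be illustrated with a small Python sketch: score each sample with a reduced ensemble first, and evaluate the full ensemble only when the cheap score looks anomalous. This is a sketch under stated assumptions, not the ADEPOS implementation. The function name `adaptive_scores`, the averaging rule, the threshold, and the toy base learners are all hypothetical, and the precision scaling mentioned in the abstract is not modelled.

```python
def adaptive_scores(samples, learners, threshold, min_active=1):
    """Score samples with a small ensemble, escalating to the full one on demand.

    Each learner maps a sample to an anomaly score (higher = more anomalous).
    Returns a list of (score, learners_used) tuples, one per sample.
    """
    results = []
    for x in samples:
        # Cheap pass: only the first `min_active` base learners are evaluated.
        active = learners[:min_active]
        score = sum(f(x) for f in active) / len(active)
        used = len(active)
        if score > threshold:
            # Suspected anomaly: re-score with every base learner.
            score = sum(f(x) for f in learners) / len(learners)
            used = len(learners)
        results.append((score, used))
    return results


# Toy base learners (assumption): distance from slightly different "healthy" centres.
learners = [lambda x, c=c: abs(x - c) for c in (0.0, 0.1, -0.1)]

results = adaptive_scores([0.05, 0.1, 2.0], learners, threshold=1.0)
for score, used in results:
    print(f"score={score:.3f} learners_used={used}")
```

In this toy run only the last sample triggers the full ensemble, which mirrors the idea that most of the computation can be skipped while the data look healthy.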

* 14 pages

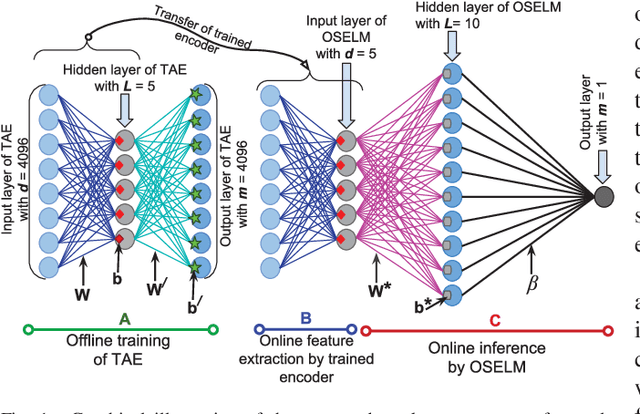

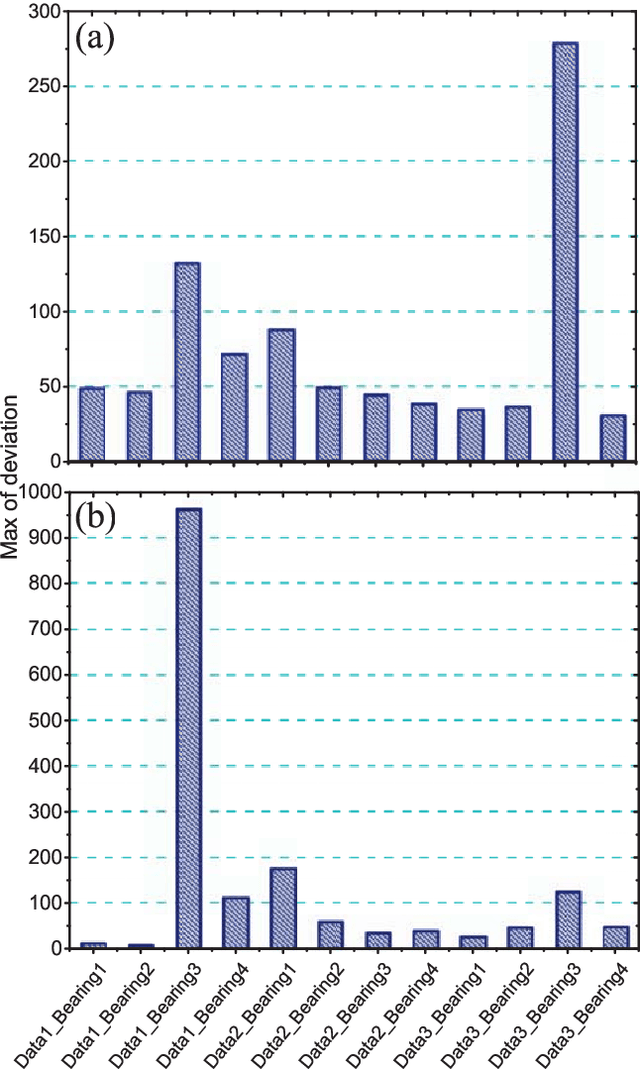

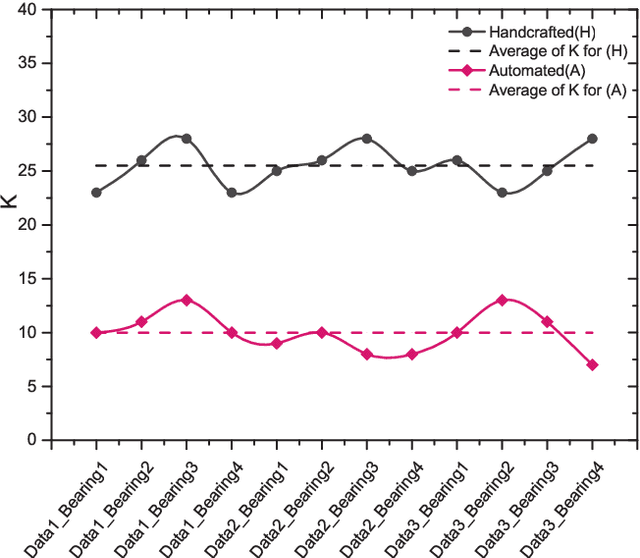

A Stacked Autoencoder Neural Network based Automated Feature Extraction Method for Anomaly detection in On-line Condition Monitoring

Oct 19, 2018

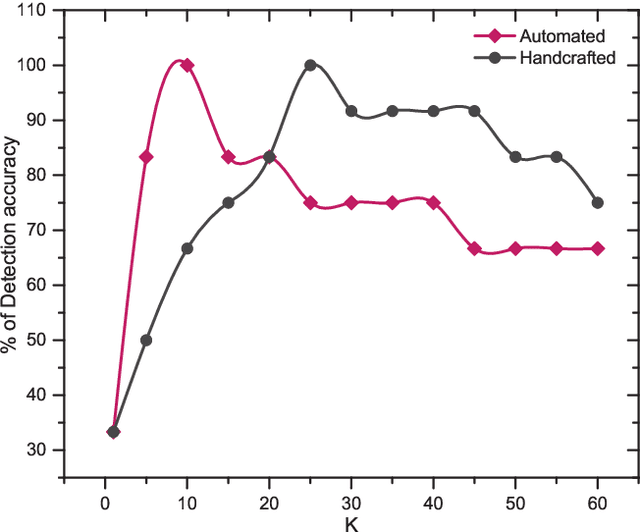

Abstract: Condition monitoring is one of the routine tasks in all major process industries. Mechanical parts such as motors, gears, and bearings are the major components of a process industry, and any fault in them may cause a total shutdown of the whole process, which may result in serious losses. Therefore, it is crucial to predict any approaching defect before it occurs. Several methods exist for this purpose, and much research is being carried out on better and more efficient models. However, most of them are based on the processing of raw sensor signals, which is tedious and expensive. Recently, there has been an increase in feature-based condition monitoring, where only the useful features are extracted from the raw signals and interpreted for the prediction of the fault. Most of these are handcrafted features, obtained manually based on the nature of the raw data. This requires prior knowledge of the nature of the data and the related processes, which limits the feature extraction process. Recent developments in autoencoder-based feature extraction provide an alternative to the traditional handcrafted approaches; however, they have mostly been confined to the areas of image and audio processing. In this work, we have developed an automated feature extraction method for on-line condition monitoring based on a stack of the traditional autoencoder and an on-line sequential extreme learning machine (OSELM) network. The performance of this method is comparable to that of the traditional feature extraction approaches. The method can achieve 100% detection accuracy in determining the bearing health states of the NASA bearing dataset. The simple design of this method is promising for easy hardware implementation of Internet of Things (IoT) based prognostics solutions.
