Twisha Titirsha

On the Mitigation of Read Disturbances in Neuromorphic Inference Hardware

Jan 27, 2022

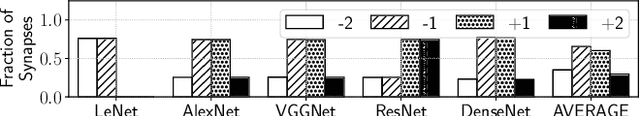

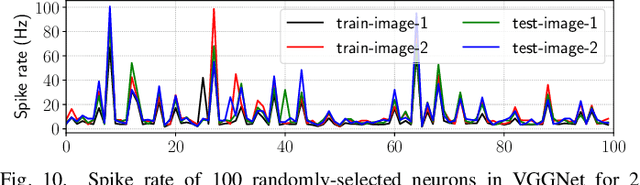

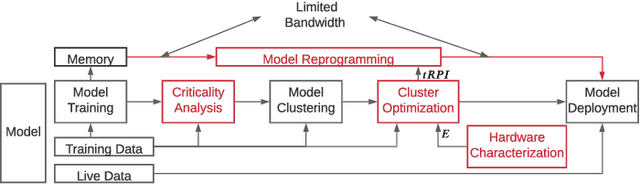

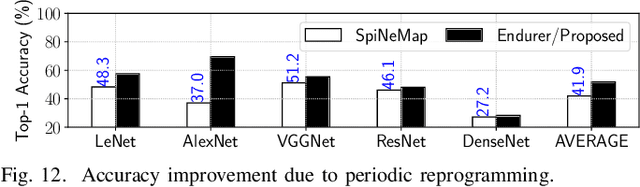

Abstract: Non-Volatile Memory (NVM) cells are used in neuromorphic hardware to store model parameters, which are programmed as resistance states. NVMs suffer from the read disturb issue, where the programmed resistance state drifts upon repeated access of a cell during inference. Resistance drifts can lower the inference accuracy. To address this, it is necessary to periodically reprogram model parameters (a high-overhead operation). We study read disturb failures of an NVM cell. Our analysis shows a strong dependency both on model characteristics such as synaptic activation and criticality, and on the voltage used to read resistance states during inference. We propose a system software framework to incorporate such dependencies in programming model parameters on NVM cells of a neuromorphic hardware. Our framework consists of a convex optimization formulation which aims to implement synaptic weights that have more activations and are critical, i.e., those that have a high impact on accuracy, on NVM cells that are exposed to lower voltages during inference. In this way, we increase the time interval between two consecutive reprogramming operations of model parameters. We evaluate our system software with many emerging inference models on a neuromorphic hardware simulator and show a significant reduction in the system overhead.

Improving Inference Lifetime of Neuromorphic Systems via Intelligent Synapse Mapping

Jun 16, 2021

Abstract: Non-Volatile Memories (NVMs) such as Resistive RAM (RRAM) are used in neuromorphic systems to implement high-density and low-power analog synaptic weights. Unfortunately, an RRAM cell can switch its state after reading its content a certain number of times. Such behavior challenges the integrity and program-once-read-many-times philosophy of implementing machine learning inference on neuromorphic systems, impacting the Quality-of-Service (QoS). Elevated temperatures and frequent usage can significantly shorten the number of times an RRAM cell can be reliably read before it becomes absolutely necessary to reprogram. We propose an architectural solution to extend the read endurance of RRAM-based neuromorphic systems. We make two key contributions. First, we formulate the read endurance of an RRAM cell as a function of the programmed synaptic weight and its activation within a machine learning workload. Second, we propose an intelligent workload mapping strategy incorporating the endurance formulation to place the synapses of a machine learning model onto the RRAM cells of the hardware. The objective is to extend the inference lifetime, defined as the number of times the model can be used to generate output (inference) before the trained weights need to be reprogrammed on the RRAM cells of the system. We evaluate our architectural solution with machine learning workloads on a cycle-accurate simulator of an RRAM-based neuromorphic system. Our results demonstrate a significant increase in inference lifetime with only a minimal performance impact.

NeuroXplorer 1.0: An Extensible Framework for Architectural Exploration with Spiking Neural Networks

May 04, 2021

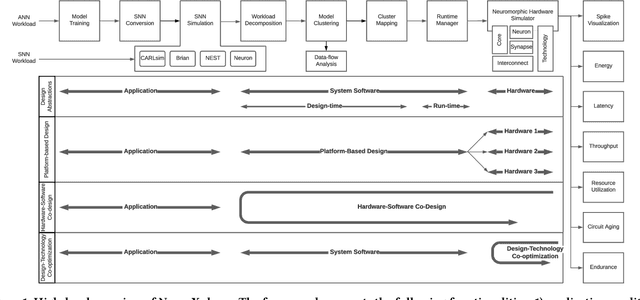

Abstract: Recently, both industry and academia have proposed many different neuromorphic architectures to execute applications that are designed with Spiking Neural Networks (SNNs). Consequently, there is a growing need for an extensible simulation framework that can perform architectural explorations with SNNs, including both platform-based design of today's hardware, and hardware-software co-design and design-technology co-optimization of the future. We present NeuroXplorer, a fast and extensible framework that is based on a generalized template for modeling a neuromorphic architecture that can be infused with the specific details of a given hardware and/or technology. NeuroXplorer can perform both low-level cycle-accurate architectural simulations and high-level analysis with data-flow abstractions. NeuroXplorer's optimization engine can incorporate hardware-oriented metrics such as energy, throughput, and latency, as well as SNN-oriented metrics such as inter-spike interval distortion and spike disorder, which directly impact SNN performance. We demonstrate the architectural exploration capabilities of NeuroXplorer through case studies with many state-of-the-art machine learning models.

On the Role of System Software in Energy Management of Neuromorphic Computing

Mar 22, 2021

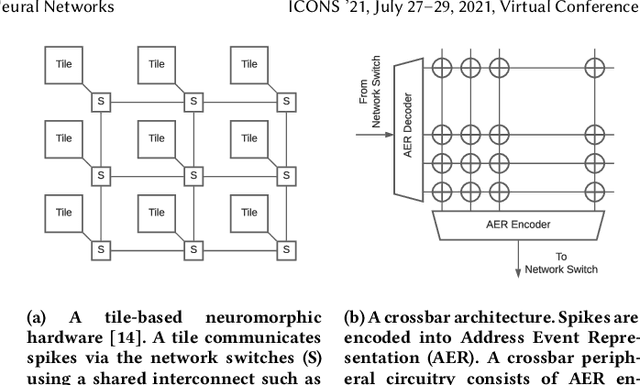

Abstract: Neuromorphic computing systems such as DYNAPs and Loihi have recently been introduced to the computing community to improve performance and energy efficiency of machine learning programs, especially those that are implemented using Spiking Neural Networks (SNNs). The role of system software for neuromorphic systems is to cluster a large machine learning model (e.g., with many neurons and synapses) and map these clusters to the computing resources of the hardware. In this work, we formulate the energy consumption of a neuromorphic hardware, considering the power consumed by neurons and synapses, and the energy consumed in communicating spikes on the interconnect. Based on this formulation, we first evaluate the role of system software in managing the energy consumption of neuromorphic systems. Next, we formulate a simple heuristic-based mapping approach to place the neurons and synapses onto the computing resources to reduce energy consumption. We evaluate our approach with 10 machine learning applications and demonstrate that the proposed mapping approach leads to a significant reduction of energy consumption of neuromorphic computing systems.
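
The energy formulation described in this abstract can be illustrated with a minimal sketch. It is not the paper's model: the three cost terms (per neuron spike, per synaptic event, per interconnect hop), the Manhattan-distance hop count, and every name and default value below are assumptions chosen only to show how a placement changes the communication term.

```python
from dataclasses import dataclass


@dataclass
class EnergyParams:
    # Hypothetical per-event costs in arbitrary units, not values from the paper.
    e_neuron_spike: float = 1.0   # cost of one neuron firing
    e_synapse_event: float = 0.5  # cost of one spike crossing a synapse
    e_hop: float = 2.0            # cost of one spike travelling one interconnect hop


def manhattan_hops(a, b):
    """Assumed hop count between two tiles laid out on a 2D mesh."""
    return abs(a[0] - b[0]) + abs(a[1] - b[1])


def total_energy(spikes, synapses, placement, tile_xy, params=None):
    """Sum neuron, synapse, and interconnect energy for one placement.

    spikes:    neuron -> number of spikes it emits
    synapses:  list of (pre_neuron, post_neuron) pairs
    placement: neuron -> tile id
    tile_xy:   tile id -> (x, y) mesh coordinate
    """
    p = params or EnergyParams()
    neuron_e = p.e_neuron_spike * sum(spikes.values())
    synapse_e = 0.0
    comm_e = 0.0
    for pre, post in synapses:
        n = spikes[pre]  # every spike of the pre-neuron crosses this synapse
        synapse_e += p.e_synapse_event * n
        hops = manhattan_hops(tile_xy[placement[pre]], tile_xy[placement[post]])
        comm_e += p.e_hop * n * hops
    return neuron_e + synapse_e + comm_e


spikes = {"a": 10, "b": 4}
synapses = [("a", "b")]
tile_xy = {0: (0, 0), 1: (1, 1)}
same_tile = total_energy(spikes, synapses, {"a": 0, "b": 0}, tile_xy)
split_tiles = total_energy(spikes, synapses, {"a": 0, "b": 1}, tile_xy)
```

Under these assumed costs, placing both neurons on one tile removes the interconnect term entirely, which is the kind of difference a mapping heuristic would try to exploit.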

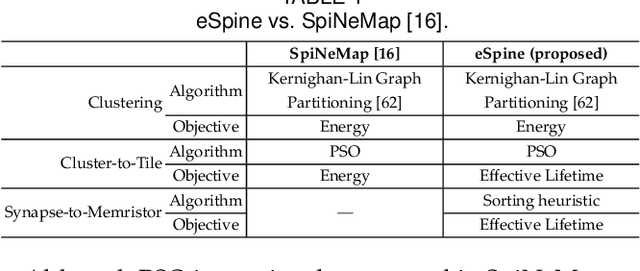

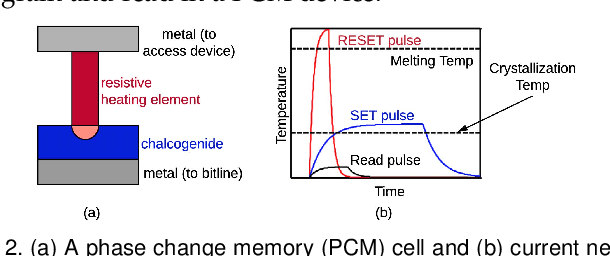

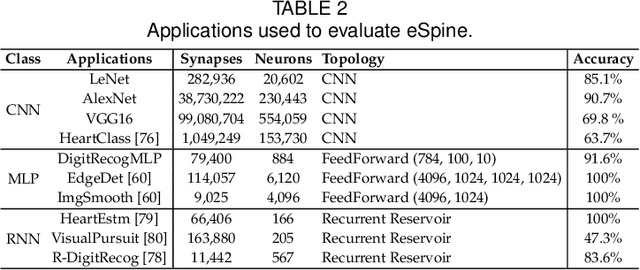

Endurance-Aware Mapping of Spiking Neural Networks to Neuromorphic Hardware

Mar 09, 2021

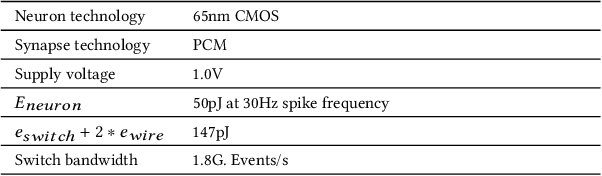

Abstract: Neuromorphic computing systems are embracing memristors to implement high-density and low-power synaptic storage as crossbar arrays in hardware. These systems are energy efficient in executing Spiking Neural Networks (SNNs). We observe that long bitlines and wordlines in a memristive crossbar are a major source of parasitic voltage drops, which create current asymmetry. Through circuit simulations, we show the significant endurance variation that results from this asymmetry. Therefore, if the critical memristors (ones with lower endurance) are overutilized, they may lead to a reduction of the crossbar's lifetime. We propose eSpine, a novel technique to improve lifetime by incorporating the endurance variation within each crossbar in mapping machine learning workloads, ensuring that synapses with higher activation are always implemented on memristors with higher endurance, and vice versa. eSpine works in two steps. First, it uses the Kernighan-Lin Graph Partitioning algorithm to partition a workload into clusters of neurons and synapses, where each cluster can fit in a crossbar. Second, it uses an instance of Particle Swarm Optimization (PSO) to map clusters to tiles, where the placement of synapses of a cluster to memristors of a crossbar is performed by analyzing their activation within the workload. We evaluate eSpine for a state-of-the-art neuromorphic hardware model with phase-change memory (PCM)-based memristors. Using 10 SNN workloads, we demonstrate a significant improvement in the effective lifetime.
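
The intra-crossbar placement rule stated in this abstract (higher-activation synapses go to higher-endurance memristors) can be sketched as a simple rank pairing. This is only an illustration: it does not reproduce eSpine's Kernighan-Lin partitioning or PSO steps, and the function names, the input dictionaries, and the lifetime estimate (endurance divided by activation, limited by the first cell to wear out) are assumptions made for the example.

```python
def map_synapses_by_endurance(activation, endurance):
    """Pair synapses with memristor cells by rank.

    activation: synapse id -> activation count within the workload
    endurance:  cell id    -> estimated endurance of that memristor
    Returns a dict synapse id -> cell id.
    """
    if len(activation) > len(endurance):
        raise ValueError("cluster does not fit in the crossbar")
    # Most active synapse first, most durable cell first, then pair them up.
    synapses = sorted(activation, key=activation.get, reverse=True)
    cells = sorted(endurance, key=endurance.get, reverse=True)
    return dict(zip(synapses, cells))


def crossbar_lifetime(mapping, activation, endurance):
    """Assumed metric: runs of the workload until the first cell wears out."""
    return min(
        endurance[cell] / activation[syn]
        for syn, cell in mapping.items()
        if activation[syn] > 0
    )


activation = {"s0": 100, "s1": 10, "s2": 50}
endurance = {(0, 0): 1e6, (0, 1): 4e6, (1, 0): 2e6, (1, 1): 3e6}

aware = map_synapses_by_endurance(activation, endurance)
# An endurance-oblivious baseline that fills cells in index order.
naive = {"s0": (0, 0), "s1": (0, 1), "s2": (1, 0)}

aware_life = crossbar_lifetime(aware, activation, endurance)
naive_life = crossbar_lifetime(naive, activation, endurance)
```

With these made-up numbers, the rank pairing places the busiest synapse on the most durable cell, so the estimated lifetime is set by a less stressed cell than in the index-order baseline.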

Thermal-Aware Compilation of Spiking Neural Networks to Neuromorphic Hardware

Oct 09, 2020

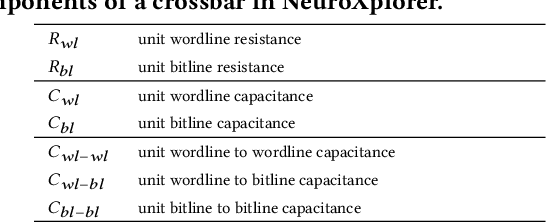

Abstract: Hardware implementation of neuromorphic computing can significantly improve performance and energy efficiency of machine learning tasks implemented with spiking neural networks (SNNs), making these hardware platforms particularly suitable for embedded systems and other energy-constrained environments. We observe that the long bitlines and wordlines in a crossbar of the hardware create significant current variations when propagating spikes through its synaptic elements, which are typically designed with non-volatile memory (NVM). Such current variations create a thermal gradient within each crossbar of the hardware, depending on the machine learning workload and the mapping of neurons and synapses of the workload to these crossbars. This thermal gradient becomes significant at scaled technology nodes, and it increases the leakage power in the hardware, leading to an increase in the energy consumption. We propose a novel technique to map neurons and synapses of SNN-based machine learning workloads to neuromorphic hardware. We make two novel contributions. First, we formulate a detailed thermal model for a crossbar in a neuromorphic hardware incorporating workload dependency, where the temperature of each NVM-based synaptic cell is computed considering the thermal contributions from its neighboring cells. Second, we incorporate this thermal model in the mapping of neurons and synapses of SNN-based workloads using a hill-climbing heuristic. The objective is to reduce the thermal gradient in crossbars. We evaluate our neuron and synapse mapping technique using 10 machine learning workloads for a state-of-the-art neuromorphic hardware. We demonstrate an average 11.4 K reduction in the average temperature of each crossbar in the hardware, leading to a 52% reduction in the leakage power consumption (11% lower total energy consumption) compared to a performance-oriented SNN mapping technique.
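
The two ideas in this abstract, a neighbor-aware cell temperature and a hill-climbing search over placements, can be sketched on a toy crossbar. Everything specific here is an assumption for illustration: the linear thermal model, the coefficients `self_k` and `neigh_k`, the 4-neighbor coupling, and the pairwise-swap move are not taken from the paper.

```python
import itertools


def cell_temperatures(grid, ambient=300.0, self_k=1.0, neigh_k=0.25):
    """Assumed linear model: a cell heats with its own activity plus a
    fraction of the activity of its four direct neighbors."""
    rows, cols = len(grid), len(grid[0])
    temps = [[ambient] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            t = ambient + self_k * grid[r][c]
            for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                rr, cc = r + dr, c + dc
                if 0 <= rr < rows and 0 <= cc < cols:
                    t += neigh_k * grid[rr][cc]
            temps[r][c] = t
    return temps


def thermal_gradient(grid):
    """Difference between the hottest and coolest cell of the crossbar."""
    temps = [t for row in cell_temperatures(grid) for t in row]
    return max(temps) - min(temps)


def hill_climb(grid):
    """Swap pairs of synapse placements, keeping a swap only if it strictly
    lowers the thermal gradient; stop when no swap helps (a local optimum)."""
    grid = [row[:] for row in grid]  # work on a copy
    cells = [(r, c) for r in range(len(grid)) for c in range(len(grid[0]))]
    best = thermal_gradient(grid)
    improved = True
    while improved:
        improved = False
        for (r1, c1), (r2, c2) in itertools.combinations(cells, 2):
            grid[r1][c1], grid[r2][c2] = grid[r2][c2], grid[r1][c1]
            g = thermal_gradient(grid)
            if g < best:
                best, improved = g, True
            else:
                # Undo a swap that did not help.
                grid[r1][c1], grid[r2][c2] = grid[r2][c2], grid[r1][c1]
    return grid, best


# Entries are per-synapse activity; the initial mapping clusters hot synapses.
initial = [[8, 8, 0], [8, 0, 0], [0, 0, 0]]
initial_gradient = thermal_gradient(initial)
final_grid, final_gradient = hill_climb(initial)
```

Hill climbing only guarantees a local optimum, so the final placement depends on the swap order; the sketch shows the search structure rather than the quality of result reported in the paper.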

Reliability-Performance Trade-offs in Neuromorphic Computing

Sep 26, 2020

Abstract: Neuromorphic architectures built with Non-Volatile Memory (NVM) can significantly improve the energy efficiency of machine learning tasks designed with Spiking Neural Networks (SNNs). A major source of voltage drop in a crossbar of these architectures is the parasitic components on the crossbar's bitlines and wordlines, which are deliberately made longer to achieve lower cost-per-bit. We observe that the parasitic voltage drops create a significant asymmetry in programming speed and reliability of NVM cells in a crossbar. Specifically, NVM cells that are on shorter current paths are faster to program but have lower endurance than those on longer current paths, and vice versa. This asymmetry in neuromorphic architectures creates reliability-performance trade-offs, which can be exploited efficiently using SNN mapping techniques. In this work, we demonstrate such trade-offs using a previously proposed SNN mapping technique with 10 workloads from contemporary machine learning tasks for a state-of-the-art neuromorphic hardware.
