Mattias Nilsson

Resource-Efficient Speech Quality Prediction through Quantization Aware Training and Binary Activation Maps

Jul 05, 2024

Abstract:As speech processing systems in mobile and edge devices become more commonplace, the demand for unintrusive speech quality monitoring increases. Deep learning methods provide high-quality estimates of objective and subjective speech quality metrics. However, their significant computational requirements are often prohibitive on resource-constrained devices. To address this issue, we investigated binary activation maps (BAMs) for speech quality prediction on a convolutional architecture based on DNSMOS. We show that the binary activation model with quantization aware training matches the predictive performance of the baseline model. It further allows using other compression techniques. Combined with 8-bit weight quantization, our approach results in a 25-fold memory reduction during inference, while replacing almost all dot products with summations. Our findings show a path toward substantial resource savings by supporting mixed-precision binary multiplication in hard- and software.
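The remark about replacing dot products with summations can be illustrated with a small sketch. It is not the authors' implementation: the function names, the vector length, and the random 8-bit weights are assumptions chosen for illustration. It only shows that when activations are restricted to {0, 1}, a dot product reduces to summing the weights selected by the active positions.

```python
import random


def dense_dot(weights, activations):
    """Ordinary dot product: one multiplication and one addition per element."""
    return sum(w * a for w, a in zip(weights, activations))


def binary_dot(weights, binary_activations):
    """Dot product with activations in {0, 1}: add up the selected weights only."""
    return sum(w for w, a in zip(weights, binary_activations) if a)


random.seed(0)
# Hypothetical signed 8-bit weights and a binary activation map (illustrative values).
weights = [random.randint(-128, 127) for _ in range(16)]
activations = [random.randint(0, 1) for _ in range(16)]

# Both routes give the same result; the second needs no multiplications.
assert dense_dot(weights, activations) == binary_dot(weights, activations)
```

The summation form is what makes binary activations attractive on hardware without fast multipliers, under the assumption that the weights are already stored as small integers.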

DISCOUNT: Distributional Counterfactual Explanation With Optimal Transport

Jan 27, 2024

Abstract:Counterfactual Explanations (CE) is the de facto method for providing insight and interpretability in black-box decision-making models by identifying alternative input instances that lead to different outcomes. This paper extends the concept of CEs to a distributional context, broadening the scope from individual data points to entire input and output distributions, named Distributional Counterfactual Explanation (DCE). In DCE, our focus shifts to analyzing the distributional properties of the factual and counterfactual, drawing parallels to the classical approach of assessing individual instances and their resulting decisions. We leverage Optimal Transport (OT) to frame a chance-constrained optimization problem, aiming to derive a counterfactual distribution that closely aligns with its factual counterpart, substantiated by statistical confidence. Our proposed optimization method, DISCOUNT, strategically balances this confidence across both input and output distributions. This algorithm is accompanied by an analysis of its convergence rate. The efficacy of our proposed method is substantiated through a series of illustrative case studies, highlighting its potential in providing deep insights into decision-making models.
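To give a concrete sense of how optimal transport measures the gap between a factual and a counterfactual distribution, the sketch below computes a one-dimensional 1-Wasserstein distance between two equally sized samples. The abstract does not say which OT distance DISCOUNT uses, so this choice, the function name `wasserstein_1d`, and the sample values are all assumptions made for illustration.

```python
def wasserstein_1d(xs, ys):
    """1-Wasserstein distance between two equally sized 1-D empirical samples.

    In one dimension the optimal transport plan matches sorted values,
    so the distance is the mean absolute difference of the sorted samples.
    """
    if len(xs) != len(ys):
        raise ValueError("this sketch assumes samples of equal size")
    return sum(abs(a - b) for a, b in zip(sorted(xs), sorted(ys))) / len(xs)


# Hypothetical factual sample and a counterfactual sample shifted by 0.5.
factual = [0.2, 0.5, 0.9, 1.4]
counterfactual = [x + 0.5 for x in factual]

# A pure shift moves every point by the same amount, so the distance is about 0.5.
print(wasserstein_1d(factual, counterfactual))
```

A small distance means the counterfactual distribution stays close to the factual one, which is the sense of "closely aligns" used in the abstract.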

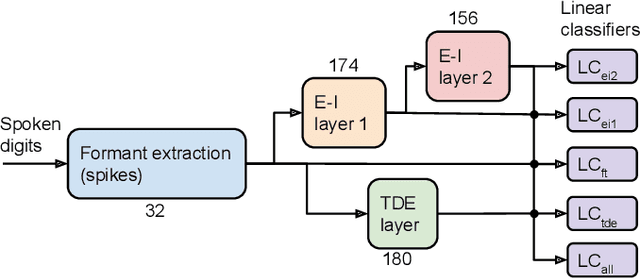

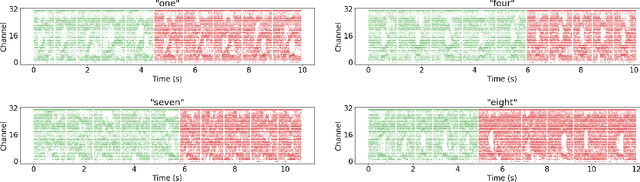

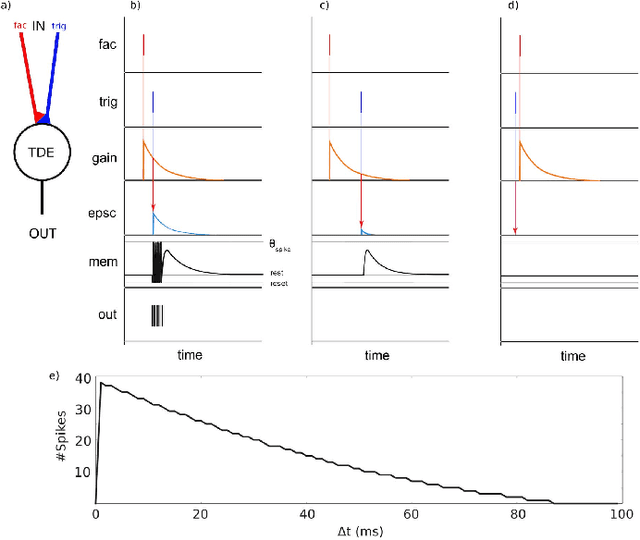

A Comparison of Temporal Encoders for Neuromorphic Keyword Spotting with Few Neurons

Jan 24, 2023

Abstract:With the expansion of AI-powered virtual assistants, there is a need for low-power keyword spotting systems providing a "wake-up" mechanism for subsequent computationally expensive speech recognition. One promising approach is the use of neuromorphic sensors and spiking neural networks (SNNs) implemented in neuromorphic processors for sparse event-driven sensing. However, this requires resource-efficient SNN mechanisms for temporal encoding, which need to consider that these systems process information in a streaming manner, with physical time being an intrinsic property of their operation. In this work, two candidate neurocomputational elements for temporal encoding and feature extraction in SNNs described in recent literature - the spiking time-difference encoder (TDE) and disynaptic excitatory-inhibitory (E-I) elements - are comparatively investigated in a keyword-spotting task on formants computed from spoken digits in the TIDIGITS dataset. While both encoders improve performance over direct classification of the formant features in the training data, enabling a complete binary classification with a logistic regression model, they show no clear improvements on the test set. Resource-efficient keyword spotting applications may benefit from the use of these encoders, but further work on methods for learning the time constants and weights is required to investigate their full potential.

Integration of Neuromorphic AI in Event-Driven Distributed Digitized Systems: Concepts and Research Directions

Oct 20, 2022

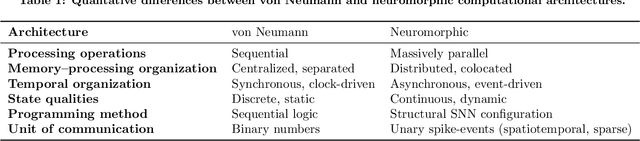

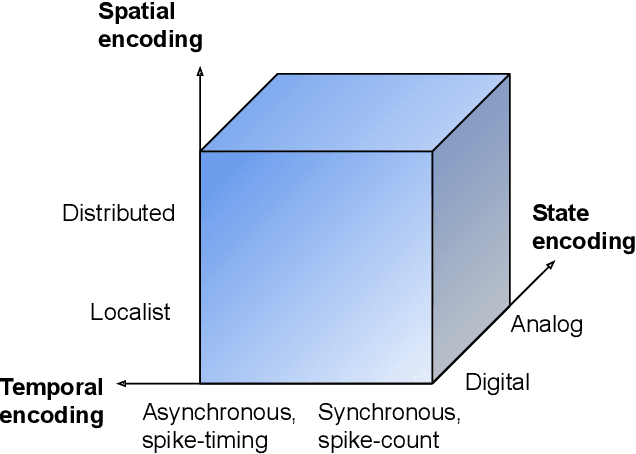

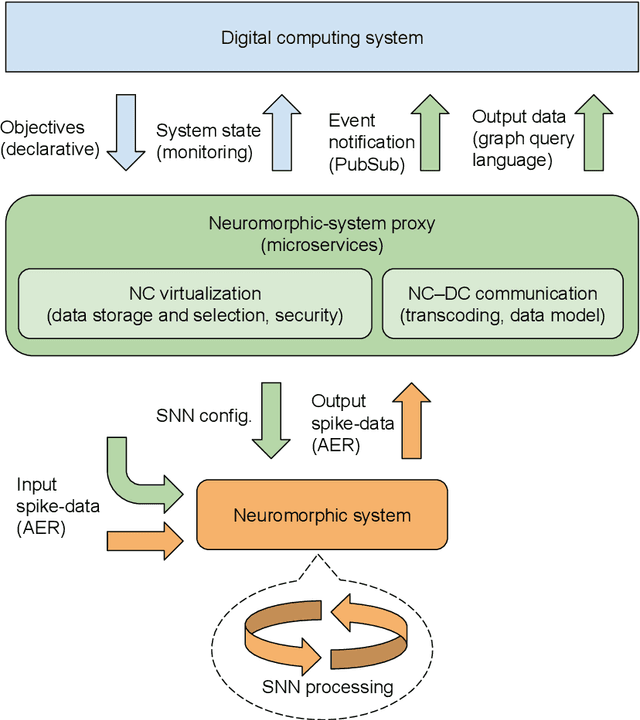

Abstract:Increasing complexity and data-generation rates in cyber-physical systems and the industrial Internet of things are calling for a corresponding increase in AI capabilities at the resource-constrained edges of the Internet. Meanwhile, the resource requirements of digital computing and deep learning are growing exponentially, in an unsustainable manner. One possible way to bridge this gap is the adoption of resource-efficient brain-inspired "neuromorphic" processing and sensing devices, which use event-driven, asynchronous, dynamic neurosynaptic elements with colocated memory for distributed processing and machine learning. However, since neuromorphic systems are fundamentally different from conventional von Neumann computers and clock-driven sensor systems, several challenges are posed to large-scale adoption and integration of neuromorphic devices into the existing distributed digital-computational infrastructure. Here, we describe the current landscape of neuromorphic computing, focusing on characteristics that pose integration challenges. Based on this analysis, we propose a microservice-based framework for neuromorphic systems integration, consisting of a neuromorphic-system proxy, which provides virtualization and communication capabilities required in distributed systems of systems, in combination with a declarative programming approach offering engineering-process abstraction. We also present concepts that could serve as a basis for the realization of this framework, and identify directions for further research required to enable large-scale system integration of neuromorphic devices.

On the Relevance of Bandwidth Extension for Speaker Verification

Apr 05, 2022

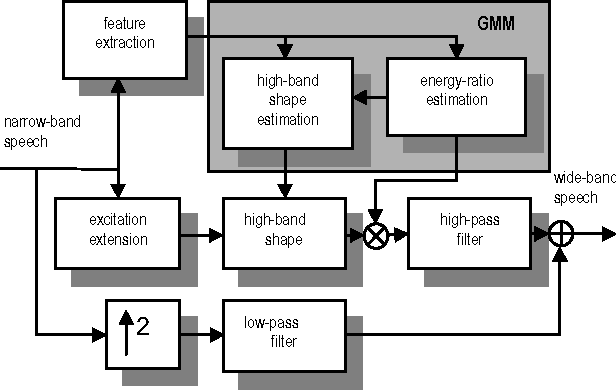

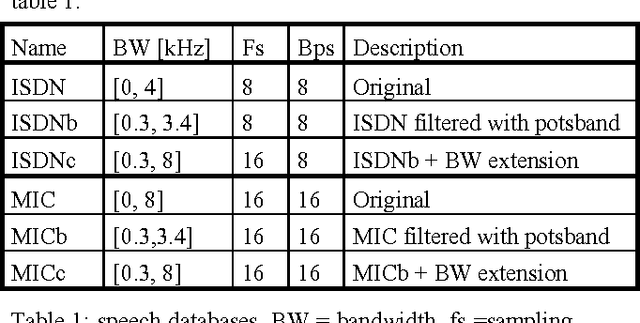

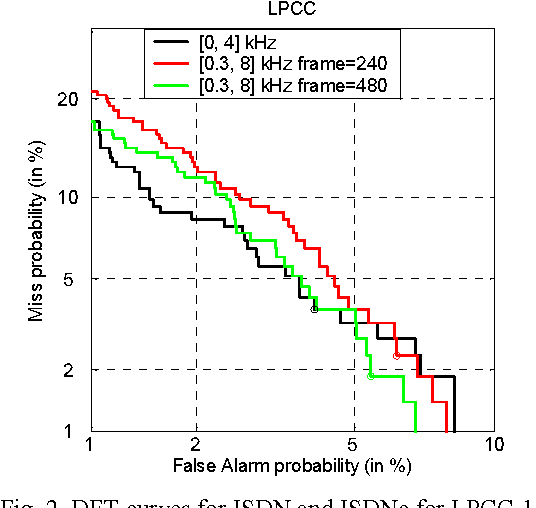

Abstract:In this paper, we consider the effect of a bandwidth extension of narrow-band speech signals (0.3-3.4 kHz) to 0.3-8 kHz on speaker verification. Using covariance matrix based verification systems together with detection error trade-off curves, we compare the performance between systems operating on narrow-band, wide-band (0-8 kHz), and bandwidth-extended speech. The experiments were conducted using different short-time spectral parameterizations derived from microphone and ISDN speech databases. The studied bandwidth-extension algorithm did not introduce artifacts that affected the speaker verification task, and we achieved improvements between 1 and 10 percent (depending on the model order) over the verification system designed for narrow-band speech when mel-frequency cepstral coefficients for the short-time spectral parameterization were used.

* 4 pages, published in the 7th International Conference on Spoken Language Processing, September 16-20, 2002, Denver, Colorado, USA. arXiv admin note: text overlap with arXiv:2202.13865

On the relevance of bandwidth extension for speaker identification

Feb 24, 2022

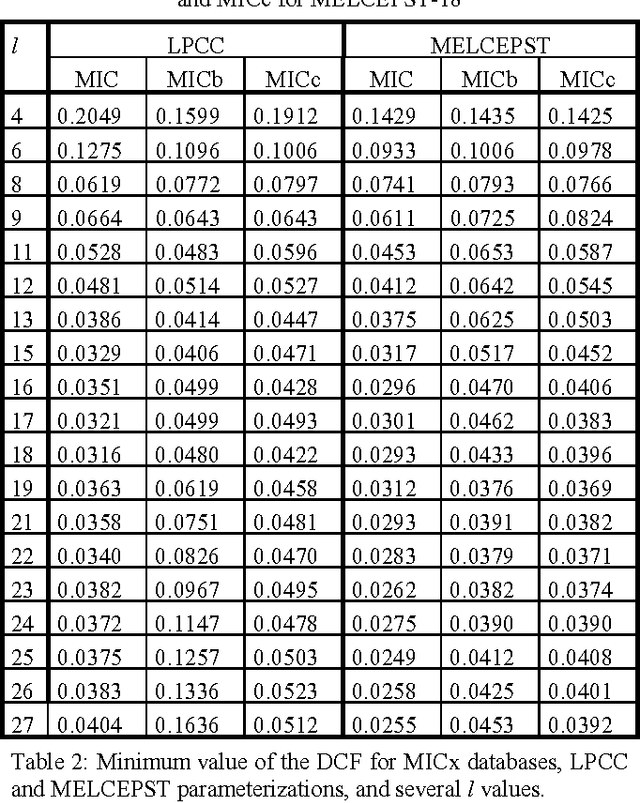

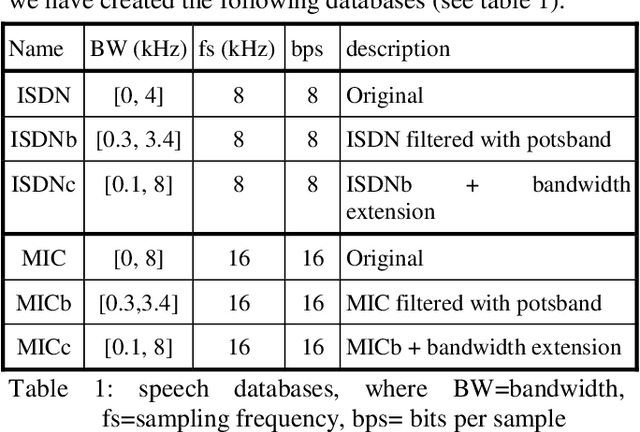

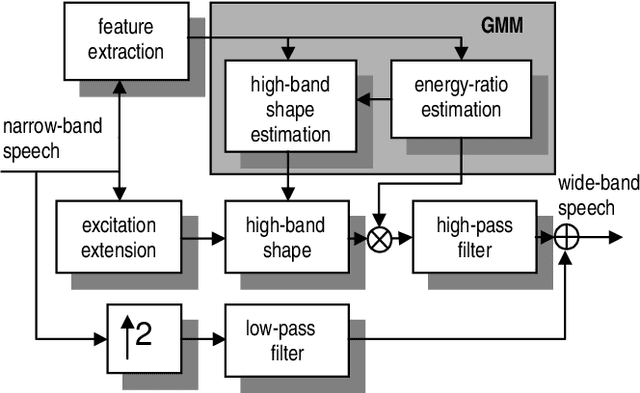

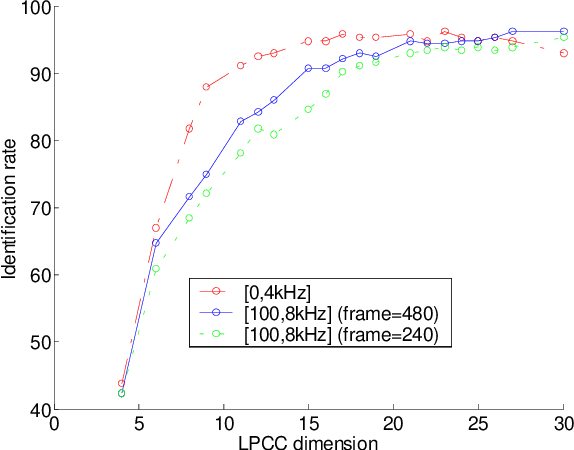

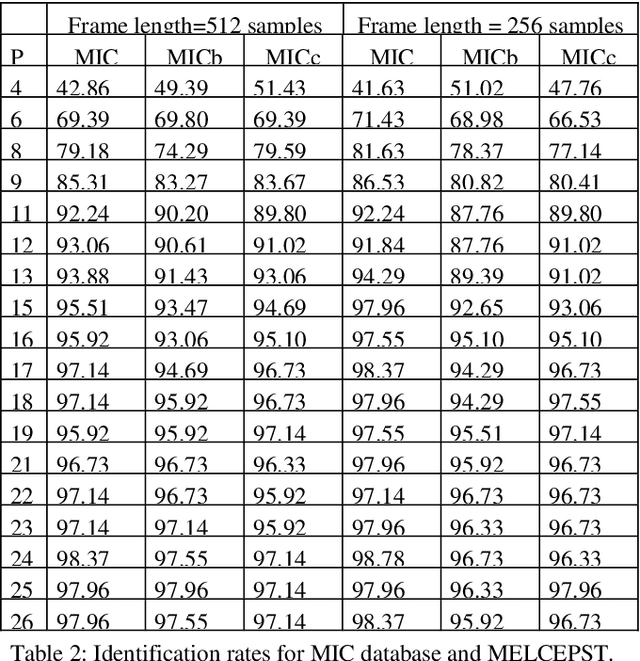

Abstract:In this paper we discuss the relevance of bandwidth extension for speaker identification tasks. Mainly we want to study whether it is possible to recognize voices that have been bandwidth extended. For this purpose, we created two different databases (microphonic and ISDN) of speech signals that were bandwidth extended from telephone bandwidth ([300, 3400] Hz) to full bandwidth ([100, 8000] Hz). We have evaluated different parameterizations, and we have found that the MELCEPST parameterization can take advantage of the bandwidth extension algorithms in several situations.

* 4 pages

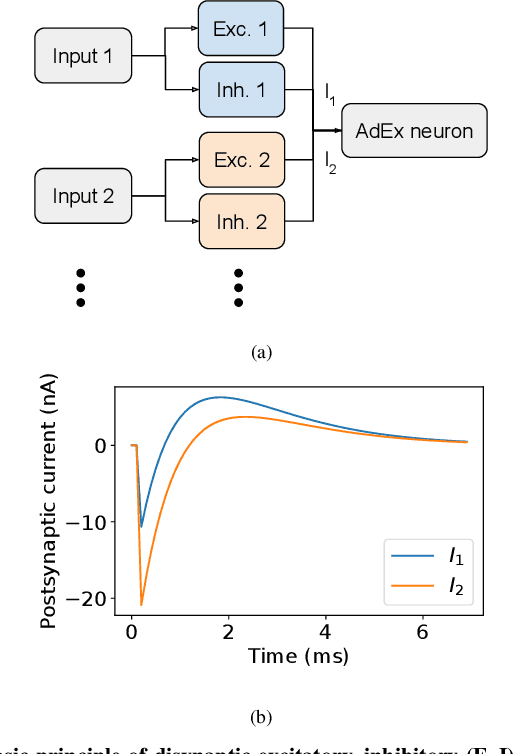

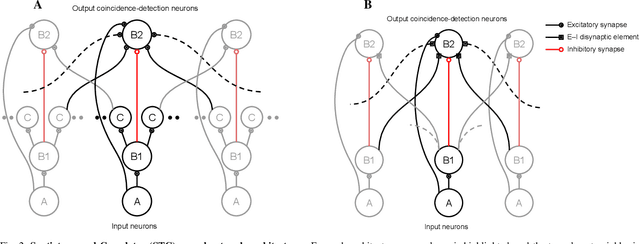

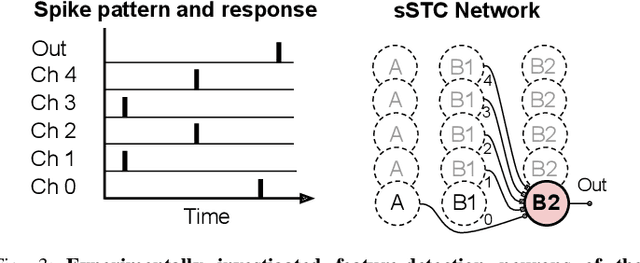

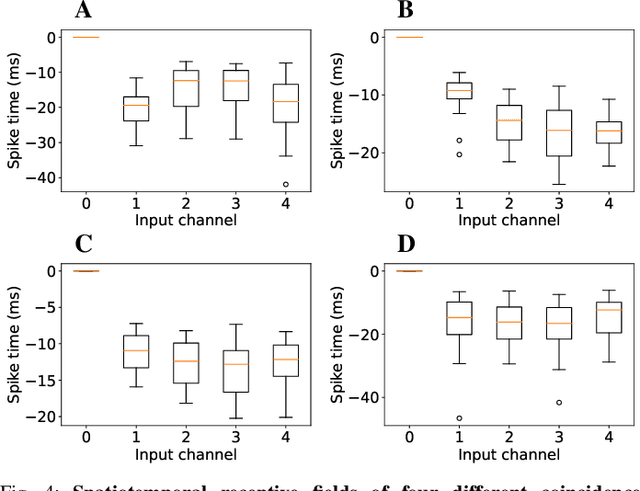

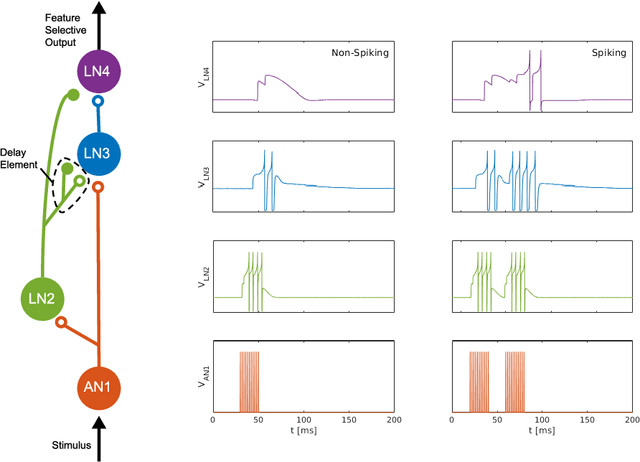

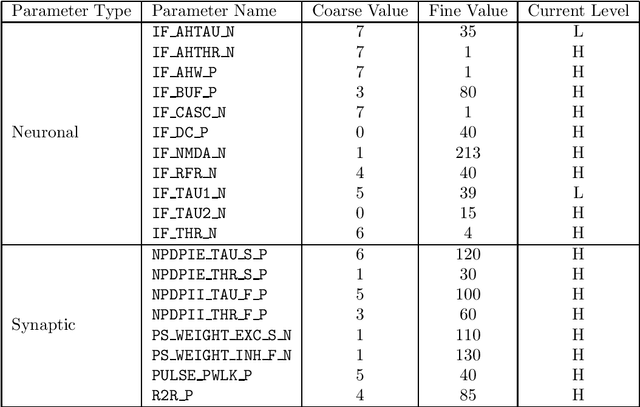

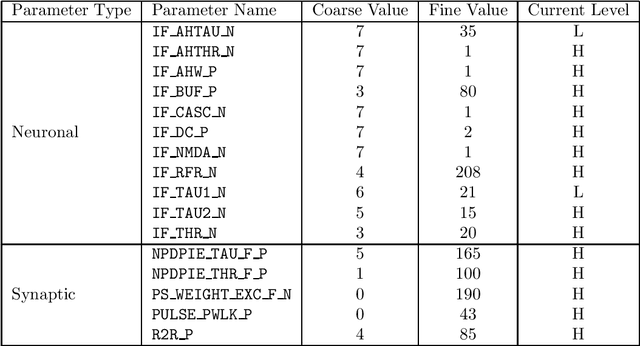

Spatiotemporal Spike-Pattern Selectivity in Single Mixed-Signal Neurons with Balanced Synapses

Jun 10, 2021

Abstract:Realizing the potential of mixed-signal neuromorphic processors for ultra-low-power inference and learning requires efficient use of their inhomogeneous analog circuitry as well as sparse, time-based information encoding and processing. Here, we investigate spike-timing-based spatiotemporal receptive fields of output-neurons in the Spatiotemporal Correlator (STC) network, for which we used excitatory-inhibitory balanced disynaptic inputs instead of dedicated axonal or neuronal delays. We present hardware-in-the-loop experiments with a mixed-signal DYNAP-SE neuromorphic processor, in which five-dimensional receptive fields of hardware neurons were mapped by randomly sampling input spike-patterns from a uniform distribution. We find that, when the balanced disynaptic elements are randomly programmed, some of the neurons display distinct receptive fields. Furthermore, we demonstrate how a neuron was tuned to detect a particular spatiotemporal feature, to which it initially was non-selective, by activating a different subset of the inhomogeneous analog synaptic circuits. The energy dissipation of the balanced synaptic elements is one order of magnitude lower per lateral connection (0.65 nJ vs 9.3 nJ per spike) than former delay-based neuromorphic hardware implementations. Thus, we show how the inhomogeneous synaptic circuits could be utilized for resource-efficient implementation of STC network layers, in a way that enables synapse-address reprogramming as a discrete mechanism for feature tuning.

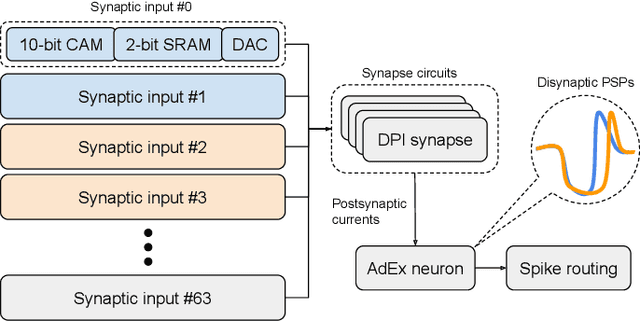

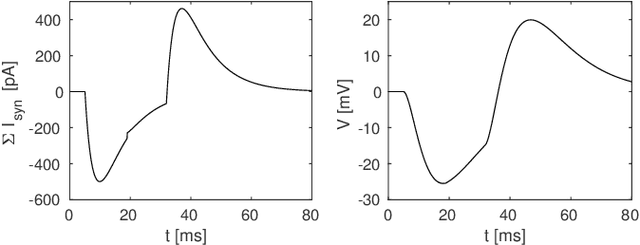

Synaptic Integration of Spatiotemporal Features with a Dynamic Neuromorphic Processor

Feb 12, 2020

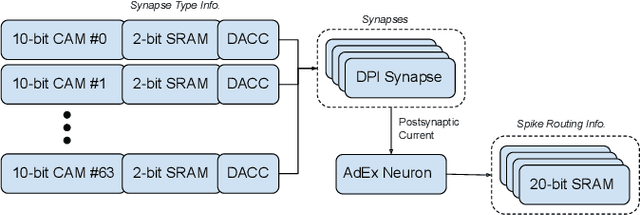

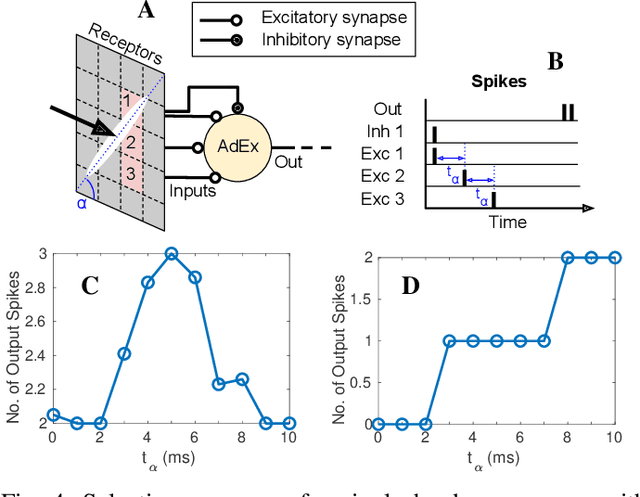

Abstract:Spiking neurons can perform spatiotemporal feature detection by nonlinear synaptic and dendritic integration of presynaptic spike patterns. Multicompartment models of nonlinear dendrites and related neuromorphic circuit designs enable faithful imitation of such dynamic integration processes, but these approaches are also associated with a relatively high computing cost or circuit size. Here, we investigate synaptic integration of spatiotemporal spike patterns with multiple dynamic synapses on point-neurons in the DYNAP-SE neuromorphic processor, which can offer a complementary resource-efficient, albeit less flexible, approach to feature detection. We investigate how previously proposed excitatory-inhibitory pairs of dynamic synapses can be combined to integrate multiple inputs, and we generalize that concept to a case in which one inhibitory synapse is combined with multiple excitatory synapses. We characterize the resulting delayed excitatory postsynaptic potentials (EPSPs) by measuring and analyzing the membrane potentials of the neuromorphic neuronal circuits. We find that biologically relevant EPSP delays, with variability of order 10 milliseconds per neuron, can be realized in the proposed manner by selecting different synapse combinations, thanks to device mismatch. Based on these results, we demonstrate that a single point-neuron with dynamic synapses in the DYNAP-SE can respond selectively to presynaptic spikes with a particular spatiotemporal structure, which enables, for instance, visual feature tuning of single neurons.

Synaptic Delays for Temporal Feature Detection in Dynamic Neuromorphic Processors

Jun 28, 2019

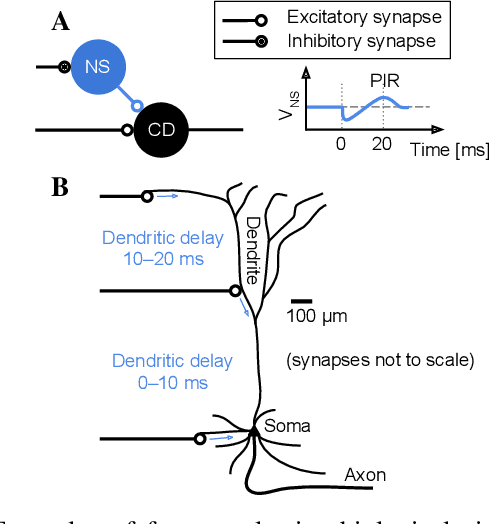

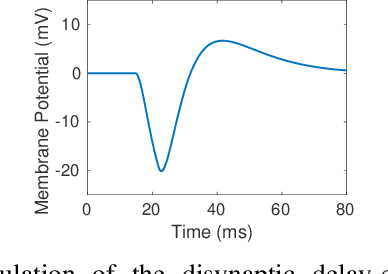

Abstract:Spiking neural networks implemented in dynamic neuromorphic processors are well suited for spatiotemporal feature detection and learning, for example in ultra low-power embedded intelligence and deep edge applications. Such pattern recognition networks naturally involve a combination of dynamic delay mechanisms and coincidence detection. Inspired by an auditory feature detection circuit in crickets, featuring a delayed excitation by postinhibitory rebound, we investigate disynaptic delay elements formed by inhibitory-excitatory pairs of dynamic synapses. We configure such disynaptic delay elements in the DYNAP-SE neuromorphic processor and characterize the distribution of delayed excitations resulting from device mismatch. Furthermore, we present a network that mimics the auditory feature detection circuit of crickets and demonstrate how varying synapse weights, input noise and processor temperature affect the circuit. Interestingly, we find that the disynaptic delay elements can be configured such that the timing and magnitude of the delayed postsynaptic excitation depend mainly on the efficacy of the inhibitory and excitatory synapses, respectively. Delay elements of this kind can be implemented in other reconfigurable dynamic neuromorphic processors and open up for synapse-level temporal feature tuning with large fan-in and flexible delays of order 10-100 ms.
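The idea of a disynaptic delay element can be sketched with a toy model. This is not the DYNAP-SE circuit or the authors' model: it assumes that one presynaptic spike at time zero drives an excitatory and an inhibitory synapse whose currents decay exponentially, with the inhibition stronger but shorter-lived. The net current is then negative at first and turns excitatory only after a delay. All names, weights, and time constants below are hypothetical.

```python
import math


def net_current(t, w_exc, w_inh, tau_exc, tau_inh):
    """Net synaptic current at time t (seconds) after a spike at t = 0."""
    return w_exc * math.exp(-t / tau_exc) - w_inh * math.exp(-t / tau_inh)


def onset_delay(w_exc, w_inh, tau_exc, tau_inh):
    """Time at which the net current changes from inhibitory to excitatory.

    Solves w_exc * exp(-t / tau_exc) = w_inh * exp(-t / tau_inh) for t.
    Requires w_inh > w_exc and tau_exc > tau_inh in this toy model.
    """
    if not (w_inh > w_exc > 0 and tau_exc > tau_inh > 0):
        raise ValueError("toy model needs stronger, faster-decaying inhibition")
    return math.log(w_inh / w_exc) * tau_exc * tau_inh / (tau_exc - tau_inh)


# Hypothetical parameters: 20 ms excitatory and 10 ms inhibitory time constants.
delay = onset_delay(w_exc=1.0, w_inh=2.0, tau_exc=0.020, tau_inh=0.010)
print(f"excitation onset after {delay * 1e3:.2f} ms")
```

In this simplified picture the onset time grows with the inhibitory weight, which loosely mirrors the abstract's observation that the timing of the delayed excitation depends mainly on the inhibitory synapse.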
