Anders Lindgren

Bio-inspired Agentic Self-healing Framework for Resilient Distributed Computing Continuum Systems

Jan 01, 2026Abstract:Human biological systems sustain life through extraordinary resilience, continually detecting damage, orchestrating targeted responses, and restoring function through self-healing. Inspired by these capabilities, this paper introduces ReCiSt, a bio-inspired agentic self-healing framework designed to achieve resilience in Distributed Computing Continuum Systems (DCCS). Modern DCCS integrate heterogeneous computing resources, ranging from resource-constrained IoT devices to high-performance cloud infrastructures, and their inherent complexity, mobility, and dynamic operating conditions expose them to frequent faults that disrupt service continuity. These challenges underscore the need for scalable, adaptive, and self-regulated resilience strategies. ReCiSt reconstructs the biological phases of Hemostasis, Inflammation, Proliferation, and Remodeling into the computational layers Containment, Diagnosis, Meta-Cognitive, and Knowledge for DCCS. These four layers perform autonomous fault isolation, causal diagnosis, adaptive recovery, and long-term knowledge consolidation through Language Model (LM)-powered agents. These agents interpret heterogeneous logs, infer root causes, refine reasoning pathways, and reconfigure resources with minimal human intervention. The proposed ReCiSt framework is evaluated on public fault datasets using multiple LMs, and no baseline comparison is included due to the scarcity of similar approaches. Nevertheless, our results, evaluated under different LMs, confirm ReCiSt's self-healing capabilities within tens of seconds with minimum of 10% of agent CPU usage. Our results also demonstrated depth of analysis to over come uncertainties and amount of micro-agents invoked to achieve resilience.

Integration of Neuromorphic AI in Event-Driven Distributed Digitized Systems: Concepts and Research Directions

Oct 20, 2022

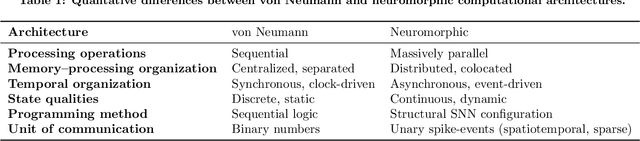

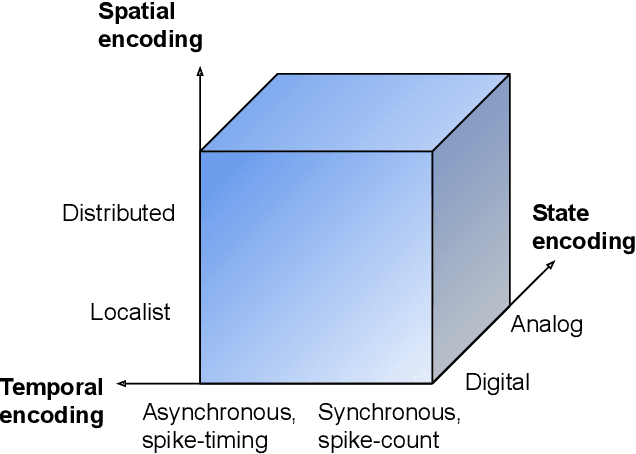

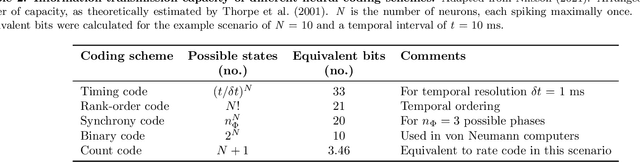

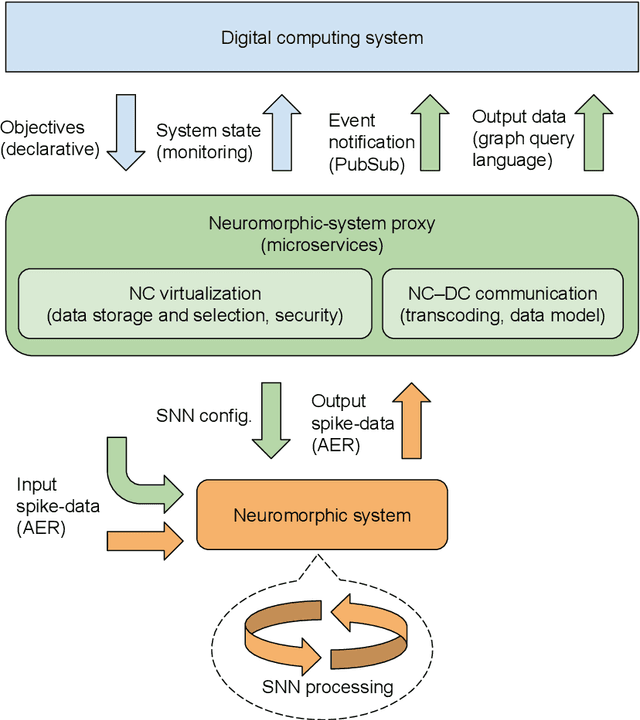

Abstract:Increasing complexity and data-generation rates in cyber-physical systems and the industrial Internet of things are calling for a corresponding increase in AI capabilities at the resource-constrained edges of the Internet. Meanwhile, the resource requirements of digital computing and deep learning are growing exponentially, in an unsustainable manner. One possible way to bridge this gap is the adoption of resource-efficient brain-inspired "neuromorphic" processing and sensing devices, which use event-driven, asynchronous, dynamic neurosynaptic elements with colocated memory for distributed processing and machine learning. However, since neuromorphic systems are fundamentally different from conventional von Neumann computers and clock-driven sensor systems, several challenges are posed to large-scale adoption and integration of neuromorphic devices into the existing distributed digital-computational infrastructure. Here, we describe the current landscape of neuromorphic computing, focusing on characteristics that pose integration challenges. Based on this analysis, we propose a microservice-based framework for neuromorphic systems integration, consisting of a neuromorphic-system proxy, which provides virtualization and communication capabilities required in distributed systems of systems, in combination with a declarative programming approach offering engineering-process abstraction. We also present concepts that could serve as a basis for the realization of this framework, and identify directions for further research required to enable large-scale system integration of neuromorphic devices.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge