Susanna Pirttikangas

Bio-inspired Agentic Self-healing Framework for Resilient Distributed Computing Continuum Systems

Jan 01, 2026

Abstract: Human biological systems sustain life through extraordinary resilience, continually detecting damage, orchestrating targeted responses, and restoring function through self-healing. Inspired by these capabilities, this paper introduces ReCiSt, a bio-inspired agentic self-healing framework designed to achieve resilience in Distributed Computing Continuum Systems (DCCS). Modern DCCS integrate heterogeneous computing resources, ranging from resource-constrained IoT devices to high-performance cloud infrastructures, and their inherent complexity, mobility, and dynamic operating conditions expose them to frequent faults that disrupt service continuity. These challenges underscore the need for scalable, adaptive, and self-regulated resilience strategies. ReCiSt reconstructs the biological phases of Hemostasis, Inflammation, Proliferation, and Remodeling into the computational layers Containment, Diagnosis, Meta-Cognitive, and Knowledge for DCCS. These four layers perform autonomous fault isolation, causal diagnosis, adaptive recovery, and long-term knowledge consolidation through Language Model (LM)-powered agents. These agents interpret heterogeneous logs, infer root causes, refine reasoning pathways, and reconfigure resources with minimal human intervention. The proposed ReCiSt framework is evaluated on public fault datasets using multiple LMs; no baseline comparison is included due to the scarcity of similar approaches. Nevertheless, our results, evaluated under different LMs, confirm ReCiSt's self-healing capabilities within tens of seconds with a minimum of 10% of agent CPU usage. Our results also demonstrate the depth of analysis needed to overcome uncertainties and the number of micro-agents invoked to achieve resilience.

UserCentrix: An Agentic Memory-augmented AI Framework for Smart Spaces

May 01, 2025

Abstract: Agentic AI, with its autonomous and proactive decision-making, has transformed smart environments. By integrating Generative AI (GenAI) and multi-agent systems, modern AI frameworks can dynamically adapt to user preferences, optimize data management, and improve resource allocation. This paper introduces UserCentrix, an agentic memory-augmented AI framework designed to enhance smart spaces through dynamic, context-aware decision-making. This framework integrates personalized Large Language Model (LLM) agents that leverage user preferences and LLM memory management to deliver proactive and adaptive assistance. Furthermore, it incorporates a hybrid hierarchical control system, balancing centralized and distributed processing to optimize real-time responsiveness while maintaining global situational awareness. UserCentrix achieves resource-efficient AI interactions by embedding memory-augmented reasoning, cooperative agent negotiation, and adaptive orchestration strategies. Our key contributions include (i) a self-organizing framework with proactive scaling based on task urgency, (ii) a Value of Information (VoI)-driven decision-making process, (iii) a meta-reasoning personal LLM agent, and (iv) an intelligent multi-agent coordination system for seamless environment adaptation. Experimental results across various models confirm the effectiveness of our approach in enhancing response accuracy, system efficiency, and computational resource management in real-world applications.

Large Language Model Based Multi-Agent System Augmented Complex Event Processing Pipeline for Internet of Multimedia Things

Jan 03, 2025

Abstract: This paper presents the development and evaluation of a multi-agent system framework based on Large Language Models (LLMs), also known as foundation models, for complex event processing (CEP), with a focus on video query processing use cases. The primary goal is to create a proof-of-concept (POC) that integrates state-of-the-art LLM orchestration frameworks with publish/subscribe (pub/sub) tools to address the integration of LLMs with current CEP systems. Utilizing the Autogen framework in conjunction with Kafka message brokers, the system demonstrates an autonomous CEP pipeline capable of handling complex workflows. Extensive experiments evaluate the system's performance across varying configurations, complexities, and video resolutions, revealing the trade-offs between functionality and latency. The results show that while higher agent counts and video complexities increase latency, the system maintains high consistency in narrative coherence. This research builds upon and contributes to existing novel approaches to distributed AI systems, offering detailed insights into integrating such systems into existing infrastructures.

Pub/Sub Message Brokers for GenAI

Dec 22, 2023

Abstract: In today's digital world, Generative Artificial Intelligence (GenAI) such as Large Language Models (LLMs) is becoming increasingly prevalent, extending its reach across diverse applications. This surge in adoption has sparked a significant increase in demand for data-centric GenAI models, highlighting the necessity for robust data communication infrastructures. Central to this need are message brokers, which serve as essential channels for data transfer within various system components. This survey aims to delve into a comprehensive analysis of traditional and modern message brokers, offering a comparative study of prevalent platforms. Our study considers numerous criteria including, but not limited to, open-source availability, integrated monitoring tools, message prioritization mechanisms, capabilities for parallel processing, reliability, distribution and clustering functionalities, authentication processes, data persistence strategies, fault tolerance, and scalability. Furthermore, we explore the intrinsic constraints that the design and operation of each message broker might impose, recognizing that these limitations are crucial in understanding their real-world applicability. We then leverage these insights to propose a sophisticated message broker framework -- one designed with the adaptability and robustness necessary to meet the evolving requisites of GenAI applications. Finally, this study examines the enhancement of message broker mechanisms specifically for GenAI contexts, emphasizing the criticality of developing a versatile message broker framework. Such a framework would be poised for quick adaptation, catering to the dynamic and growing demands of GenAI in the foreseeable future. Through this dual-pronged approach, we intend to contribute a foundational compendium that can guide future innovations and infrastructural advancements in the realm of GenAI data communication.
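
To make two of the criteria in this abstract concrete (topic-based publish/subscribe and message prioritization), here is a minimal in-memory sketch. It is not taken from the survey and does not model any real broker; the class name `TinyBroker`, its methods, and the topic and payload strings are all hypothetical.

```python
import heapq
from collections import defaultdict
from itertools import count


class TinyBroker:
    """Hypothetical in-memory broker: topic routing plus priority ordering."""

    def __init__(self):
        self._subscribers = defaultdict(list)  # topic -> list of callbacks
        self._queue = []                       # heap of pending messages
        self._seq = count()                    # tie-breaker: FIFO within a priority

    def subscribe(self, topic, callback):
        self._subscribers[topic].append(callback)

    def publish(self, topic, payload, priority=0):
        # Lower number means higher priority (an assumption of this sketch).
        heapq.heappush(self._queue, (priority, next(self._seq), topic, payload))

    def dispatch(self):
        """Deliver all pending messages in priority order; return delivery count."""
        delivered = 0
        while self._queue:
            _, _, topic, payload = heapq.heappop(self._queue)
            for callback in self._subscribers.get(topic, ()):
                callback(payload)
                delivered += 1
        return delivered


received = []
broker = TinyBroker()
broker.subscribe("prompts", received.append)
broker.publish("prompts", "batch job", priority=5)
broker.publish("prompts", "interactive query", priority=1)
broker.dispatch()
print(received)  # ['interactive query', 'batch job']
```

Production brokers add the remaining criteria the survey lists, such as persistence, clustering, authentication, and fault tolerance, none of which this sketch attempts.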

TSViT: A Time Series Vision Transformer for Fault Diagnosis

Nov 12, 2023

Abstract: Traditional fault diagnosis methods using Convolutional Neural Networks (CNNs) face limitations in capturing temporal features (i.e., the variation of vibration signals over time). To address this issue, this paper introduces a novel model, the Time Series Vision Transformer (TSViT), specifically designed for fault diagnosis. On the one hand, the TSViT model integrates a convolutional layer to segment vibration signals and capture local features. On the other hand, it employs a transformer encoder to learn long-term temporal information. Experimental comparisons with other methods on two distinct datasets validate the effectiveness and generalizability of TSViT, alongside a comparative analysis of how its hyperparameters affect model performance, computational complexity, and overall parameter count. TSViT reaches average accuracies of 100% and 99.99% on the two test sets, respectively.
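
The segmentation step described above can be illustrated with a strided 1-D convolution, which cuts a signal into windows and projects each window to a token that a transformer encoder could then consume. The sketch below uses only the Python standard library; the patch length, embedding dimension, random weights, and the function name `conv1d_patch_embed` are assumptions for illustration, not the authors' configuration, and the transformer encoder itself is omitted.

```python
import random


def conv1d_patch_embed(signal, kernel, stride):
    """Strided 1-D convolution without padding.

    `kernel` is a list of filters, each a list of weights of equal length.
    Each returned row is one token: the responses of all filters on one window.
    """
    k = len(kernel[0])
    tokens = []
    for start in range(0, len(signal) - k + 1, stride):
        window = signal[start:start + k]
        tokens.append([sum(w * x for w, x in zip(filt, window)) for filt in kernel])
    return tokens


random.seed(0)
signal = [random.gauss(0.0, 1.0) for _ in range(1024)]  # stand-in vibration signal
patch_len, embed_dim = 64, 8                            # hypothetical sizes
kernel = [[random.uniform(-0.1, 0.1) for _ in range(patch_len)]
          for _ in range(embed_dim)]

# With stride == kernel size the windows do not overlap, so each is one "patch".
tokens = conv1d_patch_embed(signal, kernel, stride=patch_len)
print(len(tokens), len(tokens[0]))  # 16 8
```

Setting the stride equal to the kernel size gives non-overlapping segments; a smaller stride would produce overlapping ones.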

Autonomy and Intelligence in the Computing Continuum: Challenges, Enablers, and Future Directions for Orchestration

May 03, 2022

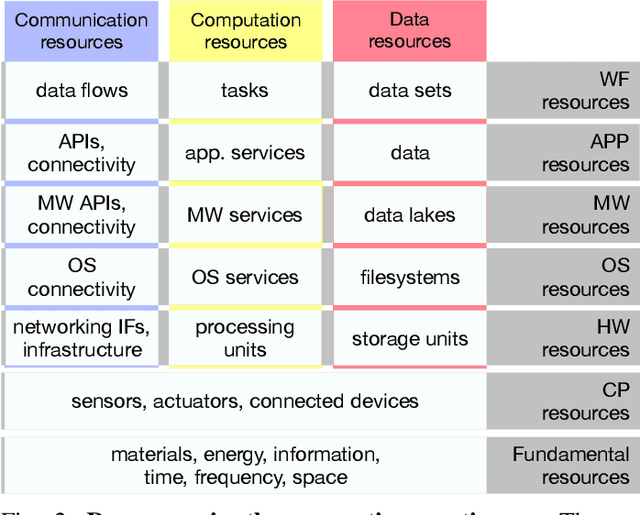

Abstract: Future AI applications require performance, reliability and privacy that the existing, cloud-dependent system architectures cannot provide. In this article, we study orchestration in the device-edge-cloud continuum, and focus on AI for edge, that is, the AI methods used in resource orchestration. We claim that to support the constantly growing requirements of intelligent applications in the device-edge-cloud computing continuum, resource orchestration needs to embrace edge AI and emphasize local autonomy and intelligence. To justify the claim, we provide a general definition for continuum orchestration, and look at how current and emerging orchestration paradigms are suitable for the computing continuum. We describe certain major emerging research themes that may affect future orchestration, and provide an early vision of an orchestration paradigm that embraces those research themes. Finally, we survey current key edge AI methods and look at how they may contribute to fulfilling the vision of future continuum orchestration.

StressNAS: Affect State and Stress Detection Using Neural Architecture Search

Aug 26, 2021

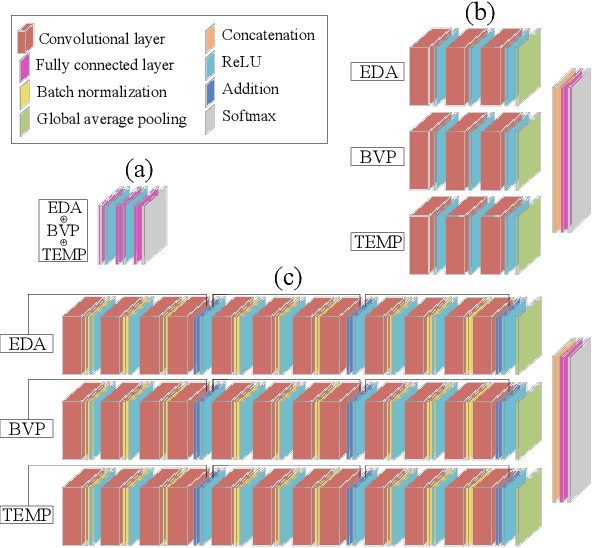

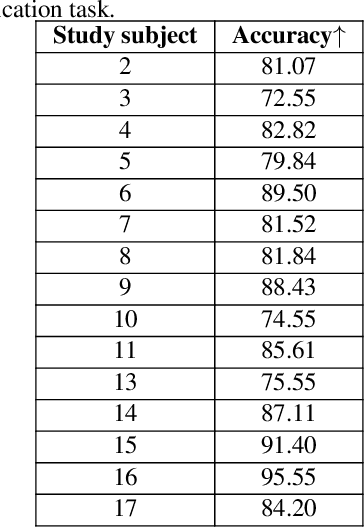

Abstract: Smartwatches have rapidly evolved to capture physiological signals accurately. As an appealing application, stress detection has attracted many studies due to its potential benefits to human health. It is worthwhile to investigate the applicability of deep neural networks (DNNs) to enhance human decision-making through physiological signals. However, manually engineering DNNs proves a tedious task, especially in stress detection, due to the complex nature of this phenomenon. To this end, we propose an optimized deep neural network training scheme based on neural architecture search that uses only wrist-worn data from WESAD. Experiments show that our approach outperforms traditional ML methods by 8.22% and 6.02% in the three-state and two-state classifiers, respectively, using the combination of WESAD wrist signals. Moreover, the proposed method can minimize the need for human-designed DNNs while improving performance by 4.39% (three-state) and 8.99% (binary).

A Survey on Federated Learning and its Applications for Accelerating Industrial Internet of Things

Apr 21, 2021

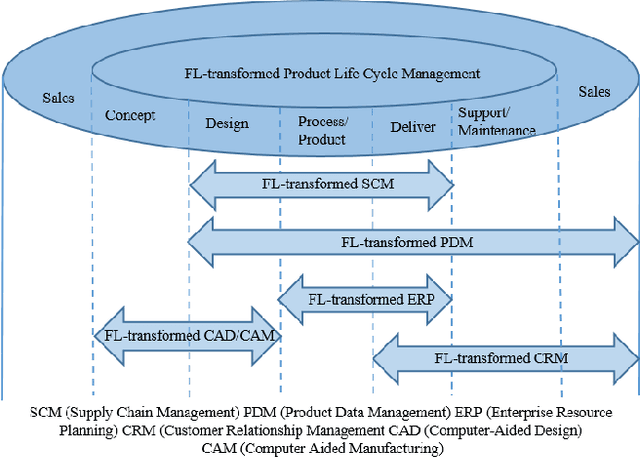

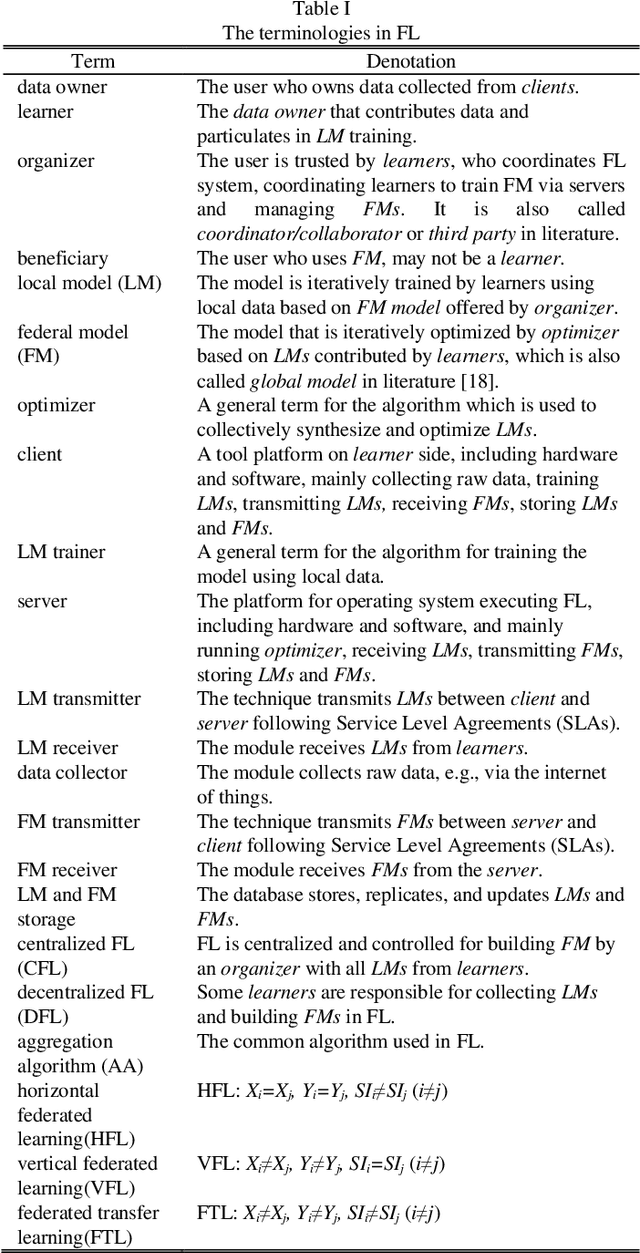

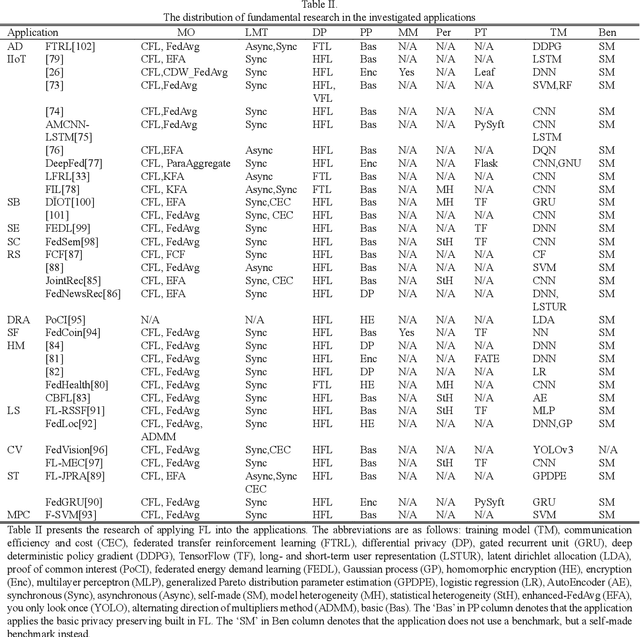

Abstract: Federated learning (FL) brings collaborative intelligence into industries without centralized training data to accelerate the process of Industry 4.0 on the edge computing level. FL solves the dilemma in which enterprises wish to make use of data intelligence but have security concerns. To accelerate the industrial Internet of Things by further leveraging FL, existing achievements on FL are reviewed from three aspects: 1) defining terminology and elaborating a general framework of FL that accommodates various scenarios; 2) discussing the state of the art of FL in fundamental research, including data partitioning, privacy preservation, model optimization, local model transportation, personalization, motivation mechanism, platform & tools, and benchmark; 3) discussing the impacts of FL from the economic perspective. To attract more attention from industrial academia and practice, an FL-transformed manufacturing paradigm is presented, future research directions of FL are given, and possible immediate applications in the Industry 4.0 domain are proposed.
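
As a small illustration of training "without centralized training data", the sketch below shows the aggregation step of federated averaging (FedAvg), a widely used FL baseline in which a server combines client model parameters weighted by each client's local sample count. The abstract does not name FedAvg, so this choice, the function name `fed_avg`, and the toy numbers are assumptions made for illustration only.

```python
def fed_avg(client_weights, client_sizes):
    """Sample-count-weighted average of client parameter vectors.

    Only parameters and sample counts reach the server; raw data stays local.
    """
    total = sum(client_sizes)
    dim = len(client_weights[0])
    return [
        sum(w[i] * n for w, n in zip(client_weights, client_sizes)) / total
        for i in range(dim)
    ]


# Two hypothetical factories with locally trained two-parameter models.
clients = [[1.0, 2.0], [3.0, 4.0]]
sizes = [100, 300]
global_weights = fed_avg(clients, sizes)
print(global_weights)  # [2.5, 3.5]
```

The client with more local samples pulls the global model further toward its own parameters. A full FL round would also include local training and redistribution of the global model, which are omitted here.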
