Pekka Siirtola

Feature Relevance Analysis to Explain Concept Drift -- A Case Study in Human Activity Recognition

Jan 20, 2023

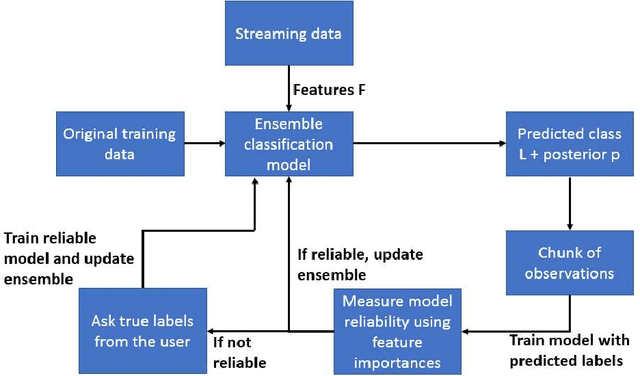

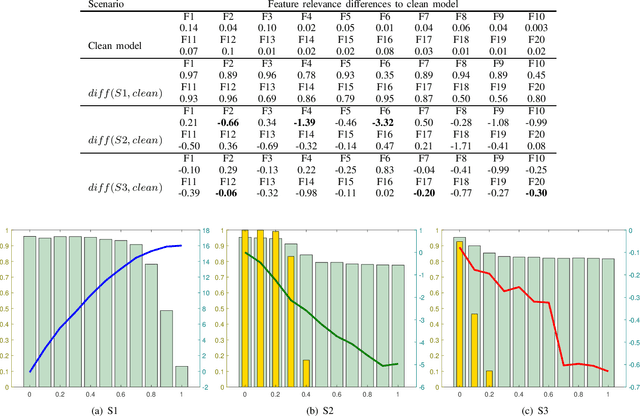

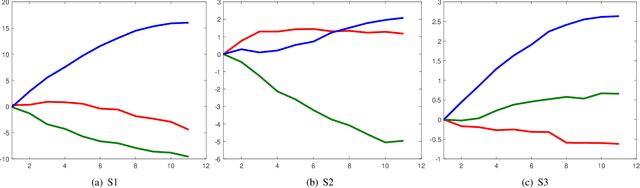

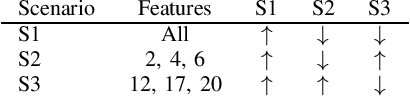

Abstract: This article studies how to detect and explain concept drift. Human activity recognition is used as a case study, together with an online batch learning situation in which the quality of the labels used in the model updating process starts to decrease. Drift detection is based on identifying the set of features with the largest relevance difference between the drifting model and a model that is known to be accurate, and on monitoring how the relevance of these features changes over time. The main result of this article is that feature relevance analysis can be used not only to detect concept drift but also to explain the reason for the drift, provided that a limited number of typical reasons for concept drift are predefined. To explain the reason for the drift, the article studies how these predefined reasons affect feature relevance. It is shown that each of them has a unique effect on feature relevance, and these effects can be used to explain the reason for concept drift.
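The detection idea in this abstract can be illustrated with a minimal sketch. The abstract does not say how feature relevance is computed, how many features are monitored, or what decision rule is used, so the function names, the mean absolute difference score, and the threshold below are all assumptions made for illustration only.

```python
def top_k_relevance_gaps(reference, drifting, k):
    """Return the indices of the k features whose relevance differs most
    between the reference (accurate) model and the drifting model."""
    gaps = [abs(r - d) for r, d in zip(reference, drifting)]
    order = sorted(range(len(gaps)), key=lambda i: gaps[i], reverse=True)
    return order[:k]


def drift_score(reference, current, monitored):
    """Mean absolute relevance difference over the monitored features.
    This particular score is an assumption, not the article's measure."""
    return sum(abs(reference[i] - current[i]) for i in monitored) / len(monitored)


def detect_drift(reference, relevance_over_time, monitored, threshold):
    """Return the index of the first batch whose drift score exceeds the
    (hypothetical) threshold, or None if no batch does."""
    for t, current in enumerate(relevance_over_time):
        if drift_score(reference, current, monitored) > threshold:
            return t
    return None


# Toy relevance vectors for four features (made-up numbers).
reference = [0.5, 0.3, 0.2, 0.0]
drifting = [0.1, 0.3, 0.5, 0.1]
monitored = top_k_relevance_gaps(reference, drifting, k=2)

# Relevance of the updated model after each batch.
history = [
    [0.5, 0.3, 0.2, 0.0],
    [0.4, 0.3, 0.3, 0.0],
    [0.2, 0.3, 0.45, 0.05],
]
first_drift_batch = detect_drift(reference, history, monitored, threshold=0.2)
```

In this toy run, features 0 and 2 are selected for monitoring, and the third batch is the first one flagged.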

StressNAS: Affect State and Stress Detection Using Neural Architecture Search

Aug 26, 2021

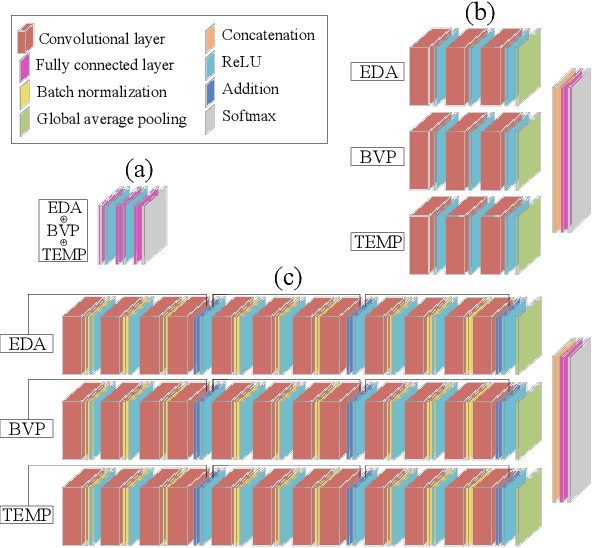

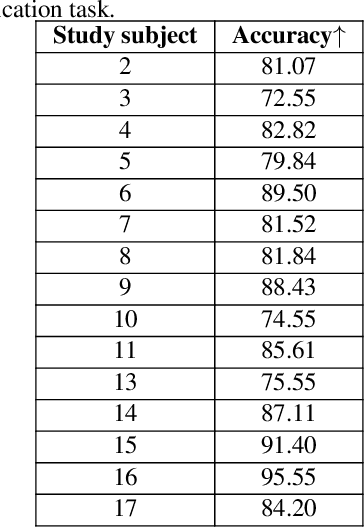

Abstract: Smartwatches have rapidly evolved towards capabilities to accurately capture physiological signals. As an appealing application, stress detection attracts many studies due to its potential benefits to human health. It is promising to investigate the applicability of deep neural networks (DNNs) to enhance human decision-making through physiological signals. However, manually engineering a DNN proves tedious, especially in stress detection, due to the complex nature of this phenomenon. To this end, we propose an optimized deep neural network training scheme based on neural architecture search, using only wrist-worn data from WESAD. Experiments show that our approach outperforms traditional ML methods by 8.22% and 6.02% in the three-state and two-state classifiers, respectively, using the combination of WESAD wrist signals. Moreover, the proposed method can minimize the need for human-designed DNNs while improving performance by 4.39% (three-state) and 8.99% (binary).

Personalizing human activity recognition models using incremental learning

May 29, 2019

Abstract: In this study, the aim is to personalize inertial sensor data-based human activity recognition models using incremental learning. At first, the recognition is based on a user-independent model. However, when personal streaming data becomes available, the incremental learning-based recognition model can be updated, and therefore personalized, based on the data without interrupting the user. The incremental learning algorithm used is Learn++, an ensemble method that can use any classifier as a base classifier. The study compares three different base classifiers: linear discriminant analysis (LDA), quadratic discriminant analysis (QDA), and classification and regression tree (CART). Experiments are based on a publicly available data set, and they show that even a small personal training data set can improve the classification accuracy. The improvement is 4.6 percentage points using LDA as the base classifier, 2.0 percentage points using QDA, and 2.3 percentage points using CART. However, if the user-independent model used in the first phase of the recognition process is not accurate enough, personalization cannot improve recognition accuracy.

From User-independent to Personal Human Activity Recognition Models Exploiting the Sensors of a Smartphone

May 29, 2019

Abstract: In this study, a novel method to obtain user-dependent human activity recognition models unobtrusively by exploiting the sensors of a smartphone is presented. The recognition consists of two models: a sensor fusion-based user-independent model for data labeling and a single sensor-based user-dependent model for final recognition. The presented method is tested with a human activity data set, including data from an accelerometer and a magnetometer, and with two classifiers. Comparing the detection accuracies of the proposed method to those of a traditional user-independent model shows that the presented method has potential: in nine cases out of ten it is better than the traditional method. However, more experiments using different sensor combinations should be made to show the full potential of the method.
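The two-model structure described above can be sketched as follows. The abstract does not name the classifiers or the features, so this sketch swaps in a simple nearest-centroid classifier and a hypothetical rule-based stand-in for the user-independent model; every name and number here is an assumption for illustration.

```python
def train_nearest_centroid(samples, labels):
    """Compute one centroid (mean feature vector) per label."""
    sums, counts = {}, {}
    for x, y in zip(samples, labels):
        if y not in sums:
            sums[y] = [0.0] * len(x)
            counts[y] = 0
        sums[y] = [s + v for s, v in zip(sums[y], x)]
        counts[y] += 1
    return {y: [s / counts[y] for s in sums[y]] for y in sums}


def predict_nearest_centroid(centroids, x):
    """Return the label whose centroid is closest to x (squared Euclidean)."""
    def sq_dist(c):
        return sum((a - b) ** 2 for a, b in zip(x, c))
    return min(centroids, key=lambda y: sq_dist(centroids[y]))


def personalize(user_independent_model, fused_samples, single_sensor_samples):
    """Label the user's own data with the sensor-fusion model, then fit a
    user-dependent model on single-sensor features with those labels."""
    pseudo_labels = [user_independent_model(x) for x in fused_samples]
    return train_nearest_centroid(single_sensor_samples, pseudo_labels)


# Hypothetical fused features: (accelerometer value, magnetometer value).
fused = [(0.1, 0.2), (0.2, 0.1), (2.0, 1.9), (2.1, 2.2)]

def user_independent_model(x):
    # Stand-in for a trained sensor-fusion classifier (an assumption).
    return "walk" if x[0] + x[1] > 2 else "sit"

# The personal model only sees the accelerometer feature.
single = [(x[0],) for x in fused]
personal_model = personalize(user_independent_model, fused, single)
prediction_low = predict_nearest_centroid(personal_model, (0.3,))
prediction_high = predict_nearest_centroid(personal_model, (1.8,))
```

The point of the design is that no manual labeling is needed: the user-independent model supplies the labels, and the final recognition relies on a single sensor.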

Effect of context in swipe gesture-based continuous authentication on smartphones

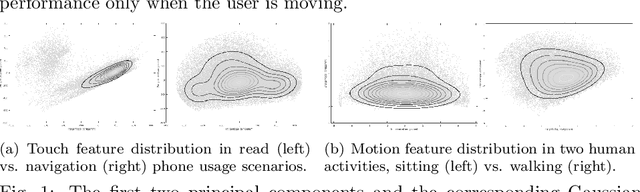

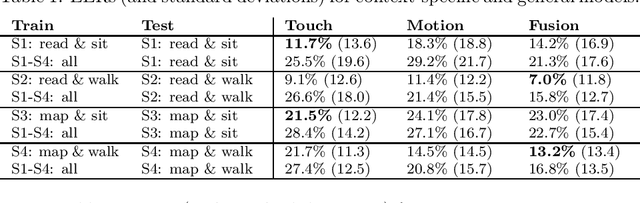

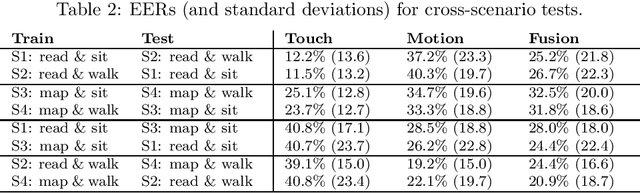

May 28, 2019

Abstract: This work investigates how context should be taken into account when performing continuous authentication of a smartphone user based on touchscreen and accelerometer readings extracted from swipe gestures. The study is conducted on the publicly available HMOG dataset consisting of 100 study subjects performing pre-defined reading and navigation tasks while sitting and walking. It is shown that context-specific models are needed for different smartphone usage and human activity scenarios to minimize authentication error. Also, the experimental results suggest that utilizing phone movement improves swipe gesture-based verification performance only when the user is moving.

Importance of user inputs while using incremental learning to personalize human activity recognition models

May 28, 2019

Abstract: In this study, the importance of user inputs is studied in the context of personalizing human activity recognition models using incremental learning. Inertial sensor data from three body positions are used, and the classification is based on the Learn++ ensemble method. Three different approaches to updating models are compared: non-supervised, semi-supervised, and supervised. The non-supervised approach relies fully on predicted labels, the supervised approach relies fully on user-labeled data, and the proposed semi-supervised method is a combination of the two. Our experiments show that by relying on predicted labels with high confidence, and asking the user to label only uncertain observations (from 12% to 26% of the observations, depending on the base classifier used), error rates almost as low as those of the supervised approach can be achieved: the difference was less than 2 percentage points. Moreover, unlike the non-supervised approach, the semi-supervised approach does not suffer from drastic concept drift, and thus the error rate of the non-supervised approach is over 5 percentage points higher than that of the semi-supervised approach.
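The semi-supervised labeling rule described above can be sketched as a confidence-based routing step. The abstract does not give the confidence measure or the cut-off, so the threshold value, the function name `build_update_labels`, and the `ask_user` callback are hypothetical.

```python
def build_update_labels(predictions, confidence_threshold, ask_user):
    """Choose a label for each observation in a model update batch.

    predictions: list of (predicted_label, confidence) pairs.
    ask_user: callback returning the user's label for an observation index.
    Returns the chosen labels and the number of observations sent to the user.
    """
    labels = []
    n_asked = 0
    for index, (predicted_label, confidence) in enumerate(predictions):
        if confidence >= confidence_threshold:
            # Confident prediction: trust the model's own label.
            labels.append(predicted_label)
        else:
            # Uncertain prediction: ask the user for the label.
            labels.append(ask_user(index))
            n_asked += 1
    return labels, n_asked


# Toy batch: the second prediction is wrong and has low confidence.
true_labels = ["walk", "sit", "run", "sit"]
predictions = [("walk", 0.95), ("run", 0.55), ("run", 0.90), ("sit", 0.80)]
labels, n_asked = build_update_labels(
    predictions, confidence_threshold=0.75, ask_user=lambda i: true_labels[i]
)
```

Setting the threshold to 0 reproduces the non-supervised approach (no questions asked), and setting it above the maximum possible confidence reproduces the supervised approach (every observation labeled by the user).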
