Feature Relevance Analysis to Explain Concept Drift -- A Case Study in Human Activity Recognition

Jan 20, 2023

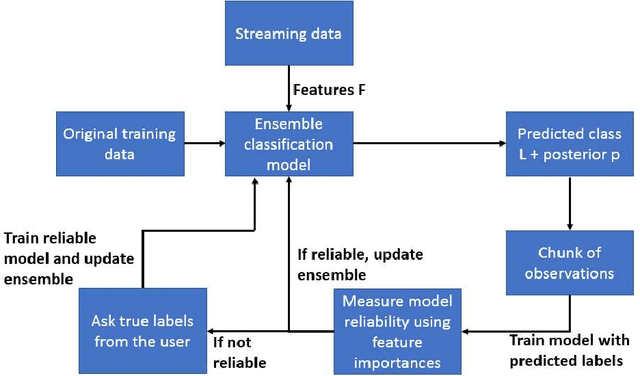

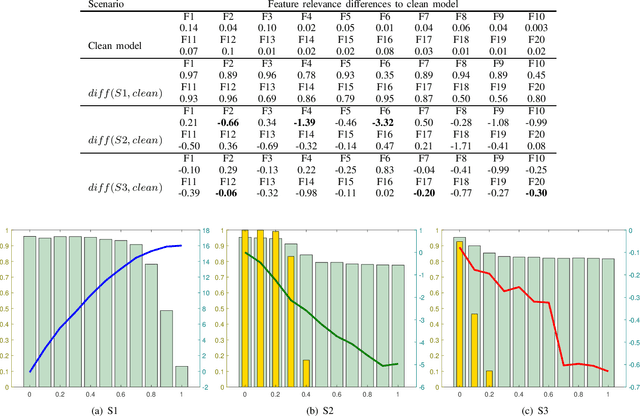

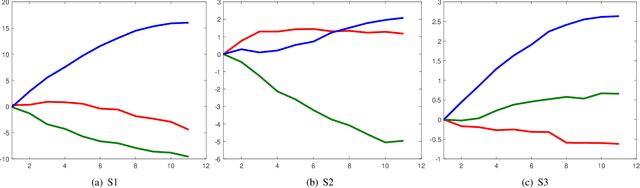

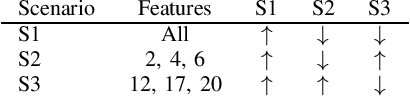

This article studies how to detect and explain concept drift. Human activity recognition is used as a case study, together with an online batch learning setting in which the quality of the labels used to update the model starts to decrease. Drift detection is based on identifying the set of features whose relevance differs most between the drifting model and a model that is known to be accurate, and then monitoring how the relevance of these features changes over time. The main result is that feature relevance analysis can be used not only to detect concept drift but also to explain its cause, provided that a limited number of typical causes are predefined. To explain the cause of the drift, the article studies how each predefined cause affects feature relevance. It is shown that each cause has a unique effect on feature relevance, and that these effects can be used to identify the reason for the concept drift.
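The detection idea in the abstract can be illustrated with a minimal sketch. Everything here is an assumption made for illustration rather than the article's method: the abstract does not name a relevance measure, so the absolute Pearson correlation between each feature and the label stands in for it, and the function names (`feature_relevance`, `top_changed_features`, `monitor`, `make_batch`), the synthetic data, and the threshold are all hypothetical.

```python
import numpy as np


def feature_relevance(X, y):
    """Stand-in relevance (assumption): |Pearson correlation| of each feature with the label."""
    Xc = X - X.mean(axis=0)
    yc = y - y.mean()
    denom = np.sqrt((Xc ** 2).sum(axis=0) * (yc ** 2).sum())
    denom = np.where(denom == 0, 1.0, denom)  # avoid division by zero for constant columns
    return np.abs(Xc.T @ yc) / denom


def top_changed_features(ref_rel, drift_rel, k):
    """Indices of the k features whose relevance differs most between the two models."""
    diff = np.abs(ref_rel - drift_rel)
    return np.argsort(diff)[::-1][:k]


def monitor(ref_rel, batches, tracked, threshold):
    """Per-batch mean relevance deviation on the tracked features, plus drift flags."""
    scores = []
    for X, y in batches:
        rel = feature_relevance(X, y)
        scores.append(np.abs(rel[tracked] - ref_rel[tracked]).mean())
    scores = np.array(scores)
    return scores, scores > threshold


def make_batch(rng, n, n_features, flip_rate):
    """Synthetic batch (assumption): feature 0 determines the label; labels flipped at flip_rate."""
    X = rng.normal(size=(n, n_features))
    y = (X[:, 0] > 0).astype(float)
    flip = rng.random(n) < flip_rate
    return X, np.where(flip, 1.0 - y, y)


rng = np.random.default_rng(0)
ref_rel = feature_relevance(*make_batch(rng, 2000, 5, flip_rate=0.0))    # accurate reference
drift_rel = feature_relevance(*make_batch(rng, 2000, 5, flip_rate=0.4))  # degraded labels
tracked = top_changed_features(ref_rel, drift_rel, k=1)

batches = [make_batch(rng, 2000, 5, 0.0), make_batch(rng, 2000, 5, 0.4)]
scores, flags = monitor(ref_rel, batches, tracked, threshold=0.3)
```

In this toy setup, label noise lowers the relevance of the informative feature, so that feature is the one tracked and the noisy batch is the one flagged. Explaining the cause, as the abstract describes, would additionally require comparing the observed relevance change against the pattern produced by each predefined cause, which this sketch does not attempt.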
