Robust and Energy-efficient PPG-based Heart-Rate Monitoring

Mar 28, 2022

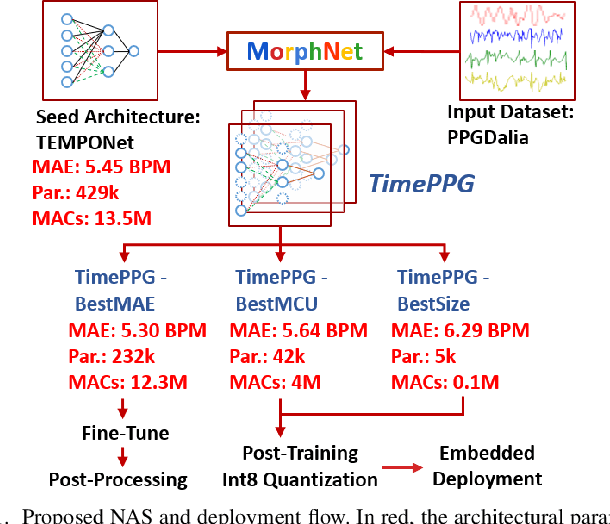

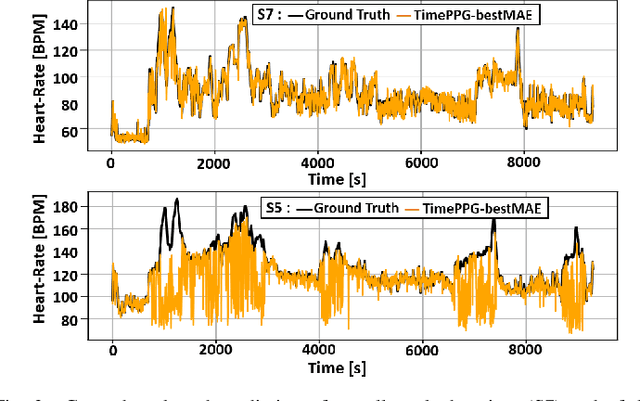

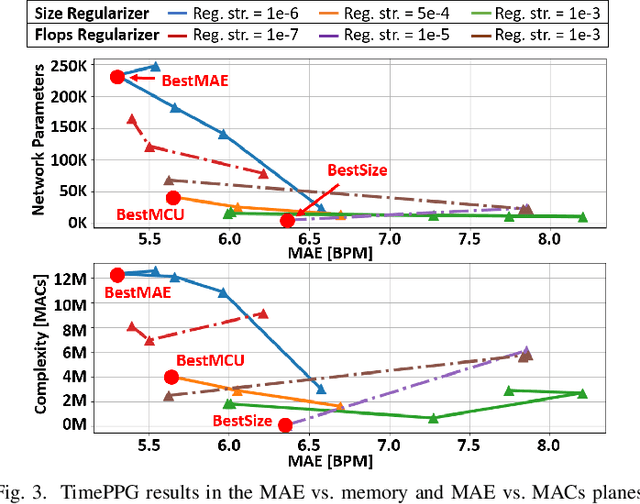

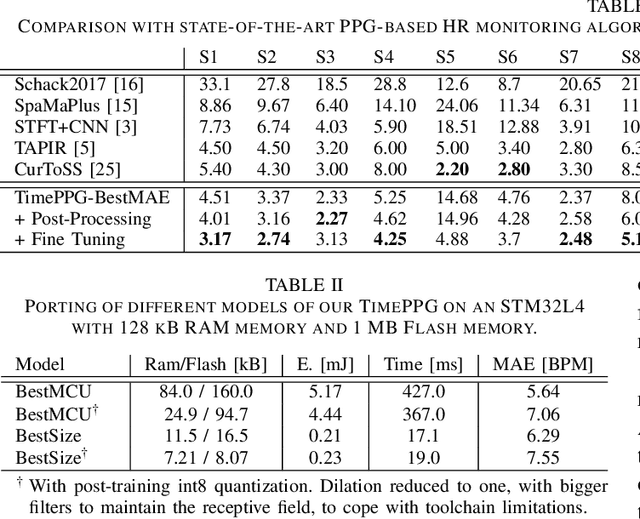

A wrist-worn photoplethysmography (PPG) sensor coupled with a lightweight algorithm can run on a microcontroller unit (MCU) to enable non-invasive and comfortable monitoring, but ensuring robust PPG-based heart-rate monitoring in the presence of motion artifacts is still an open challenge. Recent state-of-the-art algorithms combine PPG and inertial signals to mitigate the effect of motion artifacts. However, these approaches suffer from limited generality, and their deployment on MCU-based edge nodes has not been investigated. In this work, we tackle both problems by proposing the use of hardware-friendly Temporal Convolutional Networks (TCNs) for PPG-based heart-rate estimation. Starting from a single "seed" TCN, we leverage an automatic Neural Architecture Search (NAS) approach to derive a rich family of models. Among them, we obtain a TCN that outperforms the previous state-of-the-art on the largest PPG dataset available (PPGDalia), achieving a Mean Absolute Error (MAE) of just 3.84 Beats Per Minute (BPM). Furthermore, we also tested a set of smaller yet still accurate networks (MAE of 5.64 - 6.29 BPM) that can be deployed on a commercial MCU (STM32L4). These networks require as few as 5k parameters and reach a latency of 17.1 ms while consuming just 0.21 mJ per inference.
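To make the quantities in the abstract concrete, the following is a minimal sketch in plain Python of three things: the dilated causal 1-D convolution that TCNs are generally built from, the MAE metric used to report accuracy, and the average power implied by the quoted energy and latency figures. It is not the authors' architecture or code. The function names, the single-channel simplification, and the toy inputs are all assumptions made for illustration, and the average-power figure is derived here from the 0.21 mJ and 17.1 ms values rather than reported in the abstract.

```python
def causal_dilated_conv1d(x, w, dilation=1):
    """Single-channel dilated causal convolution (illustrative helper).

    y[t] = sum_k w[k] * x[t - k * dilation], with samples before t = 0
    treated as zero, so each output depends only on past and present input.
    """
    y = []
    for t in range(len(x)):
        acc = 0.0
        for k, wk in enumerate(w):
            i = t - k * dilation  # tap k looks k * dilation samples back
            if i >= 0:
                acc += wk * x[i]
        y.append(acc)
    return y


def mean_absolute_error(predicted_bpm, true_bpm):
    """Mean absolute error between predicted and reference heart rates (BPM)."""
    errors = [abs(p - t) for p, t in zip(predicted_bpm, true_bpm)]
    return sum(errors) / len(errors)


def average_power_mw(energy_mj, latency_ms):
    """Average power over one inference: mJ / ms = W, converted to mW."""
    return energy_mj / latency_ms * 1000.0


# Toy signal: with kernel [1, 1] and dilation 2, y[t] = x[t] + x[t - 2].
print(causal_dilated_conv1d([1, 2, 3, 4], [1, 1], dilation=2))

# Toy heart-rate predictions against reference values (made-up numbers).
print(mean_absolute_error([70, 80], [72, 77]))

# Average power implied by the abstract's 0.21 mJ and 17.1 ms figures.
print(round(average_power_mw(0.21, 17.1), 2))
```

Increasing the dilation widens the span of past samples a layer can see without adding weights, which is one reason such layers suit parameter-constrained MCU targets.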
