Carlos Santos

Real-Time, Single-Ear, Wearable ECG Reconstruction, R-Peak Detection, and HR/HRV Monitoring

May 03, 2025

Abstract: Biosignal monitoring, in particular tracking heart activity through heart rate (HR) and heart rate variability (HRV), is vital for continuous, non-invasive assessment of physiological and cognitive states. Recent studies have explored compact, head-worn devices for HR and HRV monitoring to improve usability and reduce stigma. However, this approach faces three challenges: the current reliance on wet electrodes, which limits usability; the weakness of ear-derived signals, which complicates HR/HRV extraction; and the unsuitability of current algorithms for embedded deployment. This work introduces a single-ear wearable system for real-time electrocardiogram (ECG) parameter estimation that runs directly on BioGAP, an energy-efficient device for biosignal acquisition and processing. By combining state-of-the-art (SoA) in-ear electrode technology, an optimized DeepMF algorithm, and BioGAP, our proposed subject-independent approach allows robust extraction of HR/HRV parameters directly on the device at just 36.7 µJ per inference, with performance comparable to the current state-of-the-art architecture: mean errors of 0.49 bpm for HR and 25.82 ms for HRV, and an estimated battery life of 36 h at a total system power consumption of 7.6 mW. Clinical relevance: The ability to reconstruct ECG signals and extract HR and HRV paves the way for continuous, unobtrusive cardiovascular monitoring with head-worn devices. In particular, integrating cardiovascular measurements into everyday devices (such as earbuds) has potential for continuous at-home monitoring that enables early detection of cardiovascular irregularities.
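To make the HR/HRV step concrete, the following is a minimal sketch of how HR and HRV could be derived once R-peak times are available. It is not the paper's on-device pipeline: the abstract does not say which HRV metric is reported, so the SDNN and RMSSD metrics used here are assumptions, and the function name `hr_hrv_from_r_peaks` is hypothetical.

```python
import math


def hr_hrv_from_r_peaks(r_peak_times_s):
    """Return (mean HR in bpm, SDNN in ms, RMSSD in ms).

    r_peak_times_s: ascending R-peak timestamps in seconds.
    SDNN and RMSSD are common HRV metrics; which one the paper
    reports is an assumption, not stated in the abstract.
    """
    if len(r_peak_times_s) < 3:
        raise ValueError("need at least three R-peaks")

    # RR intervals: time between consecutive R-peaks, in milliseconds.
    rr_ms = [(b - a) * 1000.0
             for a, b in zip(r_peak_times_s, r_peak_times_s[1:])]

    mean_rr = sum(rr_ms) / len(rr_ms)
    hr_bpm = 60000.0 / mean_rr  # 60,000 ms per minute

    # SDNN: sample standard deviation of the RR intervals.
    sdnn = math.sqrt(
        sum((rr - mean_rr) ** 2 for rr in rr_ms) / (len(rr_ms) - 1)
    )

    # RMSSD: root mean square of successive RR differences.
    diffs = [b - a for a, b in zip(rr_ms, rr_ms[1:])]
    rmssd = math.sqrt(sum(d * d for d in diffs) / len(diffs))

    return hr_bpm, sdnn, rmssd
```

For perfectly regular peaks at 0, 1, 2 and 3 s, this yields 60 bpm with zero variability under both metrics.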

Improving Image Classification of Knee Radiographs: An Automated Image Labeling Approach

Sep 06, 2023

Abstract: Large numbers of radiographic images are available in knee radiology practices and could be used to train deep learning models for diagnosing knee abnormalities. However, those images typically lack readily available labels because of the limitations of human annotation. The purpose of our study was to develop an automated labeling approach that improves an image classification model's ability to distinguish normal knee images from those with abnormalities or prior arthroplasty. The automated labeler was trained on a small set of labeled data and then used to label a much larger set of unlabeled data, further improving image classification performance for knee radiographic diagnosis. We developed our approach using 7,382 patients and validated it on a separate set of 637 patients. The final image classification model, trained using both manually labeled and pseudo-labeled data, achieved a higher weighted average AUC (WAUC: 0.903) and higher AUC-ROC values for all classes (normal AUC-ROC: 0.894; abnormal AUC-ROC: 0.896; arthroplasty AUC-ROC: 0.990) than the baseline model trained using only manually labeled data (WAUC: 0.857; normal AUC-ROC: 0.842; abnormal AUC-ROC: 0.848; arthroplasty AUC-ROC: 0.987). DeLong tests show that the improvement is significant on normal (p-value < 0.002) and abnormal (p-value < 0.001) images. Our findings demonstrate that the proposed automated labeling approach significantly improves image classification performance for radiographic knee diagnosis, which could facilitate patient care and the curation of large knee datasets.
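The labeling workflow described above (train a labeler on a small labeled set, label the larger unlabeled set, then train the final model on both) can be sketched as follows. This is a toy illustration only: a nearest-centroid classifier on hand-made feature vectors stands in for the deep learning models, and all function names are hypothetical.

```python
def fit_centroids(features, labels):
    """Toy 'model': one mean feature vector (centroid) per class."""
    grouped = {}
    for x, y in zip(features, labels):
        grouped.setdefault(y, []).append(x)
    return {
        y: [sum(col) / len(xs) for col in zip(*xs)]
        for y, xs in grouped.items()
    }


def predict(centroids, x):
    """Return the class whose centroid is closest to x."""
    def sq_dist(c):
        return sum((a - b) ** 2 for a, b in zip(x, c))
    return min(centroids, key=lambda y: sq_dist(centroids[y]))


def pseudo_label_and_retrain(labeled_x, labeled_y, unlabeled_x):
    """Label the unlabeled set, then retrain on manual + pseudo labels."""
    # Step 1: train the automated labeler on the small labeled set.
    labeler = fit_centroids(labeled_x, labeled_y)
    # Step 2: assign pseudo-labels to the larger unlabeled set.
    pseudo_y = [predict(labeler, x) for x in unlabeled_x]
    # Step 3: train the final model on both sets combined.
    final_model = fit_centroids(labeled_x + unlabeled_x,
                                labeled_y + pseudo_y)
    return final_model, pseudo_y


if __name__ == "__main__":
    labeled_x = [[0.0, 0.0], [1.0, 1.0]]
    labeled_y = ["normal", "abnormal"]
    unlabeled_x = [[0.1, 0.2], [0.9, 0.8], [0.2, 0.0]]
    model, pseudo = pseudo_label_and_retrain(labeled_x, labeled_y,
                                             unlabeled_x)
    print(pseudo)
```

A real pipeline would typically also filter pseudo-labels by confidence before retraining; whether the study does so is not stated in the abstract.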

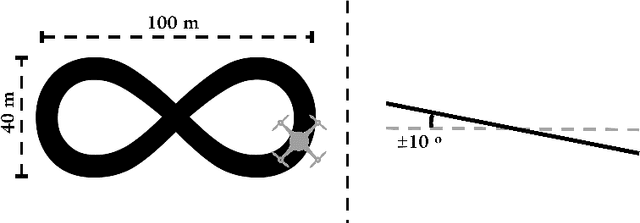

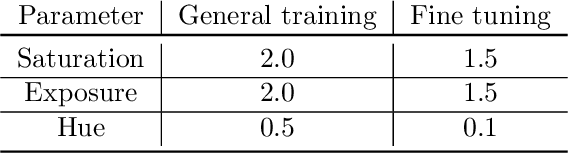

Autonomous Aerial Robot for High-Speed Search and Intercept Applications

Dec 10, 2021

Abstract: In recent years, high-speed navigation and environment interaction in aerial robotics has become a field of interest for several academic and industrial research studies. In particular, Search and Intercept (SaI) applications for aerial robots are a compelling research area because of their potential usability in several environments. Nevertheless, SaI tasks pose development challenges regarding sensor weight, on-board computation resources, actuation design, and algorithms for perception and control, among others. In this work, a fully autonomous aerial robot for high-speed object grasping is proposed. As an additional sub-task, our system is able to autonomously pierce balloons mounted on poles close to the surface. Our first contribution is the design of the aerial robot at the actuation and sensory level, consisting of a novel gripper design with additional sensors that enable the robot to grasp objects at high speeds. The second contribution is a complete software framework consisting of perception, state estimation, motion planning, motion control and mission control, in order to perform the autonomous grasping mission rapidly and robustly. Our approach has been validated in a challenging international competition and has shown outstanding results, being able to autonomously search for, follow and grasp a moving object at 6 m/s in an outdoor environment.
