Alejandro Rodriguez-Ramos

Autonomous Aerial Robot for High-Speed Search and Intercept Applications

Dec 10, 2021

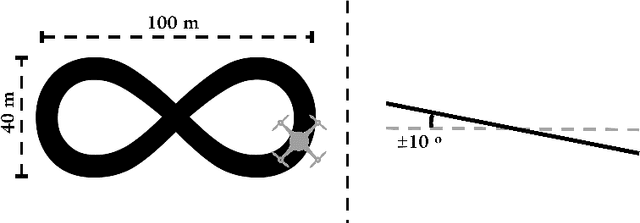

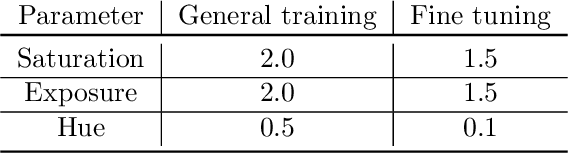

Abstract: In recent years, high-speed navigation and environment interaction in the context of aerial robotics have become a field of interest for several academic and industrial research studies. In particular, Search and Intercept (SaI) applications for aerial robots are a compelling research area due to their potential usability in several environments. Nevertheless, SaI tasks pose development challenges regarding sensory weight, on-board computation resources, actuation design, and algorithms for perception and control, among others. In this work, a fully autonomous aerial robot for high-speed object grasping is proposed. As an additional sub-task, our system is able to autonomously pierce balloons located on poles close to the surface. Our first contribution is the design of the aerial robot at the actuation and sensory level, consisting of a novel gripper design with additional sensors that enable the robot to grasp objects at high speeds. The second contribution is a complete software framework consisting of perception, state estimation, motion planning, motion control and mission control, in order to perform the autonomous grasping mission rapidly and robustly. Our approach has been validated in a challenging international competition and has shown outstanding results, being able to autonomously search for, follow and grasp a moving object at 6 m/s in an outdoor environment.

Skyeye Team at MBZIRC 2020: A team of aerial and ground robots for GPS-denied autonomous fire extinguishing in an urban building scenario

Apr 05, 2021

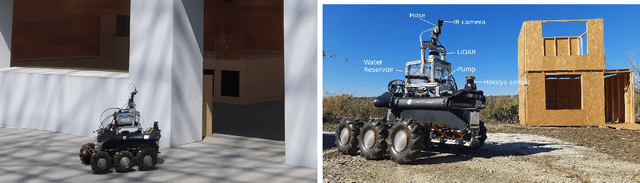

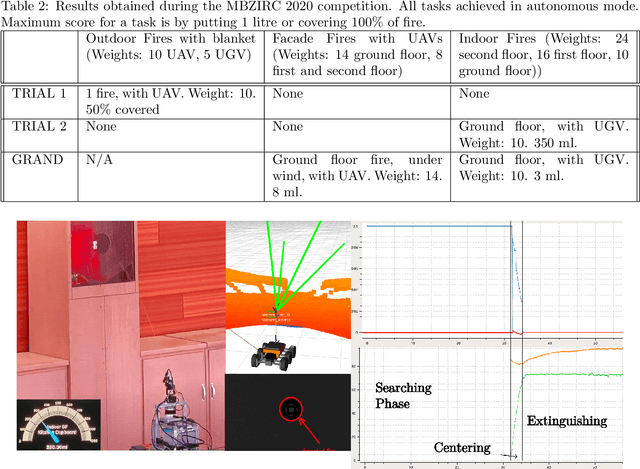

Abstract: The paper presents a framework for fire extinguishing in an urban scenario by a team of aerial and ground robots. The system was developed for Challenge 3 of the 2020 Mohamed Bin Zayed International Robotics Challenge (MBZIRC). The challenge required autonomously detecting, locating and extinguishing fires on different floors of a building, as well as in its surroundings. The multi-robot system developed consists of a heterogeneous robot team of up to three Unmanned Aerial Vehicles (UAVs) and one Unmanned Ground Vehicle (UGV). The paper describes the main hardware and software components of the UAV and UGV platforms. It also presents the main algorithmic components of the system: a 3D LIDAR-based mapping and localization module able to work in GPS-denied scenarios; a global planner and a fast local re-planning system for robot navigation; infrared-based perception and robot actuation control for fire extinguishing; and a mission executive and coordination module based on Behavior Trees. Finally, the paper describes the results obtained during the competition, where the system worked fully autonomously and scored in all the trials performed. The system contributed to the third place achieved by the Skyeye team in the Grand Challenge of MBZIRC 2020.
