Luis Merino

Radio-based Multi-Robot Odometry and Relative Localization

Sep 30, 2025

Abstract: Radio-based methods such as Ultra-Wideband (UWB) and RAdio Detection And Ranging (radar), which have traditionally seen limited adoption in robotics, are experiencing a boost in popularity thanks to their robustness to harsh environmental conditions and cluttered environments. This work proposes a multi-robot UGV-UAV localization system that leverages the two technologies with inexpensive and readily-available sensors, such as Inertial Measurement Units (IMUs) and wheel encoders, to estimate the relative position of an aerial robot with respect to a ground robot. The first stage of the system pipeline includes a nonlinear optimization framework to trilaterate the location of the aerial platform based on UWB range data, and a radar pre-processing module with loosely coupled ego-motion estimation which has been adapted for a multi-robot scenario. Then, the pre-processed radar data as well as the relative transformation are fed to a pose-graph optimization framework with odometry and inter-robot constraints. The system, implemented for the Robot Operating System 2 (ROS 2) with the Ceres optimizer, has been validated in Software-in-the-Loop (SITL) simulations and in a real-world dataset. The proposed relative localization module outperforms state-of-the-art closed-form methods, which are less robust to noise. Our SITL environment includes a custom Gazebo plugin for generating realistic UWB measurements modeled after real data. Conveniently, the proposed factor graph formulation makes the system readily extensible to full Simultaneous Localization And Mapping (SLAM). Finally, all the code and experimental data are publicly available to support reproducibility and to serve as a common open dataset for benchmarking.
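As a rough illustration of range-based trilateration by nonlinear optimization, the sketch below fits a 3D position to noise-free ranges from four known anchors. It is not the authors' implementation: it uses a plain Gauss-Newton loop with step halving in pure Python instead of Ceres, and the anchor layout, function names and initial guess are all hypothetical.

```python
import math


def solve3(a, b):
    """Solve the 3x3 linear system a @ x = b (Gaussian elimination, partial pivoting)."""
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, 3):
            f = m[r][col] / m[col][col]
            for c in range(col, 4):
                m[r][c] -= f * m[col][c]
    x = [0.0, 0.0, 0.0]
    for r in (2, 1, 0):
        s = m[r][3] - sum(m[r][c] * x[c] for c in range(r + 1, 3))
        x[r] = s / m[r][r]
    return x


def cost(anchors, ranges, p):
    """Sum of squared range residuals at position p."""
    return sum((math.dist(p, a) - r) ** 2 for a, r in zip(anchors, ranges))


def trilaterate(anchors, ranges, guess, iterations=30):
    """Minimise sum_i (||p - a_i|| - r_i)^2 over p with Gauss-Newton steps."""
    p = list(guess)
    for _ in range(iterations):
        jtj = [[0.0] * 3 for _ in range(3)]
        jtr = [0.0] * 3
        for a, r in zip(anchors, ranges):
            diff = [p[k] - a[k] for k in range(3)]
            dist = math.sqrt(sum(d * d for d in diff)) or 1e-9
            res = dist - r                    # range residual
            jac = [d / dist for d in diff]    # d(residual)/dp is the unit direction
            for i in range(3):
                jtr[i] += jac[i] * res
                for j in range(3):
                    jtj[i][j] += jac[i] * jac[j]
        step = solve3(jtj, [-g for g in jtr])
        # Halve the step until the cost does not increase (simple safeguard).
        scale, current = 1.0, cost(anchors, ranges, p)
        while scale > 1e-6:
            candidate = [p[k] + scale * step[k] for k in range(3)]
            if cost(anchors, ranges, candidate) <= current:
                p = candidate
                break
            scale *= 0.5
    return p


# Hypothetical anchors (metres) and a ground-truth position used to fake ranges.
anchors = [(0.5, 0.5, 0.0), (-0.5, 0.5, 0.0), (0.5, -0.5, 0.0), (-0.5, -0.5, 0.5)]
truth = (1.0, -0.5, 2.0)
ranges = [math.dist(truth, a) for a in anchors]
estimate = trilaterate(anchors, ranges, guess=(0.0, 0.0, 1.0))
```

With real UWB data the ranges are noisy and may contain outliers, so a robust loss and a well-tested solver would normally replace this hand-rolled loop.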

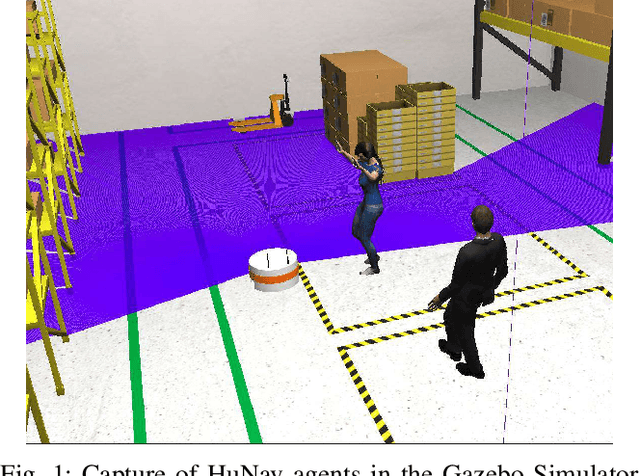

HuNavSim 2.0

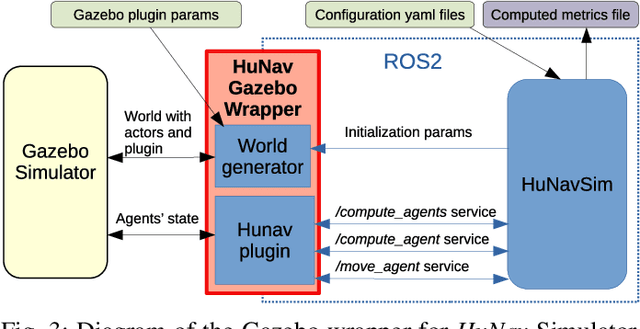

Jul 23, 2025

Abstract: This work presents a new iteration of the Human Navigation Simulator (HuNavSim), a novel open-source tool for the simulation of different human-agent navigation behaviors in scenarios with mobile robots. The tool, programmed under the ROS 2 framework, can be used together with different well-known robotics simulators such as Gazebo or NVIDIA Isaac Sim. The main goal is to facilitate the development and evaluation of human-aware robot navigation systems in simulation. In this new version, several features have been improved and new ones added, such as the extended set of actions and conditions that can be combined in Behavior Trees to compose complex and realistic human behaviors.

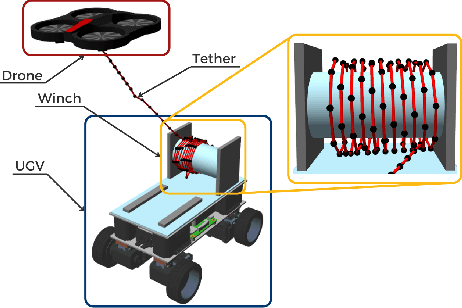

Long Duration Inspection of GNSS-Denied Environments with a Tethered UAV-UGV Marsupial System

May 29, 2025

Abstract: Unmanned Aerial Vehicles (UAVs) have become essential tools in inspection and emergency response operations due to their high maneuverability and ability to access hard-to-reach areas. However, their limited battery life significantly restricts their use in long-duration missions. This paper presents a novel tethered marsupial robotic system composed of a UAV and an Unmanned Ground Vehicle (UGV), specifically designed for autonomous, long-duration inspection tasks in Global Navigation Satellite System (GNSS)-denied environments. The system extends the UAV's operational time by supplying power through a tether connected to high-capacity battery packs carried by the UGV. We detail the hardware architecture based on off-the-shelf components to ensure replicability and describe our full-stack software framework, which is composed of open-source components and built upon the Robot Operating System (ROS). The proposed software architecture enables precise localization using a Direct LiDAR Localization (DLL) method and ensures safe path planning and coordinated trajectory tracking for the integrated UGV-tether-UAV system. We validate the system through three field experiments: (1) a manual flight endurance test to estimate the operational duration, (2) an autonomous navigation test, and (3) an inspection mission to demonstrate autonomous inspection capabilities. Experimental results confirm the robustness and autonomy of the system, its capacity to operate in GNSS-denied environments, and its potential for long-endurance, autonomous inspection and monitoring tasks.

4D Radar-Inertial Odometry based on Gaussian Modeling and Multi-Hypothesis Scan Matching

Dec 18, 2024

Abstract: 4D millimeter-wave (mmWave) radars are sensors that provide robustness against adverse weather conditions (rain, snow, fog, etc.), and as such they are increasingly being used for odometry and SLAM applications. However, the noisy and sparse nature of the returned scan data proves to be a challenging obstacle for existing point cloud matching based solutions, especially those originally intended for more accurate sensors such as LiDAR. Inspired by visual odometry research around 3D Gaussian Splatting, in this paper we propose using freely positioned 3D Gaussians to create a summarized representation of a radar point cloud tolerant to sensor noise, and subsequently leverage its inherent probability distribution function for registration (similar to NDT). Moreover, we propose simultaneously optimizing multiple scan matching hypotheses in order to further increase the robustness of the system against local optima of the function. Finally, we fuse our Gaussian modeling and scan matching algorithms into an EKF radar-inertial odometry system designed after current best practices. Experiments show that our Gaussian-based odometry is able to outperform current baselines on a well-known 4D radar dataset used for evaluation.

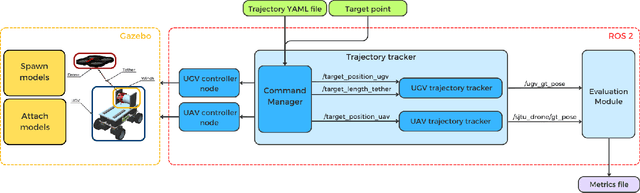

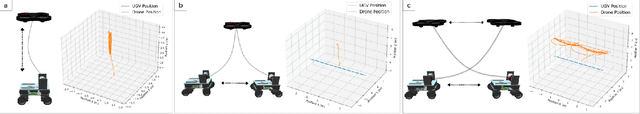

Physical simulation of Marsupial UAV-UGV Systems Connected by a Hanging Tether using Gazebo

Dec 17, 2024

Abstract: This paper presents a ROS 2-based simulator framework for tethered UAV-UGV marsupial systems in Gazebo. The framework models interactions among a UAV, a UGV, and a winch with dynamically adjustable length and slack of the tether. It supports both manual control and automated trajectory tracking, with the winch adjusting the length of the tether based on the relative distance between the robots. The simulator's performance is demonstrated through experiments, including comparisons with real-world data, showcasing its capability to simulate tethered robotic systems. The framework offers a flexible tool for researchers exploring tethered robot dynamics. The source code of the simulator is publicly available for the research community.
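The idea of a winch that sets the tether length from the relative distance between the robots can be pictured with the toy rule below. This is only an assumed policy for illustration (straight-line distance plus a fixed slack margin, clamped to the winch limits); the function name, the slack ratio and the limits are hypothetical and are not taken from the simulator.

```python
import math


def target_tether_length(uav_pos, ugv_pos, slack_ratio=0.05,
                         min_length=0.5, max_length=30.0):
    """Return a commanded tether length in metres.

    The length is the UAV-UGV distance plus a proportional slack margin,
    clamped to the (assumed) mechanical limits of the winch.
    """
    distance = math.dist(uav_pos, ugv_pos)
    desired = distance * (1.0 + slack_ratio)
    return max(min_length, min(max_length, desired))


# UAV hovering 10 m above the UGV: 10 m of distance plus 5 % slack.
hover_length = target_tether_length((0.0, 0.0, 10.0), (0.0, 0.0, 0.0))
```

A real hanging tether follows a catenary rather than a straight line, so a physical model would compute the length from the desired sag instead of a fixed ratio.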

Loosely coupled 4D-Radar-Inertial Odometry for Ground Robots

Nov 26, 2024

Abstract: Accurate robot odometry is essential for autonomous navigation. While numerous techniques have been developed based on various sensor suites, odometry estimation using only radar and IMU remains an underexplored area. Radar proves particularly valuable in environments where traditional sensors, like cameras or LiDAR, may struggle, especially in low-light conditions or when faced with environmental challenges like fog, rain or smoke. However, despite its robustness, radar data is noisier and more prone to outliers, requiring specialized processing approaches. In this paper, we propose a graph-based optimization approach using a sliding window for radar-based odometry, designed to maintain robust relationships between poses by forming a network of connections, while keeping computational costs fixed (especially beneficial in long trajectories). Additionally, we introduce an enhancement in the ego-velocity estimation specifically for ground vehicles, both holonomic and non-holonomic, which subsequently improves the direct odometry input required by the optimizer. Finally, we present a comparative study of our approach against existing algorithms, showing how our pure odometry approach improves the state of the art in most trajectories of the NTU4DRadLM dataset, achieving promising results when evaluating key performance metrics.
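For readers unfamiliar with radar ego-velocity estimation, the sketch below shows the standard least-squares baseline in the plane, which is a natural setting for a ground robot. It is not the enhancement proposed in the paper. It assumes every detection comes from a static target, so the measured Doppler velocity equals the negative projection of the sensor velocity onto the line of sight, and it omits the outlier rejection (for example RANSAC) that real pipelines need. All names are hypothetical.

```python
import math


def estimate_ego_velocity(detections):
    """Least-squares planar ego-velocity from radar Doppler measurements.

    detections: iterable of (azimuth_rad, doppler_mps) pairs, assumed to be
    static targets, so doppler_i = -(cos(az_i) * vx + sin(az_i) * vy).
    Returns (vx, vy) in the sensor frame.
    """
    sxx = sxy = syy = bx = by = 0.0
    for azimuth, doppler in detections:
        dx, dy = math.cos(azimuth), math.sin(azimuth)
        # Accumulate the 2x2 normal equations (A^T A) v = A^T b, with b = -doppler.
        sxx += dx * dx
        sxy += dx * dy
        syy += dy * dy
        bx -= dx * doppler
        by -= dy * doppler
    det = sxx * syy - sxy * sxy
    if abs(det) < 1e-9:
        raise ValueError("detections do not span enough directions")
    vx = (syy * bx - sxy * by) / det
    vy = (sxx * by - sxy * bx) / det
    return vx, vy


# Synthetic check: simulate Doppler readings for a known sensor velocity.
true_vx, true_vy = 1.2, -0.3
azimuths = [-0.8, -0.3, 0.1, 0.6, 1.0]
scan = [(az, -(math.cos(az) * true_vx + math.sin(az) * true_vy)) for az in azimuths]
vx, vy = estimate_ego_velocity(scan)
```

For a non-holonomic platform the lateral component could additionally be constrained, which is one way kinematic knowledge of the vehicle can enter the estimate.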

Adaptive Social Force Window Planner with Reinforcement Learning

Apr 21, 2024

Abstract: Human-aware navigation is a complex task for mobile robots, requiring an autonomous navigation system capable of achieving efficient path planning together with socially compliant behaviors. Social planners usually add costs or constraints to the objective function, leading to intricate tuning processes or tailoring the solution to the specific social scenario. Machine Learning can enhance planners' versatility and help them learn complex social behaviors from data. This work proposes an adaptive social planner, using a Deep Reinforcement Learning agent to dynamically adjust the weighting parameters of the cost function used to evaluate trajectories. The resulting planner combines the robustness of the classic Dynamic Window Approach, integrated with a social cost based on the Social Force Model, and the flexibility of learning methods to boost the overall performance on social navigation tasks. Our extensive experimentation on different environments demonstrates the general advantage of the proposed method over static cost planners.
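The effect of adjusting cost weights at run time can be seen in a small sketch of weighted trajectory scoring. The cost terms, their values and the weight vectors below are invented for illustration. In the approach described above the weights would come from a learned agent, whereas here they are hard-coded.

```python
from dataclasses import dataclass


@dataclass
class TrajectoryCosts:
    """Per-trajectory cost terms (hypothetical names, lower is better)."""
    goal: float      # remaining distance to the goal
    obstacle: float  # proximity to static obstacles
    social: float    # discomfort caused to nearby people


def score(costs, weights):
    """Weighted sum of the cost terms, with weights = (w_goal, w_obstacle, w_social)."""
    w_goal, w_obstacle, w_social = weights
    return w_goal * costs.goal + w_obstacle * costs.obstacle + w_social * costs.social


def select_best(candidates, weights):
    """Return the index of the candidate trajectory with the lowest score."""
    return min(range(len(candidates)), key=lambda i: score(candidates[i], weights))


candidates = [
    TrajectoryCosts(goal=1.0, obstacle=0.2, social=3.0),  # direct, but cuts close to people
    TrajectoryCosts(goal=2.0, obstacle=0.2, social=0.5),  # detour that keeps its distance
]
empty_corridor = select_best(candidates, weights=(1.0, 1.0, 0.1))
crowded_hall = select_best(candidates, weights=(1.0, 1.0, 1.0))
```

Raising the social weight flips the choice from the direct trajectory to the detour, which is the kind of scenario-dependent trade-off a fixed weight set cannot express.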

ars548_ros. An ARS 548 RDI radar driver for ROS2

Apr 06, 2024

Abstract: The ARS 548 RDI Radar is a premium model of the fifth generation of 77 GHz long-range radar sensors with new RF antenna arrays, which offer digital beamforming. This radar independently measures the distance, speed and angle of objects without any reflectors in one measurement cycle based on Pulse Compression with New Frequency Modulation [1]. Unfortunately, to the best of our knowledge, no drivers were available for Linux systems that would allow users to analyze the data acquired from this sensor. In this paper, we present a driver that is able to interpret the data from the ARS 548 RDI sensor and make it available in Robot Operating System version 2 (ROS2). Thus, this data can be stored, represented and analyzed by using the powerful tools offered by ROS2. In addition, our driver exposes advanced object features provided by the sensor, such as the relative estimated velocity and acceleration of each object, its orientation and its angular velocity. We focus on the configuration of the sensor and the use of our driver and advanced filtering and representation tools, offering a video tutorial for these purposes. Finally, a dataset acquired with this sensor and an Ouster OS1-32 LiDAR sensor for baseline purposes is available, so that the user can check the correctness of our driver.

FROG: A new people detection dataset for knee-high 2D range finders

Jun 14, 2023

Abstract: Mobile robots require knowledge of the environment, especially of humans located in their vicinity. While the most common approaches for detecting humans involve computer vision, an often overlooked hardware feature of robots for people detection is their 2D range finders. These were originally intended for obstacle avoidance and mapping/SLAM tasks. In most robots, they are conveniently located at a height approximately between the ankle and the knee, so they can be used for detecting people too, and with a larger field of view and depth resolution compared to cameras. In this paper, we present a new dataset for people detection using knee-high 2D range finders called FROG. This dataset has greater laser resolution, scanning frequency, and more complete annotation data compared to existing datasets such as DROW. Particularly, the FROG dataset contains annotations for 100% of its laser scans (unlike DROW which only annotates 5%), 17x more annotated scans, 100x more people annotations, and over twice the distance traveled by the robot. We propose a benchmark based on the FROG dataset, and analyze a collection of state-of-the-art people detectors based on 2D range finder data. We also propose and evaluate a new end-to-end deep learning approach for people detection. Our solution works with the raw sensor data directly (not needing hand-crafted input data features), thus avoiding CPU preprocessing and relieving the developer of the need to understand domain-specific heuristics. Experimental results show how the proposed people detector attains results comparable to the state of the art, while an optimized implementation for ROS can operate at more than 500 Hz.

HuNavSim: A ROS 2 Human Navigation Simulator for Benchmarking Human-Aware Robot Navigation

May 02, 2023

Abstract: This work presents the Human Navigation Simulator (HuNavSim), a novel open-source tool for the simulation of different human-agent navigation behaviors in scenarios with mobile robots. The tool, the first programmed under the ROS 2 framework, can be employed along with different well-known robotics simulators like Gazebo. The main goal is to ease the development and evaluation of human-aware robot navigation systems in simulation. Besides a general human-navigation model, HuNavSim includes, as a novelty, a rich set of individual and realistic human navigation behaviors and a complete set of metrics for social navigation benchmarking.
