Pascual Campoy

Computer Vision and Aerial Robotics group

Flocking behavior for dynamic and complex swarm structures

Jan 29, 2026

Abstract:Maintaining the formation of complex structures with multiple UAVs and achieving complex trajectories remains a major challenge. This work presents an algorithm for implementing the flocking behavior of UAVs based on the concept of Virtual Centroid to easily develop a structure for the flock. The approach builds on the classical virtual-based behavior, providing a theoretical framework for incorporating enhancements to dynamically control both the number of agents and the formation of the structure. Simulation tests and real-world experiments were conducted, demonstrating its simplicity even with complex formations and complex trajectories.
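As a rough illustration of the centroid-based idea, the sketch below places each agent at a fixed slot relative to a moving virtual centroid. It is not the paper's algorithm: the function names, the 2D simplification, and the circular slot layout are all assumptions made for this example.

```python
import math

def formation_targets(centroid, yaw, offsets):
    """Return world-frame (x, y) targets for every agent in the flock.

    centroid: (x, y) of the virtual centroid (hypothetical name).
    yaw: heading of the formation in radians.
    offsets: list of (dx, dy) slots expressed in the centroid frame.
    """
    c, s = math.cos(yaw), math.sin(yaw)
    # Rotate each slot by the formation heading, then translate by the centroid.
    return [(centroid[0] + c * dx - s * dy,
             centroid[1] + s * dx + c * dy) for dx, dy in offsets]

def polygon_offsets(n, radius):
    """Evenly spaced slots on a circle (an assumed layout, not from the paper)."""
    return [(radius * math.cos(2 * math.pi * k / n),
             radius * math.sin(2 * math.pi * k / n)) for k in range(n)]
```

Under these assumptions, changing the number of agents or the shape of the structure only means regenerating the `offsets` list; the centroid trajectory itself stays untouched.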

A Methodology for Designing Knowledge-Driven Missions for Robots

Jan 28, 2026

Abstract:This paper presents a comprehensive methodology for implementing knowledge graphs in ROS 2 systems, aiming to enhance the efficiency and intelligence of autonomous robotic missions. The methodology encompasses several key steps: defining initial and target conditions, structuring tasks and subtasks, planning their sequence, representing task-related data in a knowledge graph, and designing the mission using a high-level language. Each step builds on the previous one to ensure a cohesive process from initial setup to final execution. A practical implementation within the Aerostack2 framework is demonstrated through a simulated search and rescue mission in a Gazebo environment, where drones autonomously locate a target. This implementation highlights the effectiveness of the methodology in improving decision-making and mission performance by leveraging knowledge graphs.

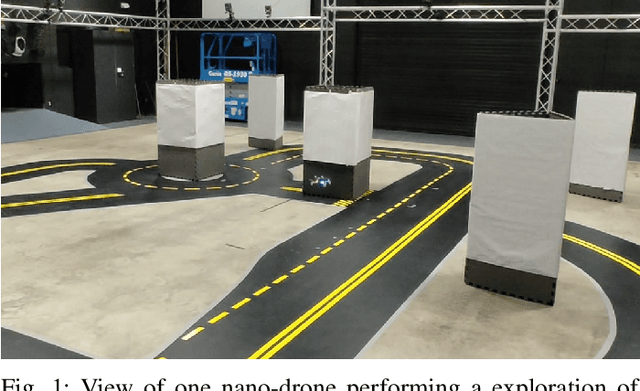

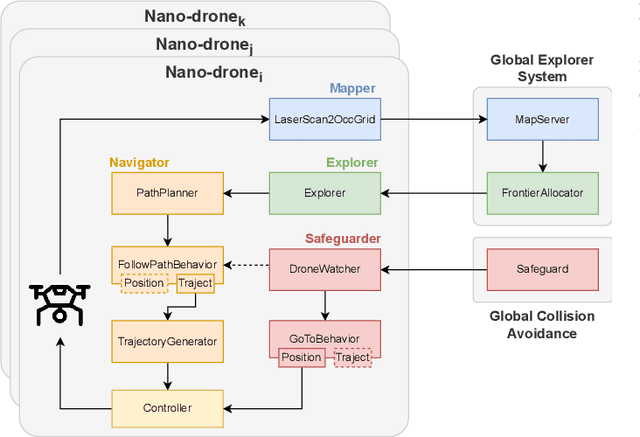

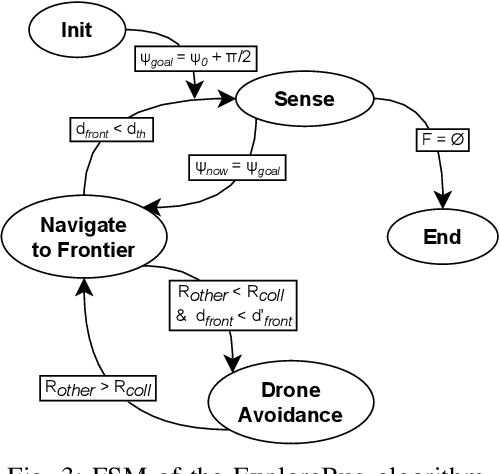

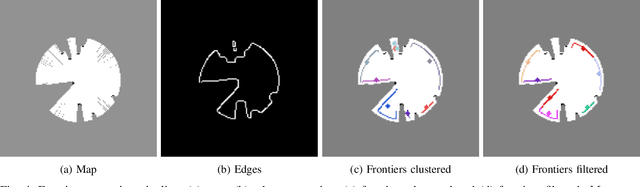

Exploring Unstructured Environments using Minimal Sensing on Cooperative Nano-Drones

Jul 09, 2024

Abstract:Recent advances have improved autonomous navigation and mapping under payload constraints, but current multi-robot inspection algorithms are unsuitable for nano-drones due to their need for heavy sensors and high computational resources. To address these challenges, we introduce ExploreBug, a novel hybrid frontier range bug algorithm designed to handle limited sensing capabilities for a swarm of nano-drones. This system includes three primary components: a mapping subsystem, an exploration subsystem, and a navigation subsystem. Additionally, an intra-swarm collision avoidance system is integrated to prevent collisions between drones. We validate the efficacy of our approach through extensive simulations and real-world exploration experiments involving up to seven drones in simulations and three in real-world settings, across various obstacle configurations and with a maximum navigation speed of 0.75 m/s. Our tests demonstrate that the algorithm efficiently completes exploration tasks, even with minimal sensing, across different swarm sizes and obstacle densities. Furthermore, our frontier allocation heuristic ensures an equal distribution of explored areas and paths traveled by each drone in the swarm. We publicly release the source code of the proposed system to foster further developments in mapping and exploration using autonomous nano drones.
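For readers unfamiliar with frontier-based exploration, the sketch below shows the generic notion of a frontier on an occupancy grid: a free cell that borders unknown space. It is a textbook illustration only, not the ExploreBug implementation; the cell encoding and the function name are assumptions.

```python
# Assumed cell encoding for this example only.
FREE, OCCUPIED, UNKNOWN = 0, 1, -1

def frontier_cells(grid):
    """Return (row, col) of free cells with at least one unknown 4-neighbor."""
    rows, cols = len(grid), len(grid[0])
    frontiers = []
    for r in range(rows):
        for c in range(cols):
            if grid[r][c] != FREE:
                continue
            for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                nr, nc = r + dr, c + dc
                if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == UNKNOWN:
                    frontiers.append((r, c))
                    break  # one unknown neighbor is enough
    return frontiers
```

An exploration subsystem would then assign these cells to drones; how ExploreBug allocates them is described in the paper, not here.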

The landscape of Collective Awareness in multi-robot systems

Jan 17, 2024

Abstract:The development of collective-aware multi-robot systems is crucial for enhancing the efficiency and robustness of robotic applications in multiple fields. These systems enable collaboration, coordination, and resource sharing among robots, leading to improved scalability, adaptability to dynamic environments, and increased overall system robustness. In this work, we provide a brief overview of this research topic and identify open challenges.

Multi S-Graphs: an Efficient Real-time Distributed Semantic-Relational Collaborative SLAM

Jan 10, 2024

Abstract:Collaborative Simultaneous Localization and Mapping (CSLAM) is critical to enable multiple robots to operate in complex environments. Most CSLAM techniques rely on raw sensor measurements or low-level features such as keyframe descriptors, which can lead to wrong loop closures due to the lack of a deep understanding of the environment. Moreover, the exchange of these measurements and low-level features among the robots requires the transmission of a significant amount of data, which limits the scalability of the system. To overcome these limitations, we present Multi S-Graphs, a decentralized CSLAM system that utilizes high-level semantic-relational information embedded in the four-layered hierarchical and optimizable situational graphs for cooperative map generation and localization while minimizing the information exchanged between the robots. To support this, we present a novel room-based descriptor which, along with its connected walls, is used to perform inter-robot loop closures, addressing the challenges of multi-robot kidnapped problem initialization. Multiple experiments in simulated and real environments validate the improvement in accuracy and robustness of the proposed approach while reducing the amount of data exchanged between robots compared to other state-of-the-art approaches. Software available within a docker image: https://github.com/snt-arg/multi_s_graphs_docker

Local Gaussian Modifiers (LGMs): UAV dynamic trajectory generation for onboard computation

May 05, 2023

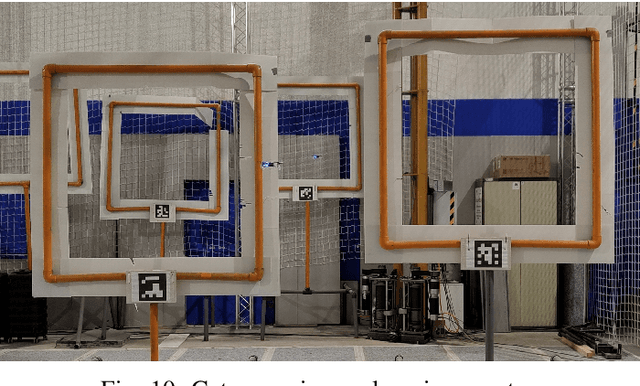

Abstract:Agile autonomous drones are becoming increasingly popular in research due to the challenges they represent in fields like control, state estimation, or perception at high speeds. When all algorithms are computed onboard the UAV, the computational limitations make the task of agile and robust flight even more difficult. One of the most computationally expensive tasks in agile flight is the generation of optimal trajectories, which tackles the problem of planning a minimum-time trajectory for a quadrotor over a sequence of specified waypoints. When these trajectories must be updated online due to changes in the environment or uncertainties, this high computational cost can cause the drone to miss the desired waypoints or even crash in cluttered environments. In this paper, a fast, lightweight dynamic trajectory modification approach is presented that modifies computationally heavy trajectories using Local Gaussian Modifiers (LGMs) when recalculating a trajectory is not possible due to the computation time. Our approach was validated in simulation, passing through a race circuit with dynamic gates at top speeds of up to 16.0 m/s, and was also validated in real flight, reaching speeds of up to 4.0 m/s with fully autonomous onboard computation.
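The abstract does not give the exact form of a Local Gaussian Modifier, so the sketch below is only one plausible reading: a Gaussian-shaped offset centered at the time a waypoint is reached, added on top of a precomputed reference trajectory. The function names, the tuple layout of a modifier, and the choice of a time-domain Gaussian are all assumptions.

```python
import math

def gaussian_modifier(t, t0, delta, sigma):
    """Offset vector at time t: peaks at `delta` when t == t0, decays with sigma."""
    w = math.exp(-0.5 * ((t - t0) / sigma) ** 2)
    return tuple(d * w for d in delta)

def modified_position(reference, t, modifiers):
    """Evaluate the reference trajectory and add every local modifier.

    reference: callable t -> position tuple (the expensive, precomputed plan).
    modifiers: list of (t0, delta, sigma) tuples (hypothetical layout).
    """
    p = reference(t)
    for t0, delta, sigma in modifiers:
        off = gaussian_modifier(t, t0, delta, sigma)
        p = tuple(pi + oi for pi, oi in zip(p, off))
    return p
```

Under these assumptions, shifting a gate by `delta` costs one exponential per query instead of a full re-optimization, and the reference plan is left essentially unchanged far from `t0`.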

Multi S-graphs: A Collaborative Semantic SLAM architecture

May 05, 2023

Abstract:Collaborative Simultaneous Localization and Mapping (CSLAM) is a critical capability for enabling multiple robots to operate in complex environments. Most CSLAM techniques rely on the transmission of low-level features for visual and LiDAR-based approaches, which are used for pose graph optimization. However, these low-level features can lead to incorrect loop closures, negatively impacting map generation. Recent approaches have proposed the use of high-level semantic information in the form of Hierarchical Semantic Graphs to improve the loop closure procedures and overall precision of SLAM algorithms. In this work, we present Multi S-Graphs, an S-graphs [1] based distributed CSLAM algorithm that utilizes high-level semantic information for cooperative map generation while minimizing the amount of information exchanged between robots. Experimental results demonstrate the promising performance of the proposed algorithm in map generation tasks.

Aerostack2: A Software Framework for Developing Multi-robot Aerial Systems

Mar 31, 2023

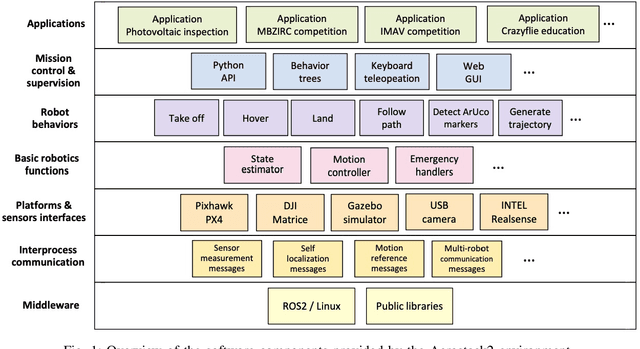

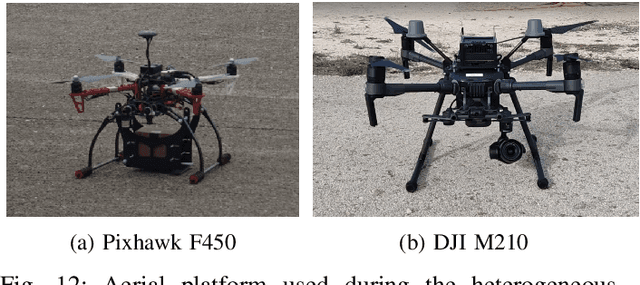

Abstract:In recent years, the robotics community has witnessed the development of several software stacks for ground and articulated robots, such as Navigation2 and MoveIt. However, the same level of collaboration and standardization is yet to be achieved in the field of aerial robotics, where each research group has developed their own frameworks. This work presents Aerostack2, a framework for the development of autonomous aerial robotics systems that aims to address the lack of standardization and fragmentation of efforts in the field. Built on ROS 2 middleware and featuring an efficient modular software architecture and multi-robot orientation, Aerostack2 is a versatile and platform-independent environment that covers a wide range of robot capabilities for autonomous operation. Its major contributions include providing a logical level for specifying missions, reusing components and sub-systems for aerial robotics, and enabling the development of complete control architectures. All major contributions have been tested in simulation and real flights with multiple heterogeneous swarms. Aerostack2 is open source and community oriented, democratizing the access to its technology by autonomous drone systems developers.

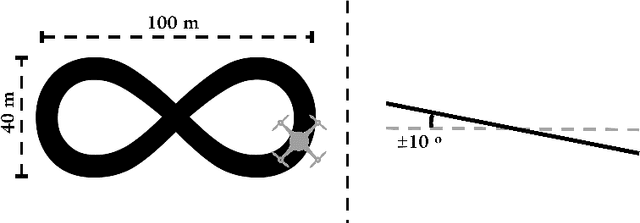

Autonomous Aerial Robot for High-Speed Search and Intercept Applications

Dec 10, 2021

Abstract:In recent years, high-speed navigation and environment interaction in the context of aerial robotics has become a field of interest for several academic and industrial research studies. In particular, Search and Intercept (SaI) applications for aerial robots pose a compelling research area due to their potential usability in several environments. Nevertheless, SaI tasks involve a challenging development regarding sensory weight, on-board computation resources, actuation design and algorithms for perception and control, among others. In this work, a fully autonomous aerial robot for high-speed object grasping is proposed. As an additional sub-task, our system is able to autonomously pierce balloons mounted on poles close to the surface. Our first contribution is the design of the aerial robot at an actuation and sensory level, consisting of a novel gripper design with additional sensors that enable the robot to grasp objects at high speeds. The second contribution is a complete software framework consisting of perception, state estimation, motion planning, motion control and mission control in order to perform the autonomous grasping mission rapidly and robustly. Our approach has been validated in a challenging international competition and has shown outstanding results, being able to autonomously search, follow and grasp a moving object at 6 m/s in an outdoor environment.

Skyeye Team at MBZIRC 2020: A team of aerial and ground robots for GPS-denied autonomous fire extinguishing in an urban building scenario

Apr 05, 2021

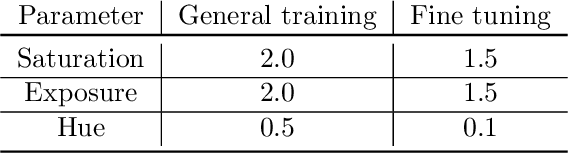

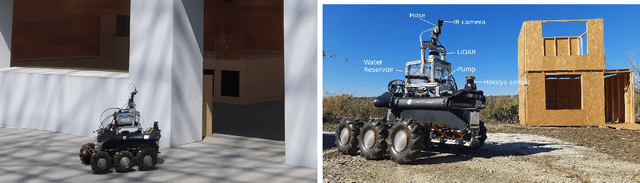

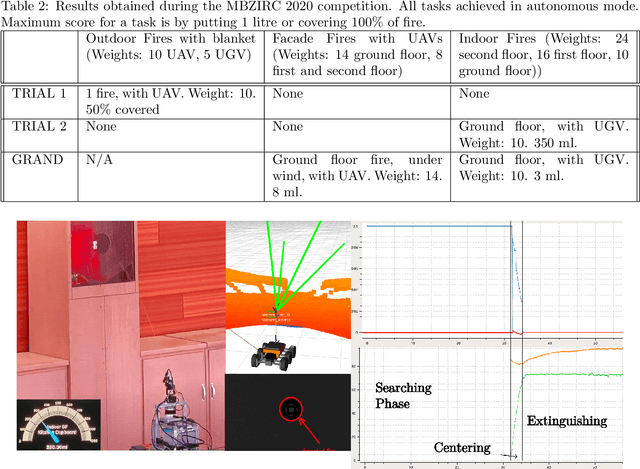

Abstract:The paper presents a framework for fire extinguishing in an urban scenario by a team of aerial and ground robots. The system was developed for Challenge 3 of the 2020 Mohamed Bin Zayed International Robotics Challenge (MBZIRC). The challenge required autonomously detecting, locating and extinguishing fires on different floors of a building, as well as in the surroundings. The multi-robot system developed consists of a heterogeneous robot team of up to three Unmanned Aerial Vehicles (UAVs) and one Unmanned Ground Vehicle (UGV). The paper describes the main hardware and software components for the UAV and UGV platforms. It also presents the main algorithmic components of the system: a 3D LIDAR-based mapping and localization module able to work in GPS-denied scenarios; a global planner and a fast local re-planning system for robot navigation; infrared-based perception and robot actuation control for fire extinguishing; and a mission executive and coordination module based on Behavior Trees. The paper finally describes the results obtained during the competition, where the system worked fully autonomously and scored in all the trials performed. The system contributed to the third place achieved by the Skyeye team in the Grand Challenge of MBZIRC 2020.
