Alvika Gautam

Online Planning for Cooperative Air-Ground Robot Systems with Unknown Fuel Requirements

Jun 25, 2025

Abstract:We consider an online variant of the fuel-constrained UAV routing problem with a ground-based mobile refueling station (FCURP-MRS), where targets incur unknown fuel costs. We develop a two-phase solution: an offline heuristic-based planner computes initial UAV and UGV paths, and a novel online planning algorithm dynamically adjusts rendezvous points based on real-time fuel consumption during target processing. Preliminary Gazebo simulations demonstrate the feasibility of our approach in maintaining UAV-UGV path validity and ensuring mission completion. Link to video: https://youtu.be/EmpVj-fjqNY
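To make the idea of adjusting a rendezvous point from observed fuel use concrete, here is a minimal sketch. It is not the algorithm from the paper: the function `replan_rendezvous`, the straight-line distance model, the constant `burn_per_m` fuel rate, and the rule of picking the reachable waypoint closest in order to the planned one are all illustrative assumptions, and UGV timing is ignored.

```python
import math


def dist(a, b):
    """Euclidean distance between two 2D points."""
    return math.hypot(a[0] - b[0], a[1] - b[1])


def replan_rendezvous(uav_pos, fuel_left, ugv_path, planned_idx, burn_per_m):
    """Return the index of a UGV waypoint the UAV can still reach.

    Hypothetical rule: keep the planned rendezvous if it is reachable with
    the remaining fuel; otherwise choose the reachable waypoint whose index
    is closest to the planned one. Returns None if nothing is reachable.
    """
    reachable = fuel_left / burn_per_m  # maximum flight distance left
    if dist(uav_pos, ugv_path[planned_idx]) <= reachable:
        return planned_idx
    candidates = [
        i for i, wp in enumerate(ugv_path) if dist(uav_pos, wp) <= reachable
    ]
    if not candidates:
        return None
    return min(candidates, key=lambda i: abs(i - planned_idx))


# Illustrative values only: a UGV path along the x-axis and a UAV that has
# just finished processing a target at (10, 5).
ugv_path = [(0, 0), (10, 0), (20, 0), (30, 0)]
print(replan_rendezvous((10, 5), 30.0, ugv_path, 3, 1.0))
print(replan_rendezvous((10, 5), 12.0, ugv_path, 3, 1.0))
```

With ample fuel the planned waypoint is kept; when processing a target burns more than expected, the rendezvous moves to an earlier waypoint on the UGV path.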

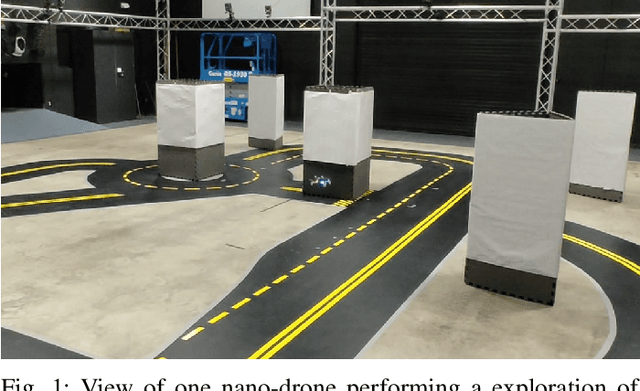

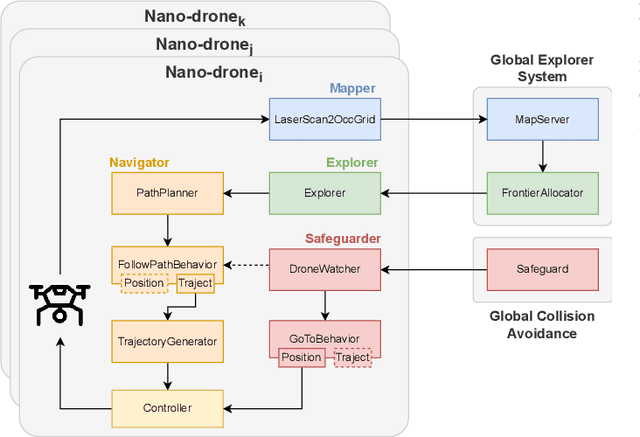

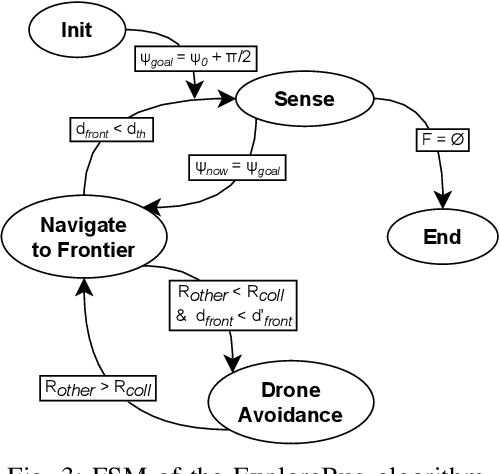

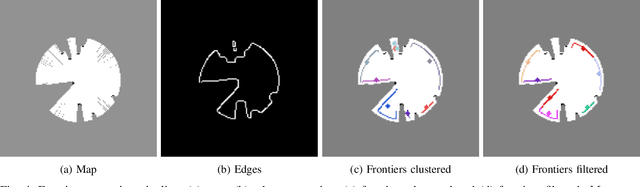

Exploring Unstructured Environments using Minimal Sensing on Cooperative Nano-Drones

Jul 09, 2024

Abstract:Recent advances have improved autonomous navigation and mapping under payload constraints, but current multi-robot inspection algorithms are unsuitable for nano-drones because they require heavy sensors and substantial computational resources. To address these challenges, we introduce ExploreBug, a novel hybrid frontier range bug algorithm designed to handle limited sensing capabilities for a swarm of nano-drones. The system includes three primary components: a mapping subsystem, an exploration subsystem, and a navigation subsystem. Additionally, an intra-swarm collision avoidance system is integrated to prevent collisions between drones. We validate the efficacy of our approach through extensive simulations and real-world exploration experiments involving up to seven drones in simulations and three in real-world settings, across various obstacle configurations and with a maximum navigation speed of 0.75 m/s. Our tests demonstrate that the algorithm efficiently completes exploration tasks, even with minimal sensing, across different swarm sizes and obstacle densities. Furthermore, our frontier allocation heuristic ensures an equal distribution of explored areas and paths traveled by each drone in the swarm. We publicly release the source code of the proposed system to foster further developments in mapping and exploration using autonomous nano-drones.
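For readers unfamiliar with frontier-based exploration, the sketch below shows the standard notion of a frontier on an occupancy grid: a free cell that borders at least one unknown cell. It is a generic illustration, not ExploreBug's implementation; the cell encoding (`FREE`, `OCCUPIED`, `UNKNOWN`), the 4-connected neighborhood, and the function name `find_frontiers` are assumptions.

```python
# Assumed cell encoding for this illustration only.
FREE, OCCUPIED, UNKNOWN = 0, 1, -1


def find_frontiers(grid):
    """Return (row, col) of every free cell adjacent to an unknown cell."""
    rows, cols = len(grid), len(grid[0])
    frontiers = []
    for r in range(rows):
        for c in range(cols):
            if grid[r][c] != FREE:
                continue
            # Check the 4-connected neighbors for unexplored space.
            for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                nr, nc = r + dr, c + dc
                if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == UNKNOWN:
                    frontiers.append((r, c))
                    break
    return frontiers


grid = [
    [FREE, FREE, UNKNOWN],
    [FREE, OCCUPIED, UNKNOWN],
    [FREE, FREE, FREE],
]
print(find_frontiers(grid))  # [(0, 1), (2, 2)]
```

An allocation step would then assign these frontier cells to individual drones; the heuristic the abstract mentions for doing so is not described here and is not reproduced.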

GPT-4V Takes the Wheel: Evaluating Promise and Challenges for Pedestrian Behavior Prediction

Nov 24, 2023

Abstract:Existing pedestrian behavior prediction methods rely primarily on deep neural networks that utilize features extracted from video frame sequences. Although these vision-based models have shown promising results, they face limitations in effectively capturing and utilizing the dynamic spatio-temporal interactions between the target pedestrian and its surrounding traffic elements, which are crucial for accurate reasoning. Additionally, training these models requires manually annotating domain-specific datasets, a process that is expensive, time-consuming, and difficult to generalize to new environments and scenarios. The recent emergence of Large Multimodal Models (LMMs) offers potential solutions to these limitations due to their superior visual understanding and causal reasoning capabilities, which can be harnessed through semi-supervised training. GPT-4V(ision), the latest iteration of the state-of-the-art GPT series of large language models, now incorporates vision input capabilities. This report provides a comprehensive evaluation of the potential of GPT-4V for pedestrian behavior prediction in autonomous driving using publicly available datasets: JAAD, PIE, and WiDEVIEW. Quantitative and qualitative evaluations demonstrate GPT-4V(ision)'s promise in zero-shot pedestrian behavior prediction and driving scene understanding for autonomous driving. However, it still falls short of the state-of-the-art traditional domain-specific models. Challenges include difficulties in handling small pedestrians and vehicles in motion. These limitations highlight the need for further research and development in this area.

WiDEVIEW: An UltraWideBand and Vision Dataset for Deciphering Pedestrian-Vehicle Interactions

Sep 27, 2023

Abstract:Robust and accurate tracking and localization of road users like pedestrians and cyclists is crucial to ensure safe and effective navigation of Autonomous Vehicles (AVs), particularly in urban driving scenarios with complex vehicle-pedestrian interactions. Existing datasets that are useful to investigate vehicle-pedestrian interactions are mostly image-centric and thus vulnerable to vision failures. In this paper, we investigate Ultra-wideband (UWB) as an additional modality for road users' localization to enable a better understanding of vehicle-pedestrian interactions. We present WiDEVIEW, the first multimodal dataset that integrates LiDAR, three RGB cameras, GPS/IMU, and UWB sensors for capturing vehicle-pedestrian interactions in an urban autonomous driving scenario. Ground truth image annotations are provided in the form of 2D bounding boxes and the dataset is evaluated on standard 2D object detection and tracking algorithms. The feasibility of UWB is evaluated for typical traffic scenarios in both line-of-sight and non-line-of-sight conditions using LiDAR as ground truth. We establish that UWB range data has accuracy comparable to LiDAR, with an error of 0.19 meters, and provides reliable anchor-tag range data for up to 40 meters in line-of-sight conditions. UWB performance for non-line-of-sight conditions depends on the nature of the obstruction (trees vs. buildings). Further, we provide a qualitative analysis of UWB performance for scenarios susceptible to intermittent vision failures. The dataset can be downloaded via https://github.com/unmannedlab/UWB_Dataset.
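As a rough illustration of how a range error against LiDAR ground truth can be computed, the sketch below reports mean absolute error and root-mean-square error for paired range samples. The abstract does not say which error metric underlies the 0.19 m figure, so both metrics, the function name `range_errors`, and the sample values are assumptions for illustration and are not drawn from the dataset.

```python
import math


def range_errors(uwb_ranges, lidar_ranges):
    """Return (MAE, RMSE) in meters for paired UWB and LiDAR ranges."""
    if len(uwb_ranges) != len(lidar_ranges) or not uwb_ranges:
        raise ValueError("inputs must be non-empty and of equal length")
    diffs = [u - l for u, l in zip(uwb_ranges, lidar_ranges)]
    mae = sum(abs(d) for d in diffs) / len(diffs)
    rmse = math.sqrt(sum(d * d for d in diffs) / len(diffs))
    return mae, rmse


# Made-up sample values, not measurements from WiDEVIEW.
uwb = [5.1, 12.4, 20.3, 31.0]
lidar = [5.0, 12.1, 20.5, 30.8]
mae, rmse = range_errors(uwb, lidar)
print(f"MAE = {mae:.2f} m, RMSE = {rmse:.2f} m")
```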

Learning Pedestrian Actions to Ensure Safe Autonomous Driving

May 22, 2023

Abstract:To ensure safe autonomous driving in urban environments with complex vehicle-pedestrian interactions, it is critical for Autonomous Vehicles (AVs) to be able to predict pedestrians' short-term and immediate actions in real time. In recent years, various methods have been developed to estimate pedestrian behaviors in autonomous driving scenarios, but there is a lack of clear definitions for pedestrian behaviors. In this work, the literature gaps are investigated and a taxonomy is presented for pedestrian behavior characterization. Further, a novel multi-task sequence-to-sequence Transformer encoder-decoder (TF-ed) architecture is proposed for pedestrian action and trajectory prediction using only ego vehicle camera observations as inputs. The proposed approach is compared against an existing LSTM encoder-decoder (LSTM-ed) architecture for action and trajectory prediction. The performance of both models is evaluated on the publicly available Joint Attention Autonomous Driving (JAAD) dataset, CARLA simulation data, and real-time self-driving shuttle data collected on a university campus. Evaluation results show that the proposed method reaches an accuracy of 81% on the action prediction task on JAAD testing data and outperforms the LSTM-ed by 7.4%, while the LSTM counterpart performs much better on the trajectory prediction task for a prediction sequence length of 25 frames.

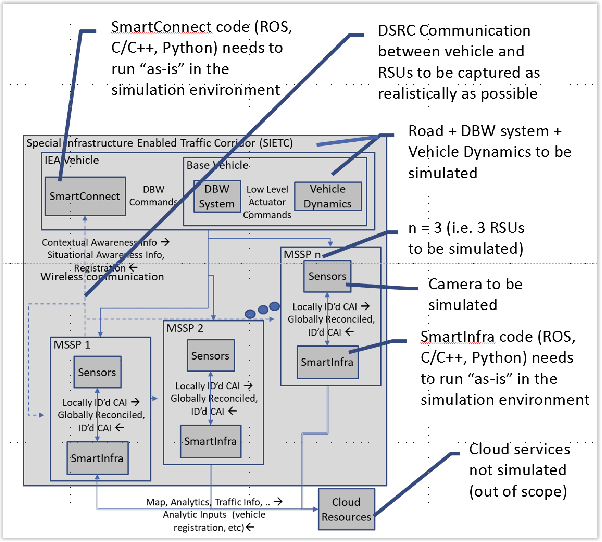

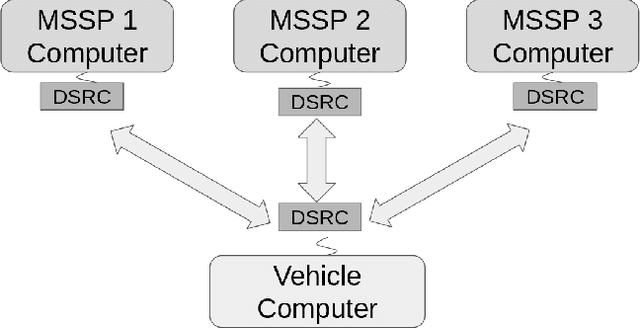

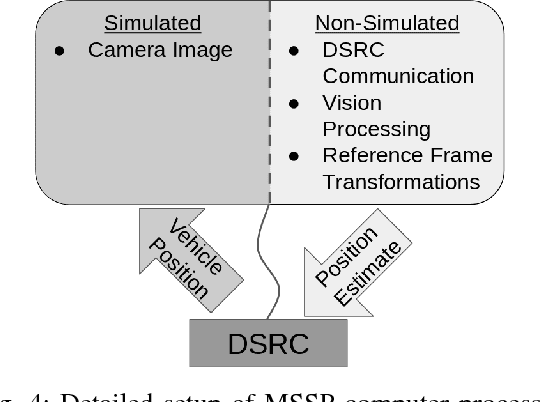

A Distributed Hybrid Hardware-In-the-Loop Simulation framework for Infrastructure Enabled Autonomy

Feb 06, 2018

Abstract:Infrastructure Enabled Autonomy (IEA) is a new paradigm that employs a distributed intelligence architecture for connected autonomous vehicles by offloading core functionalities to the infrastructure. In this paper, we develop a simulation framework that can be used to study the concept. A key challenge for such a simulation is the rapid increase in the scale of the computations with the size of the infrastructure to be considered. Our simulation framework is designed to be distributed and scales proportionally with the infrastructure. By integrally using both the hardware controllers and communication devices as part of the simulation framework, we achieve an optimal balance between modeling of the dynamics and sensors, and reusing real hardware for simulation of proprietary or complex communication methods. Multiple cameras on the infrastructure are simulated. The simulation of the camera image processing is done in distributed hardware and the resultant position information is transmitted wirelessly to the computer simulating the autonomous vehicle. We demonstrate closed loop control of a single vehicle following given waypoints using information from multiple cameras located on Road-Side-Units.
