Prabhat

Learning from learning machines: a new generation of AI technology to meet the needs of science

Nov 27, 2021

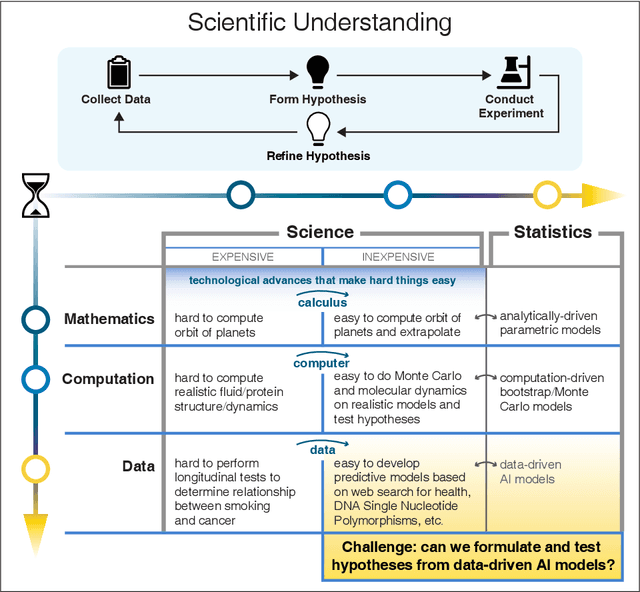

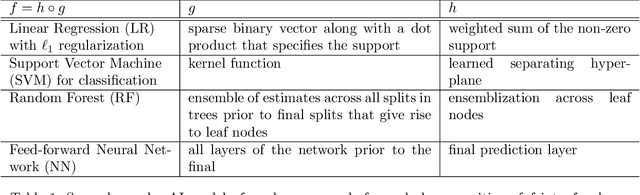

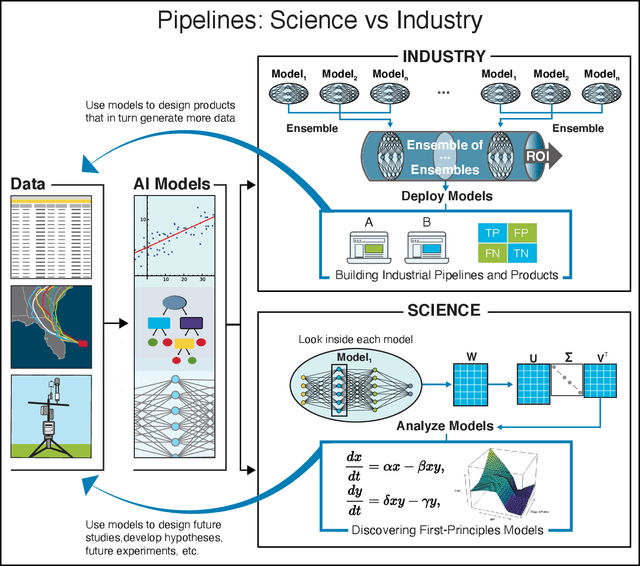

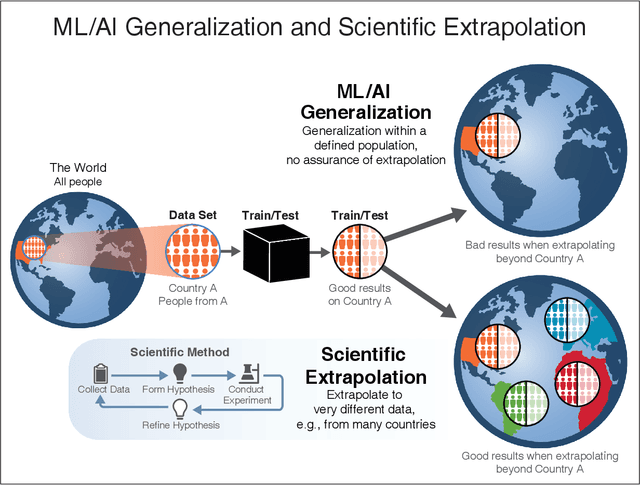

Abstract: We outline emerging opportunities and challenges to enhance the utility of AI for scientific discovery. The distinct goals of AI for industry versus the goals of AI for science create tension between identifying patterns in data and discovering patterns in the world from data. If we address the fundamental challenges associated with "bridging the gap" between domain-driven scientific models and data-driven AI learning machines, then we expect that these AI models can transform hypothesis generation, scientific discovery, and the scientific process itself.

Track Seeding and Labelling with Embedded-space Graph Neural Networks

Jun 30, 2020

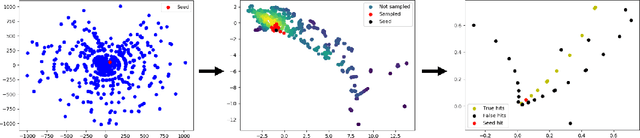

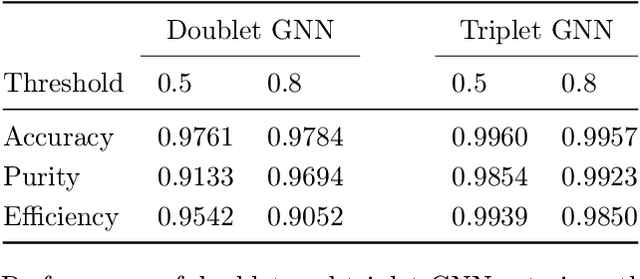

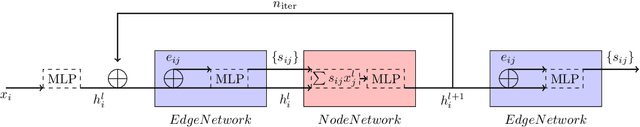

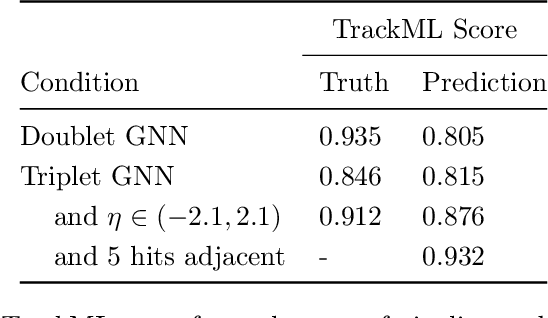

Abstract: To address the unprecedented scale of HL-LHC data, the Exa.TrkX project is investigating a variety of machine learning approaches to particle track reconstruction. The most promising of these solutions, graph neural networks (GNNs), process the event as a graph that connects track measurements (detector hits corresponding to nodes) with candidate line segments between the hits (corresponding to edges). Detector information can be associated with nodes and edges, enabling a GNN to propagate the embedded parameters around the graph and predict node-, edge- and graph-level observables. Previously, message-passing GNNs have shown success in predicting doublet likelihood, and here we report updates on the state-of-the-art architectures for this task. In addition, the Exa.TrkX project has investigated innovations in both graph construction and embedded representations, in an effort to achieve fully learned end-to-end track finding. Hence, we present a suite of extensions to the original model, with encouraging results for hitgraph classification. We also explore increased performance by constructing graphs from learned representations that contain non-linear metric structure, allowing for efficient clustering and neighborhood queries of data points. We demonstrate how this framework fits in with both traditional clustering pipelines and GNN approaches. The embedded graphs feed into high-accuracy doublet and triplet classifiers, or can be used as an end-to-end track classifier by clustering in an embedded space. A set of post-processing methods improves performance with knowledge of the detector physics. Finally, we present numerical results on the TrackML particle tracking challenge dataset, where our framework shows favorable results in both seeding and track finding.

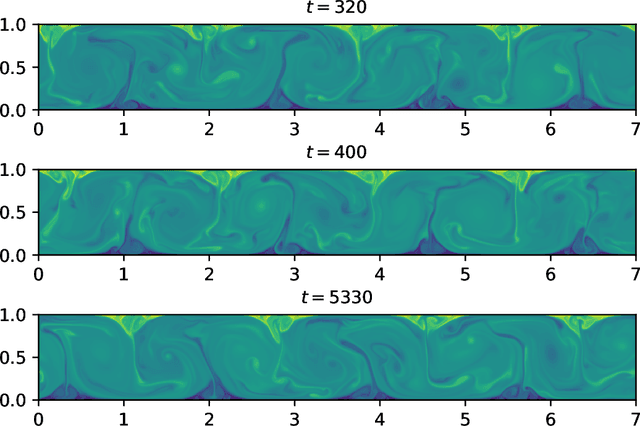

MeshfreeFlowNet: A Physics-Constrained Deep Continuous Space-Time Super-Resolution Framework

May 01, 2020

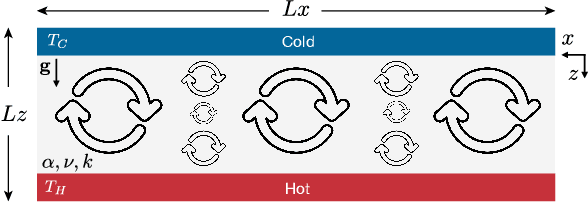

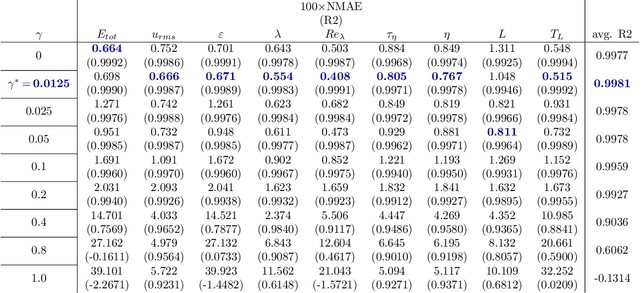

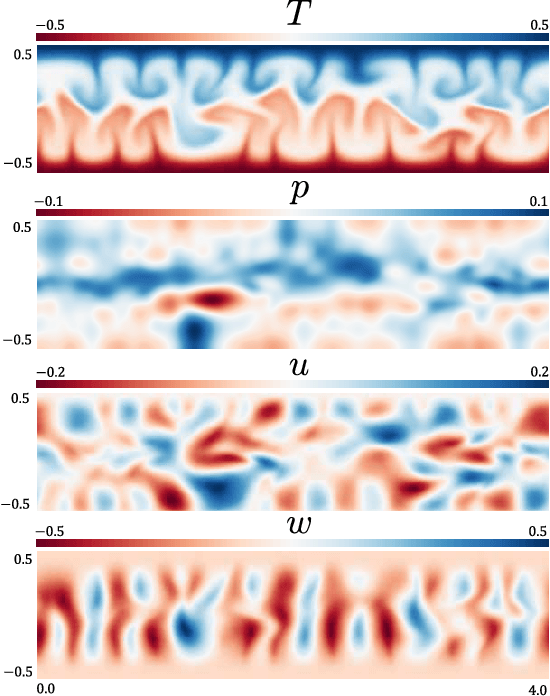

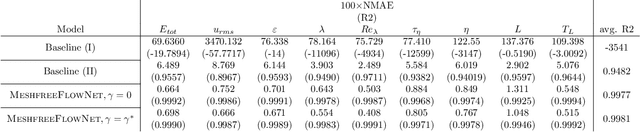

Abstract: We propose MeshfreeFlowNet, a novel deep learning-based super-resolution framework to generate continuous (grid-free) spatio-temporal solutions from low-resolution inputs. While being computationally efficient, MeshfreeFlowNet accurately recovers the fine-scale quantities of interest. MeshfreeFlowNet allows for: (i) the output to be sampled at all spatio-temporal resolutions, (ii) a set of Partial Differential Equation (PDE) constraints to be imposed, and (iii) training on fixed-size inputs on arbitrarily sized spatio-temporal domains owing to its fully convolutional encoder. We empirically study the performance of MeshfreeFlowNet on the task of super-resolution of turbulent flows in the Rayleigh-Bénard convection problem. Across a diverse set of evaluation metrics, we show that MeshfreeFlowNet significantly outperforms existing baselines. Furthermore, we provide a large-scale implementation of MeshfreeFlowNet and show that it efficiently scales across large clusters, achieving 96.80% scaling efficiency on up to 128 GPUs and a training time of less than 4 minutes.

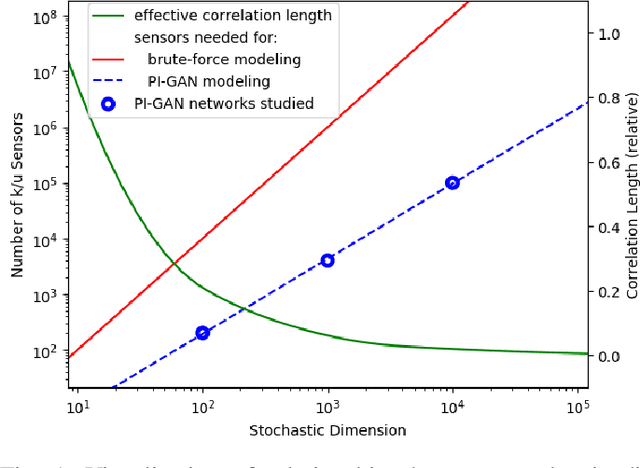

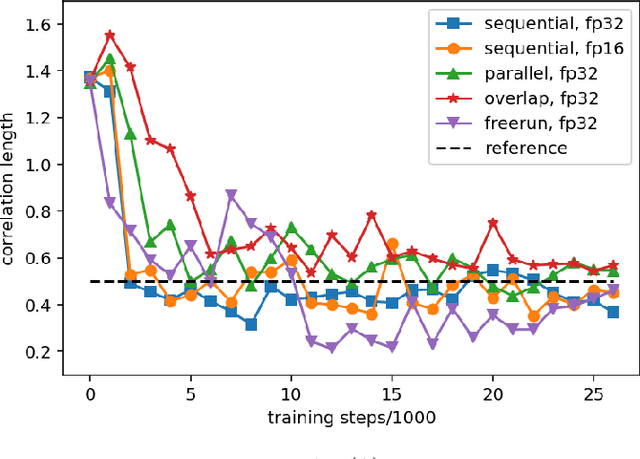

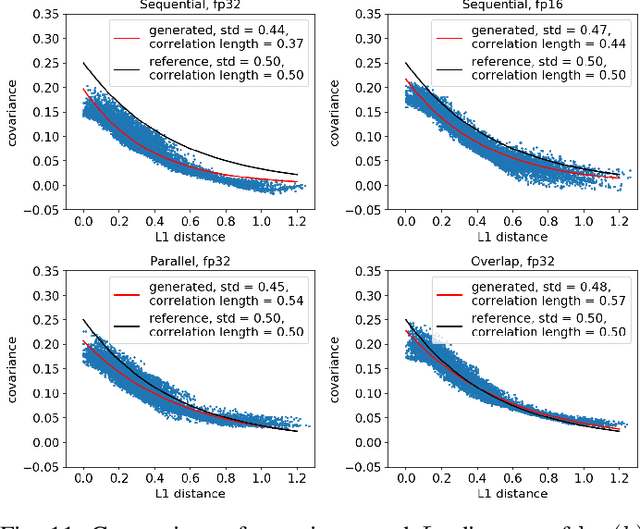

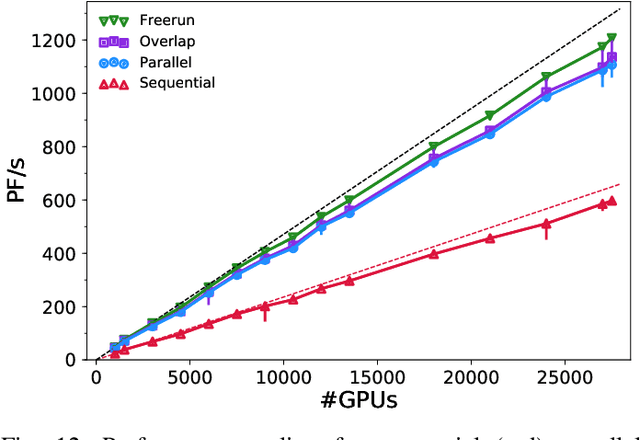

Highly-scalable, physics-informed GANs for learning solutions of stochastic PDEs

Oct 29, 2019

Abstract: Uncertainty quantification for forward and inverse problems is a central challenge across physical and biomedical disciplines. We address this challenge for the problem of modeling subsurface flow at the Hanford Site by combining stochastic computational models with observational data using physics-informed GAN models. The geographic extent, spatial heterogeneity, and multiple correlation length scales of the Hanford Site require training a computationally intensive GAN model to thousands of dimensions. We develop a hierarchical scheme for exploiting domain parallelism, map discriminators and generators to multiple GPUs, and employ efficient communication schemes to ensure training stability and convergence. Our highly optimized implementation of this scheme scales to 27,500 NVIDIA Volta GPUs and 4,584 nodes on the Summit supercomputer with a 93.1% scaling efficiency, achieving peak and sustained half-precision rates of 1228 PF/s and 1207 PF/s.

DisCo: Physics-Based Unsupervised Discovery of Coherent Structures in Spatiotemporal Systems

Sep 25, 2019

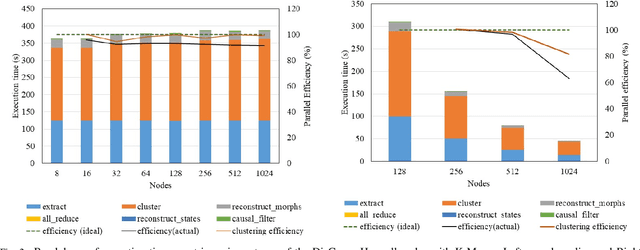

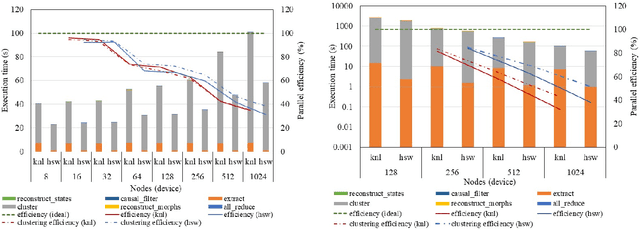

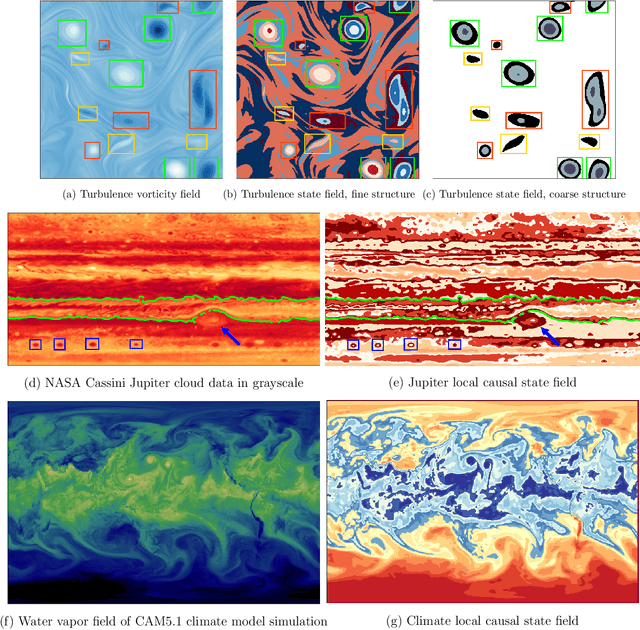

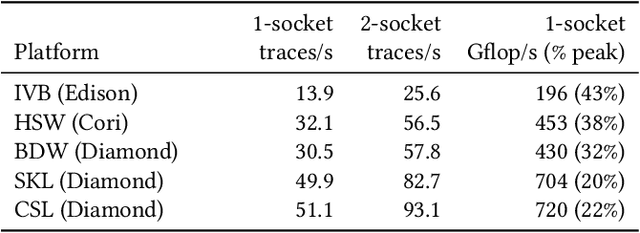

Abstract: Extracting actionable insight from complex unlabeled scientific data is an open challenge and key to unlocking data-driven discovery in science. Complementary and alternative to supervised machine learning approaches, unsupervised physics-based methods based on behavior-driven theories hold great promise. Due to computational limitations, practical application on real-world domain science problems has lagged far behind theoretical development. We present our first step towards bridging this divide: DisCo, a high-performance distributed workflow for the behavior-driven local causal state theory. DisCo provides a scalable unsupervised physics-based representation learning method that decomposes spatiotemporal systems into their structurally relevant components, which are captured by the latent local causal state variables. Complex spatiotemporal systems are generally highly structured and organize around a lower-dimensional skeleton of coherent structures, and in several firsts we demonstrate the efficacy of DisCo in capturing such structures from observational and simulated scientific data. To the best of our knowledge, DisCo is also the first application software developed entirely in Python to scale to over 1000 machine nodes, providing good performance while preserving domain scientists' productivity. We developed scalable, performant methods optimized for Intel many-core processors that will be upstreamed to open-source Python library packages. Our capstone experiment, using the newly developed DisCo workflow and libraries, performs unsupervised spacetime segmentation analysis of CAM5.1 climate simulation data, processing an unprecedented 89.5 TB in 6.6 minutes end-to-end using 1024 Intel Haswell nodes on the Cori supercomputer, obtaining 91% weak-scaling and 64% strong-scaling efficiency.

Towards Unsupervised Segmentation of Extreme Weather Events

Sep 16, 2019

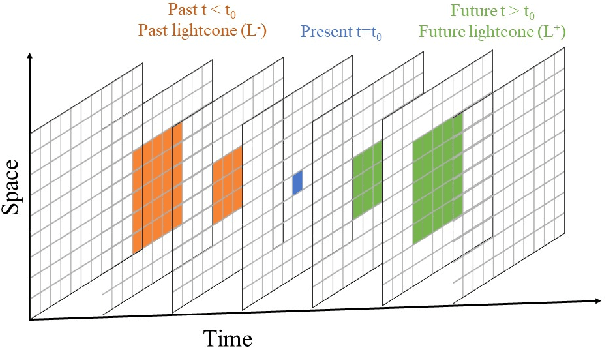

Abstract: Extreme weather is one of the main mechanisms through which climate change will directly impact human society. Coping with such change as a global community requires markedly improved understanding of how global warming drives extreme weather events. While alternative climate scenarios can be simulated using sophisticated models, identifying extreme weather events in these simulations requires automation due to the vast amounts of complex high-dimensional data produced. Atmospheric dynamics, and hydrodynamic flows more generally, are highly structured and largely organize around a lower-dimensional skeleton of coherent structures. Indeed, extreme weather events are a special case of more general hydrodynamic coherent structures. We present a scalable physics-based representation learning method that decomposes spatiotemporal systems into their structurally relevant components, which are captured by latent variables known as local causal states. For complex fluid flows, we show our method is capable of capturing known coherent structures, and, with promising segmentation results on CAM5.1 water vapor data, we outline the path to extreme weather identification from unlabeled climate model simulation data.

Etalumis: Bringing Probabilistic Programming to Scientific Simulators at Scale

Jul 08, 2019

Abstract: Probabilistic programming languages (PPLs) are receiving widespread attention for performing Bayesian inference in complex generative models. However, applications to science remain limited because of the impracticability of rewriting complex scientific simulators in a PPL, the computational cost of inference, and the lack of scalable implementations. To address these, we present a novel PPL framework that couples directly to existing scientific simulators through a cross-platform probabilistic execution protocol and provides Markov chain Monte Carlo (MCMC) and deep-learning-based inference compilation (IC) engines for tractable inference. To guide IC inference, we perform distributed training of a dynamic 3DCNN-LSTM architecture with a PyTorch-MPI-based framework on 1,024 32-core CPU nodes of the Cori supercomputer with a global minibatch size of 128k, achieving a performance of 450 Tflop/s through enhancements to PyTorch. We demonstrate a Large Hadron Collider (LHC) use-case with the C++ Sherpa simulator and achieve the largest-scale posterior inference in a Turing-complete PPL.

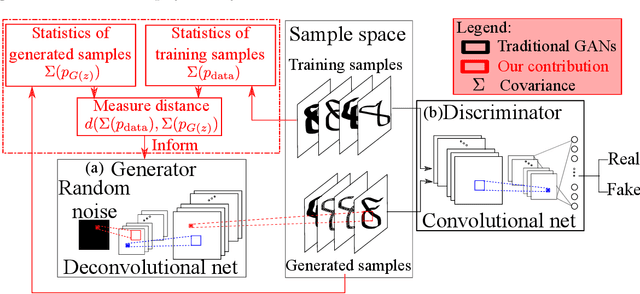

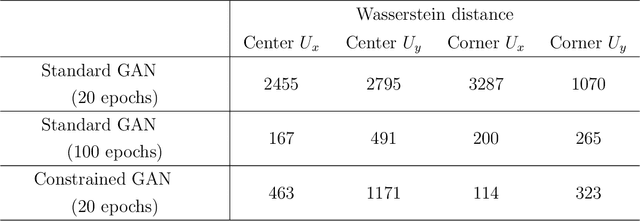

Enforcing Statistical Constraints in Generative Adversarial Networks for Modeling Chaotic Dynamical Systems

May 13, 2019

Abstract: Simulating complex physical systems often involves solving partial differential equations (PDEs) with some closures due to the presence of multi-scale physics that cannot be fully resolved. Therefore, reliable and accurate closure models for unresolved physics remain an important requirement for many computational physics problems, e.g., turbulence simulation. Recently, several researchers have adopted generative adversarial networks (GANs), a novel paradigm of training machine learning models, to generate solutions of PDE-governed complex systems without having to numerically solve these PDEs. However, GANs are known to be difficult to train and likely to converge to local minima, where the generated samples do not capture the true statistics of the training data. In this work, we present a statistically constrained generative adversarial network that enforces covariance constraints derived from the training data, which results in an improved machine-learning-based emulator that captures the statistics of the training data generated by solving fully resolved PDEs. We show that such a statistical regularization leads to better performance compared to standard GANs, measured by (1) the constrained model's ability to more faithfully emulate certain physical properties of the system and (2) the significantly reduced (by up to 80%) training time to reach the solution. We exemplify this approach on Rayleigh-Bénard convection, a turbulent flow system that is an idealized model of the Earth's atmosphere. With the growth of high-fidelity simulation databases of physical systems, this work suggests great potential as an alternative to the explicit modeling of closures or parameterizations for unresolved physics, which are known to be a major source of uncertainty in simulating multi-scale physical systems, e.g., turbulence or Earth's climate.
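To make the idea of a covariance constraint concrete, the following is a minimal sketch and not the authors' implementation. It assumes the constraint takes the form of a squared Frobenius distance between the sample covariance of generated data and that of training data, added to the generator's adversarial loss with a weight. The function names, the `weight` parameter, and the choice of distance are all illustrative assumptions.

```python
def covariance(samples):
    """Sample covariance matrix of a list of equal-length vectors (one row per sample)."""
    n = len(samples)
    d = len(samples[0])
    means = [sum(row[j] for row in samples) / n for j in range(d)]
    return [
        [
            sum((row[i] - means[i]) * (row[j] - means[j]) for row in samples) / (n - 1)
            for j in range(d)
        ]
        for i in range(d)
    ]


def covariance_penalty(generated, training):
    """Squared Frobenius distance between the two covariance matrices (assumed form)."""
    cov_gen, cov_train = covariance(generated), covariance(training)
    return sum(
        (a - b) ** 2
        for row_gen, row_train in zip(cov_gen, cov_train)
        for a, b in zip(row_gen, row_train)
    )


def regularized_generator_loss(adversarial_loss, generated, training, weight=1.0):
    """Hypothetical generator objective: adversarial term plus weighted covariance penalty."""
    return adversarial_loss + weight * covariance_penalty(generated, training)
```

The penalty is zero when the generated samples reproduce the second-order statistics of the training set, so in this sketch it only steers the generator when those statistics drift apart.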

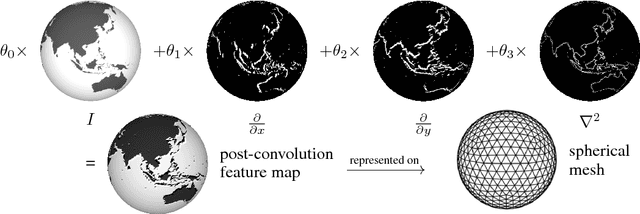

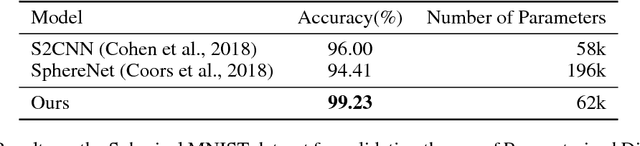

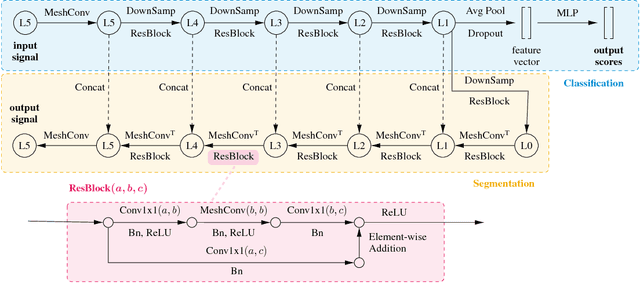

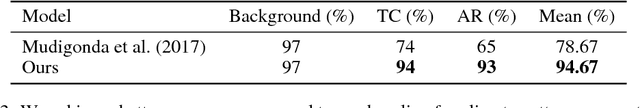

Spherical CNNs on Unstructured Grids

Jan 07, 2019

Abstract: We present an efficient convolution kernel for Convolutional Neural Networks (CNNs) on unstructured grids using parameterized differential operators while focusing on spherical signals such as panorama images or planetary signals. To this end, we replace conventional convolution kernels with linear combinations of differential operators that are weighted by learnable parameters. Differential operators can be efficiently estimated on unstructured grids using one-ring neighbors, and learnable parameters can be optimized through standard back-propagation. As a result, we obtain extremely efficient neural networks that match or outperform state-of-the-art network architectures in terms of performance but with a significantly lower number of network parameters. We evaluate our algorithm in an extensive series of experiments on a variety of computer vision and climate science tasks, including shape classification, climate pattern segmentation, and omnidirectional image semantic segmentation. Overall, we (1) present a novel CNN approach on unstructured grids using parameterized differential operators for spherical signals, and (2) show that our unique kernel parameterization allows our model to achieve the same or higher accuracy with significantly fewer network parameters.
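The kernel described above, a learnable linear combination of differential operators, can be illustrated with a deliberately simplified sketch. It assumes a periodic one-dimensional grid with uniform spacing and central finite differences, rather than the unstructured spherical mesh and one-ring estimates of the paper. The function name `parameterized_kernel_1d` and the three-operator basis (identity, first derivative, second derivative) are assumptions chosen for illustration.

```python
def parameterized_kernel_1d(signal, theta, spacing=1.0):
    """Apply theta[0]*I + theta[1]*d/dx + theta[2]*d2/dx2 on a periodic 1D grid.

    Each derivative is estimated from the immediate neighbours only, the 1D
    analogue of using one-ring neighbours on a mesh.
    """
    n = len(signal)
    out = []
    for i in range(n):
        # signal[i - 1] wraps around at i == 0, giving periodic boundaries.
        left, centre, right = signal[i - 1], signal[i], signal[(i + 1) % n]
        first = (right - left) / (2.0 * spacing)                 # central first derivative
        second = (right - 2.0 * centre + left) / spacing ** 2    # central second derivative
        out.append(theta[0] * centre + theta[1] * first + theta[2] * second)
    return out
```

In this sketch the kernel has three parameters regardless of how many grid points it touches, which reflects the abstract's point about a low parameter count. In a network, `theta` would be learned by back-propagation.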

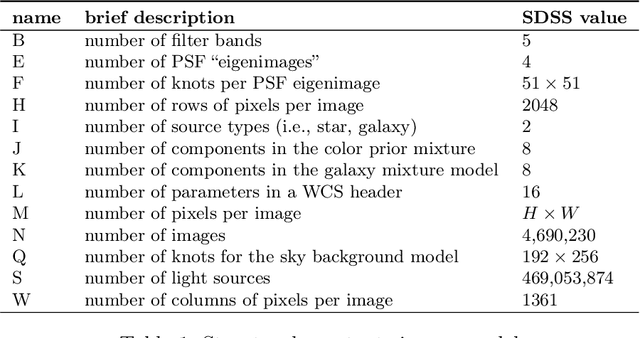

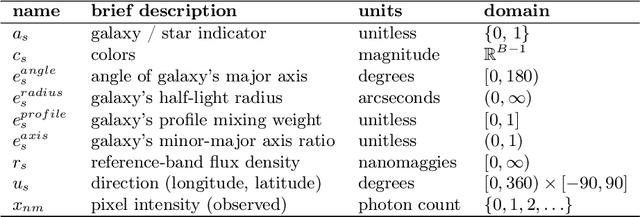

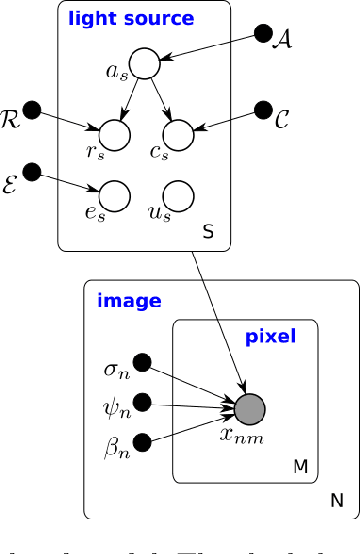

Approximate Inference for Constructing Astronomical Catalogs from Images

Oct 12, 2018

Abstract: We present a new, fully generative model for constructing astronomical catalogs from optical telescope image sets. Each pixel intensity is treated as a Poisson random variable with a rate parameter that depends on the latent properties of stars and galaxies. These latent properties are themselves random, with prior distributions fitted by empirical Bayes. We compare two procedures for posterior inference. One procedure is based on Markov chain Monte Carlo (MCMC) while the other is based on variational inference (VI). We demonstrate that the MCMC procedure excels at quantifying uncertainty while the VI procedure is 1000x faster. For the error metric we consider, both procedures outperform the current state-of-the-art method for measuring the colors, shapes, and morphologies of stars and galaxies. On a supercomputer, the VI procedure efficiently uses 665,000 CPU cores (1.3 million hardware threads) to construct an astronomical catalog from 50 terabytes of images.
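The per-pixel Poisson model can be sketched as follows. This is a minimal illustration, not the paper's model: the rate function `pixel_rates`, which adds a constant sky background to a single source's flux spread by fixed point-spread-function weights, is a hypothetical stand-in for the dependence on latent star and galaxy properties.

```python
import math


def poisson_log_likelihood(counts, rates):
    """Sum over pixels of log Poisson(count | rate)."""
    total = 0.0
    for k, lam in zip(counts, rates):
        if lam <= 0:
            raise ValueError("rates must be positive")
        # log P(k | lam) = k*log(lam) - lam - log(k!)
        total += k * math.log(lam) - lam - math.lgamma(k + 1)
    return total


def pixel_rates(background, source_flux, psf_weights):
    """Hypothetical rate model: sky background plus one source spread by PSF weights."""
    return [background + source_flux * w for w in psf_weights]
```

Evaluating the log-likelihood for different candidate fluxes shows how the observed counts constrain a latent property. Both MCMC and variational inference build on a likelihood of this kind combined with priors.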
