Adrian Albert

Towards Physics-informed Deep Learning for Turbulent Flow Prediction

Dec 21, 2019

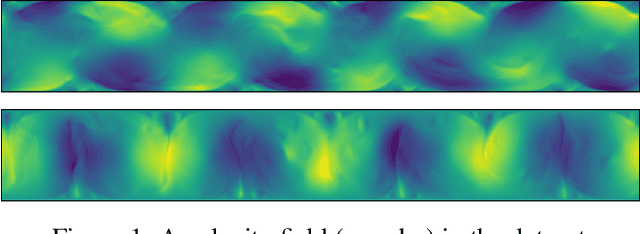

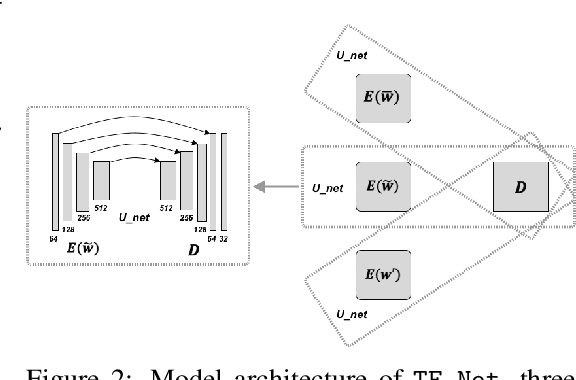

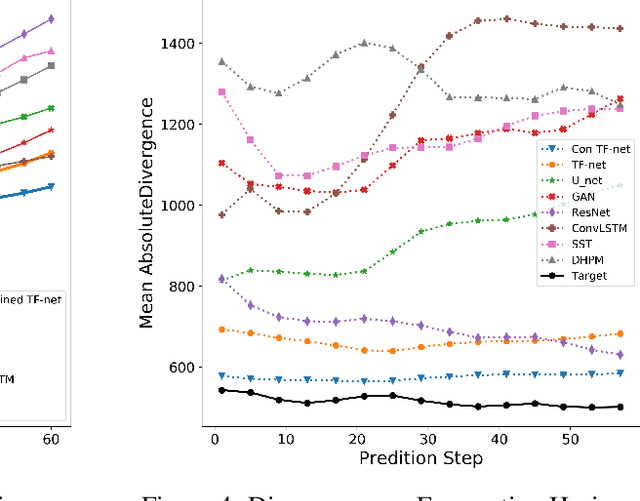

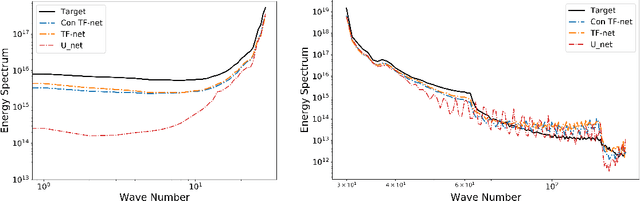

Abstract: While deep learning has shown tremendous success in a wide range of domains, it remains a grand challenge to incorporate physical principles systematically into the design, training, and inference of such models. In this paper, we aim to predict turbulent flow by learning its highly nonlinear dynamics from spatiotemporal velocity fields of large-scale fluid flow simulations of relevance to turbulence modeling and climate modeling. We adopt a hybrid approach by marrying two well-established turbulent flow simulation techniques with deep learning. Specifically, we introduce trainable spectral filters in a coupled model of Reynolds-averaged Navier-Stokes (RANS) and Large Eddy Simulation (LES), followed by a specialized U-net for prediction. Our approach, which we call Turbulent-Flow Net (TF-Net), is grounded in a principled physics model, yet offers the flexibility of learned representations. We compare TF-Net with state-of-the-art baselines and observe significant reductions in error for predictions 60 frames ahead. Most importantly, our method predicts physical fields that obey desirable physical characteristics, such as conservation of mass, while faithfully emulating the turbulent kinetic energy field and spectrum, which are critical for accurate prediction of turbulent flows.
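The two physical diagnostics named in this abstract, conservation of mass and turbulent kinetic energy, can be illustrated with a minimal NumPy sketch. It assumes a 2D incompressible flow on a uniform grid, where mass conservation reduces to a divergence-free velocity field, and it uses the standard definition of turbulent kinetic energy as half the time-averaged squared velocity fluctuation. The function names and the toy stream-function field are illustrative assumptions, not code from the paper.

```python
import numpy as np

def divergence(u, v, dx=1.0, dy=1.0):
    """Finite-difference divergence of a 2D velocity field (u, v).

    u and v have shape (ny, nx): axis 0 is y, axis 1 is x.
    """
    du_dx = np.gradient(u, dx, axis=1)
    dv_dy = np.gradient(v, dy, axis=0)
    return du_dx + dv_dy

def turbulent_kinetic_energy(u_frames, v_frames):
    """TKE per grid point from velocity time series of shape (t, ny, nx)."""
    u_fluct = u_frames - u_frames.mean(axis=0)  # fluctuation about the time mean
    v_fluct = v_frames - v_frames.mean(axis=0)
    return 0.5 * ((u_fluct ** 2).mean(axis=0) + (v_fluct ** 2).mean(axis=0))

# Toy divergence-free field from the stream function psi = sin(x) * sin(y).
n = 64
coords = np.linspace(0.0, 2.0 * np.pi, n)
h = coords[1] - coords[0]
X, Y = np.meshgrid(coords, coords, indexing="xy")
u = np.sin(X) * np.cos(Y)     # u = d(psi)/dy
v = -np.cos(X) * np.sin(Y)    # v = -d(psi)/dx

div = divergence(u, v, dx=h, dy=h)
# Edge cells use one-sided differences, so only the interior is checked.
max_interior_div = np.abs(div[1:-1, 1:-1]).max()
print("max interior |div|:", max_interior_div)
```

A predicted velocity field could be passed through the same `divergence` check to see how far it departs from mass conservation; the abstract does not say how TF-Net itself evaluates this.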

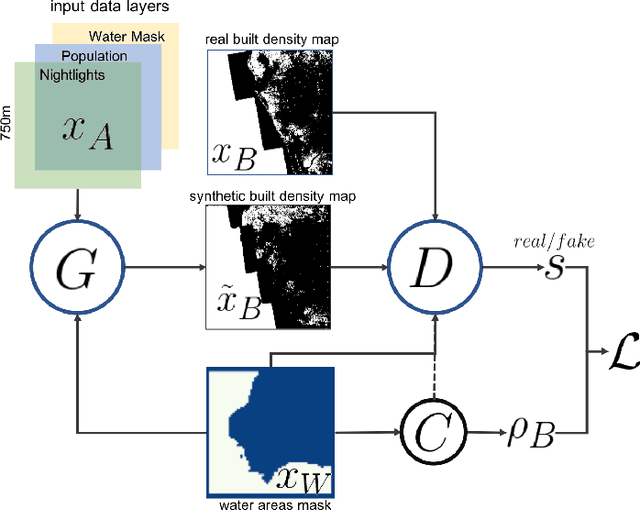

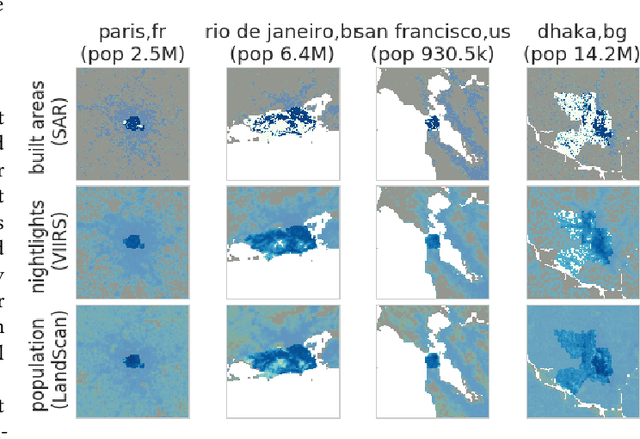

Spatial sensitivity analysis for urban land use prediction with physics-constrained conditional generative adversarial networks

Jul 22, 2019

Abstract: Accurately forecasting urban development and its environmental and climate impacts critically depends on realistic models of the spatial structure of the built environment, and of its dependence on key factors such as population and economic development. Scenario simulation and sensitivity analysis, i.e., predicting how changes in underlying factors at a given location affect urbanization outcomes at other locations, is currently not achievable at a large scale with traditional urban growth models, which are either too simplistic or depend on detailed locally collected socioeconomic data that is not available in most places. Here we develop a framework to estimate, purely from globally available remote-sensing data and without parametric assumptions, the spatial sensitivity of the (static) rate of change of urban sprawl to key macroeconomic development indicators. We formulate this spatial regression problem as an image-to-image translation task using conditional generative adversarial networks (GANs), where the gradients necessary for comparative static analysis are provided by the backpropagation algorithm used to train the model. This framework naturally allows physical constraints to be incorporated, e.g., the inability to build over water bodies. To validate the spatial structure of model-generated built environment distributions, we use spatial statistics commonly used in urban form analysis. We apply our method to a novel dataset comprising layers on the built environment, nightlights measurements (a proxy for economic development and energy use), and population density for the world's 15,000 most populous cities.
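As a rough illustration of the two ideas in this abstract, a hard physical constraint (no building over water) and spatial sensitivity of outputs to inputs, the following NumPy sketch may help. Everything in it is an assumption for illustration: `toy_generator` is a hypothetical stand-in for a trained conditional GAN generator, and the sensitivity is estimated by finite differences rather than by the backpropagated gradients the abstract describes.

```python
import numpy as np

def apply_water_mask(built_up, water_mask):
    """Zero out predicted built-up density wherever the mask marks water (1 = water)."""
    return built_up * (1.0 - water_mask)

def toy_generator(population, water_mask):
    """Hypothetical stand-in for a trained generator (not the paper's model).

    Applies a 3x3 box average to the population map, squashes it with tanh,
    then enforces the water constraint.
    """
    ny, nx = population.shape
    padded = np.pad(population, 1, mode="edge")
    smooth = sum(padded[i:i + ny, j:j + nx]
                 for i in range(3) for j in range(3)) / 9.0
    return apply_water_mask(np.tanh(smooth), water_mask)

def sensitivity(population, water_mask, src, eps=1e-4):
    """Finite-difference change in every output cell per unit change at input cell `src`."""
    bumped = population.copy()
    bumped[src] += eps
    base = toy_generator(population, water_mask)
    return (toy_generator(bumped, water_mask) - base) / eps

population = np.ones((5, 5))
water = np.zeros((5, 5))
water[2, 3] = 1.0                      # one water cell next to the perturbed cell

sens = sensitivity(population, water, src=(2, 2))
print(np.round(sens, 3))
```

In this toy setup the water cell shows zero sensitivity because the mask forces its output to zero regardless of the input, which is the behavior a hard constraint of this kind is meant to guarantee.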

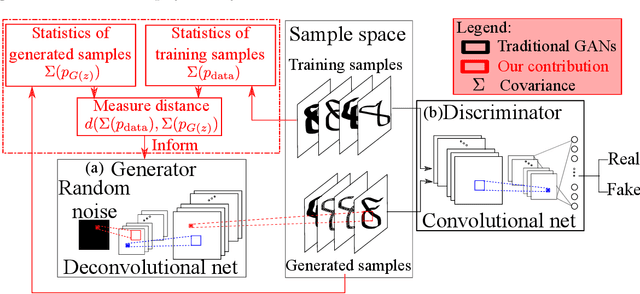

Enforcing Statistical Constraints in Generative Adversarial Networks for Modeling Chaotic Dynamical Systems

May 13, 2019

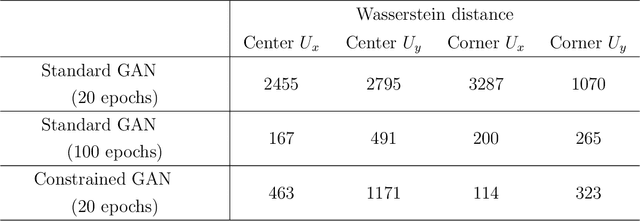

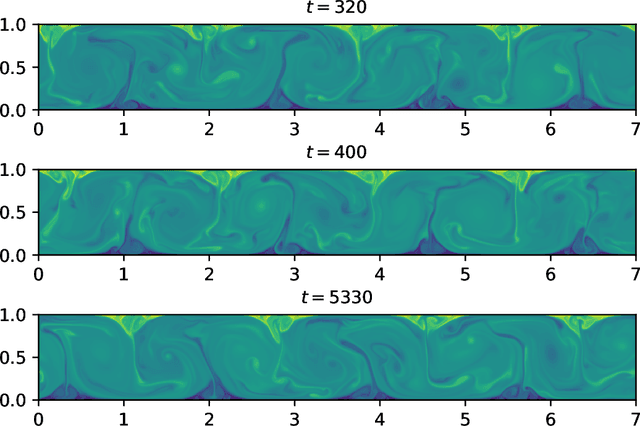

Abstract: Simulating complex physical systems often involves solving partial differential equations (PDEs) with some closures due to the presence of multi-scale physics that cannot be fully resolved. Therefore, reliable and accurate closure models for unresolved physics remain an important requirement for many computational physics problems, e.g., turbulence simulation. Recently, several researchers have adopted generative adversarial networks (GANs), a novel paradigm of training machine learning models, to generate solutions of PDE-governed complex systems without having to numerically solve these PDEs. However, GANs are known to be difficult to train and likely to converge to local minima, where the generated samples do not capture the true statistics of the training data. In this work, we present a statistically constrained generative adversarial network that enforces covariance constraints derived from the training data, which results in an improved machine-learning-based emulator that captures the statistics of the training data generated by solving fully resolved PDEs. We show that such a statistical regularization leads to better performance compared to standard GANs, measured by (1) the constrained model's ability to more faithfully emulate certain physical properties of the system and (2) the significantly reduced (by up to 80%) training time to reach the solution. We exemplify this approach on Rayleigh-Bénard convection, a turbulent flow system that is an idealized model of the Earth's atmosphere. With the growth of high-fidelity simulation databases of physical systems, this work suggests great potential as an alternative to the explicit modeling of closures or parameterizations for unresolved physics, which are known to be a major source of uncertainty in simulating multi-scale physical systems, e.g., turbulence or Earth's climate.
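One plausible form of the covariance constraint described here is a penalty on the distance between the sample covariance of generated data and that of the training data, added to the usual generator loss. The sketch below uses the squared Frobenius norm for that distance. The exact penalty, its weight, and how it enters training are not given in the abstract, so `covariance_penalty` and the weight `lam` are assumptions.

```python
import numpy as np

def covariance_penalty(real, fake):
    """Squared Frobenius distance between sample covariance matrices.

    real, fake: arrays of shape (n_samples, n_features), e.g. flattened fields.
    """
    cov_real = np.cov(real, rowvar=False)
    cov_fake = np.cov(fake, rowvar=False)
    return float(np.sum((cov_real - cov_fake) ** 2))

rng = np.random.default_rng(0)
real = rng.normal(size=(500, 3))
fake_close = rng.normal(size=(500, 3))        # same statistics as the training data
fake_far = 3.0 * rng.normal(size=(500, 3))    # variance inflated by a factor of 9

penalty_close = covariance_penalty(real, fake_close)
penalty_far = covariance_penalty(real, fake_far)

# Hypothetical regularized generator loss: adversarial term plus weighted penalty.
lam = 0.1                 # assumed weight, not taken from the paper
adversarial_loss = 1.0    # placeholder value standing in for the usual GAN loss
total_loss = adversarial_loss + lam * penalty_far
print(penalty_close, penalty_far, total_loss)
```

Samples whose second-order statistics match the training data incur a small penalty, while mismatched samples are penalized heavily, which is the general mechanism by which a statistical regularizer of this kind could steer training.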

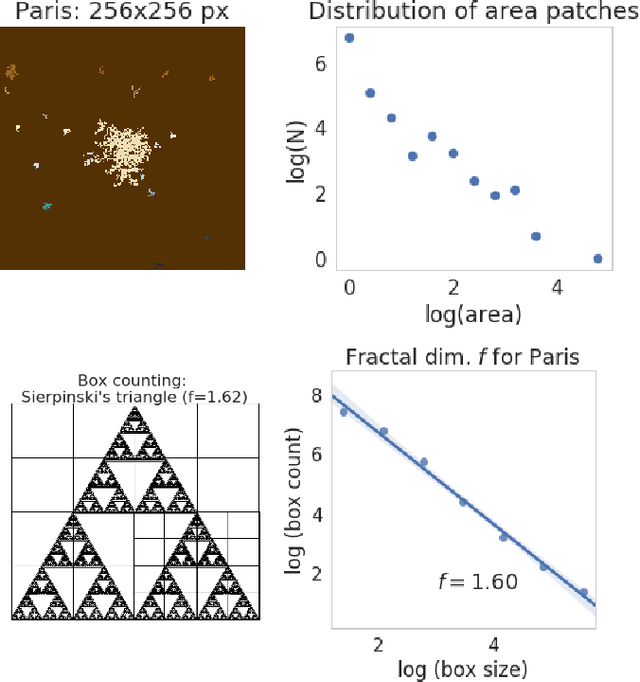

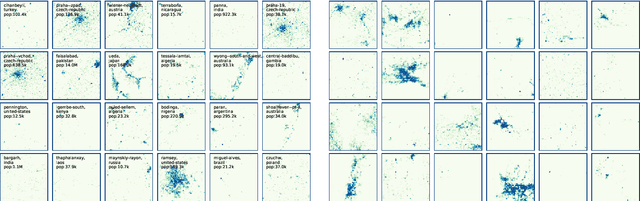

Modeling urbanization patterns with generative adversarial networks

Jan 08, 2018

Abstract: In this study we propose a new method to simulate hyper-realistic urban patterns using Generative Adversarial Networks trained with a global urban land-use inventory. We generated a synthetic urban "universe" that qualitatively reproduces the complex spatial organization observed in global urban patterns, while being able to quantitatively recover certain key high-level urban spatial metrics.

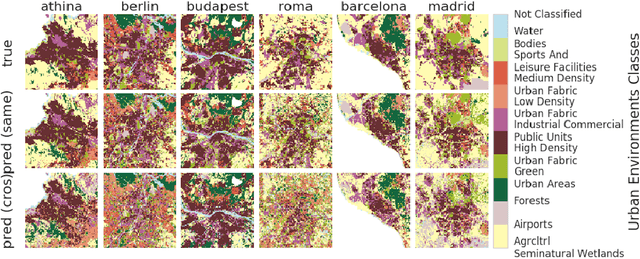

Using convolutional networks and satellite imagery to identify patterns in urban environments at a large scale

Sep 13, 2017

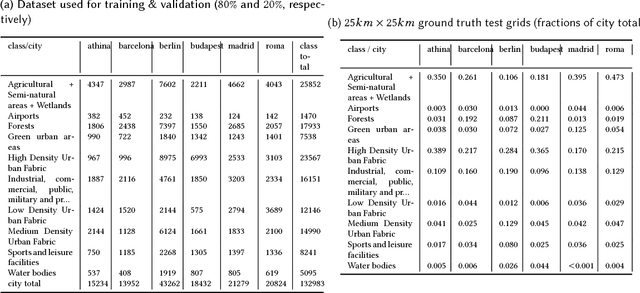

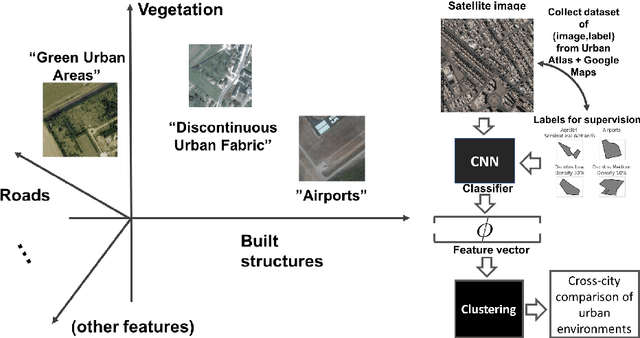

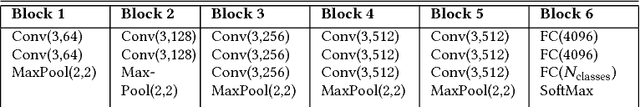

Abstract: Urban planning applications (energy audits, investment, etc.) require an understanding of built infrastructure and its environment, i.e., both low-level, physical features (amount of vegetation, building area and geometry, etc.) and higher-level concepts such as land use classes (which encode expert understanding of socio-economic end uses). This kind of data is expensive and labor-intensive to obtain, which limits its availability (particularly in developing countries). We analyze patterns in land use in urban neighborhoods using large-scale satellite imagery data (which is available worldwide from third-party providers) and state-of-the-art computer vision techniques based on deep convolutional neural networks. For supervision, given the limited availability of standard benchmarks for remote-sensing data, we obtain ground truth land use class labels carefully sampled from open-source surveys, in particular the Urban Atlas land classification dataset of 20 land use classes across ~300 European cities. We use this data to train and compare deep architectures which have recently shown good performance on standard computer vision tasks (image classification and segmentation), including on geospatial data. Furthermore, we show that the deep representations extracted from satellite imagery of urban environments can be used to compare neighborhoods across several cities. We make our dataset available for other machine learning researchers to use for remote-sensing applications.
